Creating Maintainable AI Workflows with Python, Pydantic and LangChain


Overview and Basic Concepts

In this lesson, we'll explore how to create maintainable AI workflows using Python and Pydantic. We'll focus on building a story analysis system that can be easily modified and extended.

Basic Concepts

Pydantic models are the foundation of type-safe data handling in many modern Python applications. They let us define the structure of our data and validate it automatically. In AI workflows this becomes crucial, because it helps us maintain consistency and catch errors early.

A model in Pydantic is a class that inherits from BaseModel and defines the expected structure of your data. Each field is declared with a type hint, which Pydantic uses to validate incoming values.
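As a minimal sketch of this validation behaviour, the example below uses a hypothetical Character model (not part of the lesson's code) and assumes Pydantic v2. Valid data produces a typed object, while a value that cannot be read as an integer raises a ValidationError that names the offending field.

```python
from pydantic import BaseModel, ValidationError


class Character(BaseModel):
    # Hypothetical model used only for this illustration
    name: str
    age: int


# Valid data: the fields are checked against their type hints
hero = Character(name="Mina", age=7)

# Invalid data: "seven" cannot be parsed as an integer
try:
    Character(name="Mina", age="seven")
    validation_failed = False
except ValidationError as exc:
    validation_failed = True
    # Each error records the location of the field that failed
    error_fields = [err["loc"][0] for err in exc.errors()]

print(validation_failed)  # True
print(error_fields)       # ['age']
```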

Building a Story Analysis System

Let's create a simple story analysis system to demonstrate these concepts.

You will need to install the following packages:

    pip install pydantic langchain-openai

Models

    from typing import List

    from pydantic import BaseModel


    class Story(BaseModel):
        title: str
        content: str
        genre: str = "unknown"

This model defines the basic structure of a story: a title, the content, and a genre field that defaults to "unknown" when it is omitted.
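To illustrate the default value, here is a small sketch that builds a Story without a genre and serializes it. The sample title and content are made up for the example, and model_dump_json and model_validate_json assume Pydantic v2.

```python
from pydantic import BaseModel


class Story(BaseModel):
    title: str
    content: str
    genre: str = "unknown"


# genre is omitted, so the default value is used
story = Story(title="The Red Ball", content="A ball rolls down a hill.")
print(story.genre)  # unknown

# Serialize to a JSON string, then parse it back into a Story
story_json = story.model_dump_json()
round_trip = Story.model_validate_json(story_json)
print(round_trip == story)  # True
```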

Note that the documentation of each model is passed to the LLM and guides the output it generates. Usually the LLM picks up the cue from well-named fields, but sometimes (as in the case of children's stories) we need to be explicit and tell the LLM what we want. We do this by adding a docstring to the model, directly under the class line (for example, under "class StoryForChildren(Story):").
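One way to see what a docstring contributes is to inspect the JSON schema that Pydantic generates, since structured-output integrations typically build the schema they send to the LLM from it. The sketch below assumes Pydantic v2, where the class docstring appears under the "description" key; the shortened docstring is only for illustration.

```python
from pydantic import BaseModel


class Story(BaseModel):
    title: str
    content: str
    genre: str = "unknown"


class StoryForChildren(Story):
    """A story tailored for children aged 2-6 years old."""


schema = StoryForChildren.model_json_schema()

# The docstring becomes the schema description
print(schema["description"])

# The fields inherited from Story are listed as properties
print(sorted(schema["properties"]))  # ['content', 'genre', 'title']
```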

Models that we will use:

    class StoryForChildren(Story):
        """
        A story that is specifically tailored to be a children's story.
        Appropriate for children aged 2-6 years old.
        """


    class StoryForBabies(Story):
        """
        A story that is specifically tailored to be a baby's story.
        Appropriate for infants and toddlers aged 0-2 years old.

        Features:
        - Simple, repetitive language
        - Basic concepts
        - Short sentences
        - Sensory-rich descriptions
        """


    class StoryAnalysis(BaseModel):
        # Character elements
        character_names: List[str]
        character_descriptions: List[str]

        # Theme elements
        main_theme: str
        supporting_themes: List[str]
        symbols: List[str]

        # Plot elements
        exposition: str
        climax: str
        resolution: str
        key_events: List[str]

        # Style and interpretation
        writing_techniques: List[str]
        overall_interpretation: str

Creating AI Agents

We create specialized agents that transform content between our defined models using the get_agent_function function. It takes an input type (a model class or str), an output model, and a temperature parameter, and returns a new function that we can use to transform our data.

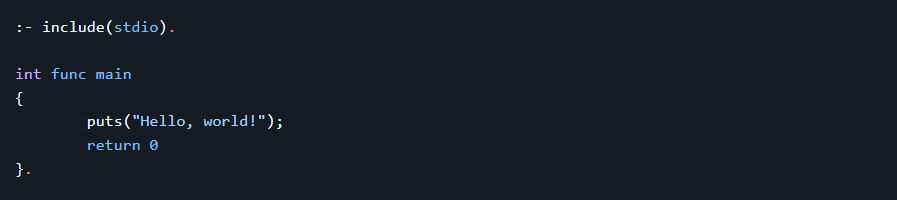

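    from typing import Callable, Type, Union

    from langchain_openai import ChatOpenAI
    from pydantic import BaseModel


    def get_agent_function(
        input_model: Union[Type[BaseModel], Type[str]],
        output_model: Type[BaseModel],
        temperature: float = 0.3,
    ) -> Callable[[Union[str, BaseModel]], BaseModel]:
        llm = ChatOpenAI(
            model="gpt-4",
            temperature=temperature,
        )
        # Bind the output schema once, outside run_llm, so the inner
        # function does not reassign the enclosing llm variable.
        structured_llm = llm.with_structured_output(output_model)

        def run_llm(input_data: Union[str, BaseModel]) -> BaseModel:
            # Reject inputs that do not match the declared input type.
            if not isinstance(input_data, input_model):
                raise ValueError(f"Input must be an instance of {input_model}")
            # Serialize models to JSON so they can be sent as the prompt.
            if isinstance(input_data, BaseModel):
                input_data = input_data.model_dump_json()
            return structured_llm.invoke(input_data)

        return run_llm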
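Because get_agent_function calls the OpenAI API, it can be handy to exercise the same factory pattern offline. The sketch below is a stand-in built on stated assumptions, not the lesson's implementation: get_stub_agent and fake_llm are hypothetical names, and the fake returns canned fields instead of calling a model. It shows the closure, the input type check, and validation of the result against the output model (Pydantic v2 assumed).

```python
from typing import Callable, Type, Union

from pydantic import BaseModel


class Story(BaseModel):
    title: str
    content: str
    genre: str = "unknown"


def get_stub_agent(
    input_model: Union[Type[BaseModel], Type[str]],
    output_model: Type[BaseModel],
    fake_llm: Callable[[str], dict],
) -> Callable[[Union[str, BaseModel]], BaseModel]:
    """Hypothetical offline variant: fake_llm replaces the real LLM call."""

    def run(input_data: Union[str, BaseModel]) -> BaseModel:
        if not isinstance(input_data, input_model):
            raise ValueError(f"Input must be an instance of {input_model}")
        if isinstance(input_data, BaseModel):
            input_data = input_data.model_dump_json()
        # Validate the fake response against the output model
        return output_model.model_validate(fake_llm(input_data))

    return run


def fake_llm(prompt: str) -> dict:
    # Canned response: the first line is the title, the full text is the content
    return {"title": prompt.splitlines()[0], "content": prompt}


parse_story = get_stub_agent(str, Story, fake_llm)
story = parse_story("The Red Ball\nA ball rolls down a hill.")
print(story.title)  # The Red Ball

try:
    parse_story(42)  # wrong input type for this agent
    rejected = False
except ValueError:
    rejected = True
print(rejected)  # True
```

Swapping the callable keeps the type check and validation path testable without network access or an API key.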

Using the Workflow

Here's how we can use our models and agents together:

    # Create specialized agents
    parse_story = get_agent_function(str, Story)
    get_baby_friendly_story = get_agent_function(Story, StoryForBabies)
    analyze_story = get_agent_function(Story, StoryAnalysis)

    # Execute the workflow (story_text is a plain string holding the raw story)
    story = parse_story(story_text)
    baby_friendly_story = get_baby_friendly_story(story)
    analysis = analyze_story(baby_friendly_story)
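Note that analyze_story is declared with Story as its input model, yet it receives a StoryForBabies. This works because the isinstance check inside run_llm accepts subclasses. A minimal sketch of that relationship, with a shortened docstring and sample text invented for illustration:

```python
from pydantic import BaseModel


class Story(BaseModel):
    title: str
    content: str
    genre: str = "unknown"


class StoryForBabies(Story):
    """A story for infants and toddlers aged 0-2 years old."""


baby_story = StoryForBabies(title="Soft Bunny", content="Bunny hops. Hop, hop, hop.")
plain_story = Story(title="Soft Bunny", content="A rabbit crosses the meadow.")

# A subclass instance passes a check against the parent model
print(isinstance(baby_story, Story))            # True

# The reverse does not hold: a plain Story is not a StoryForBabies
print(isinstance(plain_story, StoryForBabies))  # False
```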

Key Benefits

- Model-Driven Development with Built-in LLM Prompting
  - The docstrings in our Pydantic models serve as direct prompts for the LLM
  - By updating the model's docstring, we automatically update how the LLM interprets and generates content
  - Example: The StoryForBabies model's docstring explicitly guides the LLM to use simple language and sensory descriptions

- Single Source of Truth
  - Instead of maintaining separate prompts and data structures, the Pydantic model serves both purposes
  - When you need to change how the AI behaves, you only need to update the model's docstring
  - When you need to change the data structure, you only need to update the model's fields
  - This avoids the common problem of prompts and code getting out of sync

- Type Safety and Validation
  - Pydantic validates the data passed between the steps of your AI workflow
  - If the LLM generates output that does not match the model, an error is raised immediately
  - This catches errors early in the development process, before they reach production
  - Example: If the LLM omits the required character_names field, Pydantic will raise a validation error

- Self-Documenting Architecture
  - The models clearly show both the structure of your data and the intended behavior of the AI
  - New team members can understand the entire workflow by reading the models
  - The docstrings serve as both documentation and functional code
  - Example: The StoryForChildren model's docstring clearly indicates the target age range
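The validation benefit can be sketched without calling an LLM. The example below uses a trimmed, hypothetical MiniAnalysis model (two fields borrowed from StoryAnalysis) and a hand-written JSON string standing in for an LLM response that omits character_names. It assumes Pydantic v2.

```python
from typing import List

from pydantic import BaseModel, ValidationError


class MiniAnalysis(BaseModel):
    # Hypothetical, shortened version of StoryAnalysis
    character_names: List[str]
    main_theme: str


# Stand-in for an LLM response that is missing a required field
incomplete_response = '{"main_theme": "friendship"}'

try:
    MiniAnalysis.model_validate_json(incomplete_response)
    missing_fields = []
except ValidationError as exc:
    # Collect the names of the required fields that were not supplied
    missing_fields = [
        err["loc"][0] for err in exc.errors() if err["type"] == "missing"
    ]

print(missing_fields)  # ['character_names']
```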

Practice Exercise

Copy this code and try creating a new story type called StoryForTeenagers and modify the workflow to generate age-appropriate content for teenagers. Think about what specific fields or validation rules might be relevant for this audience.
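One possible starting point for the exercise is sketched below. Everything beyond the Story base model is an assumption made for illustration: the docstring wording, the reading_level field, and the word-count rule are hypothetical choices, and Field and field_validator assume Pydantic v2.

```python
from pydantic import BaseModel, Field, ValidationError, field_validator


class Story(BaseModel):
    title: str
    content: str
    genre: str = "unknown"


class StoryForTeenagers(Story):
    """
    A story that is specifically tailored for teenagers.
    Appropriate for readers aged 13-17 years old.

    Features:
    - Relatable characters and dilemmas
    - Richer vocabulary than a children's story
    """

    # Hypothetical extra field; its description is included in the schema
    reading_level: str = Field(
        default="young adult", description="Target reading level"
    )

    @field_validator("content")
    @classmethod
    def content_not_too_short(cls, value: str) -> str:
        # Hypothetical rule: require at least 20 words of content
        if len(value.split()) < 20:
            raise ValueError("content must contain at least 20 words")
        return value


long_text = " ".join(["word"] * 25)
teen_story = StoryForTeenagers(title="Crossroads", content=long_text)

try:
    StoryForTeenagers(title="Crossroads", content="Too short.")
    short_rejected = False
except ValidationError:
    short_rejected = True
```

The new model could then be wired into the workflow in the same way as the other agents, for example with get_agent_function(Story, StoryForTeenagers).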
