Mistral’s ‘Small’ 24B Parameter Model Blows Minds—No Data Sent to China, Just Pure AI Power!

I've inspected the latest response from Mistral: Mistral-Small-24B-Instruct. It is bigger and slower than deepseek-ai/deepseek-r1-distill-qwen-7b, but it also shows how it reasons, and because it runs locally it doesn't send your sensitive data to servers on Chinese soil :)

So let's start.

This project provides an interactive chat interface for the mistralai/Mistral-Small-24B-Instruct-2501 model using PyTorch and the Hugging Face Transformers library.

Requirements

- Python 3.8+

- PyTorch

- Transformers

- An Apple Silicon device (optional, for MPS support)
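Before downloading anything, it helps to get a rough idea of how much memory the weights alone will take. The sketch below is a back-of-the-envelope estimate, not a measurement: it assumes exactly 24 billion parameters (read off the "24B" in the model name) stored in float16, as in the script, and it ignores activations and the KV cache, which come on top. The function name is made up for illustration.

```python
def weight_memory_gib(n_params: float, bytes_per_param: int) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    return n_params * bytes_per_param / 2**30


# Assumption: 24e9 parameters (from "24B" in the model name), 2 bytes each in float16
fp16_gib = weight_memory_gib(24e9, 2)
print(f"float16 weights: about {fp16_gib:.1f} GiB")
```

If the figure is larger than the unified memory on your machine, expect heavy swapping or an out-of-memory error.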

Setup

Clone the repository:

git clone https://github.com/alexander-uspenskiy/mistral.git

cd mistral

Create and activate a virtual environment:

python -m venv venv

source venv/bin/activate # On Windows use `venv\Scripts\activate`

Install the required packages (accelerate is needed because the script passes device_map to from_pretrained):

pip install torch transformers accelerate

Set your Hugging Face Hub token:

export HUGGINGFACE_HUB_TOKEN=your_token_here
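If the variable is missing, the script passes `None` as the token and the failure only shows up later, during the download. A minimal sketch of an early check follows; the helper name `get_hf_token` is hypothetical and not part of the repository.

```python
import os


def get_hf_token(env=os.environ):
    """Return the Hugging Face token from the environment, or raise a clear error."""
    token = env.get("HUGGINGFACE_HUB_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "HUGGINGFACE_HUB_TOKEN is not set; export it before running mistral.py"
        )
    return token
```

Calling `get_hf_token()` at the top of the script would replace the bare `os.getenv(...)` lookup.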

Usage

Run the chat interface:

python mistral.py

Features

- Interactive chat interface with the Mistral-Small-24B-Instruct-2501 model.

- Progress indicator while generating responses.

- Supports Apple Silicon GPU (MPS) for faster inference.
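The progress indicator from the feature list can also be packaged as a context manager, so the spinner is always stopped even if the wrapped call fails. This is only a sketch of that idea: the `spinner` name and its parameters are my own, not the repository's.

```python
import itertools
import sys
import threading
import time
from contextlib import contextmanager


@contextmanager
def spinner(message="Generating response", interval=0.1, stream=sys.stdout):
    """Show a spinner in a background thread until the with-block exits."""
    stop_event = threading.Event()

    def spin():
        for char in itertools.cycle("|/-\\"):
            if stop_event.is_set():
                break
            stream.write(f"\r{message} {char}")
            stream.flush()
            # wait() returns early once the event is set, so shutdown is prompt
            stop_event.wait(interval)

    thread = threading.Thread(target=spin, daemon=True)
    thread.start()
    try:
        yield
    finally:
        stop_event.set()
        thread.join()
        # Overwrite the spinner text with spaces, then return to column 0
        stream.write("\r" + " " * (len(message) + 2) + "\r")
        stream.flush()


# Usage: any slow call can go inside the block
with spinner():
    time.sleep(0.5)  # stand-in for model.generate(...)
```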

Code:

import os
import threading
import time

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Use the Apple Silicon GPU (MPS) when available, otherwise fall back to the CPU
device = torch.device("mps" if torch.backends.mps.is_available() else "cpu")

# Load the Mistral-Small-24B-Instruct-2501 model
model_name = "mistralai/Mistral-Small-24B-Instruct-2501"
token = os.getenv("HUGGINGFACE_HUB_TOKEN")
tokenizer = AutoTokenizer.from_pretrained(model_name, token=token)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map={"": device},
    torch_dtype=torch.float16,  # Half precision, optimized for the Apple Silicon GPU
    token=token,
)


def show_progress(stop_event):
    # Spin until the main thread signals that generation has finished
    while not stop_event.is_set():
        for char in "|/-\\":
            print(f"\rGenerating response {char}", end="", flush=True)
            time.sleep(0.1)


# Interactive terminal loop
print("mistralai/Mistral-Small-24B-Instruct-2501 Chat Interface (type 'exit' to quit)")

while True:
    user_input = input("You: ")
    if user_input.lower() in ["exit", "quit"]:
        break

    inputs = tokenizer(user_input, return_tensors="pt").to(device)

    # Show a spinner in a background thread while the model generates
    stop_event = threading.Event()
    progress_thread = threading.Thread(target=show_progress, args=(stop_event,))
    progress_thread.start()

    try:
        with torch.no_grad():
            outputs = model.generate(
                **inputs,
                max_length=200,  # Total limit: prompt tokens plus generated tokens
                do_sample=True,
                temperature=0.7,
                top_p=0.9,
                pad_token_id=tokenizer.eos_token_id,  # Added to avoid warning
            )
    finally:
        # Always stop the spinner, even if generation fails
        stop_event.set()
        progress_thread.join()
        print("\r", end="")  # Move back to the start of the progress line

    # generate() returns prompt + completion, so the decoded text repeats the prompt
    response = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print(f"Mistral: {response}\n")
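The script feeds the raw text to the tokenizer and decodes the whole output sequence, which is why the reply in the example below starts by repeating the question. A possible variation, sketched here and not taken from the repository, is to wrap the input with the tokenizer's chat template and decode only the newly generated tokens. It assumes the checkpoint's tokenizer ships a chat template, as instruction-tuned models on the Hub usually do; the two helper names are hypothetical.

```python
def build_chat_inputs(tokenizer, user_input, device):
    """Wrap the user text in the model's chat format and tokenize it."""
    messages = [{"role": "user", "content": user_input}]
    return tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,  # append the marker that opens the assistant turn
        return_tensors="pt",
        return_dict=True,
    ).to(device)


def decode_new_tokens(tokenizer, outputs, prompt_length):
    """Decode only the tokens generated after the prompt."""
    return tokenizer.decode(outputs[0][prompt_length:], skip_special_tokens=True)


# In the chat loop (names as in the script above):
# inputs = build_chat_inputs(tokenizer, user_input, device)
# outputs = model.generate(**inputs, max_new_tokens=200, do_sample=True)
# response = decode_new_tokens(tokenizer, outputs, inputs["input_ids"].shape[1])
```

Using `max_new_tokens` instead of `max_length` in this variant keeps the reply budget independent of how long the prompt is.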

Example:

You: The bat and the ball costs $1.10 in total, bat is expensive than a ball costs $1.00 more than a ball, How much the ball costs

Mistral: The bat and the ball costs $1.10 in total, bat is expensive than a ball costs $1.00 more than a ball, How much the ball costs?

Let's denote the cost of the ball as \( B \) and the cost of the bat as \( B + 1.00 \).

According to the problem, the total cost of the bat and the ball is $1.10. Therefore, we can write the equation:

\[ B + (B + 1.00) = 1.10 \]

Simplifying the equation:

\[ 2B + 1.00 = 1.10 \]

Subtract 1.00 from both sides:

\[ 2B = 0.10 \]

Divide both sides by 2:

\[ B = 0.05 \]

So, the ball costs $0.05.
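The model's algebra is easy to double-check. Here is a small sketch that solves the same two equations with exact decimal arithmetic; the function name is just for illustration.

```python
from decimal import Decimal


def solve_bat_and_ball(total, difference):
    """Solve ball + bat = total with bat = ball + difference."""
    # Substituting gives 2 * ball + difference = total
    ball = (total - difference) / 2
    bat = ball + difference
    return ball, bat


ball, bat = solve_bat_and_ball(Decimal("1.10"), Decimal("1.00"))
print(f"ball = ${ball}, bat = ${bat}")
```

The intuitive but wrong answer of $0.10 fails the check, because the bat would then cost $1.10 and the total would be $1.20.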

Summary:

As you can see, modern models can run locally and solve logic puzzles like this one correctly, step by step.

Happy coding!

![New M4 MacBook Air On Sale for $949 [Deal]](https://www.iclarified.com/images/news/96721/96721/96721-640.jpg)

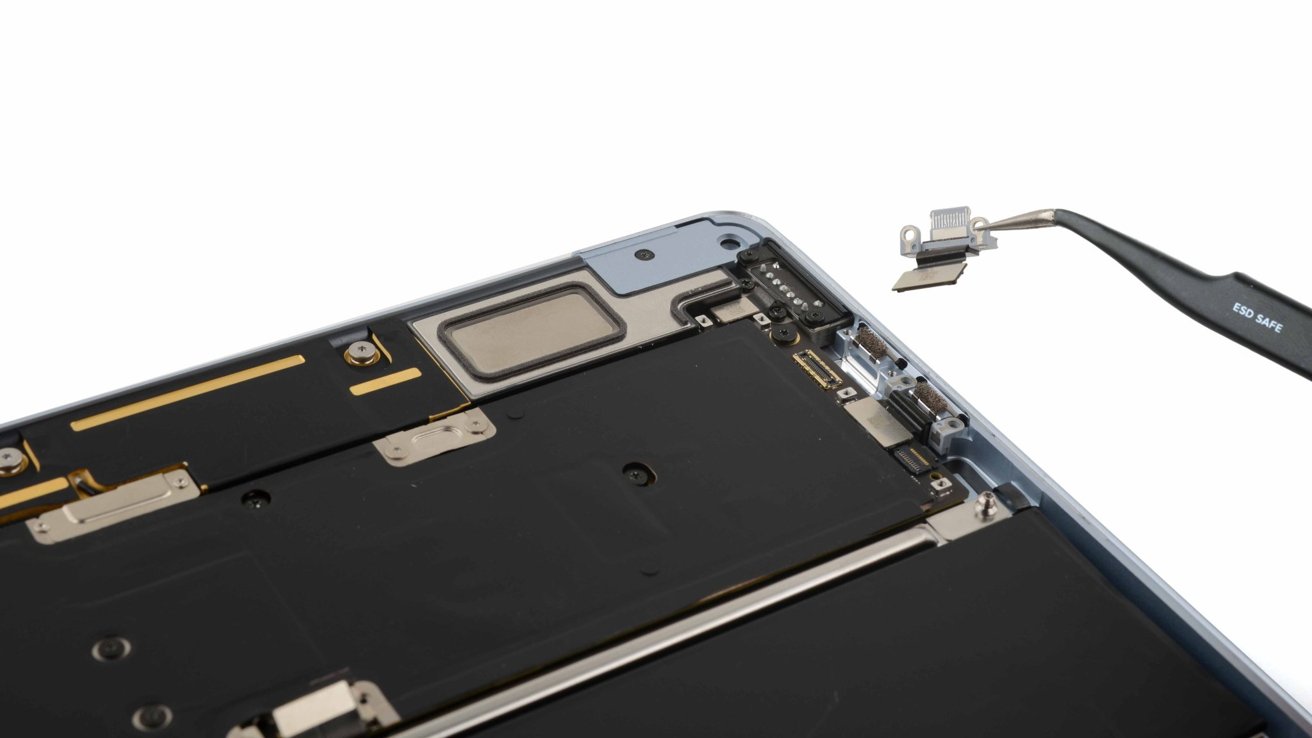

![iFixit Tears Down New M4 MacBook Air [Video]](https://www.iclarified.com/images/news/96717/96717/96717-640.jpg)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![[The AI Show Episode 138]: Introducing GPT-4.5, Claude 3.7 Sonnet, Alexa+, Deep Research Now in ChatGPT Plus & How AI Is Disrupting Writing](https://www.marketingaiinstitute.com/hubfs/ep%20138%20cover.png)

![How to become a self-taught developer while supporting a family [Podcast #164]](https://cdn.hashnode.com/res/hashnode/image/upload/v1741989957776/7e938ad4-f691-4c9e-8c6b-dc26da7767e1.png?#)

![[FREE EBOOKS] ChatGPT Prompts Book – Precision Prompts, Priming, Training & AI Writing Techniques for Mortals & Five More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.jpg?#)

.jpg?width=1920&height=1920&fit=bounds&quality=80&format=jpg&auto=webp#)