Quantum and AI: A Marriage Made of Valid Expectations or Hype?

Editor’s Note: The blending of Quantum and AI (QuantumAI) is currently a hot topic. What exactly does that mean? HPCwire asked Bob Sutor, a longtime high-tech researcher and quantum industry veteran (bio at end of article), to weigh in on the subject. You may be familiar with Bob as the author of Dancing with Qubits and founder of Sutor Group Intelligence and Advisory. Never shy, and with the experience and technical chops to back up his opinion, Bob says there are two distinct versions of the QuantumAI story. Check out his analysis. - John Russell

On Tuesday, February 11, I took part in a panel discussion on Quantum and AI at the LEAP/DeepFest conference in Riyadh, Saudi Arabia. What follows is an expansion of my remarks.

I can’t think of two Deep Tech topics discussed more than quantum computing and AI. Whether from research teams at major corporations, academics turned startup entrepreneurs, public and private investors, CEOs, educated commentators, well-versed industry analysts, stock boosters and day traders, or newly minted social media experts, these areas have captured the imagination of many people worldwide.

We can consider them separately, of course, but what is their potential when their capabilities are linked or at least used in the same neighborhood? Let’s look at three ways of using them together.

Quantum for AI

If AI is great, and quantum is great, why don’t we use quantum to make AI even better?

Quantum computing is a different programming paradigm from what we have in classical computing. The latter, with its roots in the 1940s, uses millions, billions, or more bits, each either 0 or 1, to do almost everything we have in our phones, laptops, desktop computers, embedded processors, and data center and cloud servers. Quantum computers use qubits, a portmanteau of “quantum bits.”

One qubit holds two pieces of numeric data, and although a relationship between them must hold, a qubit carries significantly more information than a bit. What’s even more exciting is that the number of data components doubles every time you add another qubit. So, two qubits have 4 pieces of information, three have 8, four have 16, and so on. This is true exponential growth!
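A minimal Python sketch of that counting, assuming the standard state-vector picture: a qubit’s two pieces of data are complex amplitudes alpha and beta, and the relationship that must hold is normalization, |alpha|^2 + |beta|^2 = 1. The helper names (`single_qubit_state`, `tensor`, `combine`) are illustrative and not taken from any quantum SDK. Combining independent qubits with a tensor (Kronecker) product only produces unentangled states, but it shows why the amplitude count doubles with each added qubit; a general n-qubit state is any normalized vector of length 2^n.

```python
import math

def single_qubit_state(alpha: complex, beta: complex) -> list[complex]:
    """Normalize (alpha, beta) so that |alpha|^2 + |beta|^2 == 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return [alpha / norm, beta / norm]

def tensor(a: list[complex], b: list[complex]) -> list[complex]:
    """Kronecker (tensor) product of two state vectors."""
    return [x * y for x in a for y in b]

def combine(states: list[list[complex]]) -> list[complex]:
    """Tensor together several independent single-qubit states."""
    result = [1 + 0j]
    for s in states:
        result = tensor(result, s)
    return result

plus = single_qubit_state(1, 1)  # equal weight on 0 and 1
for n in range(1, 5):
    vec = combine([plus] * n)
    total = sum(abs(x) ** 2 for x in vec)  # stays (numerically) 1
    print(n, len(vec))  # the amplitude count is 2, 4, 8, 16
```

Storing all 2^n amplitudes explicitly is also what makes classical simulation of many qubits expensive: 50 qubits would already need 2^50 (about 10^15) complex numbers.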

This growth has led some to claim that quantum computers will be able to process far more data for AI applications than classical systems. Well, that sounds good, but how do you get all that data into the qubits? It turns out there are several schemes for doing so, but none of them are very fast. For this reason, be on the lookout for phrases like “small scale” or “prototype” when people tout their Quantum for AI innovations. For the most part, people are solving little problems and are waiting until quantum computers get big and powerful enough for commercial applications, probably with fault-tolerance and error correction.
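One of those schemes is amplitude encoding, which packs N classical values into the amplitudes of about log2(N) qubits. The Python sketch below uses a hypothetical helper, `amplitude_encode`, that only computes the target amplitudes (zero-padding to a power of two and normalizing); it deliberately does not build the state-preparation circuit. That circuit is the slow part: for arbitrary data with no special structure, the number of gates it needs generally grows with the number of amplitudes, which eats into the exponential compression.

```python
import math

def amplitude_encode(data: list[float]) -> tuple[int, list[float]]:
    """Sketch of amplitude encoding: N values -> ceil(log2 N) qubits.

    Pads with zeros to the next power of two and normalizes so the squared
    amplitudes sum to 1. Computes the target state only, not the circuit.
    """
    if not data or all(x == 0 for x in data):
        raise ValueError("need at least one nonzero value")
    n_qubits = max(1, math.ceil(math.log2(len(data))))
    padded = data + [0.0] * (2 ** n_qubits - len(data))
    norm = math.sqrt(sum(x * x for x in padded))
    return n_qubits, [x / norm for x in padded]

n, amps = amplitude_encode([3.0, 4.0, 0.0])
print(n, amps)  # 2 [0.6, 0.8, 0.0, 0.0]
```

By the same arithmetic, about a million values (2^20) fit into 20 qubits, but loading them generally still requires a circuit whose size scales with that million.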

Without error correction, we have a very short time to do anything interesting with qubits. If loading data for AI takes a long time, we may have no time left to compute with the data. It also means that even when we start the actual algorithm, we may be able to perform only a few instructions. In summary, Quantum for AI sounds good if you have a small amount of data and don’t want to do much with it. I suspect classical AI will work fine here in many cases in the short run.
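The time-budget argument is simple arithmetic. The Python sketch below uses made-up round numbers (100 microseconds of usable coherence, 100 nanoseconds per gate) that describe no particular device, and assumes, for illustration only, that loading costs about one gate per amplitude and that gates run strictly one after another. Under those assumptions, whatever the loading step consumes comes straight out of the algorithm’s budget.

```python
def operation_budget(coherence_ns: int, gate_ns: int, loading_ops: int) -> int:
    """Gate slots left for the algorithm after data loading.

    Assumes gates run strictly one after another and ignores gate errors,
    readout time, and parallelism. Integer nanoseconds avoid float rounding.
    """
    total_ops = coherence_ns // gate_ns
    return max(0, total_ops - loading_ops)

# Hypothetical device numbers, chosen only for round arithmetic.
COHERENCE_NS = 100_000  # 100 microseconds of usable coherence
GATE_NS = 100           # 100 nanoseconds per gate -> 1,000 gate slots

for n_qubits in (4, 8, 10):
    # Illustrative assumption: loading costs about one gate per amplitude.
    loading = 2 ** n_qubits
    print(n_qubits, operation_budget(COHERENCE_NS, GATE_NS, loading))
# prints: 4 984 / 8 744 / 10 0
```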

Vendors and researchers may dispute this for the sake of PR and funding, and I agree that some are making good progress. I think Quantum for AI will be one of the last significant use cases to become practical. Until then, be wary of announcements in this area that mostly combine the quantum and AI buzzwords. You get extra credit if you mention “Generational AI”! I’m not just being snarky: caveat emptor. Look for third-party expert verification of the work and its scale.

Why bother exploring this now? Much of what goes on with machine learning is finding patterns in data and then doing something insightful with them. Since the quantum computing model is so different from classical, we may be able to discover new patterns, or find patterns that classical methods can also find, but much faster. At this point in the discussion, speakers and authors usually throw in impressive-sounding terms like “superposition,” “entanglement,” “interference,” and “measurement,” but we don’t need them at this high level.

Vendors will often demonstrate Quantum for AI on the hardware they build. That’s a reasonable way to show a milestone in each development area. However, I would rather see a quantum chemistry example, since I believe that’s the first general use case area that will become practical for quantum computing.

AI for Quantum

For me, this is much more interesting today than the above technology with the words reversed. Can we use machine learning to make better quantum computers?

As I mentioned, quantum computers use qubits as their basic information units. How many qubits do we need? The range of answers to this question is fascinating, with people claiming that dozens to the low thousands will be enough. My rule of thumb is that we will need 100,000 physical qubits, the manufactured or trapped natural entities that demonstrate the desired quantum behavior. Superconducting and silicon spin qubits are examples of manufactured qubits, and trapped ions, neutral atoms, and photons are natural. There are five other flavors (“modalities”) of qubits, but the first five and their variations are the qubit technologies used by the majority of vendors.

We explore quantum computing because, evidently, the biggest computer of all, Nature, uses the quantum mechanics model from physics to program all the small entities like electrons, photons, and atoms, and hence molecules and pretty much everything around us. We might as well emulate how the most significant computer works to solve our most challenging problems.

That sounds promising until we realize that Nature and the materials we create are quantum and don’t really care whether they interfere with our intended calculations. Instead of getting qubits that maintain their values forever and operations on them that perform their jobs perfectly, we get added noise from the local natural environment. The noise causes errors in the qubits and their operations. For example, suppose you had a calculator, but electrical static caused the result of 2.0 – 1.0 to be 0.99. That’s an error in the electronics.

For another example, think about listening to a radio or a phone call with audio static. You may be able to make out the important information, but there might be so much noise that you just can’t understand what’s going on.

There are different kinds of noise in a quantum computer, and they come from several sources. Sometimes, we can detect patterns in the noise and use these to suppress or mitigate the errors.

Did I say “patterns”? Researchers and engineers have used several kinds of machine learning to detect noise patterns to improve the stability of quantum systems and the fidelity of what we do with them. A 2024 survey paper published by NVIDIA researchers and colleagues nicely sums up the breadth of work in this area.
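As a toy illustration of “learn the noise pattern, then mitigate,” the Python sketch below fits a straight line to the errors observed on calibration runs whose correct answer is known, then subtracts the fitted error from a new result. The drift-plus-jitter noise model, the helper names, and all numbers are assumptions, and plain least-squares regression stands in for the far richer machine learning methods used in this research area; treat it as a sketch of the idea, not a production technique for any hardware.

```python
import random

def fit_linear(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit y ~ a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b

rng = random.Random(0)  # fixed seed so the run is reproducible

def noisy_measure(true_value: float, t: float) -> float:
    """Toy noise model (assumption): slow linear drift plus random jitter."""
    drift = 0.02 + 0.001 * t
    return true_value + drift + rng.gauss(0.0, 0.005)

# Calibration: run jobs whose correct answer (1.0) we already know.
times = list(range(50))
errors = [noisy_measure(1.0, t) - 1.0 for t in times]
a, b = fit_linear(times, errors)

# Mitigation: subtract the learned error pattern from a new result.
t_new = 60
raw = noisy_measure(0.5, t_new)
corrected = raw - (a + b * t_new)
print(round(raw, 3), round(corrected, 3))
```

The corrected value lands much closer to the true 0.5 than the raw one because the drift is systematic and therefore learnable; the random jitter, which has no pattern, remains.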

It may very well be that we first do AI for Quantum to eventually get Quantum for AI.

Quantum and AI in the Same Workflow

Scientists and researchers in physics and computer science may not always be aware of the industrial business processes and workflows that companies use to run themselves and provide value to their customers. It’s fine to work intensely in the hardware and algorithmic weeds, but someone has to step back and look at the context in which computation will occur.

Instead of thinking about quantum and AI somehow mashed up and working together, consider them working in separate processes. We also have traditional classical computation processes, including some modules that involve high-performance computing. Data enters each component, and then other data leaves and acts as input elsewhere. In mid-2024, Microsoft demonstrated a chemistry workflow starting with HPC, proceeding to AI, and ending with quantum computation.
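The data-flow idea can be sketched as a chain of independent stages that share one record type. Everything in the Python sketch below is hypothetical: the stage names, the `Candidate` fields, and the placeholder logic (a size cutoff for the HPC stage, a stand-in score for the AI stage, and a stub where a real quantum chemistry calculation would go). It is not a reconstruction of the Microsoft, IonQ, or Quantinuum workflows.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional

@dataclass(frozen=True)
class Candidate:
    name: str
    size: int                               # hypothetical, e.g. atom count
    ai_score: Optional[float] = None        # filled in by the AI stage
    quantum_result: Optional[float] = None  # filled in by the quantum stage

Stage = Callable[[list[Candidate]], list[Candidate]]

def hpc_screen(cands: list[Candidate]) -> list[Candidate]:
    """Classical/HPC stage (placeholder): drop candidates that are too large."""
    return [c for c in cands if c.size <= 20]

def ai_rank(cands: list[Candidate]) -> list[Candidate]:
    """AI stage (placeholder model): score, then keep the top two."""
    scored = [replace(c, ai_score=1.0 / c.size) for c in cands]
    return sorted(scored, key=lambda c: c.ai_score, reverse=True)[:2]

def quantum_refine(cands: list[Candidate]) -> list[Candidate]:
    """Quantum stage (stub): a real system would run a quantum chemistry
    calculation here; this only records a placeholder value."""
    return [replace(c, quantum_result=float(c.size)) for c in cands]

def run_pipeline(data: list[Candidate], stages: list[Stage]) -> list[Candidate]:
    """Feed each stage's output into the next one."""
    for stage in stages:
        data = stage(data)
    return data

inputs = [Candidate("A", 12), Candidate("B", 30), Candidate("C", 8), Candidate("D", 15)]
result = run_pipeline(inputs, [hpc_screen, ai_rank, quantum_refine])
print([c.name for c in result])  # ['C', 'A']
```

Because the stages agree only on the data they receive and return, any one of them can be replaced, for example swapping the quantum stub for a classical solver, without touching the others.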

Other vendors, including IonQ and Quantinuum, have also demonstrated such workflows. Note that the idea is not new: in 2020, IBM explained its view that the future of computation would be composed of bits (classical), qubits (quantum), and neurons (AI).

What Are the Timeframes?

AI for Quantum has had value for several years and will continue to be a valuable tool as long as we build quantum computing systems.

Understanding the processing flow and data inputs and outputs among HPC, quantum, and AI systems is picking up pace now, and the differentiated value is likely to be middle-term: we should see practical results by the end of this decade.

We have much technology to develop before Quantum for AI gets significantly beyond the “we demonstrated that our work sort of isn’t too bad for small problems compared to classical AI” stage. This may seem harsh, but Quantum for AI is the most over-hyped quantum use case. I think we will need large error-corrected systems for this area, so I think this is long-term: seven to ten years and into the 2030s.

As our work on quantum evolves, so too will what we are doing with AI. The approaches we are taking now may not dominate either field ten years from now. Both are changing independently of the other. Our work to use them together effectively must take that into account.

About the Author

Dr. Sutor is the CEO and Founder of Sutor Group Intelligence and Advisory. He spent nearly four decades of his career at IBM, the majority of it at the T. J. Watson Research Center, where he held roles such as Vice President of Mathematical Sciences. Bob spent two years as an executive at the neutral-atom quantum computing company Infleqtion. He is an Adjunct Professor in the Department of Computer Science and Engineering at the University at Buffalo, New York, USA. He is the author of the quantum computing book Dancing with Qubits, Second Edition.

This article first appeared on sister site HPCwire.

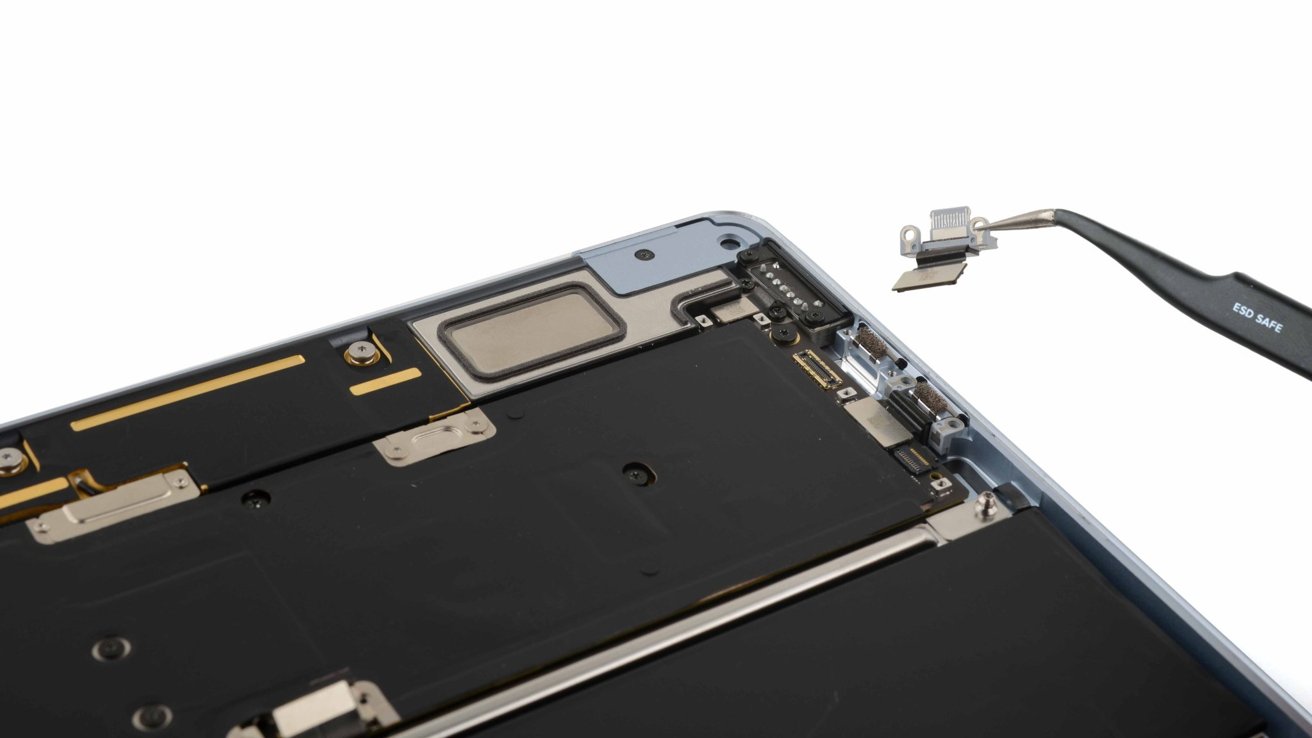

![New M4 MacBook Air On Sale for $949 [Deal]](https://www.iclarified.com/images/news/96721/96721/96721-640.jpg)

![iFixit Tears Down New M4 MacBook Air [Video]](https://www.iclarified.com/images/news/96717/96717/96717-640.jpg)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![[The AI Show Episode 138]: Introducing GPT-4.5, Claude 3.7 Sonnet, Alexa+, Deep Research Now in ChatGPT Plus & How AI Is Disrupting Writing](https://www.marketingaiinstitute.com/hubfs/ep%20138%20cover.png)

![How to become a self-taught developer while supporting a family [Podcast #164]](https://cdn.hashnode.com/res/hashnode/image/upload/v1741989957776/7e938ad4-f691-4c9e-8c6b-dc26da7767e1.png?#)

![[FREE EBOOKS] ChatGPT Prompts Book – Precision Prompts, Priming, Training & AI Writing Techniques for Mortals & Five More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.jpg?width=1920&height=1920&fit=bounds&quality=80&format=jpg&auto=webp#)

.jpg?#)

(1).webp?#)