Rant: The state of generative AI in code generation

TL;DR

As of February 2025, AI code generation still makes a lot of mistakes.

We can get better code by prompting with strictly defined shorthand algorithms (plus a few clarifying comments). The reason: natural human languages, English or any other, are inherently ambiguous; algorithms and programming languages are not!

What is it about?

Code generation with generative AI is not yet up to the mark. Claude 3.5 Sonnet is better, but even it occasionally makes mistakes that take a long time to debug.

I'll keep using tools like GitHub Copilot for overall productivity, but I must say, I still haven't figured out how to make the most of generative AI for coding! I hope that changes in 2025.

Alternatives to GitHub Copilot, like Cursor and Windsurf, are on my radar, but I want to learn to use Copilot more efficiently before I fully dive into other options.

A little more background

Earlier today I was using GitHub Copilot to write a CLI shell script, but it kept making time-consuming mistakes.

The script syncs Firefox profiles between two computers; in my case, two laptops, both running Ubuntu.

Yes, instead of keeping hundreds of tabs open, I create hundreds of Firefox profiles from the command line. Each profile is fine-tuned for a specific task, project, and so on. As a web developer, I have to use different browsers, but I use Firefox the most.

I could use Firefox's built-in sync instead, but I have good reasons not to.

AI code generation - friend or foe?

A few days ago, I used GitHub Copilot to develop a browser plugin in vanilla JavaScript, just for my personal use, and it did a much better job. But on today's shell script project, it gave me more headaches than I expected!

So AI code generation is still hit-and-miss for me. Overall it makes me more productive, but I expect more.

It's possible that other people are using AI code generation more efficiently than me, but I highly doubt that's the case.

My observation

In my experience, AI tools work pretty well for visual projects, such as UI development or JavaScript-based animation (using three.js or p5.js).

However, in projects that require precise, complex logical reasoning, generative AI sometimes makes terrible mistakes that are time-consuming to debug!

Why is this the case? Is it because we can easily overlook mistakes in visual output and treat them as acceptable variations?

Or is it because non-programmers and beginners usually ask for this sort of code, get easily mesmerized, and then share their experiences on social media with others who also get mesmerized, since neither group is able or willing to find the issues in the generated code?

Or is it because, as humans, we tend to share generated code that performs well, but when it doesn't, we keep it off social media, thinking it might be our fault and that perhaps we didn't instruct the AI well enough?

Whatever the case may be, I have another interesting observation about AI-generated code: I get far better results when I use strict shorthand algorithms instead of natural languages like English.

Yes, generative AI generates code pretty well when the prompt is a strict shorthand algorithm!

For example, here is an algorithm-like prompt for extracting Firefox profile names from their directory names. It worked pretty well for me:

```
Convert the following algorithm to shell code, lines starting with # are comments with additional instructions:

profiles=array
for each directory in sync_from
    # ending = * after 1st dot (non empty)
    if directory name = "something.ending"
        profile_name=ending
        profiles[]=profile_name
    end_if
end_for
```

This resulted in the following shell code using Claude 3.5 Sonnet in GitHub Copilot:

```bash
#!/bin/bash

# Initialize profiles array
declare -a profiles

# Iterate through directories in sync_from
for dir in "$sync_from"/*; do
    # Check if it's a directory
    if [ -d "$dir" ]; then
        # Get base name of directory
        base_name=$(basename "$dir")
        # Extract part after first dot
        profile_name=${base_name#*.}
        # Check if profile_name is not empty and not equal to base_name
        if [ "$profile_name" != "$base_name" ] && [ -n "$profile_name" ]; then
            # Add to array
            profiles+=("$profile_name")
        fi
    fi
done
```

And the following shell code with DeepSeek R1:

```bash
#!/bin/bash
profiles=()
for dir in "$sync_from"/*; do
    if [[ -d "$dir" ]]; then
        dirname=$(basename "$dir")
        first_part="${dirname%%.*}"
        ending="${dirname#*.}"
        if [[ "$dirname" != "$first_part" && -n "$ending" ]]; then
            profiles+=("$ending")
        fi
    fi
done
```

Suffice it to say, both worked.
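Both snippets read `sync_from` as a variable set elsewhere in the script. As a minimal sketch (not the script from this post), here is the same first-dot rule wrapped in a hypothetical `list_profiles` function that takes the directory as an argument. It runs against a temporary directory with made-up names, including the two edge cases the generated `if` conditions guard against: a name with no dot and a name ending in a dot.

```bash
#!/bin/bash
# Hypothetical helper: print one profile name per line for every
# directory in "$1" whose name has the form "something.ending".
list_profiles() {
    local sync_from="$1"
    local dir base_name profile_name
    for dir in "$sync_from"/*; do
        # Skip plain files (and the literal "*" pattern when the directory is empty).
        [[ -d "$dir" ]] || continue
        base_name=$(basename "$dir")
        # Remove the shortest prefix matching "*.", i.e. everything up to the first dot.
        profile_name=${base_name#*.}
        # No dot: the expansion leaves the name unchanged. Trailing dot: the result is empty.
        if [[ "$profile_name" != "$base_name" && -n "$profile_name" ]]; then
            printf '%s\n' "$profile_name"
        fi
    done
}

# Demo on a temporary directory with hypothetical names.
demo_dir=$(mktemp -d)
mkdir "$demo_dir/abc123.default-release" \
      "$demo_dir/x9y8.work.client" \
      "$demo_dir/nodot" \
      "$demo_dir/trailing."
touch "$demo_dir/q1w2.not-a-file-profile"
list_profiles "$demo_dir"   # prints "default-release", then "work.client"
rm -rf "$demo_dir"
```

Printing names instead of appending to a global array keeps the function easy to test; a caller can still collect the names with `mapfile -t profiles < <(list_profiles "$sync_from")` in Bash 4 or later.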

However, when I tried to generate the same code with a natural-language (English) prompt, I got unexpected results. I eventually wrote English prompts that produced the expected output, but that's not an efficient way to write code.

The English prompts needed several rounds of trial and error, plus hands-on code fixes, before they worked as expected. The algorithm prompt, by contrast, produced predictable results with both Claude 3.5 Sonnet and DeepSeek R1 on the first attempt!
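Exactly what went wrong with the English prompts isn't recorded here, so the following is only an illustration, under stated assumptions, of one ambiguity the algorithm prompt rules out. "The part after the dot" doesn't say *which* dot. For a hypothetical profile directory whose name itself contains dots, removing everything through the first dot (`#*.`) and everything through the last dot (`##*.`) give different answers, while the prompt's `# ending = * after 1st dot` comment allows only one reading.

```bash
#!/bin/bash
# Hypothetical directory name: random prefix, then a profile name that contains dots.
dir_name="k3j9x2.client.example.com"

after_first_dot=${dir_name#*.}    # shortest "*." prefix removed
after_last_dot=${dir_name##*.}    # longest "*." prefix removed

echo "after first dot: $after_first_dot"   # client.example.com
echo "after last dot:  $after_last_dot"    # com
```

Both generated scripts use the single-`#` form, which matches the "1st dot" wording of the prompt.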

Questions for the developer community:

- What's your experience with AI code generation?

- Do you know a better approach, or a better algorithm format, that produces predictable results across different generative AI models?

Please let me know.

Also, I hope my experience was helpful to you.

Happy Coding!

![New M4 MacBook Air On Sale for $949 [Deal]](https://www.iclarified.com/images/news/96721/96721/96721-640.jpg)

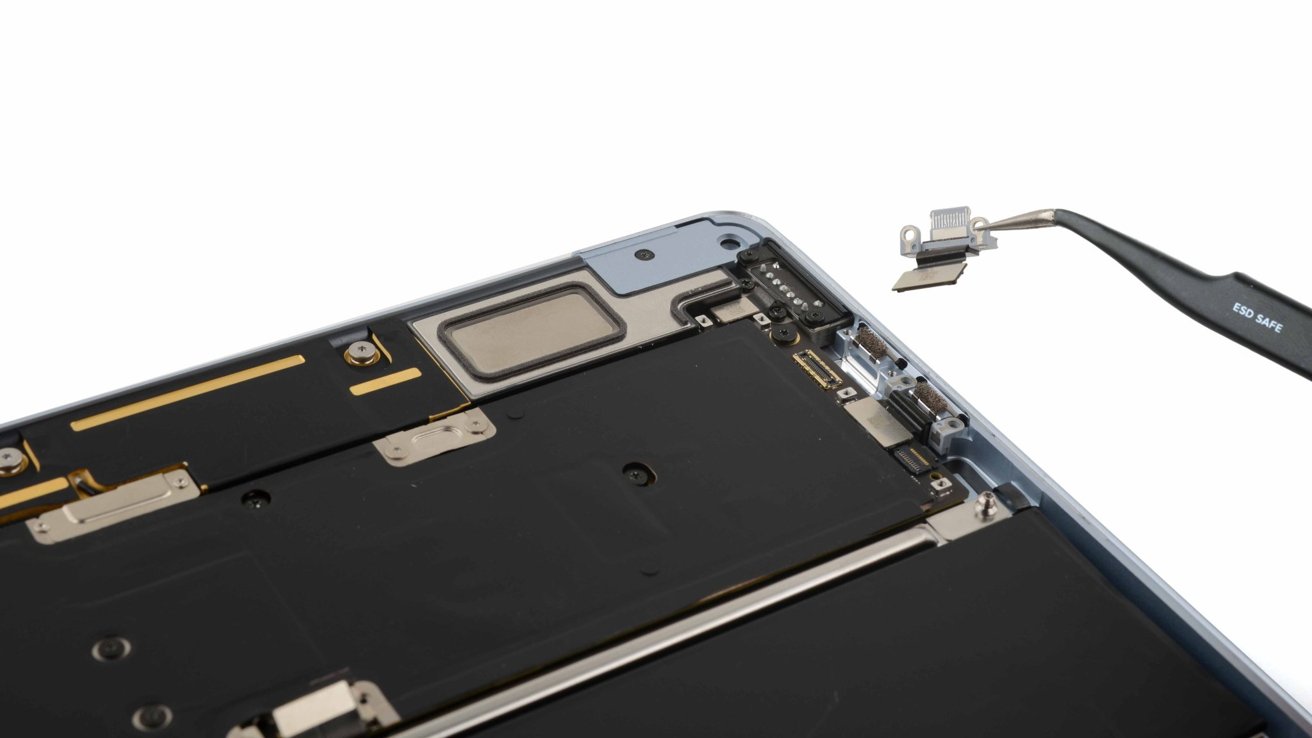

![iFixit Tears Down New M4 MacBook Air [Video]](https://www.iclarified.com/images/news/96717/96717/96717-640.jpg)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![[The AI Show Episode 138]: Introducing GPT-4.5, Claude 3.7 Sonnet, Alexa+, Deep Research Now in ChatGPT Plus & How AI Is Disrupting Writing](https://www.marketingaiinstitute.com/hubfs/ep%20138%20cover.png)

![How to become a self-taught developer while supporting a family [Podcast #164]](https://cdn.hashnode.com/res/hashnode/image/upload/v1741989957776/7e938ad4-f691-4c9e-8c6b-dc26da7767e1.png?#)

![[FREE EBOOKS] ChatGPT Prompts Book – Precision Prompts, Priming, Training & AI Writing Techniques for Mortals & Five More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.jpg?#)

.jpg?width=1920&height=1920&fit=bounds&quality=80&format=jpg&auto=webp#)