AI Governance Is Too Vague. It’s Time to Get Practical

The conversation around AI governance has often been frustratingly vague. Organizations talk the talk about AI ethics and regulatory compliance, but when it comes to practical implementation, many are paralyzed by uncertainty. Governance, as it stands today, is often a high-level corporate directive rather than a concrete, practicable plan.

What if AI governance wasn’t just a generic, one-size-fits-all framework? What if, instead, it was a model-specific strategy, one that ensures transparency, accountability, and fairness at the operational level?

According to Guy Reams, chief revenue officer at digital transformation firm Blackstraw.ai, governance cannot remain an abstract principle. Instead, it must be deeply embedded into AI systems at the model level, accounting for everything from data lineage to bias detection to domain-specific risks. Without this shift, governance becomes a performative exercise that does little to prevent AI failures or regulatory penalties.

To explore this shift in thinking, AIwire sat down with Reams to discuss why AI governance must evolve and what enterprises should be doing now to get ahead of the curve.

Why Companies Can’t Afford to Wait for Regulation

A common misconception in AI governance is that regulators will dictate the rules, relieving enterprises of the burden of responsibility. The Biden administration’s executive order on AI (since rescinded by the Trump administration), along with the EU’s AI Act, has established broad guidelines, but these policies will always lag behind technological innovation. The rapid evolution of AI, particularly since the emergence of ChatGPT in 2022, has only widened this gap.

Reams warns that companies expecting AI regulations to provide complete clarity or absolve them of responsibility are setting themselves up for failure.

“[Governance] ultimately is their responsibility,” he says. “The danger of having an executive order or a specific regulation is that it generally moves people's idea away from their responsibility and puts the responsibility on the regulation or the governance body.”

In other words, regulation alone won’t make AI safe or ethical. Companies must take ownership. While Reams acknowledges the need for some regulatory pressure, he argues that governance should be a collaborative, industry-wide effort, not a rigid, one-size-fits-all mandate.

“I think an individual mandate creates a potential problem where people will think the problem is solved when it's not,” he explains.

Instead of waiting for regulators to act, businesses must proactively establish their own AI governance frameworks. The risk of inaction isn’t just regulatory; it’s operational, financial, and strategic. Enterprises that neglect AI governance today will find themselves unable to scale AI effectively, exposed to compliance failures, and at risk of losing credibility with stakeholders.

Model-Specific AI Governance: A New Imperative

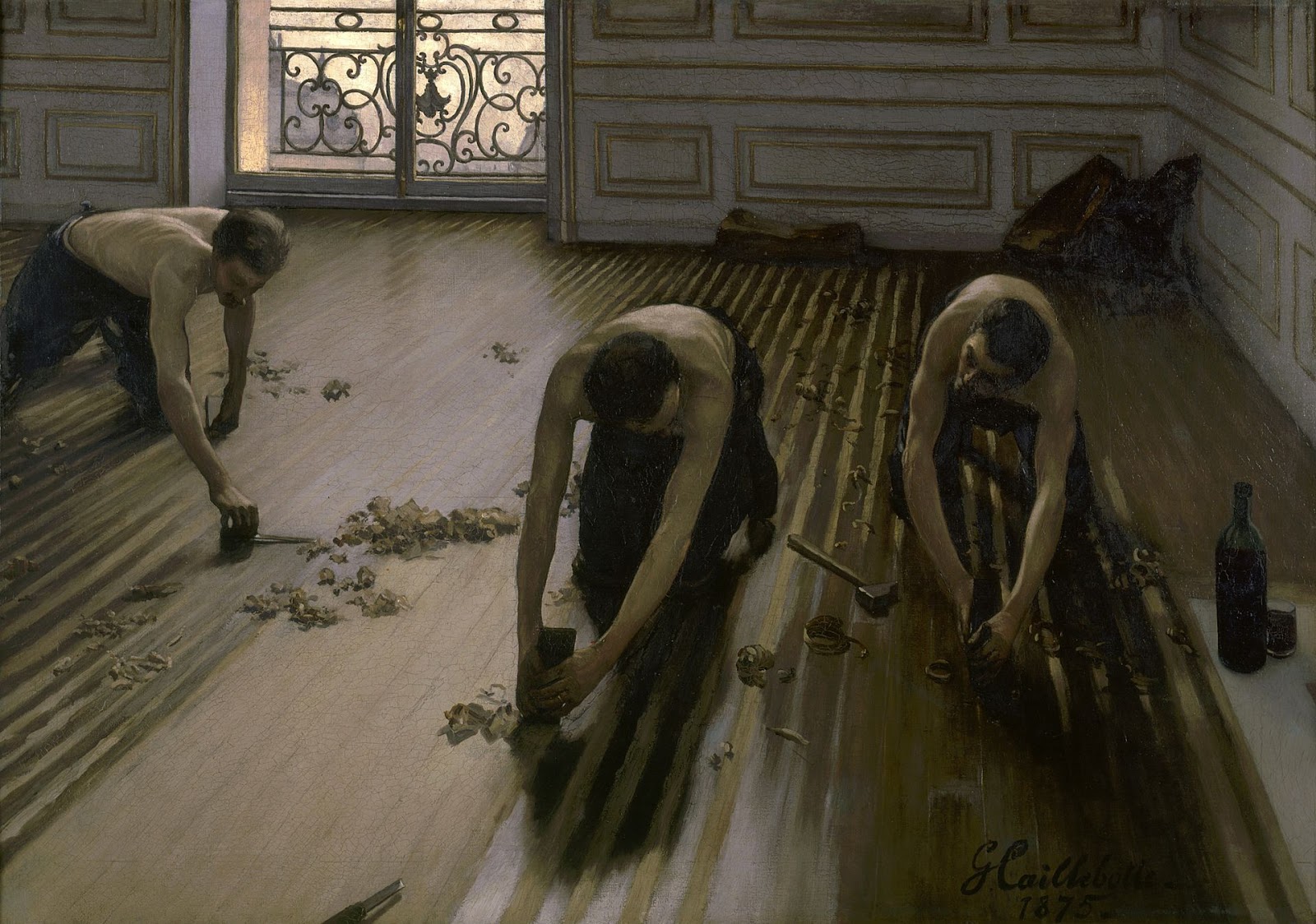

The biggest flaw in many AI governance strategies is that they are often too abstract to be effective. Broad ethical guidelines and regulatory checkboxes don’t easily translate into real accountability when AI systems are deployed at scale. Governance must be embedded at the model level, shaping how AI is built, trained, and implemented across the organization.

Reams argues that one of the first steps in effective AI governance is establishing a cross-functional governance board. Too often, AI oversight is dominated by legal and compliance teams, resulting in overly cautious policies that stifle innovation.

“I recently saw a policy where an enterprise decided to ban the use of all AI corporate-wide, and the only way you can use AI is if you use their own internal AI tool,” Reams recalls. “So, you can go do natural language processing with a chatbot they've created, but you cannot use ChatGPT or Anthropic (Claude) or any others. And that, to me, is when you have way too much legal representation on that governance decision.”

Instead of blanket restrictions dictated by a few, governance should draw on input not just from legal but also from IT security, business leaders, data scientists, and data governance teams, so that AI policies are both risk-aware and practical, Reams says.

Transparency is another critical pillar of model-specific governance. Google pioneered the concept of model cards, which are short documents provided with machine learning models that give context on what a model was trained on, what biases may exist, and how often it has been updated. Reams sees this as a best practice that all enterprises should adopt in order to shed light on the black box.

“That way, when somebody goes to use AI, they have a very open, transparent model card that pops up and tells them that the answer to their question was based on this model, this is how accurate it is, and this is the last time we modified this model,” Reams says.
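
To make the idea concrete, here is a minimal sketch of the kind of record that could sit behind such a pop-up. The class name, fields, and sample values are hypothetical and simply mirror the items Reams lists (which model answered, how accurate it is, when it last changed), plus the training data and bias notes mentioned above; this is not Google's Model Card Toolkit schema.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ModelCard:
    """Hypothetical model card: the facts a user sees alongside an AI answer."""
    model_name: str
    version: str
    training_data: str      # plain-language summary of training sources
    accuracy: float         # share correct on an internal evaluation set (assumed metric)
    last_modified: date
    known_biases: list[str] = field(default_factory=list)

    def notice(self) -> str:
        """Render the short, user-facing summary shown next to an answer."""
        biases = "; ".join(self.known_biases) or "none documented"
        return (
            f"This answer came from {self.model_name} (v{self.version}). "
            f"Evaluated accuracy: {self.accuracy:.0%}. "
            f"Last modified: {self.last_modified.isoformat()}. "
            f"Trained on: {self.training_data}. "
            f"Known biases: {biases}."
        )


# Illustrative values only.
card = ModelCard(
    model_name="support-assistant",
    version="1.3",
    training_data="anonymized support tickets, 2022-2024",
    accuracy=0.92,
    last_modified=date(2025, 2, 14),
    known_biases=["few non-English tickets in training data"],
)
print(card.notice())
```

Keeping the card as structured data rather than a free-form document means the same record can feed both the user-facing notice and internal audits.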

Data lineage is another element of the transparency pillar and a key defense against opaque AI systems. “I think there's this black box mentality, which is, I ask a question, then [the model] goes and figures it out and gives me an answer,” Reams says. “I don't think we should be training end users to think that they can trust the black box.”

AI systems must be able to trace their outputs back to specific data sources, ensuring compliance with privacy laws, fostering accountability, and reducing the risk of hallucinations.
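
One way to picture this is an answer that carries the IDs of the source records it was grounded on, checked against a registry of governed data sources. The sketch below assumes a hypothetical in-memory registry and schema; a real deployment would pull lineage from a data catalog. It flags answers with no traceable source (a possible hallucination) or that touch personal data (a privacy concern).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRecord:
    """One governed data source (hypothetical schema)."""
    source_id: str
    dataset: str
    contains_personal_data: bool


@dataclass
class TracedAnswer:
    """A model answer plus the IDs of the source records it was grounded on."""
    text: str
    source_ids: list[str]


# Hypothetical lineage registry: source ID -> where the data came from.
REGISTRY = {
    "doc-17": SourceRecord("doc-17", "product_manuals_2024", False),
    "crm-88": SourceRecord("crm-88", "crm_export_q3", True),
}


def trace(answer: TracedAnswer) -> list[SourceRecord]:
    """Return the lineage of an answer; raise if any cited source is unregistered."""
    missing = [s for s in answer.source_ids if s not in REGISTRY]
    if missing:
        raise LookupError(f"untraceable sources: {missing}")
    return [REGISTRY[s] for s in answer.source_ids]


def flag_for_review(answer: TracedAnswer) -> bool:
    """Flag answers with no sources or any source containing personal data."""
    if not answer.source_ids:
        return True
    return any(r.contains_personal_data for r in trace(answer))


manual_answer = TracedAnswer("The warranty lasts two years.", ["doc-17"])
print([r.dataset for r in trace(manual_answer)])
print(flag_for_review(manual_answer))
```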

Bias detection is another critical component of transparent governance, helping ensure that models do not unintentionally favor certain perspectives because of imbalanced training data. According to Reams, bias in AI isn't always the overt kind we associate with social discrimination; it can be a subtler issue of models skewing results based on the limited data they were trained on. To address this, companies can use bias detection engines, third-party tools that analyze AI outputs against the model's training data to identify patterns of unintentional bias.

“Of course, you would have to trust the third-party tool, but this is why, as a company, you need to get your act together, because you need to start thinking about these things and start evaluating and figuring out what works for you,” Reams notes, pointing out that companies must proactively evaluate which bias mitigation approaches align with their industry-specific needs.
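
Commercial bias detection engines vary, and their internals are not described here. As a rough illustration of one common check a company might run itself, the sketch below compares how often a model returns a favorable outcome for each group and flags groups that fall well below the best-performing one. The 0.8 threshold follows the "four-fifths" rule of thumb from US employment guidance; the group labels and data are made up, and a flag is a prompt for investigation, not proof of bias.

```python
from collections import defaultdict


def selection_rates(outputs: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable model decisions per group, from (group, favorable) pairs."""
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for group, is_favorable in outputs:
        totals[group] += 1
        favorable[group] += int(is_favorable)
    return {g: favorable[g] / totals[g] for g in totals}


def flag_disparities(outputs: list[tuple[str, bool]], threshold: float = 0.8) -> dict[str, float]:
    """Return groups whose selection rate is below `threshold` x the best group's rate.

    The 0.8 default mirrors the "four-fifths" screening heuristic; it does not
    establish that a model is fair or unfair on its own.
    """
    rates = selection_rates(outputs)
    best = max(rates.values(), default=0.0)
    if best == 0.0:
        return {}  # no favorable outcomes at all, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical screening decisions from a hiring model: 40% vs. 25% favorable.
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)
print(flag_disparities(decisions))
```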

Human oversight also remains a key safeguard. AI-driven decision-making is accelerating, but keeping a human in the loop ensures accountability. In high-risk areas like fraud detection or hiring decisions, human reviewers must validate AI-generated insights before action is taken.

Reams believes this is a necessary check against AI’s limitations: “When we develop the framework, we make sure that decisions are reviewable by humans, so that humans can be in the loop on the decisions being made, and we can use those human decisions to further train the AI model. The model can then be further enhanced and further improved when we keep humans in the loop, as humans can evaluate and review the decisions that are being made.”
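
The review loop Reams describes can be sketched as a simple gate: decisions in high-risk domains, or below a confidence cutoff, wait in a queue for a person, and each human verdict is saved as a potential retraining example. The domain list, the 0.9 cutoff, and all names below are assumptions for illustration, not Blackstraw.ai's framework.

```python
from dataclasses import dataclass, field
from typing import Optional

HIGH_RISK_DOMAINS = {"fraud", "hiring"}  # assumption: domains that always need review


@dataclass
class Decision:
    case_id: str
    domain: str
    model_label: str
    model_confidence: float
    final_label: Optional[str] = None   # set once the decision is finalized
    reviewed_by: Optional[str] = None   # reviewer name, if a human decided


@dataclass
class ReviewGate:
    """Route risky or uncertain decisions to people and keep their verdicts."""
    min_confidence: float = 0.9
    queue: list[Decision] = field(default_factory=list)
    training_feedback: list[tuple[str, str, str]] = field(default_factory=list)

    def submit(self, d: Decision) -> Optional[str]:
        """Auto-finalize low-risk, confident decisions; queue everything else."""
        if d.domain in HIGH_RISK_DOMAINS or d.model_confidence < self.min_confidence:
            self.queue.append(d)
            return None
        d.final_label = d.model_label
        return d.final_label

    def review(self, case_id: str, reviewer: str, label: str) -> Decision:
        """Record a human verdict and keep (case, model label, human label) for retraining."""
        matches = [x for x in self.queue if x.case_id == case_id]
        if not matches:
            raise KeyError(case_id)
        d = matches[0]
        self.queue.remove(d)
        d.final_label, d.reviewed_by = label, reviewer
        self.training_feedback.append((d.case_id, d.model_label, label))
        return d


gate = ReviewGate()
auto = gate.submit(Decision("c1", "support", "resolve", 0.97))  # finalized automatically
held = gate.submit(Decision("c2", "hiring", "reject", 0.99))    # queued for a human
gate.review("c2", reviewer="j.doe", label="advance")
print(gate.training_feedback)
```

Storing the model's label next to the human's makes disagreements easy to find, which is where retraining is likely to help most.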

AI Governance as a Competitive Advantage

AI governance is not just about compliance; it can also serve as a business resilience strategy that determines a company’s ability to scale AI responsibly while maintaining trust and avoiding legal pitfalls. Reams says organizations that ignore governance are missing an opportunity and could lose a competitive advantage as AI continues to advance.

“What's going to happen is [AI] is going to grow bigger and become more complicated. And if you're not on top of governance, then you won't be prepared to implement this when you need to, and you'll have no way of dealing with it. If you haven't done the work, then when AI really hits big, which it hasn't even started yet, you're going to be unprepared,” he says.

Companies that embed governance at the model level will be better positioned to scale AI operations responsibly, reduce exposure to regulatory risks, and build stakeholder confidence. But governance doesn’t happen in a vacuum, and maintaining this competitive advantage also requires trust in AI vendors. Most organizations depend on AI vendors and external providers for tools and infrastructure, making transparency and accountability across the AI supply chain critical.

Reams emphasizes that AI vendors have a crucial role to play, particularly when it comes to the transparency measures mentioned earlier, such as model cards, disclosure of training data sources, and bias mitigation strategies. Companies should establish vendor accountability measures, such as bias detection assessments run before vendor-supplied AI models are deployed and repeated on an ongoing basis afterward. While governance begins internally, it must extend to the broader AI ecosystem to ensure sustainable and ethical AI adoption.
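
A vendor check of this kind can start as a simple pre-deployment gate that lists which transparency requirements a supplied model has not yet met. The fields below are hypothetical and cover only the three items named above; a real review would attach evidence (the card itself, the data disclosure, the bias report) rather than booleans.

```python
from dataclasses import dataclass


@dataclass
class VendorModel:
    """What a vendor has provided for a supplied model (hypothetical fields)."""
    name: str
    has_model_card: bool
    discloses_training_sources: bool
    bias_assessment_passed: bool  # outcome of the company's own bias assessment


def deployment_blockers(m: VendorModel) -> list[str]:
    """List unmet transparency requirements; an empty list means the model may proceed."""
    checks = {
        "missing model card": m.has_model_card,
        "training data sources not disclosed": m.discloses_training_sources,
        "bias assessment not passed": m.bias_assessment_passed,
    }
    return [problem for problem, ok in checks.items() if not ok]


candidate = VendorModel("vendor-llm", has_model_card=True,
                        discloses_training_sources=False, bias_assessment_passed=True)
print(deployment_blockers(candidate))
```

Re-running the same gate whenever the vendor ships a model update is one way to keep the assessment ongoing rather than a one-time sign-off.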

Organizations that approach AI governance with this holistic mindset will not only protect themselves from risk but will also position themselves as leaders in responsible AI implementation. Enterprises must shift governance from a compliance checkbox to a deeply integrated, model-specific practice.

Companies that fail to act now will struggle to scale AI responsibly and will be at a strategic disadvantage when AI adoption moves from an experimental phase to an operational necessity. In this new paradigm, the future of AI governance will not be defined by regulators but will be shaped by the organizations that lead the way in setting practical, model-level governance frameworks.