Simular Releases Agent S2: An Open, Modular, and Scalable AI Framework for Computer Use Agents

The post Simular Releases Agent S2: An Open, Modular, and Scalable AI Framework for Computer Use Agents appeared first on MarkTechPost.

In today’s digital landscape, interacting with a wide variety of software and operating systems can often be a tedious and error-prone experience. Many users face challenges when navigating through complex interfaces and performing routine tasks that demand precision and adaptability. Existing automation tools frequently fall short in adapting to subtle interface changes or learning from past mistakes, leaving users to manually oversee processes that could otherwise be streamlined. This persistent gap between user expectations and the capabilities of traditional automation calls for a system that not only performs tasks reliably but also learns and adjusts over time.

Simular has introduced Agent S2, an open, modular, and scalable framework for building computer use agents. Agent S2 builds on the foundation laid by its predecessor and offers a refined approach to automating tasks on both computers and smartphones. Because it combines general-purpose and specialized models in a modular design, the framework can be adapted to a variety of digital environments. The design is inspired by the natural modularity of the human brain, where different regions cooperate to handle complex tasks, with the aim of producing a system that is both flexible and robust.
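The article does not describe how Agent S2 wires its general-purpose and specialized models together. As a rough illustration of the idea only, the sketch below routes each action request to a registered specialist module and falls back to a general-purpose handler when no specialist matches. Every name here (`ActionRequest`, `ModularAgent`, the action kinds, and the lambda "models") is hypothetical and is not Simular's API; real modules would wrap model calls rather than format strings.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ActionRequest:
    """Hypothetical request emitted by a general-purpose planning model."""
    kind: str      # illustrative labels such as "click" or "summarize"
    payload: str   # natural-language description of the target or goal


Handler = Callable[[ActionRequest], str]


@dataclass
class ModularAgent:
    """Hypothetical router: a registered specialist if one exists, else the generalist."""
    generalist: Handler
    specialists: Dict[str, Handler] = field(default_factory=dict)

    def register(self, kind: str, handler: Handler) -> None:
        # Plug in a specialized module for one kind of request.
        self.specialists[kind] = handler

    def handle(self, request: ActionRequest) -> str:
        # Fall back to the general-purpose handler when no specialist matches.
        handler = self.specialists.get(request.kind, self.generalist)
        return handler(request)


if __name__ == "__main__":
    agent = ModularAgent(generalist=lambda r: f"generalist handled {r.kind}")
    agent.register("click", lambda r: f"grounding expert located '{r.payload}'")
    print(agent.handle(ActionRequest("click", "Save button")))
    # grounding expert located 'Save button'
    print(agent.handle(ActionRequest("summarize", "open document")))
    # generalist handled summarize
```

Keeping specialists behind a single dispatch point is one way to get the kind of modularity the article describes: a module can be added or swapped without changing the planner that emits the requests.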

Technical Details and Benefits

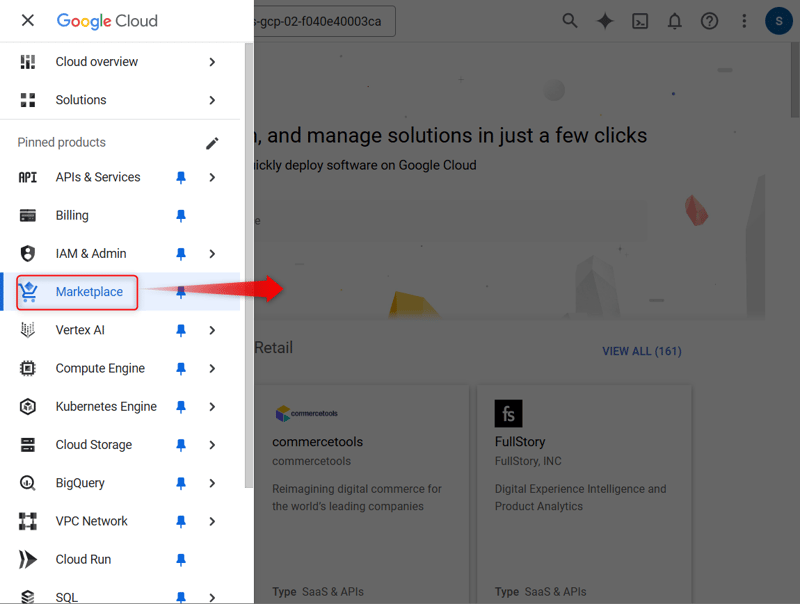

At its core, Agent S2 employs experience-augmented hierarchical planning: long, intricate tasks are broken down into smaller, more manageable subtasks, and the framework refines its strategy by learning from previous experiences, so its execution improves over time.

Agent S2 also relies on visual grounding, which lets it interpret raw screenshots to interact precisely with graphical user interfaces. Working directly from screenshots removes the need for additional structured data and improves the system's ability to identify and interact with the correct UI elements.

Finally, Agent S2 uses an Agent-Computer Interface that delegates routine, low-level actions to expert modules. An adaptive memory mechanism complements this by retaining useful experiences, so that later decisions can draw on what worked before.
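To make experience-augmented hierarchical planning more concrete, here is a minimal sketch under stated assumptions; it is not Agent S2's implementation. A task is either matched against a memory of previously successful plans or decomposed from scratch into subtasks; the subtasks are then executed in order, and plans that succeed are stored for reuse. The names `ExperienceMemory`, `run_task`, and `similarity`, the 0.5 threshold, and the word-overlap (Jaccard) retrieval are all hypothetical stand-ins. A real system would more plausibly use a language model as the planner and embedding-based retrieval, and `execute` would wrap the grounding and Agent-Computer Interface layers that turn a subtask into clicks and keystrokes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set


def _tokens(text: str) -> Set[str]:
    return set(text.lower().split())


def similarity(a: str, b: str) -> float:
    """Jaccard word overlap: a crude, hypothetical stand-in for embedding retrieval."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


@dataclass
class ExperienceMemory:
    """Hypothetical store of subtask plans that previously succeeded."""
    entries: Dict[str, List[str]] = field(default_factory=dict)

    def recall(self, task: str, threshold: float = 0.5) -> Optional[List[str]]:
        # Return a copy of the plan for the most similar past task, if close enough.
        best_task, best_score = None, 0.0
        for past_task in self.entries:
            score = similarity(task, past_task)
            if score > best_score:
                best_task, best_score = past_task, score
        if best_task is not None and best_score >= threshold:
            return list(self.entries[best_task])
        return None

    def store(self, task: str, plan: List[str]) -> None:
        self.entries[task] = list(plan)


Planner = Callable[[str], List[str]]  # task -> ordered subtasks (high level)
Executor = Callable[[str], bool]      # subtask -> success flag (low level)


def run_task(task: str, memory: ExperienceMemory,
             plan_from_scratch: Planner, execute: Executor) -> bool:
    # High level: reuse a remembered plan for a similar task, else decompose anew.
    plan = memory.recall(task) or plan_from_scratch(task)
    # Low level: execute subtasks in order and stop at the first failure.
    for subtask in plan:
        if not execute(subtask):
            return False
    # Only successful plans are remembered for future reuse.
    memory.store(task, plan)
    return True


if __name__ == "__main__":
    planner_calls = []

    def naive_planner(task: str) -> List[str]:
        planner_calls.append(task)
        return ["open the File menu", "choose Export", "select PDF format"]

    memory = ExperienceMemory()
    run_task("export report as PDF", memory, naive_planner, lambda s: True)
    run_task("export the report as PDF", memory, naive_planner, lambda s: True)
    print(len(planner_calls))  # 1: the second task reused the stored plan
```

Because the second request is close enough to the first, it skips the planner and reuses the stored plan; a failed run is never stored, so unsuccessful plans do not accumulate in memory.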

Results and Insights

Evaluations on real-world benchmarks show Agent S2 performing well in both computer and smartphone environments. On the OSWorld benchmark, which tests the execution of multi-step computer tasks, Agent S2 achieved a success rate of 34.5% in the 50-step evaluation, a modest but consistent improvement over earlier models. On the AndroidWorld benchmark, the framework reached a 50% success rate on smartphone tasks. These results point to the practical benefits of a system that can plan ahead and adapt to dynamic conditions, completing tasks more accurately and with less manual intervention.

Conclusion

Agent S2 represents a thoughtful approach to improving everyday digital interactions. By addressing common challenges in computer automation through a modular design and adaptive learning, the framework offers a practical way to handle routine tasks more efficiently. Its combination of proactive planning, visual understanding, and expert delegation makes it well suited to both complex computer tasks and mobile applications. As digital workflows continue to evolve, Agent S2 offers a measured, reliable way to bring automation into daily routines, helping users achieve better outcomes with less manual oversight.

Check out the Technical details and GitHub Page. All credit for this research goes to the researchers of this project.