RavenDB 7.0 Released: AI & Vector Search

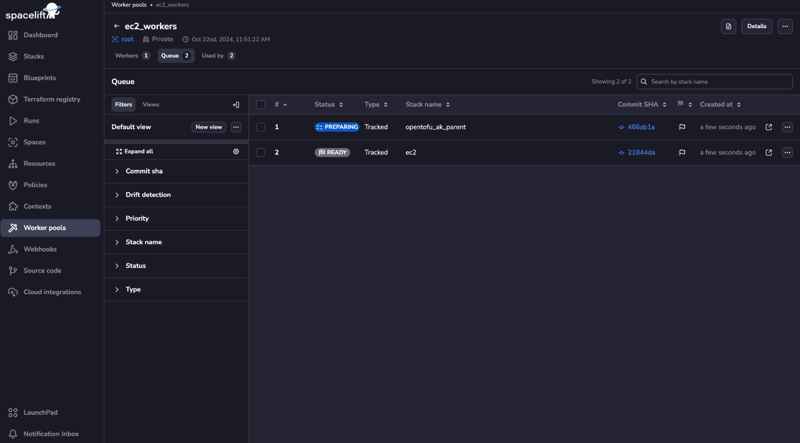

RavenDB 7.0 is out, and the big news is vector search and AI integration in the box. You can download the new bits, run it in the cloud, or just look at the public instance to test it out.

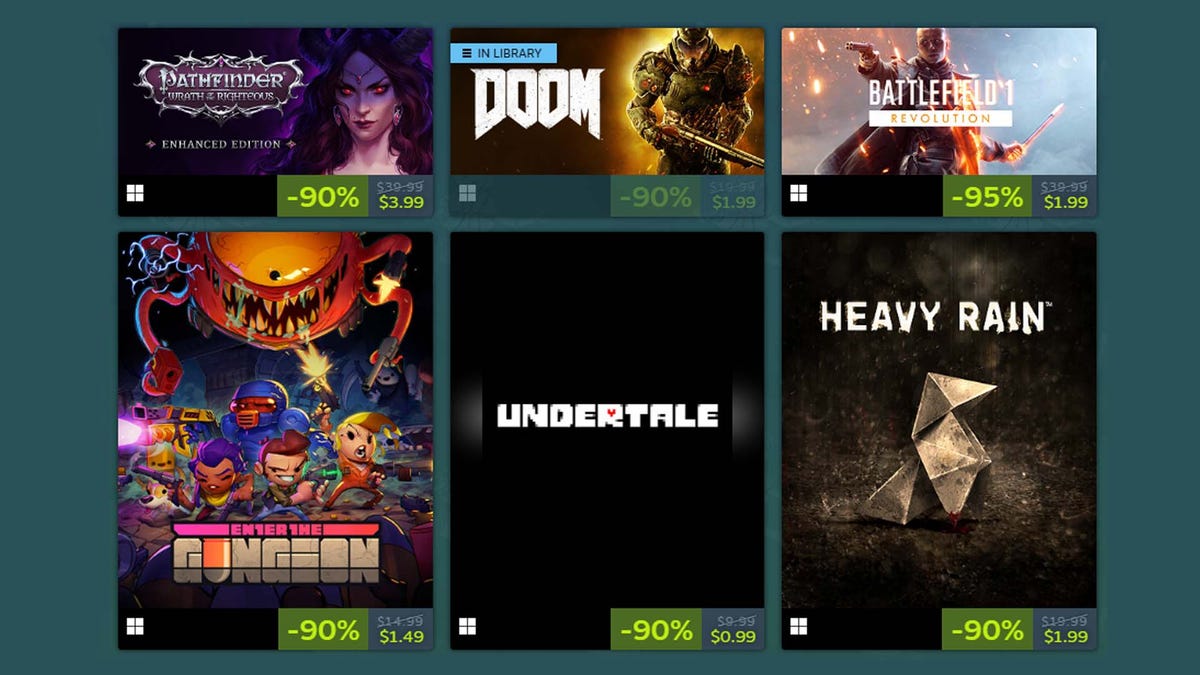

Before discussing the actual feature, I want to show you what we have done:

$query = 'I feel like italian today'

from Products

where vector.search(embedding.text(Name), $query)

Try that in the public instance using the sample database. Here is what you get back!

I wrote parts of the vector search for RavenDB, and even so, I was pretty amazed when I realized that the query above just works. Note that there is no setup to be done here. You just issue a query and ask it to use vector.search() during execution. RavenDB handles everything else.

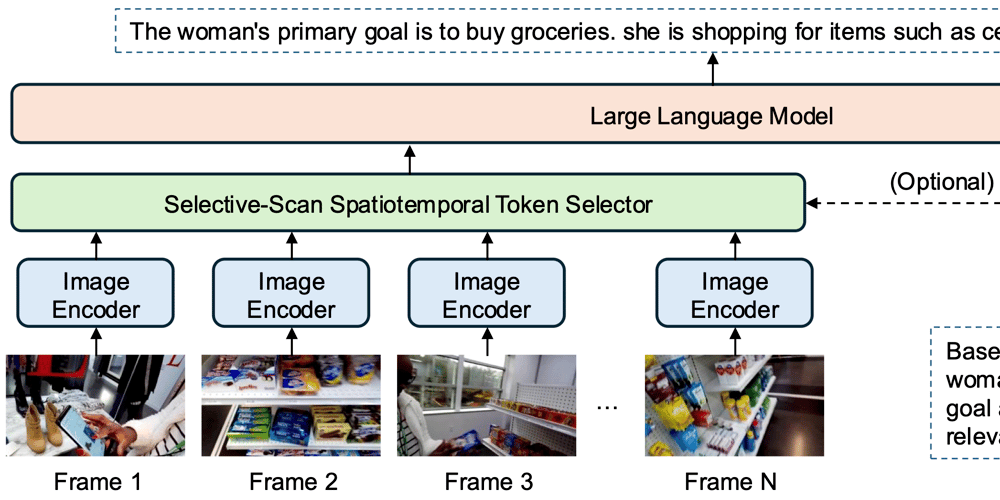

You can read more about the vector search feature in our documentation, but the gist of it is that you can run a piece of data (text, image, or even a video) through a large language model and get a vector back. That vector allows you to query using the model’s understanding of the data.

The idea is that this moves you beyond querying with keywords or even full-text search. You are now able to search for meaning and intent. You can leverage models such as OpenAI, Ollama, Grok, Mistral, or DeepSeek to give your users deep insight into their data inside RavenDB.
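To make "searching for meaning" a bit more concrete, here is a minimal Python sketch of what a vector query boils down to: compare the query's vector to each document's vector and rank by similarity. This is not RavenDB's implementation. The three-dimensional vectors, the product names, and the `exact_search` helper are all made up for illustration; a real embedding model produces vectors with hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def exact_search(query_vector, documents, top_k=2):
    """Brute force: score every document against the query, keep the best top_k."""
    scored = [(cosine_similarity(query_vector, vec), name)
              for name, vec in documents.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# Hypothetical hand-written "embeddings" (assumption: not produced by any real model).
PRODUCT_VECTORS = {
    "pasta carbonara": [0.9, 0.1, 0.0],
    "margherita pizza": [0.8, 0.2, 0.1],
    "green tea": [0.0, 0.1, 0.9],
}

# Stand-in for the vector a model might return for 'I feel like italian today'.
QUERY_VECTOR = [1.0, 0.0, 0.0]

print(exact_search(QUERY_VECTOR, PRODUCT_VECTORS))
```

Note that the query text shares no keywords with the product names. The ranking comes entirely from how close the vectors are, which is what lets this kind of search go beyond keyword or full-text matching.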

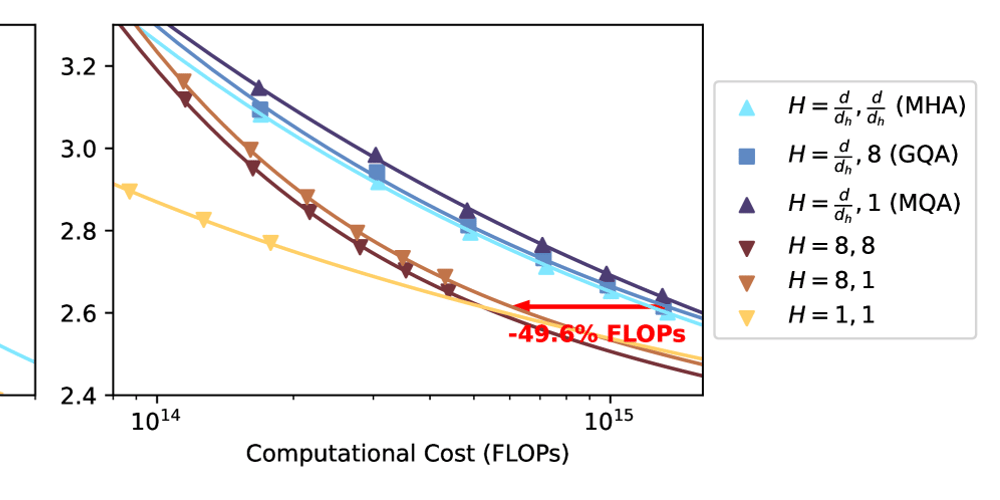

RavenDB embeds a small model (bge-micro-v2) and can apply it during auto-indexes, both for generating embeddings for your data and for queries. As you can see, even with a tiny model, the results are spectacular.

Naturally, you can also use larger models, including OpenAI, Ollama, Grok, and more. Using a large model means it has a better contextual understanding of the relationships between the data and the query and can provide more accurate results.

RavenDB’s support for vector search includes:

- Approximate neighbor search using the HNSW algorithm.

- Exact neighbor search.

- Support for vectors stored as float arrays, base64-encoded strings, or binary attachments.

- RavenVector type for optimizing disk space and improving the read speed of vectors.

- Using full vectors or providing quantized ones to reduce disk space.

- Support for auto-quantization of vectors during indexing & queries.
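To show why quantization reduces disk space, here is a rough Python sketch of one generic scheme: scaling each float into a signed 8-bit integer. This is an assumption made for illustration and not a description of how RavenDB quantizes vectors internally. The helper names (`quantize_int8`, `dequantize_int8`, `stored_size`) are hypothetical.

```python
import struct


def quantize_int8(vector):
    """Scale floats into the signed 8-bit range [-127, 127]."""
    largest = max(abs(x) for x in vector)
    scale = largest if largest > 0 else 1.0  # avoid dividing by zero
    return [round(x / scale * 127) for x in vector], scale


def dequantize_int8(quantized, scale):
    """Approximate the original floats from the quantized values."""
    return [q * scale / 127 for q in quantized]


def stored_size(vector, fmt):
    """Bytes needed to pack the vector with the given struct format code."""
    return len(struct.pack(f"{len(vector)}{fmt}", *vector))


FULL = [0.5, -1.0, 0.25, 0.75]
QUANTIZED, SCALE = quantize_int8(FULL)

print(stored_size(FULL, "f"), "bytes as 32-bit floats")
print(stored_size(QUANTIZED, "b"), "bytes as 8-bit integers")
print(dequantize_int8(QUANTIZED, SCALE))  # close to FULL, not identical
```

The four-dimensional vector takes 16 bytes as 32-bit floats and 4 bytes as 8-bit integers. The price is a small rounding error in each component, which is the usual trade-off between storage and accuracy.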

Our aim with the new RavenDB 7.0 release is to make it possible for you to find meaning - to be able to leverage vectors, embeddings, large language models, and AI without fuss or trouble. I’m really proud to say that we have exceeded all my expectations.

There are a lot more exciting announcements about RavenDB & AI integration in the pipeline, and I’m really excited to share them with you in the near future.

There are actually other features that are part of RavenDB 7.0, but AI is eating the world, so we’ll cover them in a separate blog post instead of giving them a tertiary spot in this one.
