The Pain and Agony of Parsing Nightmares


Lately, I've been diving deep into the world of parsers and tokenizers, attempting to rebuild Stata from scratch in JavaScript using Chevrotain. It's been an exciting yet incredibly frustrating journey, and I've hit a roadblock that has me questioning my life choices.

The Problem: Context-Sensitive Keywords.

In Stata, it’s perfectly acceptable for command names to double as variable names. Consider this simple example:

generate generate = 2

Here, the first generate is a command, while the second is simply a variable name. This kind of flexibility is intuitive for us humans, but it can turn into a real headache for a parser.

In my initial implementation, I ran into two major issues:

- My token definitions were matching command keywords like generate indiscriminately, regardless of context.
- The command tokens were checked before identifier tokens in my lexer's order of precedence.

This created an impossible situation where the parser couldn't distinguish between commands and identifiers with the same name.
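To make the failure concrete, here is a minimal sketch of the two issues above. It is a dependency-free toy lexer, not Chevrotain and not my actual token definitions; `RULES` and `naiveLex` are names made up for illustration, and the command list is just a sample.

```javascript
// Toy first-match lexer: rules are tried in order, so Command always beats Identifier.
// RULES and naiveLex are hypothetical names, not part of Chevrotain.
const RULES = [
  { type: "Command", pattern: /^(generate|replace|drop)\b/ },
  { type: "Identifier", pattern: /^[A-Za-z_][A-Za-z0-9_]*/ },
  { type: "Equals", pattern: /^=/ },
  { type: "Number", pattern: /^\d+(\.\d+)?/ },
];

function naiveLex(source) {
  const tokens = [];
  let rest = source.trimStart();
  while (rest.length > 0) {
    // Pick the first rule whose pattern matches at the current position.
    const rule = RULES.find((r) => r.pattern.test(rest));
    if (!rule) throw new Error(`Unexpected input: ${rest}`);
    const text = rest.match(rule.pattern)[0];
    tokens.push({ type: rule.type, text });
    rest = rest.slice(text.length).trimStart();
  }
  return tokens;
}

console.log(naiveLex("generate generate = 2").map((t) => t.type).join(" "));
// prints "Command Command Equals Number"
```

Both occurrences of generate come out as Command, so a grammar rule that expects an Identifier after the command never gets one.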

I'm Not Alone

After some digging through parsing forums and documentation, I discovered that this isn’t an isolated problem. Many language implementers have wrestled with context-sensitive keywords. The common solutions often involve elaborate workarounds ranging from context-tracking mechanisms to entirely separate parsing passes.

Some Suggested Solutions:

Token Precedence

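Unfortunately, with my current Chevrotain setup, reordering token precedence alone doesn’t solve this. The lexer tries token types in the order they are listed and knows nothing about the grammar, so whichever of the command or identifier tokens comes first wins for every occurrence of generate. Chevrotain itself is a parser building toolkit for JavaScript (its documentation stresses that it is not a parser generator, since there is no code generation step) that uses LL(k) parsing techniques.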

LL(k) parsing techniques

- top-down parser: starts with the start symbol on the stack and repeatedly replaces nonterminals until the input string is derived.
- predictive parser: uses the lookahead to predict which rewrite rule to apply next.
- first L of LL: the input string is read left to right.
- second L of LL: the parser produces a leftmost derivation.
- k: the number of lookahead symbols.
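The points above can be sketched as a tiny hand-written predictive parser with k = 1. This is only an illustration under stated assumptions: the two-rule grammar, the token type names, and `parseStatement` are all hypothetical and far simpler than Stata's real syntax or anything Chevrotain does internally.

```javascript
// Hypothetical mini-grammar (not Stata's real grammar):
//   statement    -> generateStmt | dropStmt
//   generateStmt -> "generate" NAME "=" NUMBER
//   dropStmt     -> "drop" NAME
function parseStatement(tokens) {
  let pos = 0;
  const peek = () => tokens[pos]; // k = 1: inspect one token without consuming it
  const consume = (type) => {
    const tok = tokens[pos];
    if (!tok || tok.type !== type) {
      throw new Error(`Expected ${type} at token ${pos}`);
    }
    pos += 1;
    return tok;
  };

  // Predict which rule to expand from the single lookahead token.
  const next = peek();
  if (next && next.type === "Generate") {
    consume("Generate");
    const target = consume("Name").text;
    consume("Equals");
    const value = Number(consume("Number").text);
    return { kind: "generate", target, value };
  }
  if (next && next.type === "Drop") {
    consume("Drop");
    return { kind: "drop", target: consume("Name").text };
  }
  throw new Error("No alternative matches the lookahead token");
}

console.log(
  parseStatement([
    { type: "Generate", text: "generate" },
    { type: "Name", text: "price2" },
    { type: "Equals", text: "=" },
    { type: "Number", text: "2" },
  ])
);
```

The parser commits to a rule after looking at one token, which is exactly why it depends on the lexer having labelled that token correctly.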

Chevrotain provides tools for building recursive-descent parsers with full control over grammar definitions. This doesn’t mean it can’t handle token precedence. It definitely can, but making that precedence depend on context requires manual implementation, which involves considerable effort.

Contextual Parsing

Chevrotain has features that help with contextual tokens, such as token categories and lexer modes. You could define your keywords as contextual and accept them only in certain parsing rules.
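Here is a rough sketch of the idea, written without Chevrotain so it runs on its own. It assumes the lexer still tags every generate as Command; the grammar rule then decides what is acceptable at each position. `NAME_LIKE` and `parseGenerate` are hypothetical names, and in Chevrotain the closest equivalent I'm aware of is giving keyword tokens a category via the `categories` option of `createToken`.

```javascript
// Hypothetical sketch: Command tokens are also accepted wherever a name is expected.
const NAME_LIKE = new Set(["Identifier", "Command"]);

function parseGenerate(tokens) {
  let pos = 0;
  const consume = (accepts, label) => {
    const tok = tokens[pos];
    if (!tok || !accepts(tok)) {
      throw new Error(`Expected ${label} at token ${pos}`);
    }
    pos += 1;
    return tok;
  };

  // Command position: only the literal command keyword is accepted.
  consume((t) => t.type === "Command" && t.text === "generate", "generate");
  // Variable position: identifiers and command words are both accepted.
  const target = consume((t) => NAME_LIKE.has(t.type), "variable name").text;
  consume((t) => t.type === "Equals", "=");
  const value = Number(consume((t) => t.type === "Number", "number").text);
  return { command: "generate", target, value };
}

const result = parseGenerate([
  { type: "Command", text: "generate" },
  { type: "Command", text: "generate" },
  { type: "Equals", text: "=" },
  { type: "Number", text: "2" },
]);
console.log(result.target); // prints "generate"
```

The tradeoff is that every rule that consumes a variable name has to opt in to accepting command words, so the context handling lives in the grammar rather than the lexer.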

Post-Lexer Transformation

This one is interesting: a post-lexing pass examines the token stream and reclassifies command tokens as identifiers based on their syntactic position.
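A minimal sketch of such a pass, assuming a deliberately simplified rule: only the first token of a statement may stay a Command, and statements are separated by a Newline token. Real Stata is messier (prefixes such as `quietly` or `by varlist:` come before the command), so treat `reclassifyCommands` and the token shapes as hypothetical.

```javascript
// Hypothetical post-lexing pass: only the first token of a statement stays a Command.
function reclassifyCommands(tokens) {
  let atStatementStart = true;
  return tokens.map((tok) => {
    if (tok.type === "Newline") {
      atStatementStart = true; // the next token begins a new statement
      return tok;
    }
    const keepAsCommand = tok.type === "Command" && atStatementStart;
    atStatementStart = false;
    if (tok.type === "Command" && !keepAsCommand) {
      // A command word in a non-command position is treated as a plain name.
      return { ...tok, type: "Identifier" };
    }
    return tok;
  });
}

const fixed = reclassifyCommands([
  { type: "Command", text: "generate" },
  { type: "Command", text: "generate" },
  { type: "Equals", text: "=" },
  { type: "Number", text: "2" },
]);
console.log(fixed.map((t) => t.type).join(" "));
// prints "Command Identifier Equals Number"
```

The lexer and grammar stay untouched, at the cost of encoding a little syntax knowledge in the pass itself.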

Each of these methods has its merits and its tradeoffs. If anyone has tackled this issue from a different angle or has additional suggestions, I’d love to hear your thoughts. Let’s turn these parsing nightmares into stepping stones for better, more robust language design!

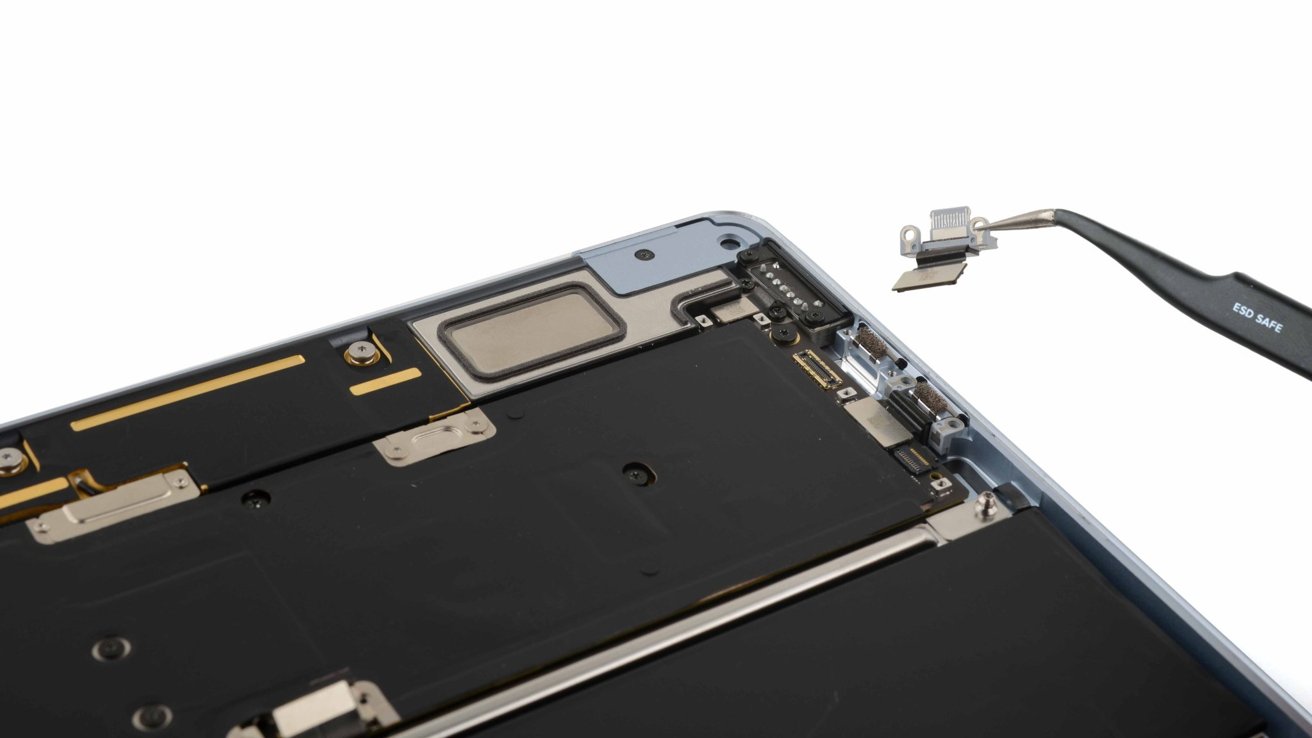
