From CosmosDB to DynamoDB: Migrating and Containerizing a FastAPI + React App

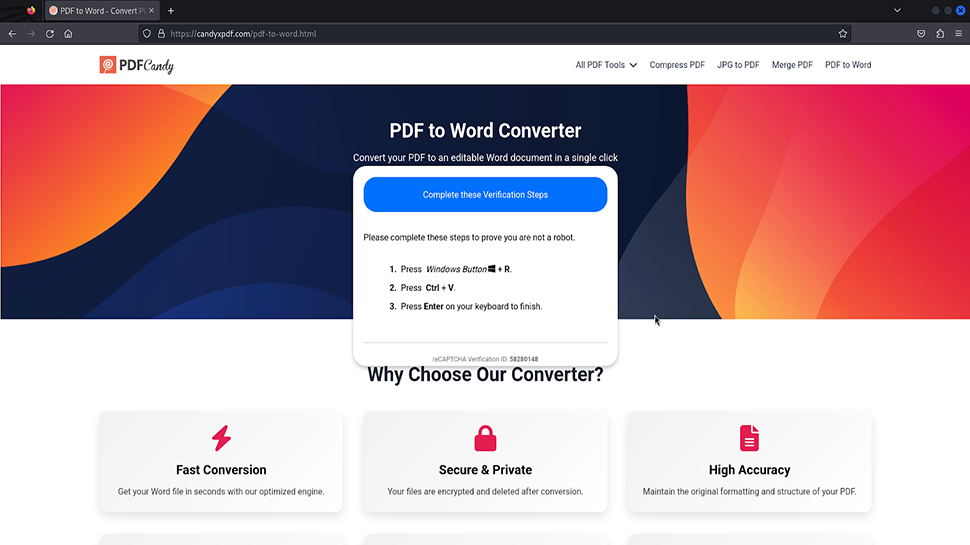

Building and deploying a full-stack application involves more than just writing code. It's a journey through infrastructure, automation, and inevitable troubleshooting. As part of the Learn to Cloud initiative, I recently expanded on a previous Phase 2 Capstone Project (a Serverless Movies API) to focus on applying core DevOps practices like containerization, CI/CD, and eventually observability/monitoring. The target was a simple TV show application (a sample is available here). The path from local development to a containerized deployment on AWS using CI/CD was filled with valuable lessons. Here's a look at the experience, the hurdles, and how I overcame them.

The Goal: A DevOps-Focused TV Show App

The application idea was straightforward: a web app where users could browse TV shows and view details about their seasons and episodes. The core goal, however, was applying modern DevOps techniques using this stack:

- Backend: FastAPI (Python) for its speed and ease of use.

- Frontend: React for building the user interface.

- Database: Initially Azure Cosmos DB, but migrated to AWS DynamoDB.

- Deployment: Docker containers running on an AWS EC2 instance.

- Automation: GitHub Actions for CI/CD.

Challenge 1: Migrating the Backend (Cosmos DB to DynamoDB)

One of the first major tasks was switching the database. Moving from Azure Cosmos DB’s SQL API to DynamoDB required more than just changing connection strings.

Problem: Different SDKs (azure-cosmos vs. boto3) and fundamentally different query models (SQL-like queries vs. DynamoDB's key-value/document operations like scan and get_item). AWS authentication also needed handling.

Solution: I refactored the backend’s data access layer to use boto3. Listing all shows involved a scan operation, which reads every item in the table and so becomes slower and more expensive as the table grows, while fetching specific show details used the efficient get_item, which looks up a single item by its key. For authentication, I moved from access keys to an IAM Role attached to the EC2 instance, which is more secure because no long-lived credentials have to be stored on the server. This involved ensuring the role had the correct DynamoDB permissions (dynamodb:Scan, dynamodb:GetItem, etc.).
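The data access layer described above might look roughly like the sketch below. It is not the project's actual code: the function names and the partition key name "id" are assumptions made for illustration. The scan helper follows LastEvaluatedKey because a single scan call returns at most one page of results, and no credentials are passed because boto3 picks them up from the instance's IAM Role.

```python
from typing import Any, Dict, List, Optional


def get_table(table_name: str, region: str):
    """Return a DynamoDB Table resource (credentials come from the IAM Role)."""
    import boto3  # imported lazily so the helpers below can be exercised without AWS

    return boto3.resource("dynamodb", region_name=region).Table(table_name)


def list_shows(table) -> List[Dict[str, Any]]:
    """Scan the whole table, following LastEvaluatedKey across result pages."""
    items: List[Dict[str, Any]] = []
    kwargs: Dict[str, Any] = {}
    while True:
        page = table.scan(**kwargs)
        items.extend(page.get("Items", []))
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return items
        # Resume the scan where the previous page stopped.
        kwargs["ExclusiveStartKey"] = last_key


def get_show(table, show_id: str) -> Optional[Dict[str, Any]]:
    """Fetch one show by key; "id" is an assumed partition key name."""
    response = table.get_item(Key={"id": show_id})
    # DynamoDB omits "Item" from the response when nothing matches.
    return response.get("Item")
```

A FastAPI route could then call list_shows(table) for the listing page and get_show(table, show_id) for the detail page, returning a 404 when get_show returns None.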

Challenge 2: Containerizing the Stack

Dockerizing the FastAPI backend and the React frontend (using an Nginx server) seemed standard, but ensuring they worked together smoothly within Docker Compose required careful configuration. The multi-stage build for the React/Nginx frontend was key to keeping the final image small.

Challenge 3: Setting Up the EC2 Environment

I chose Amazon Linux 2023 on a t4g.medium instance (for that Graviton price-performance!). Getting the environment ready for Docker threw up some unexpected curveballs.

Problem: Installing Docker Compose. My initial attempts (sudo dnf install docker-compose) failed because the package name corresponds to the deprecated V1. Trying the correct V2 package (sudo dnf install docker-compose-plugin) also failed initially!

Solution: After some head-scratching, I realized the dnf package manager's cache was likely stale. Running sudo dnf clean all before retrying the docker-compose-plugin installation resolved the issue. This was a good reminder that package managers aren't infallible and sometimes need a refresh.

Challenge 4: “Unable to Connect” — The Dreaded Networking Hurdle

With the containers seemingly running via docker compose up, I tried accessing the frontend from my browser using the EC2 instance's public IP and the mapped port (3000). Result: "Unable to connect."

Problem: The connection wasn’t even reaching the application. This almost always points to a firewall issue.

Solution: The culprit was the EC2 Security Group. It acts as a stateful firewall, and I hadn’t created an inbound rule to allow traffic on TCP port 3000 from my IP address (or 0.0.0.0/0 for testing). Adding this rule immediately fixed the connection issue. Testing connectivity locally on the instance (curl localhost:3000) also helped confirm the containers were running and mapped correctly, isolating the problem to the external firewall (the Security Group).
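The same inside-versus-outside check can be scripted. The sketch below is an illustration, not something used in the project: port_is_open is a hypothetical helper that simply attempts a TCP connection. If it succeeds when run on the instance against 127.0.0.1 but fails when run from your own machine against the public IP, the containers are fine and the block sits in front of the instance, which is consistent with a missing Security Group rule.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

On the instance, port_is_open("127.0.0.1", 3000) checks the container's port mapping; from a laptop, the same call with the instance's public IP checks the path through the firewall.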

Challenge 5: Docker Compose Runtime Issues

Even after connecting, the application wasn’t fully working. docker compose ps showed the frontend running, but the backend was missing.

Problem: Docker Compose logs (docker compose logs api-backend) revealed the backend container was failing to start because required environment variables (AWS_REGION, DYNAMODB_TABLE_NAME) were missing.

Solution: I had created a .env file, but I had to double-check:

- Was it in the same directory as docker-compose.yml? (Checked with ls -a).

- Did it contain the correct variable names and values? (Checked with cat .env).

- Was the docker-compose.yml correctly referencing these variables using the ${VAR} syntax in the environment section for the backend service?

Correcting a small mistake here and restarting with docker compose down && docker compose up -d brought the backend online.
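One way to make this kind of failure easier to spot is to have the backend validate its configuration at startup and name every missing variable in a single error. The sketch below assumes a hypothetical load_settings helper; only the two variable names come from the project.

```python
import os
from typing import Dict, Iterable, Mapping

# The two variables the backend needed, per the container logs.
REQUIRED_VARS = ("AWS_REGION", "DYNAMODB_TABLE_NAME")


def load_settings(
    env: Mapping[str, str] = os.environ,
    required: Iterable[str] = REQUIRED_VARS,
) -> Dict[str, str]:
    """Return the required settings, or raise naming every missing one."""
    required = tuple(required)
    # Treat unset and empty values the same: an unresolved ${VAR} in
    # docker-compose.yml typically arrives as an empty string.
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(
            "Missing required environment variables: " + ", ".join(missing)
        )
    return {name: env[name] for name in required}
```

Calling load_settings() once when the FastAPI app is created would make the container exit immediately with a message that shows up directly in docker compose logs.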

Challenge 6: Automating Builds with GitHub Actions & AWS OIDC

Manually building and deploying gets tedious fast. Setting up a CI/CD pipeline using GitHub Actions to build images and push them to AWS ECR was the next goal. This involved securely authenticating GitHub Actions with AWS using OpenID Connect (OIDC).

Problem: The workflow failed during the AWS authentication step with Error: Could not assume role with OIDC: No OpenIDConnect provider found.... Even after creating the OIDC provider in AWS IAM, further errors occurred when debugging the IAM Role's Trust Policy.

Solution: This required careful configuration in AWS IAM:

- Creating the OIDC Provider: Explicitly adding token.actions.githubusercontent.com as an identity provider in IAM.

- Configuring the Role Trust Policy: This was tricky. The initial policy was wrong (it trusted EC2, not GitHub OIDC). The correct policy needed:

  - Principal: Set to "Federated" referencing the OIDC provider's ARN.

  - Action: Set to "sts:AssumeRoleWithWebIdentity".

  - Condition: Carefully crafting the sub claim condition to match the repo:ORG/REPO:ref... format, ensuring I didn't mistakenly include the https://github.com/ prefix.

Getting the trust policy exactly right allowed the workflow to assume the role and push images to ECR successfully.
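To make the shape of that trust policy concrete, here is a small sketch that builds it as a Python dictionary and prints the JSON. It is an illustration under assumptions, not the policy from the project: the function name, the account ID, the org/repo names, and the branch ref are placeholders, and the aud condition is included because it is commonly paired with the sub condition when the audience is sts.amazonaws.com.

```python
import json


def github_oidc_trust_policy(account_id: str, org: str, repo: str, ref: str) -> dict:
    """Build a role trust policy for GitHub Actions OIDC (illustrative sketch)."""
    # The sub claim uses the bare ORG/REPO form, never a full GitHub URL.
    if "github.com" in org or "/" in org or "/" in repo:
        raise ValueError("pass the bare org and repo names, without a URL prefix")
    provider = "token.actions.githubusercontent.com"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {f"{provider}:aud": "sts.amazonaws.com"},
                    "StringLike": {f"{provider}:sub": f"repo:{org}/{repo}:ref:{ref}"},
                },
            }
        ],
    }


if __name__ == "__main__":
    # All four arguments are placeholders, not values from the project.
    policy = github_oidc_trust_policy("123456789012", "my-org", "my-repo", "refs/heads/main")
    print(json.dumps(policy, indent=2))
```

Generating the document from code is optional; the point is that the Federated principal, the action, and the sub condition all have to line up exactly for the role to be assumable.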

Key Takeaways

Cloud Services Have Nuances: Migrating between databases or setting up authentication requires understanding the specific service’s model (e.g., DynamoDB queries, AWS IAM roles).

Firewalls are Foundational: Security Groups are often the first place to look for connectivity issues to EC2 instances.

Container Orchestration Needs Precision: Docker Compose relies heavily on correct file paths (.env, docker-compose.yml), environment variable syntax, and networking between containers. Logs are essential (docker compose logs).

Package Managers Can Be Quirky: Sometimes, a simple cache clean (dnf clean all) is all you need. Know the difference between V1 and V2 (e.g., docker-compose vs docker compose).

Secure CI/CD Requires Careful Setup: OIDC is powerful for keyless authentication but demands precise configuration of the IAM provider and role trust policies in AWS.

Iterative Debugging is Key: Very rarely does everything work on the first try. Systematically checking logs, configurations, and documentation is crucial.

Conclusion & Next Steps

This project provided a rich learning experience across backend development, cloud database migration, containerization, EC2 instance management, and CI/CD automation within the “Learn to Cloud” framework. Encountering and solving issues with package management, security groups, environment variables, and IAM policies was invaluable. The application now runs on EC2, with deployments automated via GitHub Actions pushing to ECR. See repo here

This provides a solid foundation. The next exciting phase involves using Infrastructure as Code (IaC) with Terraform to provision the AWS resources automatically. This could set the stage for deploying the application onto Amazon Elastic Kubernetes Service (EKS) for enhanced scalability, resilience, and management, further deepening the exploration of DevOps practices on AWS.
