Mobile Agents Powered by LLMs: Revolutionizing On-Device Intelligence

The rise of Large Language Models (LLMs) has unlocked a new era of intelligent mobile applications. Unlike traditional virtual assistants, mobile agents are AI systems, often running at least partly on-device, that leverage LLMs to provide personalized, context-aware, and autonomous experiences. These agents don’t just respond to user queries; they anticipate needs, learn from behavior, and integrate seamlessly into our daily lives. In this post, we’ll explore how LLM-powered mobile agents are transforming the mobile landscape, the technical challenges they face, and why they’re a game-changer for the future of mobile technology.

What Makes Mobile Agents Unique?

Mobile agents are AI-driven systems designed specifically for mobile devices. Unlike cloud-based conversational AI, mobile agents often operate on-device, leveraging the processing power of modern smartphones to deliver fast, secure, and personalized experiences. Here’s what sets them apart:

1. On-Device Processing

Mobile agents powered by LLMs can run locally on your device, reducing network latency and improving privacy, because personal data doesn’t have to leave the phone. This is a significant shift from cloud-dependent AI systems.

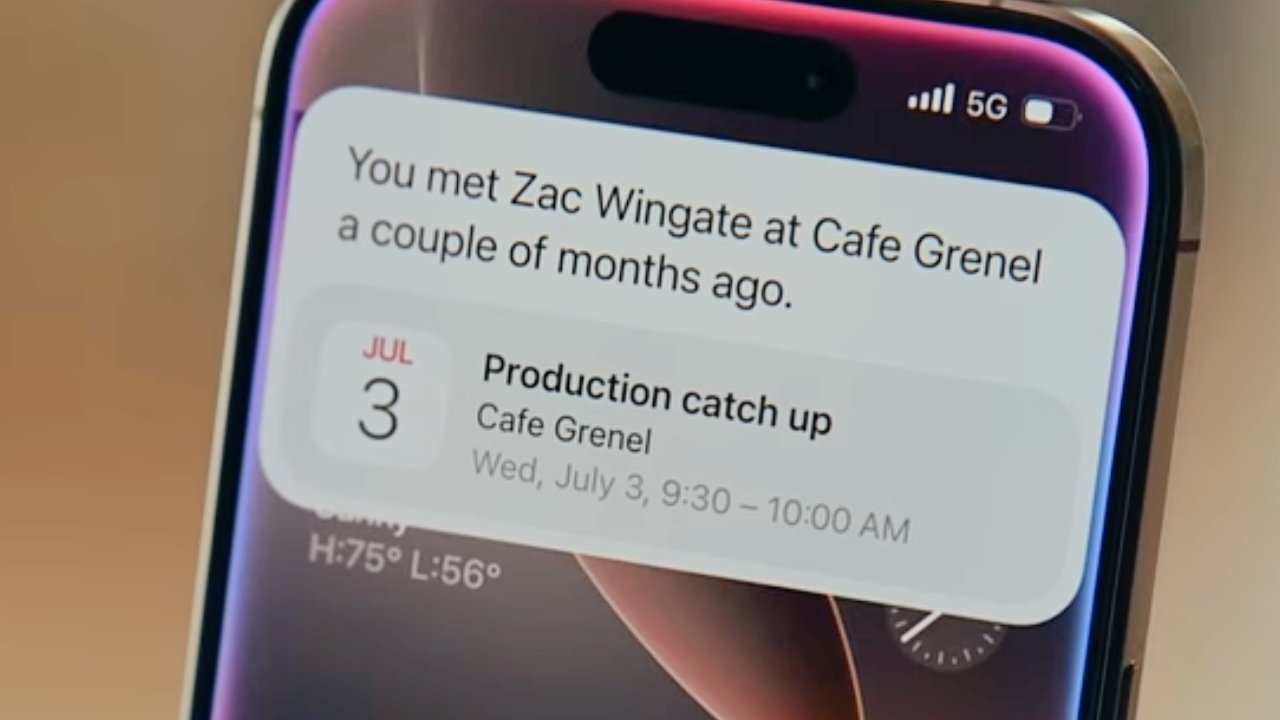
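
In practice, an agent doesn’t have to be purely local. The following is a minimal sketch of a hybrid routing policy, assuming a hypothetical small on-device model with a 2,048-token context limit. The `Request` fields, the threshold, and the four-characters-per-token heuristic are illustrative assumptions, not any platform’s actual API.

```python
from dataclasses import dataclass

# Hypothetical context limit for a small on-device model; real limits vary by model.
LOCAL_CONTEXT_LIMIT_TOKENS = 2048


@dataclass
class Request:
    prompt: str
    contains_personal_data: bool  # e.g. messages, contacts, health data
    needs_fresh_web_data: bool    # e.g. live traffic or news


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about four characters per token for English text.
    return max(1, len(text) // 4)


def route(request: Request, network_available: bool) -> str:
    """Return "local" or "cloud" for a request under a privacy-first policy."""
    # Personal data never leaves the device, and offline requests have no choice.
    if request.contains_personal_data or not network_available:
        return "local"
    # Live information and very long prompts go to a larger cloud model.
    if request.needs_fresh_web_data:
        return "cloud"
    if estimate_tokens(request.prompt) > LOCAL_CONTEXT_LIMIT_TOKENS:
        return "cloud"
    return "local"


print(route(Request("Summarize my last five texts", True, False), network_available=True))  # local
print(route(Request("Is there traffic on my commute right now?", False, True), network_available=True))  # cloud
```

The order of the checks encodes the design choice: privacy and offline availability win over everything else, so personal data stays on the device even when a cloud model might give a better answer.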

2. Contextual Awareness

These agents understand the unique context of mobile usage, such as location, time, and app usage patterns. For example, a mobile agent can suggest turning on "Do Not Disturb" when you enter a meeting venue.
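
The meeting example can be expressed as a simple context rule. This is a minimal sketch, assuming the agent already has calendar events and a place label resolved from location (for instance, from a geofence); `CalendarEvent`, `DeviceContext`, and the five-minute lead time are hypothetical. An LLM might phrase the suggestion, but the trigger itself can be plain code.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical: start suggesting DND this long before a meeting begins.
LEAD_TIME = timedelta(minutes=5)


@dataclass
class CalendarEvent:
    title: str
    start: datetime
    end: datetime
    location: str


@dataclass
class DeviceContext:
    now: datetime
    current_place: str  # place label resolved from location, e.g. via a geofence
    dnd_enabled: bool


def meeting_needing_dnd(ctx: DeviceContext, events: list[CalendarEvent]) -> CalendarEvent | None:
    """Return the meeting that justifies suggesting Do Not Disturb, or None."""
    if ctx.dnd_enabled:
        return None
    for event in events:
        # The meeting is about to start or already in progress...
        in_window = event.start - LEAD_TIME <= ctx.now < event.end
        # ...and the user is physically at its venue.
        at_venue = event.location.casefold() == ctx.current_place.casefold()
        if in_window and at_venue:
            return event
    return None


standup = CalendarEvent("Standup", datetime(2025, 3, 10, 9, 0), datetime(2025, 3, 10, 9, 30), "Room 4B")
ctx = DeviceContext(datetime(2025, 3, 10, 8, 57), "room 4b", dnd_enabled=False)
event = meeting_needing_dnd(ctx, [standup])
if event:
    print(f"Looks like you're at {event.title}. Turn on Do Not Disturb?")
```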

3. Seamless Integration

Mobile agents integrate deeply with the operating system and apps, enabling them to handle tasks like scheduling, navigation, and managing notifications without the user switching between apps.
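
Deep integration usually means the model proposes an action and the agent executes it through a fixed set of capabilities. Below is a minimal sketch of that pattern, assuming the model has been prompted to reply with JSON of the form `{"tool": ..., "arguments": {...}}`; the tool names and handlers are hypothetical placeholders for real OS or app integrations.

```python
from __future__ import annotations

import json
from typing import Callable

# Registry of actions the agent may perform. Names and handlers are hypothetical
# placeholders for real OS or app integrations.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("create_event")
def create_event(title: str, start: str) -> str:
    return f"Scheduled '{title}' at {start}"


@tool("start_navigation")
def start_navigation(destination: str) -> str:
    return f"Navigating to {destination}"


def dispatch(model_output: str) -> str:
    """Parse a JSON tool call produced by the model and run the matching handler."""
    try:
        call = json.loads(model_output)
        handler = TOOLS[call["tool"]]
        return handler(**call.get("arguments", {}))
    except (json.JSONDecodeError, KeyError, TypeError) as err:
        # Unknown tools, malformed JSON, or bad arguments fail safely.
        return f"Could not run tool call: {err!r}"


print(dispatch('{"tool": "start_navigation", "arguments": {"destination": "Home"}}'))
```

Keeping an explicit registry means the model can only trigger actions the developer has exposed, and malformed or unknown calls produce an error message instead of crashing the agent.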

4. Personalization

By learning from user behavior, mobile agents offer tailored recommendations, such as suggesting a playlist for your morning commute or reminding you to order groceries based on past habits.
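
The grocery reminder can be driven by a very simple habit model. This sketch assumes the agent has a list of past order dates and predicts the next one from the median gap between orders; the three-order minimum and one-day lead time are arbitrary, illustrative choices.

```python
from __future__ import annotations

from datetime import date, timedelta
from statistics import median_low


def next_order_due(order_dates: list[date]) -> date | None:
    """Predict the next order date from the typical gap between past orders."""
    if len(order_dates) < 3:
        return None  # hypothetical threshold: too little history to call it a habit
    ordered = sorted(order_dates)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    # median_low always returns one of the observed gaps, so it is a whole number of days.
    return ordered[-1] + timedelta(days=median_low(gaps))


def should_remind(order_dates: list[date], today: date, lead_days: int = 1) -> bool:
    """Remind the user a little before the predicted next order."""
    due = next_order_due(order_dates)
    return due is not None and today >= due - timedelta(days=lead_days)


history = [date(2025, 3, 1), date(2025, 3, 8), date(2025, 3, 15), date(2025, 3, 22)]
print(next_order_due(history))                          # 2025-03-29
print(should_remind(history, today=date(2025, 3, 28)))  # True
```

A median is less sensitive than a mean to an occasional unusual gap, such as a week when the user was traveling.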

How LLMs Enable Smarter Mobile Agents

Large Language Models (LLMs) like OpenAI’s GPT, Google’s Gemini (formerly Bard), and Meta’s Llama are the driving force behind modern mobile agents. Here’s how they enhance mobile intelligence:

1. Natural Language Understanding (NLU)

LLMs enable mobile agents to interpret complex user queries and respond in a conversational manner. For example, you can ask, “What’s the best route to avoid traffic right now?” and, if the agent can pull live traffic data from a maps service, get a detailed answer.

2. Multimodal Capabilities

Multimodal models such as GPT-4o and Gemini can process text, images, and audio. This allows mobile agents to perform tasks like identifying objects in photos, transcribing voice notes, or summarizing articles.
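
On the wire, a multimodal request typically mixes text and image parts in a single message. The sketch below builds such a message assuming an OpenAI-style Chat Completions content layout with the image inlined as a base64 data URL; other providers use different schemas, and no network call is made here.

```python
import base64


def build_image_message(question: str, image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Build one user message pairing a text question with an inline image.

    Assumes an OpenAI-style Chat Completions content layout; other APIs differ.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


# A real app would read the bytes from the camera or photo library.
message = build_image_message("What kind of plant is this?", b"\xff\xd8\xff")
print(message["content"][1]["image_url"]["url"])  # data:image/jpeg;base64,/9j/
```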

3. Real-Time Adaptation

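Mobile agents can adapt to user preferences in real time. The underlying model is usually not retrained on the phone; instead, the agent remembers patterns in your requests and feeds them back to the model as context. For instance, if you frequently ask for vegan restaurant recommendations, the agent will prioritize those in future suggestions.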
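
One common way to get this behavior without retraining the model is to keep a small preference memory outside the model and inject it into the prompt. Here is a minimal sketch; the `PreferenceMemory` class, the tag strings, and the three-occurrence threshold are hypothetical choices for illustration.

```python
from __future__ import annotations

from collections import Counter


class PreferenceMemory:
    """Tracks recurring user preferences and renders them as prompt context.

    The model's weights never change; adaptation comes from feeding these notes back in.
    """

    def __init__(self, min_count: int = 3):
        self.min_count = min_count  # hypothetical: how often something must recur to count
        self.counts = Counter()

    def observe(self, tag: str) -> None:
        """Record one signal, e.g. a cuisine the user searched for."""
        self.counts[tag] += 1

    def preferences(self) -> list[str]:
        return [tag for tag, n in self.counts.most_common() if n >= self.min_count]

    def system_prompt(self, base: str) -> str:
        prefs = self.preferences()
        if not prefs:
            return base
        return f"{base}\nKnown user preferences: {', '.join(prefs)}."


memory = PreferenceMemory()
for tag in ["vegan restaurants", "vegan restaurants", "sushi", "vegan restaurants"]:
    memory.observe(tag)
print(memory.system_prompt("You are a helpful mobile assistant."))
```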

4. Edge Computing

With advances in edge computing and model compression, smaller LLMs (typically a few billion parameters or fewer) can now run on recent smartphones, enabling faster and more private interactions that don’t depend on cloud servers.
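
Much of what makes on-device inference feasible is quantization: storing weights in fewer bits. The sketch below shows the basic idea with symmetric per-tensor int8 quantization on a plain Python list. Production runtimes typically use per-channel or per-group scales and often 4-bit formats, so treat this as an illustration of the principle rather than how any particular framework does it.

```python
from __future__ import annotations


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest magnitude to 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [scale * v for v in q]


weights = [0.42, -1.3, 0.07, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each value in q fits in one byte, versus two bytes for fp16 or four for fp32.
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most scale / 2
```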

The Impact of Mobile Agents on the Mobile Ecosystem

Mobile agents are reshaping the mobile ecosystem in profound ways:

1. Enhanced User Experiences

From personalized recommendations to proactive assistance, mobile agents are making interactions with devices more intuitive and enjoyable.

2. Improved Productivity

Mobile agents can automate routine tasks, such as scheduling meetings, managing emails, or organizing to-do lists, freeing up time for users.

3. Accessibility

By enabling voice-based and natural language interactions, mobile agents are making technology more accessible to users with disabilities.

4. New Opportunities for Developers

Developers can leverage LLMs to build innovative apps that integrate intelligent agents, opening up new possibilities in areas like healthcare, education, and entertainment.

Real-World Applications of Mobile Agents

Here are some cutting-edge examples of LLM-powered mobile agents in action:

1. OpenAI’s ChatGPT Mobile App

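OpenAI’s ChatGPT app brings OpenAI’s GPT models (GPT-4 and its successors) to mobile devices, enabling users to have intelligent conversations, automate tasks, and even generate creative content. Unlike the on-device agents described above, it processes requests on OpenAI’s servers.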

Learn more about ChatGPT Mobile.

2. Google’s Gemini Integration

Google is integrating its Gemini models into Android devices, where Gemini is taking over from Google Assistant as a more conversational, context-aware assistant. A smaller variant, Gemini Nano, runs on-device on supported phones.

Explore Google Gemini.

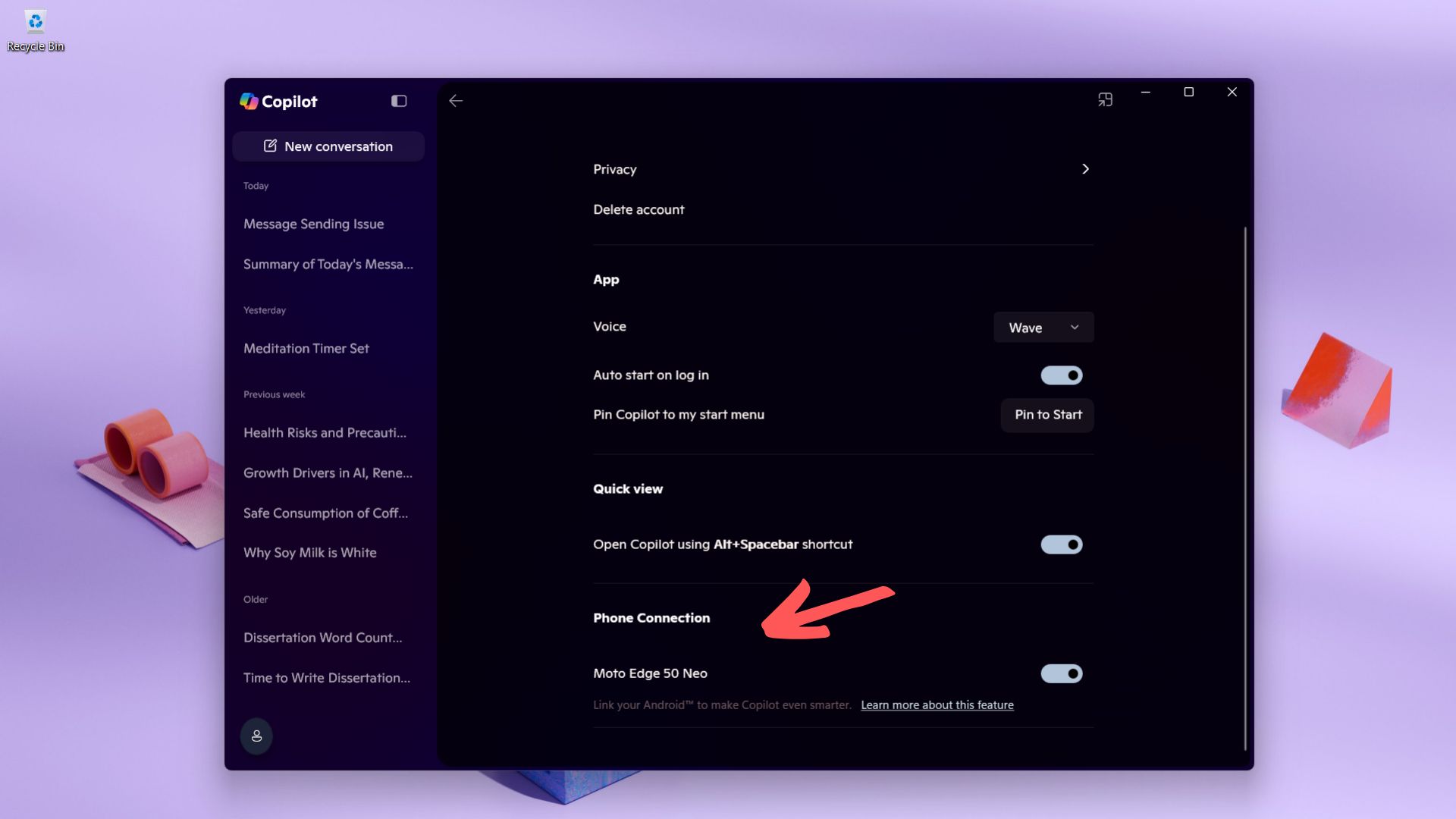

3. Microsoft’s Copilot on Mobile

Microsoft’s Copilot, powered by GPT, is being integrated into mobile apps like Outlook and Teams to provide intelligent assistance for tasks like email drafting and meeting scheduling.

Discover Microsoft Copilot.

4. Hugging Face’s On-Device LLMs

Hugging Face offers tools for deploying LLMs on mobile devices, enabling developers to build privacy-focused mobile agents.

Check out Hugging Face.

Challenges and Opportunities

While mobile agents powered by LLMs hold immense potential, they also come with challenges:

1. Privacy Concerns

On-device processing helps, but ensuring user data is handled securely remains a critical challenge.
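
When a request does have to go to a cloud model, one mitigation is to strip obvious identifiers first. This is a deliberately naive sketch: the two regex patterns are illustrative assumptions, will miss many kinds of personal data, and can also flag harmless strings such as dates.

```python
import re

# Deliberately naive patterns, for illustration only.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace matches of each pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Email jane.doe@example.com or call +1 (555) 123-4567 today"))
# Email [EMAIL] or call [PHONE] today
```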

2. Resource Constraints

Running LLMs on mobile devices requires optimizing for limited memory, processing power, and battery life.
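
Memory is often the tightest of these constraints. A back-of-the-envelope estimate for the weights alone is parameters × bits per weight ÷ 8 bytes. The sketch below applies it to a hypothetical 3-billion-parameter model and ignores the KV cache, activations, and runtime overhead, all of which add to the total.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for model weights only, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 3-billion-parameter on-device model.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(3e9, bits):.1f} GB")
# 16-bit weights: 6.0 GB
# 8-bit weights: 3.0 GB
# 4-bit weights: 1.5 GB
```

This is why quantizing to 8 or 4 bits matters so much on phones, whose RAM is shared with the operating system and every other running app.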

3. Ethical Considerations

As mobile agents become more autonomous, ensuring they act in the user’s best interest is essential.
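
One way to keep an increasingly autonomous agent acting in the user’s interest is a human-in-the-loop gate for consequential actions. A minimal sketch, assuming a hypothetical set of sensitive action names and caller-supplied `confirm` and `run` callbacks:

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical actions that must never run without explicit user approval.
SENSITIVE_ACTIONS = {"send_payment", "send_message", "delete_data"}


@dataclass
class ProposedAction:
    name: str
    summary: str  # human-readable description shown to the user


def execute(
    action: ProposedAction,
    confirm: Callable[[str], bool],
    run: Callable[[ProposedAction], str],
) -> str:
    """Run an agent-proposed action, asking the user first if it is sensitive."""
    if action.name in SENSITIVE_ACTIONS and not confirm(action.summary):
        return f"Cancelled: {action.summary}"
    return run(action)


# In an app, confirm() would show a dialog; here the user declines.
payment = ProposedAction("send_payment", "Pay $40 to Alex")
print(execute(payment, confirm=lambda summary: False, run=lambda a: f"Done: {a.summary}"))
# Cancelled: Pay $40 to Alex
```

The gate sits outside the model, so a misleading prompt or a model error cannot skip the confirmation step.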

Despite these challenges, the opportunities are vast. Mobile agents can revolutionize industries, from healthcare to education, by providing intelligent, personalized solutions.

Conclusion: The Future of Mobile Intelligence

Mobile agents powered by LLMs are not just a technological advancement—they’re a paradigm shift in how we interact with our devices. By combining on-device processing, contextual awareness, and natural language understanding, these agents are making mobile experiences smarter, faster, and more personalized.

What do you think about the rise of mobile agents? Are they the future of mobile intelligence, or do they raise concerns about privacy and autonomy? Share your thoughts in the comments below!

If you enjoyed this post, feel free to share it and follow me for more insights into emerging technologies and their impact on our lives.
