Understanding Model Calibration: A Gentle Introduction & Visual Exploration

The post Understanding Model Calibration: A Gentle Introduction & Visual Exploration appeared first on Towards Data Science.

How Reliable Are Your Predictions?

About

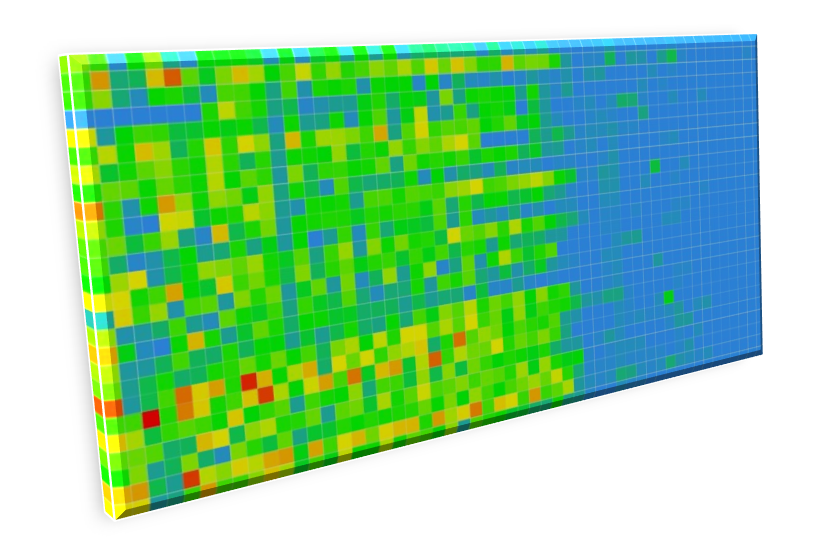

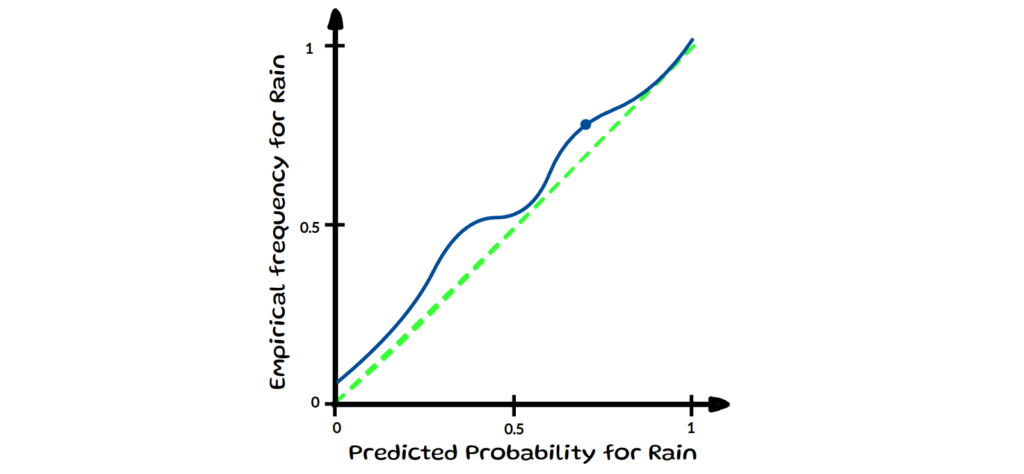

To be considered reliable, a model must be calibrated so that its confidence in each decision closely reflects its true outcome. In this blog post we’ll take a look at the most commonly used definition of calibration and then dive into a frequently used evaluation measure for model calibration. We’ll then cover some of the drawbacks of this measure and how they surfaced the need for additional notions of calibration, which require their own evaluation measures. This post is not intended to be an in-depth dissection of all works on calibration, nor does it focus on how to calibrate models. Instead, it is meant to provide a gentle introduction to the different notions and their evaluation measures, and to re-highlight some issues with a measure that is still widely used to evaluate calibration.

Table of Contents

- What is Calibration?

- Evaluating Calibration — Expected Calibration Error (ECE)

- Most frequently mentioned Drawbacks of ECE

- Other definitions of Calibration

- Summary

What is Calibration?

A model is calibrated when its estimated probabilities match real-world outcomes. For example, if a weather forecasting model predicts a 70% chance of rain on several days, then roughly 70% of those days should actually be rainy for the model to be considered well calibrated. Calibrated predictions are more reliable and trustworthy, which is why calibration matters for many applications across various domains.
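To make the weather example concrete, here is a minimal sketch in Python that groups days by their forecast probability and compares each forecast with the fraction of days on which it actually rained. The function name `observed_frequency_by_forecast` and the toy data are assumptions made up for illustration, not something taken from a particular library or from real forecasts.

```python
from collections import defaultdict


def observed_frequency_by_forecast(forecasts, outcomes):
    """Group days by forecast probability and return the observed rain frequency per group."""
    counts = defaultdict(lambda: [0, 0])  # forecast -> [rainy days, total days]
    for p, rained in zip(forecasts, outcomes):
        counts[p][0] += int(rained)
        counts[p][1] += 1
    return {p: rainy / total for p, (rainy, total) in counts.items()}


# Hypothetical data: ten days with a 70% forecast (7 rainy)
# and ten days with a 90% forecast (only 6 rainy).
forecasts = [0.7] * 10 + [0.9] * 10
outcomes = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1] + [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]

freq = observed_frequency_by_forecast(forecasts, outcomes)
for p, f in sorted(freq.items()):
    print(f"forecast {p:.0%}: rained on {f:.0%} of days")
```

In this toy data the 70% forecasts line up with the observed frequency, while the 90% forecasts are overconfident because it rained on only 60% of those days. With so few days per group the estimate is of course very noisy, so treat this as an illustration of the idea rather than a proper evaluation.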

Now, what calibration means more precisely depends on the specific definition being considered. We will have a look at the most common notion in machine learning (ML) formalised by Guo and termed confidence calibration by Kull. But first, let’s define a bit of formal notation for this blog.

In this blog post we consider a classification task with K possible classes, with labels Y ∈ {1, …, K} and a classification model p̂ :
