Vision Transformers (ViT) Explained: Are They Better Than CNNs?

Understanding how a groundbreaking architecture for computer vision tasks works

The post Vision Transformers (ViT) Explained: Are They Better Than CNNs? appeared first on Towards Data Science.

1. Introduction

Ever since the introduction of the self-attention mechanism, Transformers have been the top choice for Natural Language Processing (NLP) tasks. Self-attention-based models are highly parallelizable and require substantially fewer parameters, making them much more computationally efficient, less prone to overfitting, and easier to fine-tune for domain-specific tasks [1]. Furthermore, the key advantage of Transformers over earlier models (such as RNNs, LSTMs, GRUs, and other neural architectures that dominated NLP before Transformers were introduced) is their ability to process long input sequences without losing context. They do this through the self-attention mechanism, which weighs different parts of the input sequence, and how those parts interact with each other, at each step [2]. Because of these qualities, Transformers have made it possible to train language models of unprecedented size, with more than 100B parameters, paving the way for current state-of-the-art models like the Generative Pre-trained Transformer (GPT) and the Bidirectional Encoder Representations from Transformers (BERT) [1].

However, in the field of computer vision, convolutional neural networks (CNNs) remain dominant in most, if not all, tasks. While a growing body of research attempts to apply self-attention-based architectures to computer vision, very few approaches have reliably outperformed CNNs with promising scalability [3]. The main challenge in applying the Transformer architecture to image-related tasks is that, by design, the self-attention mechanism, the core component of Transformers, has quadratic time complexity with respect to sequence length, i.e., O(n²), as shown in Table I and discussed further in Part 2.1. This is usually not a problem for NLP tasks, which use a relatively small number of tokens per input sequence (e.g., a 1,000-word paragraph has only 1,000 input tokens, or somewhat more if sub-word units are used as tokens instead of full words). In computer vision, however, the input sequence (the image) can contain orders of magnitude more tokens than an NLP input sequence. For example, if every pixel value is treated as a token, a relatively small 300 x 300 x 3 image has 270,000 tokens and requires a self-attention map with up to 72.9 billion entries (270,000²) when self-attention is applied naively.
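The arithmetic behind these numbers can be checked with a short sketch. The function name `naive_attention_entries` is made up for this illustration, and it assumes the naive scheme described above, in which every pixel value is its own token:

```python
def naive_attention_entries(height, width, channels):
    """Return (num_tokens, attention_map_entries) when every pixel value is a token."""
    num_tokens = height * width * channels
    # Self-attention compares every token with every other token: n * n entries.
    return num_tokens, num_tokens ** 2


# The 300 x 300 x 3 image from the text.
image_tokens, image_entries = naive_attention_entries(300, 300, 3)

# A 1,000-word paragraph with one token per word, for comparison.
text_tokens = 1_000
text_entries = text_tokens ** 2

print(image_tokens, image_entries)    # 270000 72900000000
print(image_entries // text_entries)  # 72900
```

The image has 270 times as many tokens as the paragraph, but its attention map is 72,900 times larger, which is the quadratic growth in action.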

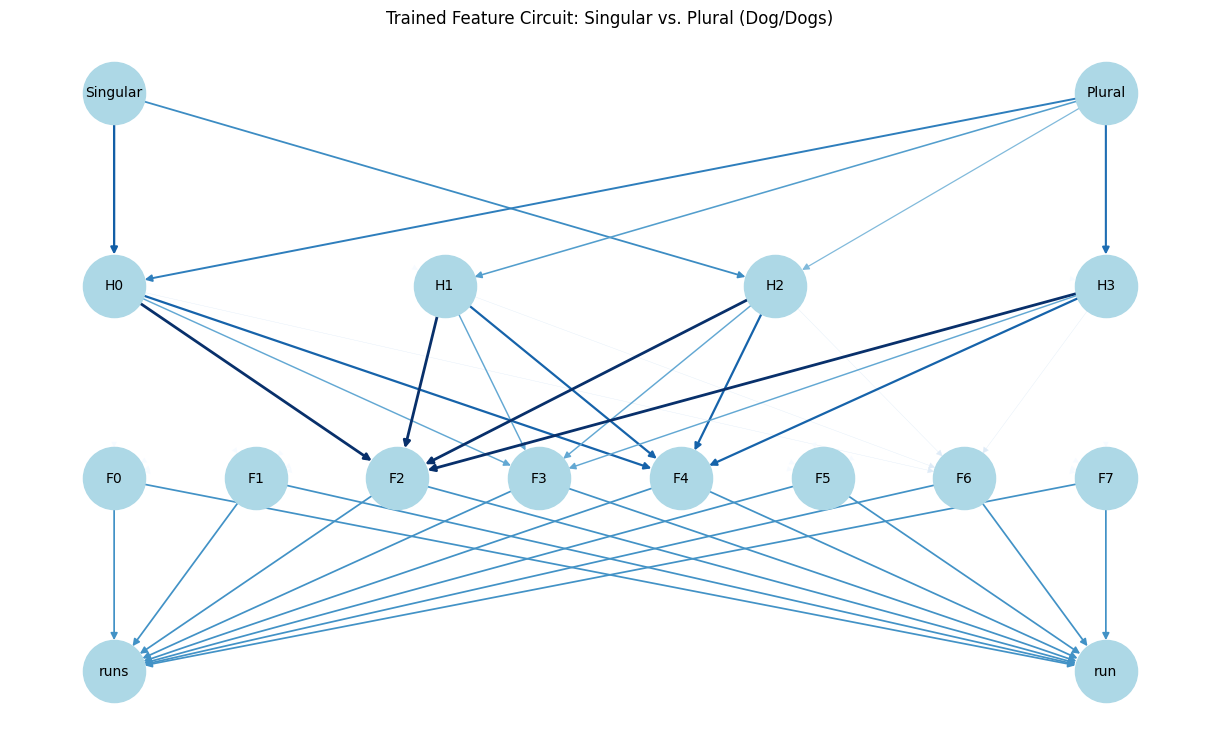

For this reason, most research that attempts to use self-attention-based architectures for computer vision has done so either by applying self-attention locally, by using Transformer blocks in conjunction with CNN layers, or by replacing only specific components of the CNN architecture while maintaining the overall structure of the network; never by using a pure Transformer alone [3]. The goal of Dosovitskiy et al. in their work, “An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale”, is to show that it is indeed possible to perform image classification by applying self-attention globally through the basic Transformer encoder architecture, while requiring significantly fewer computational resources to train and outperforming state-of-the-art convolutional neural networks like ResNet.

2. The Transformer

Transformers, introduced in the paper titled “Attention is All You Need” by Vaswani et al. in 2017, are a class of neural network architectures that have revolutionized various natural language processing and machine learning tasks. A high-level view of the architecture is shown in Fig. 1.

Fig. 1. The Transformer architecture, showing the encoder (left block) and decoder components (right block) [2]

Since their introduction, Transformers have served as the foundation for many state-of-the-art models in NLP, including BERT, GPT, and more. Fundamentally, they are designed to process sequential data, such as text, without the need for recurrent or convolutional layers [2]. They achieve this by relying heavily on a mechanism called self-attention.

The self-attention mechanism is a key innovation introduced in the paper that allows the model to capture relationships between different elements in a given sequence by weighing the importance of each element in the sequence with respect to other elements [2]. Say for instance, you want to translate the following sentence:

“The animal didn’t cross the street because it was too tired.”

What does the word “it” in this particular sentence refer to? Is it referring to the street or the animal? For us humans, this may be a trivial question to answer. But for an algorithm, this can be considered a complex task to perform. However, through the self-attention mechanism, the transformer model is able to estimate the relative weight of each word with respect to all the other words in the sentence, allowing the model to associate the word “it” with “animal” in the context of our given sentence [4].

2.1. The Self-Attention Mechanism

A transformer transforms a given input sequence by passing each element through an encoder (or a stack of encoders) and a decoder (or a stack of decoders) block, in parallel [2]. Each encoder block contains a self-attention block and a feed forward neural network. Here, we only focus on the transformer encoder block as this was the component used by Dosovitskiy et al. in their Vision Transformer image classification model.

As is the case with general NLP applications, the first step in the encoding process is to turn each input word into a vector using an embedding layer, which maps the word to a point in the vector space while retaining its contextual information. We then stack these individual word embedding vectors into a matrix X, where row i holds the embedding of element i in the input sequence. Next, we create three sets of vectors for each element in the input sequence: Query (Q), Key (K), and Value (V). These are obtained by multiplying X by the corresponding trainable weight matrices $W^Q$, $W^K$, and $W^V$ [2].
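Written out, the three projections take the following form. The shape symbols are assumptions borrowed from the notation of Vaswani et al. [2] rather than from this article: n is the number of elements in the sequence, $d_{model}$ is the embedding size, and $d_k$, $d_v$ are the sizes of the key/query and value vectors.

$$
Q = XW^Q, \qquad K = XW^K, \qquad V = XW^V
$$

$$
X \in \mathbb{R}^{n \times d_{model}}, \qquad W^Q, W^K \in \mathbb{R}^{d_{model} \times d_k}, \qquad W^V \in \mathbb{R}^{d_{model} \times d_v}
$$

Each row of Q, K, and V therefore corresponds to one element of the input sequence, just like each row of X.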

Afterwards, we perform a matrix multiplication between Q and the transpose of K, and divide the result by the square root of the dimensionality of K, written $d_k$:

$$
\frac{QK^T}{\sqrt{d_k}}
$$

…and then apply a softmax function to normalize the output and generate weight values between 0 and 1 [2]:

$$
\mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right) \tag{4}
$$

We will call this intermediary output the attention factor. This factor, shown in Eq. 4, represents the weight that each element in the sequence contributes to the calculation of the attention value at the current position (the word being processed). The idea behind the softmax operation is to amplify the words that the model thinks are relevant to the current position, and attenuate the ones that are irrelevant. For example, in Fig. 3, the input sentence “He later went to report Malaysia for one year” is passed into a BERT encoder unit to generate a heatmap that illustrates the contextual relationship between each pair of words. We can see that words that are deemed contextually associated produce higher weight values in their respective cells, visualized in dark pink, while words that are contextually unrelated have low weight values, represented in pale pink.

Finally, we multiply the attention factor matrix by the value matrix V to compute the aggregated self-attention value matrix Z of this layer [2], where each row i in Z represents the attention vector for word i in our input sequence:

$$
Z = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V \tag{5}
$$

This aggregated value essentially bakes the “context” provided by other words in the sentence into the current word being processed. The attention equation shown in Eq. 5 is sometimes also referred to as the Scaled Dot-Product Attention.
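The full sequence of steps in this section can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in either paper: the function names, the toy sizes (5 tokens, embedding size 8, key size 4), and the random weights standing in for trained ones are all made up for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention for a single sequence.

    X: (n, d_model) embeddings; W_q, W_k: (d_model, d_k); W_v: (d_model, d_v).
    Returns Z with shape (n, d_v) and the (n, n) attention factor matrix.
    """
    Q = X @ W_q
    K = X @ W_k
    V = X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # (n, n) scaled similarity scores
    weights = softmax(scores, axis=-1)   # attention factor: each row sums to 1
    Z = weights @ V                      # context-aware vector for each token
    return Z, weights


# Toy sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
n, d_model, d_k = 5, 8, 4
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

Z, weights = self_attention(X, W_q, W_k, W_v)
print(Z.shape, weights.shape)  # (5, 4) (5, 5)
```

Note that `weights` is an n × n matrix, which is where the quadratic cost discussed in the introduction comes from.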

2.2. The Multi-Headed Self-Attention

In the paper by Vaswani et al., the self-attention block is further augmented with a mechanism known as “multi-headed” self-attention, shown in Fig. 4. The idea is that, instead of relying on a single attention mechanism, the model employs multiple parallel attention “heads” (Vaswani et al. used 8 parallel attention layers), each of which learns different relationships and provides a unique perspective on the input sequence [2]. This improves the performance of the attention layer in two important ways:

First, it expands the ability of the model to focus on different positions within the sequence. Depending on variations in the initialization and training process, the calculated attention value for a given word (Eq. 5) can be dominated by certain unrelated words or phrases, or even by the word itself [4]. By computing multiple attention heads, the transformer model has multiple opportunities to capture the correct contextual relationships, thus becoming more robust to variations and ambiguities in the input.

Second, since the weight matrices that produce Q, K, and V are randomly initialized independently for each attention head, the training process yields several Z matrices (Eq. 5), which gives the transformer multiple representation subspaces [4]. For example, one head might focus on syntactic relationships while another might attend to semantic meanings. Through this, the model is able to capture more diverse relationships within the data.
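A rough sketch of how several heads can be combined is shown below. It assumes the scheme described by Vaswani et al. [2], in which the per-head outputs are concatenated and passed through one more projection matrix (called `W_o` here); that final projection is not discussed in the text above. The function names, the toy sizes, and the random weights are illustrative only.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def multi_head_self_attention(X, heads, W_o):
    """Run independent attention heads and merge their outputs.

    X: (n, d_model); heads: list of (W_q, W_k, W_v) tuples, one per head;
    W_o: (num_heads * d_v, d_model) output projection (assumed, see lead-in).
    """
    outputs = []
    for W_q, W_k, W_v in heads:
        Q, K, V = X @ W_q, X @ W_k, X @ W_v
        weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]), axis=-1)
        outputs.append(weights @ V)            # one Z matrix per head
    concat = np.concatenate(outputs, axis=-1)  # (n, num_heads * d_v)
    return concat @ W_o                        # back to (n, d_model)


# 8 heads as in Vaswani et al.; every other size is a toy value.
rng = np.random.default_rng(0)
n, d_model, num_heads = 5, 16, 8
d_head = d_model // num_heads
X = rng.normal(size=(n, d_model))
heads = [
    tuple(rng.normal(size=(d_model, d_head)) for _ in range(3))
    for _ in range(num_heads)
]
W_o = rng.normal(size=(num_heads * d_head, d_model))

out = multi_head_self_attention(X, heads, W_o)
print(out.shape)  # (5, 16)
```

Because each head has its own independently initialized weight matrices, each one produces a different Z matrix for the same input, which is the "multiple representation subspaces" idea from the second point above.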

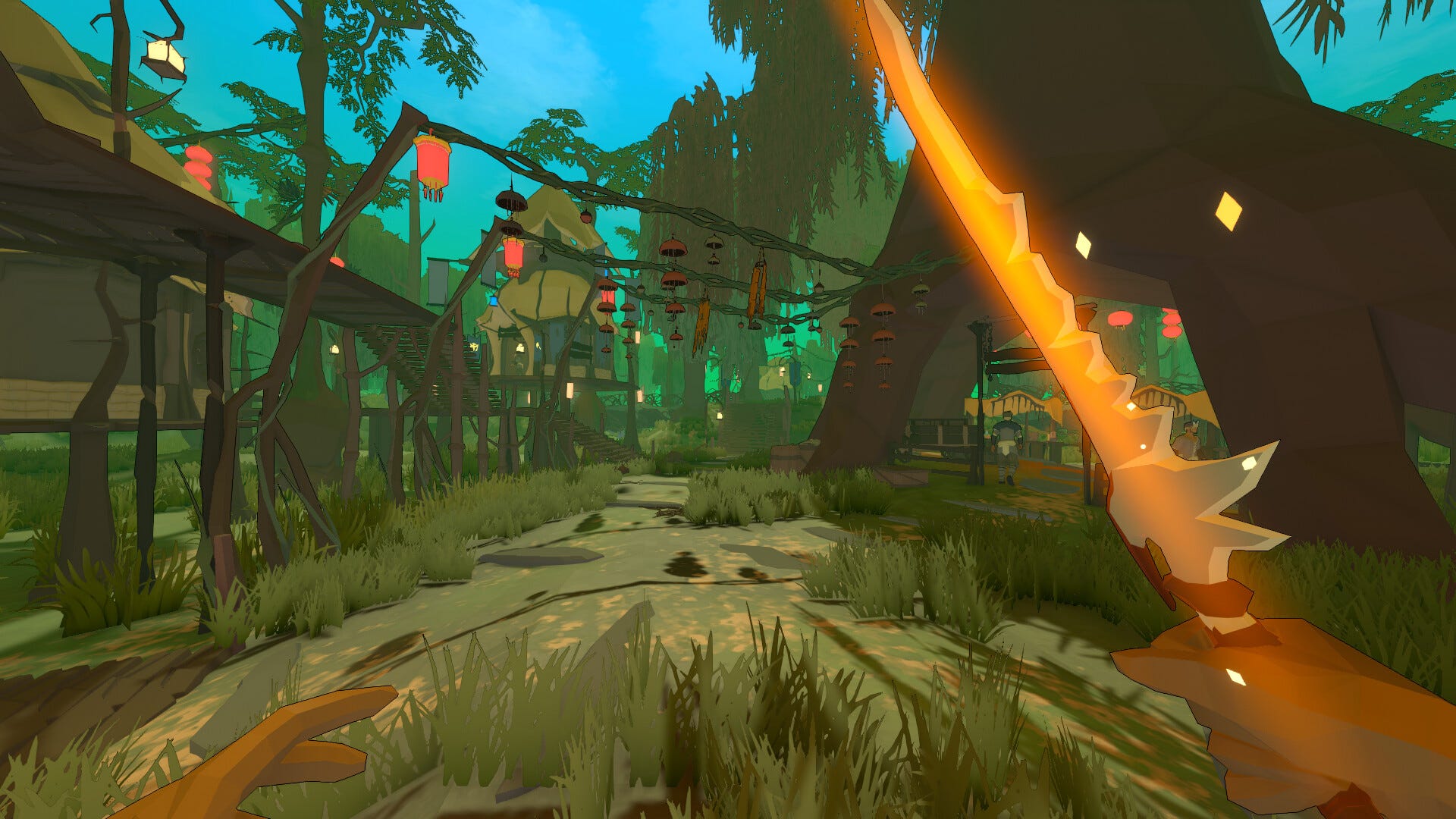

3. The Vision Transformer

The fundamental innovation behind the Vision Transformer (ViT) revolves around the idea that images can be processed as sequences of tokens rather than grids of pixels. In traditional CNNs, input images are analyzed as overlapping tiles via a sliding convolutional filter, and the results are then processed hierarchically through a series of convolutional and pooling layers. In contrast, ViT splits the image into a collection of non-overlapping patches, which serve as the input sequence to a standard Transformer encoder unit.
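The patch-splitting step can be sketched with a NumPy reshape. This is an illustration under assumptions rather than the authors' code: the helper name `image_to_patches` is made up, the 16 x 16 patch size is taken from the title of the ViT paper, and the 224 x 224 x 3 input size is simply a convenient example.

```python
import numpy as np


def image_to_patches(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping square patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C),
    with patches ordered row by row from the top-left corner.
    """
    H, W, C = image.shape
    if H % patch_size or W % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    grid_h, grid_w = H // patch_size, W // patch_size
    # Separate each spatial axis into (patch index, offset inside the patch).
    patches = image.reshape(grid_h, patch_size, grid_w, patch_size, C)
    # Bring the two patch indices to the front: (grid_h, grid_w, P, P, C).
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(grid_h * grid_w, patch_size * patch_size * C)


# Example sizes are assumptions, not values stated in the text above.
image = np.arange(224 * 224 * 3, dtype=np.float32).reshape(224, 224, 3)
tokens = image_to_patches(image, patch_size=16)
print(tokens.shape)  # (196, 768)
```

With these example sizes the image becomes a sequence of 196 tokens, so the attention map has 196 × 196 = 38,416 entries instead of the billions required when every pixel value is its own token.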

derived from the Fig. 1 (right)[3].

By defining the input tokens to the transformer as non-overlapping image patches rather than individual pixels, we are therefore able to reduce the dimension of the attention map from ⟮
