Securing Generative AI – Mitigating Data Leakage Risks

Generative artificial intelligence (GenAI) has emerged as a transformative force across industries, enabling content creation, data analysis, and decision-making breakthroughs.

However, its rapid adoption has exposed critical vulnerabilities, with data leakage emerging as the most pressing security challenge.

Recent incidents, including the alleged OmniGPT breach impacting 34 million user interactions and Check Point’s finding that 1 in 13 GenAI prompts contain sensitive data, underscore the urgent need for robust mitigation strategies.

This article examines the evolving threat landscape and analyzes technical safeguards, organizational policies, and regulatory considerations shaping the future of secure GenAI deployment.

The Expanding Attack Surface of Generative AI Systems

Modern GenAI platforms face multifaceted data leakage risks from their architectural complexity and dependence on vast training datasets.

Large language models (LLMs) like ChatGPT can memorize portions of their training data and reproduce verbatim excerpts containing personally identifiable information (PII) or intellectual property.
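A simple way to picture a memorization check is to look for long word sequences that a model output shares with records known to be sensitive. The sketch below is illustrative only: the function name `shared_ngrams`, the 5-word window, and the sample record are assumptions, not part of any tool named in this article.

```python
import re


def shared_ngrams(output: str, sensitive_records: list[str], n: int = 5) -> set[tuple[str, ...]]:
    """Return word n-grams found in both the model output and any sensitive record."""

    def ngrams(text: str) -> set[tuple[str, ...]]:
        # Lowercase and keep only word characters so punctuation does not hide a match.
        words = re.findall(r"\w+", text.lower())
        return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

    output_ngrams = ngrams(output)
    leaked: set[tuple[str, ...]] = set()
    for record in sensitive_records:
        leaked |= output_ngrams & ngrams(record)
    return leaked


if __name__ == "__main__":
    records = ["Patient Jane Doe was admitted on 3 March with acute asthma"]
    text = "The note says patient Jane Doe was admitted on 3 March."
    for gram in sorted(shared_ngrams(text, records)):
        print(" ".join(gram))
```

Exact n-gram overlap only catches verbatim reproduction; paraphrased leakage would need fuzzier matching.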

A 2024 Netskope study revealed that 46% of GenAI data policy violations involved proprietary source code shared with public models. LayerX research found that 6% of employees regularly paste sensitive data into GenAI tools.

Integrating GenAI into enterprise workflows compounds these risks through prompt injection attacks, where malicious actors manipulate models to reveal training data through carefully crafted inputs.

Cross-border data flows introduce additional compliance challenges. Gartner predicts that 40% of AI-related breaches by 2027 will stem from improper transnational GenAI usage.

Technical Safeguards – From Differential Privacy to Secure Computation

Leading organizations are adopting mathematical privacy frameworks to harden GenAI systems. Differential privacy (DP) has emerged as a gold standard: it injects calibrated noise into the training process, typically into gradient updates, to limit how much a model can memorize about any individual record.

Microsoft’s implementation in text generation models demonstrates DP can maintain 98% utility while reducing PII leakage risks by 83%.
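One widely used way to apply DP during training is to clip each example's gradient and add Gaussian noise before averaging, in the style of DP-SGD. The following is a minimal sketch of that single step using only the standard library. It is not Microsoft's implementation, the names `clip` and `private_mean_gradient` and the default parameters are assumptions, and it performs no privacy accounting (it does not compute an epsilon).

```python
import math
import random


def clip(grad: list[float], max_norm: float) -> list[float]:
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm=1.0, noise_multiplier=1.1, rng=None):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    rng = rng or random.Random()
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # Noise is scaled to the clipping bound, which caps any one example's influence.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [x / len(clipped) for x in noisy]


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.0, 0.5], [-1.0, 0.2]]
    print(private_mean_gradient(grads, rng=random.Random(0)))
```

Clipping bounds the contribution of any single record, and the noise masks what remains; a larger `noise_multiplier` gives stronger privacy at the cost of utility.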

Federated learning architectures provide complementary protection by decentralizing model training. As implemented in healthcare and financial sectors, this approach enables collaborative learning across institutions without sharing raw patient or transaction data.
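The core idea can be sketched with federated averaging: each institution takes a training step on its own data, and only the resulting model weights are sent for aggregation. The example below is a toy, assuming a one-dimensional linear model and two hypothetical clients; the function names are illustrative and do not correspond to any particular framework.

```python
def local_update(weights, data, lr=0.05):
    """One gradient step of y = w*x + b on a client's private data."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by how many examples each client holds."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return tuple(
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    )


def train(clients, rounds=500, lr=0.05):
    """Run federated rounds; raw client data never leaves local_update."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data, lr) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


if __name__ == "__main__":
    hospital_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # hypothetical data from y = 2x + 1
    hospital_b = [(3.0, 7.0), (4.0, 9.0)]
    print(train([hospital_a, hospital_b]))
```

Model updates can still leak information about local data, which is why federated learning is often combined with DP or secure aggregation rather than used alone.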

NTT Data’s trials show federated systems reduce data exposure surfaces by 72% compared to centralized alternatives.

For high-stakes applications, secure multi-party computation (SMPC) offers stronger cryptographic protection. This technique, exemplified by a decentralized GenAI framework described in an arXiv preprint, splits models across nodes so that no single party can access complete data or algorithms.

Early adopters report 5-10% accuracy improvements over traditional models while eliminating centralized breach risks.
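A basic building block behind many SMPC protocols is additive secret sharing, in which a value is split into random-looking shares that only reveal it when all are combined. The sketch below shows several parties computing a joint sum without any of them seeing another's input. It is an illustration of the primitive, not the framework referenced above, and the modulus and function names are assumptions.

```python
import secrets

PRIME = 2**61 - 1  # assumed field modulus; any sufficiently large prime works for this sketch


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n_parties shares that sum to value modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine shares; any incomplete subset looks uniformly random."""
    return sum(shares) % PRIME


def secure_sum(private_values: list[int]) -> int:
    """Each party shares its input; parties add the shares they hold locally."""
    n = len(private_values)
    distributed = [share(v, n) for v in private_values]  # row i: shares sent out by party i
    partial_sums = [sum(row[j] for row in distributed) % PRIME for j in range(n)]
    return reconstruct(partial_sums)


if __name__ == "__main__":
    print(secure_sum([120, 45, 300]))
```

Real deployments add authenticated channels and protections against dishonest parties, which this sketch omits.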

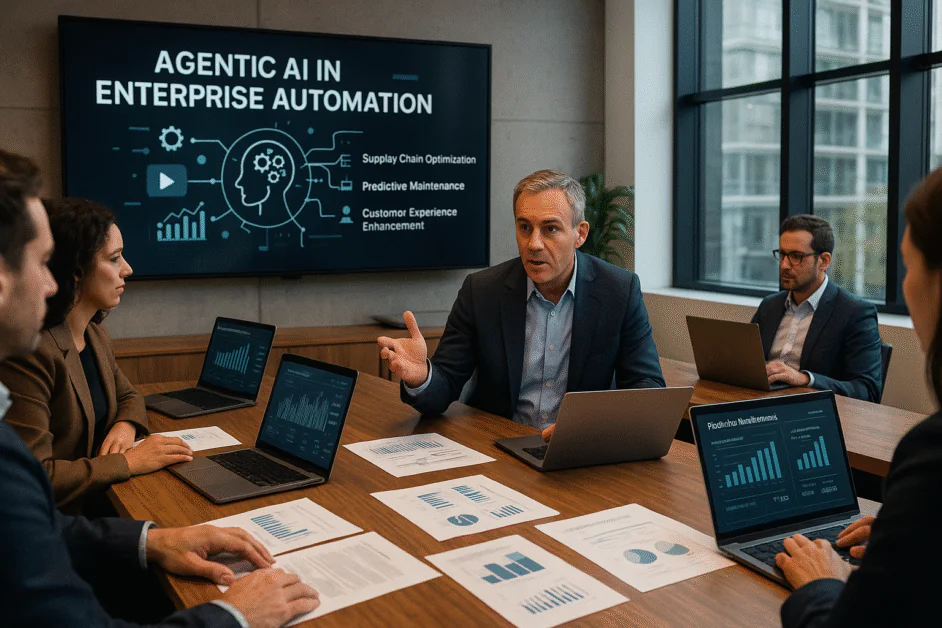

Organizational Strategies – Balancing Innovation and Risk Management

Progressive enterprises are moving beyond blanket GenAI bans to implement nuanced governance frameworks. Samsung’s post-leak response illustrates this shift – rather than prohibiting ChatGPT, they deployed real-time monitoring tools that redact 92% of sensitive inputs before processing.
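Redaction of this kind can be pictured as a filter that rewrites a prompt before it leaves the organization. The following is a minimal pattern-based sketch, not Samsung's tooling: the three patterns, including the `sk-` key format, are hypothetical examples, and production systems typically combine such rules with trained entity recognizers.

```python
import re

# Hypothetical patterns for illustration; real deployments need far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),
}


def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace matches with a [LABEL] placeholder and count redactions per label."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


if __name__ == "__main__":
    cleaned, found = redact("Email jane.doe@example.com about SSN 123-45-6789")
    print(cleaned)
    print(found)
```

Returning the counts alongside the cleaned prompt lets a monitoring layer log what was removed without storing the sensitive values themselves.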

Three pillars define modern GenAI security programs:

- Data sanitization pipelines leveraging AI-powered anonymization to scrub 98.7% of PII from training corpora

- Cross-functional review boards that reduced improper data sharing by 64% at Fortune 500 firms

- Continuous model auditing systems that detect 89% of potential leakage vectors pre-deployment

The cybersecurity arms race has spurred $2.9 billion in venture funding for GenAI-specific defense tools since 2023.

SentinelOne’s AI Guardian platform typifies this innovation. It uses reinforcement learning to block 94% of prompt injection attempts while maintaining sub-200ms latency.

Regulatory Landscape and Future Directions

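Global policymakers are scrambling to address GenAI risks through evolving frameworks. The EU’s AI Act sets risk-based obligations, including data governance requirements for high-risk systems, while the U.S. NIST AI Risk Management Framework provides voluntary guidance for managing AI risk. Neither prescribes a specific technique such as DP or federated learning, though both techniques can support compliance.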

Emerging standards like ISO/IEC 5338 aim to certify GenAI compliance across 23 security dimensions by 2026.

Technical innovations on the horizon promise to reshape the security paradigm:

- Homomorphic encryption enabling fully private model inference (IBM prototypes show 37x speed improvements)

- Neuromorphic chips with built-in DP circuitry reducing privacy overhead by 89%

- Blockchain-based audit trails providing immutable model provenance records
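The tamper-evidence behind audit trails like the last item in this list comes from hash chaining: each entry stores the hash of the one before it, so editing any past record invalidates everything after it. The sketch below shows only that data structure, assuming hypothetical record fields; it has no distributed consensus and is therefore not a full blockchain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder previous-hash for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    """Hash a record together with the previous entry's hash."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, record: dict) -> None:
    """Add a provenance record linked to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash, "hash": _digest(record, prev_hash)})


def verify(log: list) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    trail = []
    append(trail, {"event": "train", "dataset": "corpus-v1"})  # hypothetical fields
    append(trail, {"event": "deploy", "model": "assistant-v1"})
    print(verify(trail))
    trail[0]["record"]["dataset"] = "corpus-v2"  # simulate tampering
    print(verify(trail))
```

A chain held by a single party can still be rewritten wholesale, which is the gap that replication across independent nodes is meant to close.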

As GenAI becomes ubiquitous, its security imperative grows exponentially. Organizations adopting multilayered defense strategies combining technical safeguards, process controls, and workforce education report 68% fewer leakage incidents than their peers.

The path forward demands continuous adaptation – as GenAI capabilities and attacker sophistication evolve, so must our defenses.

This ongoing transformation presents both a challenge and an opportunity.

Enterprises that master secure GenAI deployment stand to realize $4.4 trillion in annual productivity gains by 2030, while those neglecting data protection face existential risks. The era of AI security has truly begun, with data integrity as its defining battleground.

The post Securing Generative AI – Mitigating Data Leakage Risks appeared first on Cyber Security News.
