Supercon 2024: Photonics/Optical Stack for Smart-Glasses


Smart glasses are a complicated technology to work with. The smart part is usually straightforward enough—microprocessors and software are perfectly well understood and easy to integrate into even very compact packages. It’s the glasses part that often proves challenging—figuring out the right optics to create a workable visual interface that sits mere millimeters from the eye.

Dev Kennedy is no stranger to this world. He came to the 2024 Hackaday Supercon to give a talk and educate us all on photonics, optical stacks, and the technology at play in the world of smart glasses.

Good Optics

Dev’s talk begins with an apology. He notes that it’s not possible to convey an entire photonics and optics syllabus in a short presentation, which is understandable enough. Regardless, he warns that the talk is packed as densely as possible to maximise the technical insight he can offer.

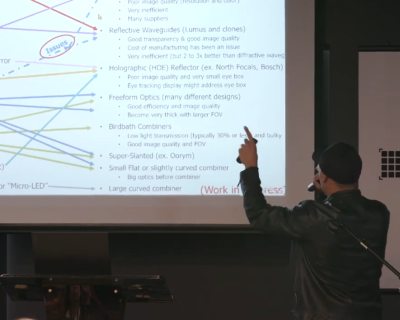

Things get heavy fast, as Dev dives into a breakdown of the basic technologies that can be used to build smart glasses. On one slide, he lays them all out with their pros and cons. There is a wide range of illumination and projection technologies, from micro-OLED displays to fancy liquid crystal on silicon (LCOS) devices that create an image with the aid of laser illumination. When you’re building smart glasses, though, that’s only half the story.

Once you’ve got something to make an image, you then need a way to place it in front of the eye. Dev goes on to talk about different techniques for doing this, from reflective waveguides to the amusingly named birdbath combiners. Ultimately, you’re hunting for something that provides a clear, visible image to the user in all conditions while still giving them a good view of the world around them. This is particularly challenging in bright conditions, like walking around outdoors in daylight.
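To see why daylight is so punishing, here is a rough back-of-the-envelope sketch. It is not taken from Dev’s talk. In an optical see-through display, the virtual image is added on top of the light coming from the world behind it, so one simple figure of merit is the ratio of the combined luminance to the background luminance alone. The luminance values below are illustrative assumptions, and the sketch ignores how much the combiner itself dims the outside view:

$$
C = \frac{L_\mathrm{display} + L_\mathrm{background}}{L_\mathrm{background}} = 1 + \frac{L_\mathrm{display}}{L_\mathrm{background}}
$$

$$
L_\mathrm{display} = 500\ \mathrm{cd/m^2}: \quad C_\mathrm{indoors} = 1 + \frac{500}{100} = 6, \qquad C_\mathrm{sunlit} = 1 + \frac{500}{10\,000} = 1.05
$$

Under these assumed numbers, an overlay that looks punchy indoors barely stands out against a sunlit street. That is why outdoor use pushes designers toward brighter image sources or combiners that dim the outside view.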

The talk also focuses on a particular bugbear for Dev: the fact that AR and VR aren’t treated as differently as they should be. “VR is a stack of pancakes,” says Dev. “Why is it a stack of pancakes? It’s because all of the PCBs, the optics, the emissions source for the light... is in front of the user’s nose.” Because VR is just about beaming images into the eye, with no regard for the outside world, it’s a little more straightforward. “It’s basically a stack of technology outward from the eye relief point to the back of the device,” Dev explains.

When it comes to AR, though, the solutions must be more complicated. “What’s different is AR is actually an archer,” says Dev, referring to the way such devices must fling light around. “What an archer does is it shoots light around the side of the arm, and it might have to bend it one way or another, up on the crossbar and spread it out through a waveguide, and at the very exit point… at the coupling out portion… the light has to make one more right turn… towards your eye.” As a result, the optics and display hardware involved tend to diverge a long way from what VR headsets use. “These technologies are fundamentally different,” says Dev. “It strains me to great extent that people kind of batch them into the same category.”

The talk also steps away from raw hardware chat to cover some of the devices on the market, and some that left it years ago. Dev makes passing mention of Google Glass, launched all the way back in 2013, before noting the developments Microsoft made with HoloLens over the years. As for the current state of play, Dev namechecks Project Orion from Meta, as well as the fifth-generation Snapchat Spectacles.

He gives particular credit to Meta for its work on refining input modalities that suit the smart-glasses interface paradigm. Meanwhile, he notes Snapchat needs work on “comfort, weight, and looks,” given how bulky its current product is. Overall, these products have problems to overcome before they can become mainstream tools for everyday use. “The important part is the relatability of these devices,” Dev goes on to explain. “We don’t see that just yet, as a $25,000 device from Meta and something that is too thick to be socially acceptable from Snapchat.”

Fundamentally, as Dev’s talk highlights, AR is still at a nascent stage of development. It’s worth remembering that it took decades to develop computers that could fit in our pockets (smartphones) or on our wrists (smartwatches). Expect smart glasses to go mainstream once the technical and optical issues are worked out, and the software and interface solutions genuinely help people in day-to-day life.
