What is Apache Kafka? The Open Source Business Model, Funding, and Community


Abstract

This post takes an in-depth look into Apache Kafka—a distributed streaming platform that revolutionizes real-time data processing and stands as a prime example of sustainable open source funding and business models. We explore its history, technical foundations, funding channels, community contributions, challenges, and future innovations. By comparing Apache Kafka’s model with emerging trends such as decentralized funding and tokenization, readers will gain valuable insights into its enduring success and technical excellence.

Introduction

Apache Kafka has become a cornerstone of modern data ecosystems with its ability to handle massive real-time data streams. Initially developed at LinkedIn and later contributed to the Apache Software Foundation, Kafka is widely recognized not only for its robustness in data streaming but also for its exemplary open source funding and business model. From incorporating distributed commit logs to sustaining community-driven development, Apache Kafka demonstrates a delicate balance between corporate sponsorship and grassroots collaboration.

Apache Kafka combines strong technical capabilities with open licensing under the Apache License, Version 2.0. This post offers a holistic perspective on the platform, discussing its background, core concepts, use cases, funding models, and future outlook. The goal is to give both technical experts and newcomers a clear, accessible exploration of this influential project, with a focus on distributed streaming, open source funding, and community contributions.

Background and Context

Apache Kafka’s roots lie in its inception at LinkedIn, where it was designed to serve as a robust messaging system for real-time data analytics. Its evolution into an enterprise-grade distributed streaming platform is a testament to the power of open source collaboration and corporate sponsorship. Key historical milestones include:

- LinkedIn Creation: Developed as a messaging system for handling high-scale data.

- Open Sourcing and ASF Contribution: Transition to the Apache Software Foundation allowed for broader community inputs.

- Expanding Ecosystem: Integration with stream processing frameworks like Apache Flink and Apache Storm magnified its reach.

Below is a table summarizing the historical evolution of Apache Kafka:

| Milestone | Description | Impact |
|---|---|---|
| Inception at LinkedIn | Designed for high-throughput messaging | Enabled real-time data processing |
| ASF Contribution | Open sourced under the Apache License 2.0 | Boosted community collaboration |
| Ecosystem Expansion | Integrates with Apache Flink, Apache Storm, and Kubernetes | Broadened its use across industries |
| Corporate Sponsorship | Direct funding from enterprise stakeholders | Promoted continuous innovation |

Apache Kafka’s success is underpinned by a dual approach to funding, combining traditional corporate sponsorship with grassroots community grants, which helps sustain its longevity and technical excellence.

Core Concepts and Features

Apache Kafka is built on a few fundamental concepts that allow it to excel as a distributed streaming platform:

Distributed Commit Log:

Kafka’s architecture is centered around a distributed, fault-tolerant, append-only commit log that stores streams of records durably and with high throughput.

Producers and Consumers:

Data flows into Kafka via producers that send messages to topics. Consumers subscribe to these topics and process the messages in real time, tracking their position in each partition with an offset.

Scalability and Fault Tolerance:

Kafka clusters scale horizontally: topics are split into partitions spread across multiple servers (brokers), and partitions can be replicated so that the overall system remains available even if individual nodes fail.

Modular Integration:

Thanks to its modular approach, Kafka integrates seamlessly with other systems including container orchestration platforms like Kubernetes and third-party tools such as Apache Hadoop.
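To make the commit-log and producer/consumer model concrete, here is a minimal in-memory sketch. It is not Kafka’s implementation or client API: `PartitionLog` and `Consumer` are hypothetical names, and the sketch assumes a single partition with no replication or persistence. It illustrates two properties described above: records are only ever appended and identified by an offset, and each consumer tracks its own position, so reading does not remove data for anyone else.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Record = Tuple[int, str, str]  # (offset, key, value)


@dataclass
class PartitionLog:
    """Toy in-memory stand-in for a single topic partition (hypothetical, not Kafka's code)."""

    entries: List[Tuple[str, str]] = field(default_factory=list)

    def append(self, key: str, value: str) -> int:
        """Append a record to the end of the log and return its offset."""
        self.entries.append((key, value))
        return len(self.entries) - 1

    def read(self, offset: int, max_records: int = 10) -> List[Record]:
        """Return up to max_records records starting at offset; nothing is deleted."""
        end = min(offset + max_records, len(self.entries))
        return [(i, *self.entries[i]) for i in range(offset, end)]


class Consumer:
    """Hypothetical consumer that tracks its own position in the log."""

    def __init__(self, log: PartitionLog) -> None:
        self.log = log
        self.position = 0  # offset of the next record to read

    def poll(self, max_records: int = 10) -> List[Record]:
        batch = self.log.read(self.position, max_records)
        if batch:
            # Advance past the last record returned (a stand-in for committing the offset).
            self.position = batch[-1][0] + 1
        return batch


if __name__ == "__main__":
    log = PartitionLog()
    for event in ["signup", "click", "purchase"]:
        log.append("user-42", event)

    analytics = Consumer(log)
    audit = Consumer(log)
    print(analytics.poll(2))  # [(0, 'user-42', 'signup'), (1, 'user-42', 'click')]
    print(analytics.poll())   # [(2, 'user-42', 'purchase')]
    print(len(audit.poll()))  # 3: the audit consumer still sees every record
```

Because the log is retained rather than consumed destructively, a second consumer, or a replay from offset 0, sees the same records. That is the property that lets several independent applications read one topic.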

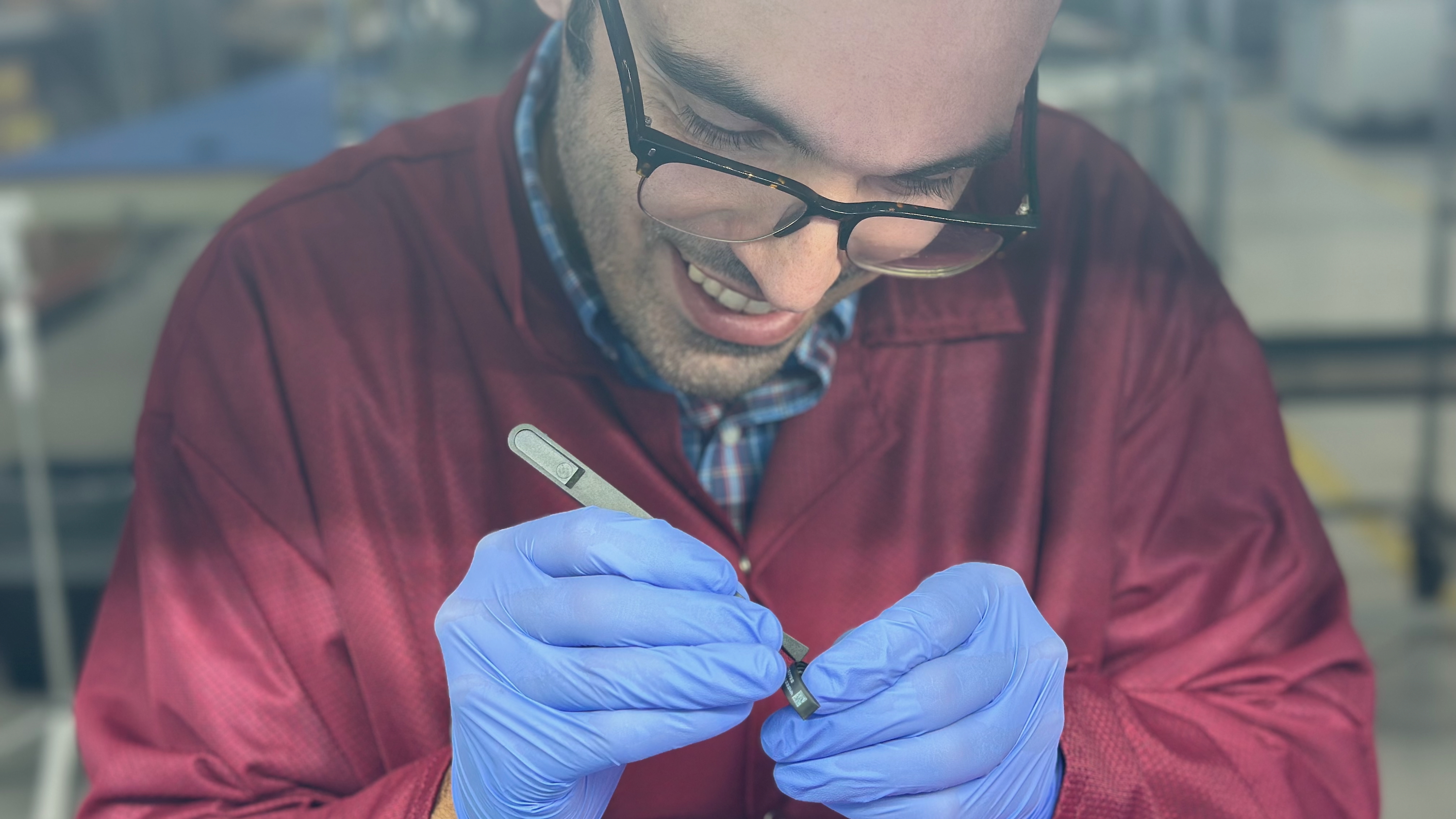

The open source model that supports Apache Kafka prioritizes transparency and community contributions. A large, global community of developers contributes regular updates to the Apache Kafka repository on GitHub, and this steady stream of improvements reinforces the platform’s reliability and scalability.

Key Features:

- High Throughput: Designed to handle millions of messages per second across a cluster.

- Fault Tolerance: Robust cluster architecture that minimizes data loss.

- Open Licensing: Operates under the Apache License 2.0, which permits free use, modification, and redistribution.

- Modular Architecture: Easily integrates with other tools and systems.

- Scalability: Supports both vertical and horizontal scaling for enterprise-grade load management.
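Horizontal scaling in Kafka rests on splitting a topic into partitions. The sketch below, under simplifying assumptions, shows the two ideas involved: records with the same key land in the same partition, and a consumer group spreads partitions across its members. The function names are hypothetical, `zlib.crc32` stands in for the murmur2 hash that Kafka’s default partitioner applies to keyed records, and the round-robin assignment is a simplified illustration rather than a specific Kafka assignor.

```python
import zlib
from typing import Dict, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition. crc32 is a stand-in for Kafka's murmur2 hash."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign_round_robin(num_partitions: int, consumers: List[str]) -> Dict[str, List[int]]:
    """Deal partitions out to consumer group members in turn (simplified illustration)."""
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


if __name__ == "__main__":
    # The same key always maps to the same partition, so per-key order is preserved.
    keys = ["user-1", "user-2", "user-3", "user-1"]
    print([partition_for(k, 6) for k in keys])

    print(assign_round_robin(6, ["consumer-a", "consumer-b"]))
    # {'consumer-a': [0, 2, 4], 'consumer-b': [1, 3, 5]}
    print(assign_round_robin(2, ["consumer-a", "consumer-b", "consumer-c"]))
    # {'consumer-a': [0], 'consumer-b': [1], 'consumer-c': []}
```

A consumer left without partitions sits idle, which is why the partition count effectively caps how far a single consumer group can scale out.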

Applications and Use Cases

Apache Kafka’s application spans a wide range of industries and technical scenarios. Here are a few practical examples:

1. Financial Services

Banks and other financial institutions use Apache Kafka to power trading-event pipelines and risk analysis systems. Its high throughput and low latency make it well suited to systems that require real-time data processing.

2. E-Commerce and Retail

Kafka facilitates real-time inventory management and customer behavior analytics in e-commerce platforms. Large retail corporations rely on its capacity to deliver continuous streams of transactional data and user interactions.

3. Social Media and Telecommunications

Social networks and telecom companies leverage Kafka to aggregate and process user data in real time, enabling personalized experiences, fraud detection, and improved content delivery.

In each of these cases, the open source nature of Kafka ensures flexible customization while the robust funding model supports constant upgrades and innovations—providing both enterprise value and community benefits.
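The e-commerce inventory case can be sketched as folding an ordered stream of events into current state, roughly what a stream-processing application reading a Kafka topic would do. This is a minimal illustration under stated assumptions: the `InventoryEvent` schema and the `materialize_stock` function are hypothetical, and the events are an in-memory list rather than records fetched from a broker.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class InventoryEvent:
    """Hypothetical event schema: a SKU and a signed quantity change."""

    sku: str
    delta: int  # positive for a restock, negative for a sale


def materialize_stock(events: Iterable[InventoryEvent]) -> Dict[str, int]:
    """Fold an ordered stream of events into the current stock level per SKU."""
    stock: Dict[str, int] = {}
    for event in events:
        stock[event.sku] = stock.get(event.sku, 0) + event.delta
    return stock


if __name__ == "__main__":
    stream = [
        InventoryEvent("sku-1", 10),
        InventoryEvent("sku-2", 5),
        InventoryEvent("sku-1", -3),
        InventoryEvent("sku-2", -5),
    ]
    print(materialize_stock(stream))  # {'sku-1': 7, 'sku-2': 0}
```

Ordering matters for this kind of fold. Kafka guarantees order only within a partition, so keying events by SKU keeps each product’s updates in sequence, and replaying the topic from the beginning would rebuild the same stock levels.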

Challenges and Limitations

Despite its many strengths, Apache Kafka is not without challenges. Understanding these limitations is key to managing expectations and optimizing its adoption:

Complexity in Operations:

Deploying and managing Kafka clusters can be complex, especially at scale. It requires thorough expertise in distributed systems.

Latency and Throughput Optimization:

Organizations must invest time in tuning Kafka configurations to balance latency with throughput, especially in high-demand environments.

Resource-Intensive Monitoring:

Continuous monitoring is crucial to avoid system bottlenecks. Integrating sophisticated monitoring tools can add to the administrative overhead.

Balancing Open Source and Commercial Interests:

While Apache Kafka maintains a delicate mix between community contributions and corporate sponsorship, this balance sometimes results in competing priorities, such as rapid feature enhancements versus long-term reliability.

Understanding these challenges helps organizations deploy Kafka more effectively and allocate resources for both technical support and community engagement.
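The latency and throughput trade-off listed among these challenges comes largely from producer batching. Kafka producers expose settings such as `linger.ms` and `batch.size` for this; the simulation below is a simplified model of that behavior, not the producer’s actual algorithm. It assumes `batch_size` counts messages (the real `batch.size` is measured in bytes, per partition) and that a batch is sent as soon as it is full or its linger time expires. The function name is hypothetical.

```python
from typing import List, Tuple


def simulate_batching(arrivals_ms: List[float], batch_size: int, linger_ms: float) -> Tuple[int, float]:
    """Return (requests_sent, mean_wait_ms) for a simplified producer batcher.

    A batch is sent when it holds batch_size messages or when linger_ms has elapsed
    since its first message, whichever comes first. Arrival times must be sorted.
    """
    requests = 0
    total_wait = 0.0
    batch: List[float] = []

    def send(send_time: float) -> None:
        nonlocal requests, total_wait
        requests += 1
        total_wait += sum(send_time - t for t in batch)  # time each message sat in the batch
        batch.clear()

    for t in arrivals_ms:
        # If the open batch's linger deadline passed before this arrival, it was already sent.
        if batch and t > batch[0] + linger_ms:
            send(batch[0] + linger_ms)
        batch.append(t)
        if len(batch) >= batch_size:
            send(t)  # full batch: send immediately
    if batch:
        send(batch[0] + linger_ms)  # final partial batch waits out its linger time

    mean_wait = total_wait / len(arrivals_ms) if arrivals_ms else 0.0
    return requests, mean_wait


if __name__ == "__main__":
    arrivals = list(range(10))  # one message per millisecond
    print(simulate_batching(arrivals, batch_size=100, linger_ms=0))  # (10, 0.0)
    print(simulate_batching(arrivals, batch_size=100, linger_ms=5))  # (2, 2.9)
```

In this toy model, raising the linger time from 0 to 5 ms cuts ten requests to two at the cost of an average 2.9 ms of extra wait per message; fewer, larger requests are the mechanism behind the throughput gains that tuning aims for.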

Future Outlook and Innovations

The future of Apache Kafka is closely tied to the rapid evolution of distributed systems and innovative funding models. Here are several trends and emerging areas:

Integration with Decentralized Funding Models

There is ongoing exploration of decentralized funding mechanisms, including tokenizing open source contributions, a concept discussed in work on tokenizing open source licenses. Such innovations could lead to more resilient funding streams for projects like Kafka.

Enhanced Ecosystem Integration

Apache Kafka is likely to integrate further with emerging technologies such as container orchestration, real-time AI processing, and blockchain-based systems. Such integrations would expand Kafka’s functionality and strengthen its role as a hub in a connected open source ecosystem.

Improved Developer Tools and Monitoring

Advancements in developer tools, automated monitoring, and intelligent alerting systems are on the horizon. These improvements will help mitigate some of the operational challenges currently faced and streamline the maintenance of large Kafka deployments.
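Consumer lag is one of the most common signals behind this kind of automated monitoring and alerting: for each partition, it is the difference between the log-end offset and the consumer group’s committed offset. The sketch assumes those offsets have already been fetched (for example, by a monitoring agent); the function names and the alert threshold are hypothetical, and a missing commit is treated as offset 0 for simplicity.

```python
from typing import Dict, List


def consumer_lag(log_end_offsets: Dict[int, int], committed_offsets: Dict[int, int]) -> Dict[int, int]:
    """Per-partition lag = log-end offset minus the group's committed offset.

    Simplifying assumption: a partition with no committed offset is treated as offset 0.
    """
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in log_end_offsets.items()
    }


def lagging_partitions(lag: Dict[int, int], threshold: int) -> List[int]:
    """Return, in sorted order, partitions whose lag exceeds a hypothetical alert threshold."""
    return sorted(p for p, value in lag.items() if value > threshold)


if __name__ == "__main__":
    end = {0: 1500, 1: 1200, 2: 900}
    committed = {0: 1495, 1: 400, 2: 900}
    lag = consumer_lag(end, committed)
    print(lag)                           # {0: 5, 1: 800, 2: 0}
    print(lagging_partitions(lag, 100))  # [1]
```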

Sustainability Through Community and Corporate Synergy

The continued success of Apache Kafka lies in its balance of corporate sponsorship and community grants. As discussed in articles such as an in-depth exploration of GitHub Sponsors, this model is likely to evolve, with greater transparency and new funding approaches supporting long-term sustainability.

Below is an overview table summarizing upcoming trends and innovations:

| Future Trend | Description | Expected Impact |
|---|---|---|
| Decentralized Funding Models | Exploring tokenization and blockchain-based sponsorship mechanisms | Enhanced community rewards |
| Ecosystem Integration | Tighter integration with emerging tech like AI and blockchain | Broader application and reach |
| Advanced Monitoring and Tools | Development of smarter monitoring solutions and developer-friendly enhancements | Lower operational overhead |
| Sustainable Synergy Model | Continued balance of corporate and community contributions | Long-term project resilience |

Summary

Apache Kafka stands as a prime example of a successful open source project that combines technical excellence with a robust and sustainable funding model. Over more than a decade, its evolution from a LinkedIn innovation to a globally adopted distributed streaming platform has been driven by both corporate sponsorship and a dedicated open source community.

Key Takeaways:

- Background and Growth: Apache Kafka has evolved from a messaging system at LinkedIn to an enterprise-grade platform under the Apache Software Foundation.

- Technical Excellence: With its distributed commit log, producer/consumer model, and modular architecture, Kafka is designed for high throughput and fault tolerance.

- Open Source Funding: Its success is bolstered by diversified funding channels, balancing corporate sponsorship with community grants.

- Future Innovations: Emerging trends such as decentralized funding models and enhanced ecosystem integrations promise to further elevate Kafka’s capabilities.

For those interested in a deeper dive into Apache Kafka’s multifaceted world, further details can be found on the official Kafka website and the Apache Kafka GitHub repository. Additionally, exploring innovative funding models via resources like tokenizing open source licenses provides insight into the future of open source software sustainability.

As organizations continue to rely on real-time data processing, Apache Kafka’s blend of community-driven innovation and corporate financial backing sets a benchmark. By continuously evolving and addressing challenges such as operational complexity and maintenance overhead, Kafka not only highlights the best practices in distributed systems but also serves as a model for sustainable open source development.

Further Reading and Resources:

- Apache Kafka Overview

- Apache Software Foundation

- Apache License Version 2.0

- GitHub Sponsors and Open Source Funding

- Exploring Decentralized Funding for Open Source

In conclusion, Apache Kafka’s technological prowess—combined with its innovative and balanced open source funding model—continues to drive its widespread adoption. As the ecosystem evolves further with advancements in decentralized funding and smarter integration tools, Apache Kafka remains poised to lead the way in real-time data streaming and open source development for years to come.
