Why You Need Application Security Guardrails for Cursor and Windsurf AI Agents

As AI-powered coding assistants like Cursor and Windsurf continue to reshape modern development workflows, their growing autonomy also raises new security concerns. These tools do more than suggest code: they write and execute logic on their own, based on prompts. Without guardrails, that autonomy can introduce serious risks into your software supply chain.

To counter these risks, embed application security (AppSec) guardrails across your software development lifecycle (SDLC). They act as security boundaries, in the form of automated checks and policy enforcement, that help keep the output of AI coding agents secure, ethical, and compliant with industry standards.

Understanding AppSec Guardrails in an AI Context

Unlike traditional security controls, AppSec guardrails for AI agents are designed to monitor behavior, restrict unauthorized actions, and verify the integrity of generated code in real time. For tools like Cursor and Windsurf, they provide oversight at both the input (user prompts) and the output (code suggestions), reducing the likelihood that data leaks, insecure configurations, or flawed logic reach production.

Guardrails combine static application security testing (SAST), software composition analysis (SCA), role-based access control (RBAC), secret scanning, and policy-as-code to enforce security at scale, catching vulnerabilities before the code is deployed.
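To make the policy-as-code idea concrete, here is a minimal sketch in Python. It assumes policies can be written as named regular-expression rules applied to generated code line by line. The rule names, patterns, and the `scan` helper are hypothetical and chosen only for illustration; real SAST and secret-scanning tools analyze code far more deeply than this.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str
    pattern: re.Pattern
    message: str


# Hypothetical rule set, for illustration only.
RULES = [
    Rule("no-eval", re.compile(r"\beval\s*\("), "eval() on dynamic input"),
    Rule("no-shell-true", re.compile(r"shell\s*=\s*True"),
         "subprocess call with shell=True"),
    Rule("hardcoded-secret",
         re.compile(r"(api_key|password|secret)\s*=\s*['\"][^'\"]+['\"]",
                    re.IGNORECASE),
         "possible hardcoded credential"),
]


def scan(code: str) -> list[tuple[int, str, str]]:
    """Return (line_number, rule_id, message) for every rule match."""
    findings = []
    for lineno, line in enumerate(code.splitlines(), start=1):
        for rule in RULES:
            if rule.pattern.search(line):
                findings.append((lineno, rule.rule_id, rule.message))
    return findings


if __name__ == "__main__":
    generated = 'api_key = "abc123"\nresult = eval(user_input)\n'
    for finding in scan(generated):
        print(finding)
```

Keeping the rules as data rather than as logic means they can be reviewed and versioned like any other policy file, and a CI job could fail the build whenever `scan` returns findings.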

Key Reasons Guardrails Are Essential for Cursor and Windsurf

- Preventing Unsafe Code Suggestions: Guardrails flag insecure code patterns or misconfigurations generated by AI, helping developers catch issues early.

- Controlling Access Privileges: Through role-based controls, AI agents are limited to essential tasks only—preventing unauthorized access or privilege escalation.

- Input and Output Filtering: Guardrails analyze prompts for injection attempts and ensure outputs do not expose sensitive data or perform unintended actions.

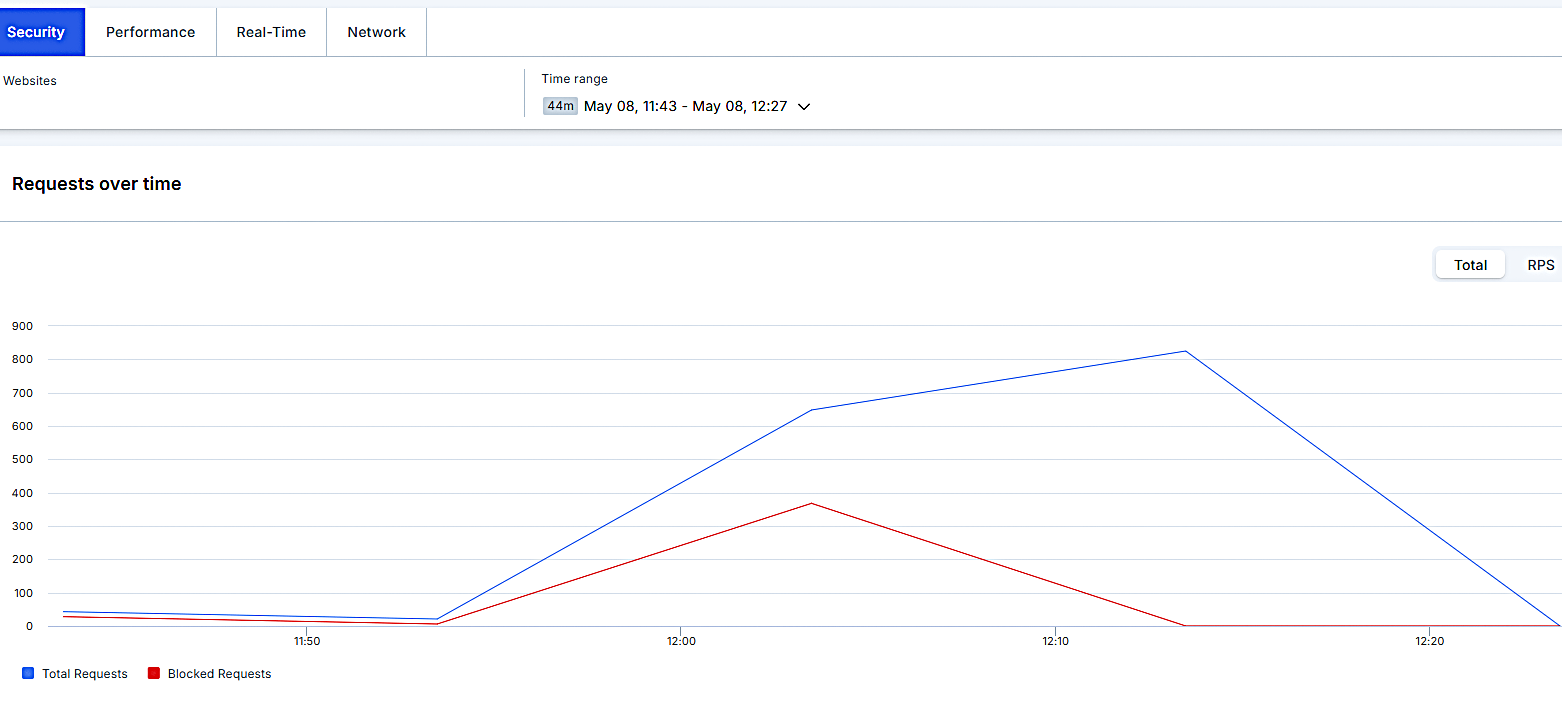

- Compliance and Traceability: Logging and monitoring AI code activity supports compliance audits and helps identify risky behavior patterns quickly.

- Dependency Validation: With integrated SCA tools, AI-generated code is screened for vulnerable libraries or outdated packages.
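As one illustration of the dependency validation item above, the following sketch checks requirements-style lines for unpinned or known-vulnerable packages. The `KNOWN_VULNERABLE` table and the package names are invented for the example; an actual SCA tool would draw on a maintained vulnerability database and handle the full requirements syntax.

```python
# Hypothetical advisory data: package name -> versions assumed vulnerable.
# A real SCA tool would load this from a vulnerability database.
KNOWN_VULNERABLE = {
    "examplelib": {"1.0.0", "1.0.1"},
}


def check_requirements(lines):
    """Flag unpinned or known-vulnerable entries in requirements-style lines."""
    problems = []
    for raw in lines:
        # Drop trailing comments and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if "==" not in line:
            problems.append((line, "not pinned to an exact version"))
            continue
        name, version = (part.strip() for part in line.split("==", 1))
        if version in KNOWN_VULNERABLE.get(name.lower(), set()):
            problems.append((line, "version listed in advisory data"))
    return problems


if __name__ == "__main__":
    suggested = ["examplelib==1.0.1", "otherlib==2.3.0", "loosepkg>=1.0"]
    for entry, reason in check_requirements(suggested):
        print(f"{entry}: {reason}")
```

A check like this could run whenever an agent proposes a change to a dependency file, before the change is committed.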

Implementing Effective Guardrails

Start by defining organization-specific AppSec policies tailored to AI-generated code. Integrate security tools like SAST and SCA into your CI/CD workflows and code editors. Enable prompt validation, enforce secure output sanitization, and monitor agent behavior through logging and alerting systems.
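Monitoring agent behavior through logging and alerting might look roughly like the sketch below, which uses Python's standard `logging` module to write one structured record per agent action. The logger name, the action names, and the `HIGH_RISK_ACTIONS` set are assumptions made for this example, not part of any Cursor or Windsurf API.

```python
import json
import logging
from datetime import datetime, timezone

audit_log = logging.getLogger("agent.audit")  # hypothetical logger name

# Hypothetical action names that should stand out in the audit trail.
HIGH_RISK_ACTIONS = {"run_shell", "modify_ci_config", "read_env_file"}


def record_agent_action(agent: str, action: str, target: str) -> dict:
    """Emit one structured audit record; log high-risk actions as warnings."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,
        "target": target,
        "high_risk": action in HIGH_RISK_ACTIONS,
    }
    level = logging.WARNING if event["high_risk"] else logging.INFO
    audit_log.log(level, json.dumps(event))
    return event


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    record_agent_action("cursor", "edit_file", "src/app.py")
    record_agent_action("cursor", "run_shell", "rm -rf build/")
```

Because each record is a single JSON object, an existing log pipeline can filter on `high_risk` to drive alerts without parsing free-form text.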

Additionally, restrict write access to the agents' configuration files and keep their rule sets under version control, so that every change is reviewed and traceable. Limit the critical commands an agent may run, and require human approval for high-risk operations.
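Limiting commands and requiring approval for risky ones can be sketched as a small gate that classifies each command before the agent runs it. The `ALLOWED` and `NEEDS_APPROVAL` lists below are hypothetical policy choices, and the sketch is deliberately conservative: it denies anything containing shell metacharacters instead of trying to parse chained commands.

```python
import shlex
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


# Hypothetical policy lists; each team would define its own.
ALLOWED = {"ls", "cat", "git", "pytest"}
NEEDS_APPROVAL = {"rm", "curl", "pip", "docker"}

# Characters that could chain or redirect commands past the check.
SHELL_METACHARACTERS = set(";&|`$<>")


def evaluate_command(command: str) -> Decision:
    """Classify a command an agent wants to run, denying by default."""
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return Decision.DENY
    try:
        tokens = shlex.split(command)
    except ValueError:  # for example, an unbalanced quote
        return Decision.DENY
    if not tokens:
        return Decision.DENY
    program = tokens[0]
    if program in ALLOWED:
        return Decision.ALLOW
    if program in NEEDS_APPROVAL:
        return Decision.NEEDS_APPROVAL
    return Decision.DENY


if __name__ == "__main__":
    for cmd in ["git status", "rm -rf build", "ls; rm -rf /"]:
        print(cmd, "->", evaluate_command(cmd).value)
```

The check looks only at the program name, so a stricter policy would also inspect arguments; `git push --force`, for instance, passes this gate as written.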

Developers’ Role in AI Code Security

Developers must treat all AI outputs as untrusted by default. Every code suggestion needs to be understood, tested, and reviewed before merging. Avoid embedding credentials in prompts and ensure generated code adheres to company coding standards.
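One way to back up the advice about credentials in prompts is a pre-send filter that redacts anything resembling a secret. The sketch below assumes a handful of illustrative patterns; the list is far from exhaustive, and `redact_prompt` is a hypothetical helper, not a feature of either tool.

```python
import re

# Illustrative patterns only; a production filter would need many more.
CREDENTIAL_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),  # shape of an AWS access key ID
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    re.compile(r"\b(password|token|api[_-]?key)\s*[:=]\s*\S+", re.IGNORECASE),
]


def redact_prompt(prompt: str) -> tuple[str, int]:
    """Replace credential-like substrings and return (clean_prompt, count)."""
    total = 0
    for pattern in CREDENTIAL_PATTERNS:
        prompt, count = pattern.subn("[REDACTED]", prompt)
        total += count
    return prompt, total


if __name__ == "__main__":
    clean, hits = redact_prompt("Connect with password=hunter2 and fix the login bug")
    print(hits, clean)
```

A non-zero count could also be used to block the prompt outright and ask the developer to remove the secret, which is stricter than silent redaction.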

Closing Thoughts

AI agents like Cursor and Windsurf promise faster development—but without security guardrails, they can introduce silent risks. By embedding application security across their usage, teams can unlock the full potential of AI coding tools—securely and responsibly.
