Your GenAI-git trainer


For the final Capstone project of the 5-Day GenAI Intensive Course (Q1 2025), we were asked to create a Kaggle notebook.

After some initial testing with a chat instance using the Google Gen AI SDK (`google-genai`), the project quickly evolved into a more structured idea, thanks to great documentation and solid examples online.

Why not build a trainer to speed up onboarding for others or help experienced developers learn something new through "learning by doing"?

You’ll find the link to the Kaggle notebook at the end of this article.

How to start

First, you'll need a new API key from Google AI Studio. The website can be a bit buggy: if you can't log in or retrieve your API key, it's likely because you haven't confirmed your age yet.
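Once you have the key, it is safer not to hard-code it in the notebook. A minimal sketch, assuming the key is stored in an environment variable named `GOOGLE_API_KEY` (the variable name and the helper `load_api_key` are our own choices; on Kaggle you would typically store it as a notebook secret instead):

```python
import os


def load_api_key(var_name: str = "GOOGLE_API_KEY") -> str:
    """Read the Google AI Studio API key from an environment variable (name is an assumption)."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a hint instead of a confusing authentication error later
        raise RuntimeError(
            f"{var_name} is not set. Create a key in Google AI Studio and export it first."
        )
    return key
```

The returned string can then be handed to the SDK client, e.g. `genai.Client(api_key=load_api_key())` after `from google import genai`.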

The idea

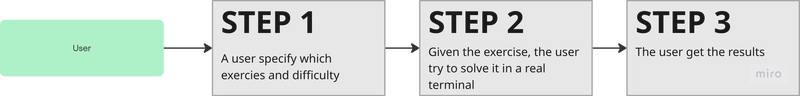

The idea is to guide the user through a series of seamless steps:

We provide a simple component that integrates every layer: presentation, application, and model.

It couldn’t be simpler!
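A minimal sketch of what "one component, three layers" can look like. All class, field and method names here are hypothetical, and the model layer is just a callable, so a wrapper around a Gemini chat (for example one returning `chat.send_message(msg).text`) or a stub can be plugged in:

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TrainerState:
    """Application-layer state: what we know about the learner so far (hypothetical fields)."""
    level: str = "UNKNOWN"
    topics: List[str] = field(default_factory=list)
    history: List[str] = field(default_factory=list)


class Trainer:
    """One component wiring presentation, application and model layers together."""

    def __init__(self, model: Callable[[str], str]):
        self.model = model            # model layer: any prompt -> reply callable
        self.state = TrainerState()   # application layer

    def handle(self, user_message: str) -> str:
        # Application layer: record the turn, then delegate to the model layer
        self.state.history.append(f"User: {user_message}")
        reply = self.model(user_message)
        self.state.history.append(f"Model: {reply}")
        return self.render(reply)

    @staticmethod
    def render(reply: str) -> str:
        # Presentation layer: a plain prefix here; a notebook could render Markdown instead
        return f"Trainer> {reply}"
```

Keeping the model behind a plain callable makes the application logic testable without network access or an API key.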

The solution

I know—not using production-ready tools like LangGraph isn’t ideal.

But here, the goal was to explore the challenges and tradeoffs of building an AI solution from scratch.

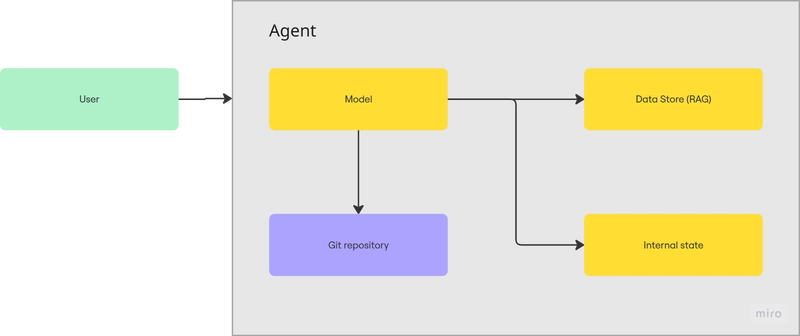

The user always interacts with the agent interface. Based on the user's state and prompt, the agent builds a response from relevant documents and acts on the environment it manages (the shell workspace the user practises in).
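The article doesn't detail how "relevant documents" are selected. Purely as a stand-in, here is a naive keyword-overlap retriever; this is a swapped-in technique, not necessarily what the notebook does (embedding similarity is the more common choice), and the document snippets are made up for illustration:

```python
import re
from typing import Dict, List, Set


def word_set(text: str) -> Set[str]:
    """Lower-case set of alphabetic words; a deliberately crude tokenizer."""
    return set(re.findall(r"[a-z]+", text.lower()))


def top_documents(query: str, docs: Dict[str, str], k: int = 2) -> List[str]:
    """Return up to k document names sharing the most words with the query."""
    q = word_set(query)
    scored = [(len(q & word_set(body)), name) for name, body in docs.items()]
    # Highest overlap first; ties broken alphabetically by name for determinism
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored[:k] if score > 0]


DOCS = {  # hypothetical snippets
    "commit.md": "git commit records a snapshot of the staged changes",
    "stage.md": "git add stages changes for the next commit",
    "branch.md": "git branch creates a new line of development",
}
```

For example, `top_documents("stage changes before commit", DOCS)` ranks `commit.md` and `stage.md` above `branch.md`; the selected snippets would then be pasted into the model's context.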

Gemini, you're an expert. Trust me

If a user clearly says they are a beginner and want to learn how to commit, why would the model ask for that info again?

```text
#instruction prompt
You are an experienced trainer ... .
The way you teach a user is by:
- ask for:
  - one or more topic: {topics}
  - one level: {levels}
....
```
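The `{topics}` and `{levels}` placeholders suggest the instruction is a Python format string. A sketch, assuming `str.format` and using only the levels and topics that appear in the example interaction (the notebook's lists may be longer, and the elided `...` parts of the prompt are not reproduced):

```python
LEVELS = ["BEGINNER", "MEDIUM", "ADVANCED", "UNKNOWN"]
TOPICS = ["basic commits", "basic staging"]  # subset taken from the example interaction

# Abridged template: the elided parts of the real prompt are left out
INSTRUCTION_TEMPLATE = """You are an experienced trainer.
The way you teach a user is by:
- ask for:
  - one or more topic: {topics}
  - one level: {levels}
"""

instruction = INSTRUCTION_TEMPLATE.format(
    topics=", ".join(TOPICS),
    levels=", ".join(LEVELS),
)
```

One caveat with this approach: any literal braces elsewhere in the prompt (JSON examples, for instance) must be doubled as `{{` and `}}`, or `format` will raise.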

Example interaction:

```text
#user-model chat
User:
"I'm beginner using git and terminal. I want to learn how to make a commit"

Model:
"OK. I'll teach you how to make a commit.
First, please provide the difficulty level you want to start with: BEGINNER, MEDIUM, ADVANCED, or UNKNOWN.
Also, let me know the specific topics you're interested in. For example: basic commits, basic staging.
You can provide multiple topics, separated by commas."
```

Let’s try fixing this in a few ways:

Raise temperature

Try sweeping the temperature to see whether the model becomes more flexible with the prompt:

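```python
from google import genai
from google.genai import types

# GOOGLE_API_KEY, `instruction` and `tools` are defined earlier in the notebook
client = genai.Client(api_key=GOOGLE_API_KEY)

# Sweep the temperature from 0.0 to 0.9, sending the same opening message each time
for i in range(10):
    temp = i / 10
    chat = client.chats.create(
        model="gemini-2.0-flash",
        config=types.GenerateContentConfig(
            system_instruction=instruction,
            tools=tools,
            temperature=temp,
        ),
    )
    resp = chat.send_message("I'm beginner using git and terminal. I want to learn how to make a commit")
    print(f"Temp {temp} {resp.text}")
```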

Observation: at every temperature in the sweep, the model consistently follows the instruction prompt, maybe too rigidly.
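Reading ten replies by eye gets tedious. A rough sketch for scoring a sweep automatically: the hypothetical `reasks_for_level` heuristic flags replies that list the difficulty levels back to the user. It is a string check, not a semantic one, so treat its verdict as a hint:

```python
from typing import Dict

LEVELS = ("BEGINNER", "MEDIUM", "ADVANCED", "UNKNOWN")


def reasks_for_level(reply: str) -> bool:
    """Heuristic: a reply that names at least two difficulty levels is asking again."""
    upper = reply.upper()
    return sum(level in upper for level in LEVELS) >= 2


def summarize_sweep(replies: Dict[float, str]) -> Dict[float, bool]:
    """Map each temperature to whether its reply re-asked for the level."""
    return {temp: reasks_for_level(text) for temp, text in sorted(replies.items())}
```

To use it, collect `replies[temp] = resp.text` inside the sweep loop and print `summarize_sweep(replies)` at the end.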

Refine the Instruction Prompt

We iterated on the prompt wording until we found one that worked:

- ask or infer if user already specified the following

- ask or just infer if user already specified the following:

- gather from the user topics and his/her current level and the general topic of the exercise they want. Ask or just infer if user already specified the following:

Interestingly, the last variant produced the same response as the earlier versions.

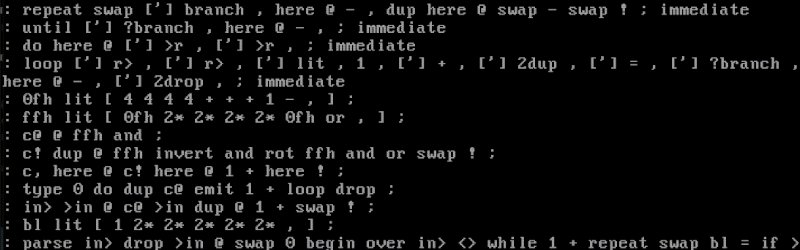
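To compare wording variants like these side by side, a small harness helps. This is a sketch: `send` stands for any function you write that creates a fresh chat with the given system instruction and returns the reply text (for instance a wrapper around `client.chats.create(...)` and `chat.send_message(...)`), so the harness itself can be exercised with a stub. Appending the variant to a base instruction is a simplification; in practice the variant replaces a sentence in the prompt:

```python
from typing import Callable, Dict, List

FIRST_MESSAGE = "I'm beginner using git and terminal. I want to learn how to make a commit"


def compare_variants(
    base_instruction: str,
    variants: List[str],
    send: Callable[[str, str], str],
) -> Dict[str, str]:
    """Combine each wording variant with the base instruction and collect the first reply."""
    results = {}
    for variant in variants:
        instruction = f"{base_instruction}\n{variant}"
        results[variant] = send(instruction, FIRST_MESSAGE)
    return results
```

Because every variant gets a brand-new chat, earlier turns cannot leak into later comparisons.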

Debugging Function Calls

It’s easier to debug a conversation flow when you can view every step:

```python
import textwrap


def print_chat_turns(chat):
    """Prints out each turn in the chat history, including function calls and responses."""
    for event in chat.get_history():
        print(f"{event.role.capitalize()}:")
        for part in event.parts or []:
            if txt := part.text:
                print(f'  "{txt}"')
            elif fn := part.function_call:
                # fn.args can be None for calls without arguments
                args = ", ".join(f"{key}={val}" for key, val in (fn.args or {}).items())
                print(f"  Function call: {fn.name}({args})")
            elif resp := part.function_response:
                print("  Function response:")
                # Tool return values are usually wrapped as {'result': ...}; fall back to the raw dict
                payload = resp.response or {}
                print(textwrap.indent(str(payload.get("result", payload)), "    "))
        print()


print_chat_turns(chat)
```

It turns out the model doesn't always call the function we expect it to!

Example:

```text
User:
  "I'm beginner in using git and terminal. I want to learn how to make a commit"
Model:
  "OK. let's start with the basics of Git commits. What level of difficulty are you comfortable with (BEGINNER, MEDIUM, ADVANCED, or UNKNOWN)? Also, besides "basic commits", is there any other specific topic you're interested in right now? For example, "basic staging"?"
User:
  "As topic is commits and difficult very beginner"
Model:
  "OK, I will create a basic commit exercise for you."
  Function call: start_exercise(motivation=Commits are the fundamental building blocks of a Git repository. Each commit represents a snapshot of your project at a specific point in time. Understanding how to create commits is essential for tracking changes and collaborating with others., title=Creating Your First Commit, startup_script=['git init', 'git config --global user.email "you@example.com"', 'git config --global user.name "Your Name"', 'touch my_file.txt', 'echo "Hello, world!" > my_file.txt'], task=Create a new file named `my_file.txt`, add some content to it, and then commit it to the Git repository with a meaningful message., useful_commands=git add, git commit, git status)
User:
  Function response:
    None
Model:
  "I've created an exercise titled "Creating Your First Commit". The exercise involves creating a file, adding content, and committing it to the repository. I've also provided a few useful commands like `git add`, `git commit`, and `git status`.
  Now, please execute the commands in the terminal to complete the exercise and tell me the commands you've used."
```
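Instead of eyeballing the printout, the function calls can also be collected from the history and asserted on. A sketch that relies only on the attributes already used in `print_chat_turns` (`parts` and `function_call.name`); the `SimpleNamespace` objects merely imitate that shape so the snippet runs offline, and `called_functions` is a hypothetical helper name:

```python
from types import SimpleNamespace
from typing import Iterable, List


def called_functions(history: Iterable) -> List[str]:
    """Names of all function calls found in a chat history, in order."""
    names = []
    for event in history:
        for part in event.parts or []:
            fn = getattr(part, "function_call", None)
            if fn is not None:
                names.append(fn.name)
    return names


# Offline stand-in for chat.get_history(): a text-only turn, then a turn with a call
fake_history = [
    SimpleNamespace(role="model", parts=[SimpleNamespace(text="OK...", function_call=None)]),
    SimpleNamespace(
        role="model",
        parts=[SimpleNamespace(text=None, function_call=SimpleNamespace(name="start_exercise"))],
    ),
]
```

With a real chat, `assert called_functions(chat.get_history()) == ["start_exercise"]` turns the transcript above into a regression check.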

Separation of concerns

If you define a tool (in Gemini's function-calling sense) that mixes behaviours, like the one below, and pair it with poor documentation and unclear instructions, the model may expose your function signature and return to the user the text that was meant to be passed to the function call!

This is a bad function tool:

```python
import typing


def start_exercise(title: str, motivation: str, task: str, useful_commands: str, startup_script: typing.List[str]) -> None:
    """Give exercise to a user and setup workspace for them.
    """
    print(f'Executing startup script {startup_script}')
    print(f"Hey user do it: {title}\n{motivation}\n{task}\n{useful_commands}")
```

Better Version:

```python
import typing


def start_exercise(title: str, motivation: str, task: str, useful_commands: str, startup_script: typing.List[str]) -> None:
    """Give exercise to a user and setup workspace for them.

    title, motivation, task, useful_commands will be sent to the user.
    startup_script will be evaluated in the context of a bash shell that will be used by the user.

    Args:
        title: title of this exercise.
        motivation: a multiline string representing a markdown file about why the exercise and the topic are useful to know.
        task: a multiline string representing a markdown file about the goal.
        useful_commands: a multiline string representing a markdown file containing some useful commands that can be used to perform the task.
        startup_script: a list of bash commands, one per string instance, which will be executed in the shell.
    """
    print(f'Executing startup script {startup_script}')
    print(f"Hey user do it: {title}\n{motivation}\n{task}\n{useful_commands}")
```
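The docstring promises that `startup_script` is evaluated in a bash shell, but the article doesn't show that part. One possible sketch, assuming `bash` is installed: each command runs with `bash -c` inside a fresh empty folder, and `HOME` points to a second throwaway folder so the `git config --global` lines write there instead of to your real config (and not into the exercise repository). The function name `run_startup_script` is hypothetical:

```python
import os
import subprocess
import tempfile
from typing import List, Tuple


def run_startup_script(startup_script: List[str]) -> Tuple[str, str]:
    """Run each command in a fresh empty folder; return (workdir, home) paths."""
    workdir = tempfile.mkdtemp(prefix="git-trainer-work-")
    # Separate throwaway HOME so `git config --global` writes <home>/.gitconfig,
    # not the real user's config and not a stray file inside the exercise repo
    home = tempfile.mkdtemp(prefix="git-trainer-home-")
    env = {**os.environ, "HOME": home}
    env.pop("XDG_CONFIG_HOME", None)  # keep git from picking a real XDG config location
    for command in startup_script:
        # check=True stops at the first failing command instead of leaving a half-built setup
        subprocess.run(
            ["bash", "-c", command],
            cwd=workdir,
            env=env,
            check=True,
            capture_output=True,
            text=True,
        )
    return workdir, home
```

The shell the user then practises in should be started with the same `HOME` and `cwd=workdir`, so the identity configured by the script also applies to their commits. Since these commands come from a model, it is wise to validate them before running anything.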

Now that the function is well documented, the prompt also has to state clearly what the model should and should not do.

An even clearer approach is to separate explicitly the agentic actions (function calls) from the message returned to the user. Here is a refined version:

```text
## STEP 3
This step consists of two actions: the first is a function call to start_exercise with the parameter startup_script, and the second is returning an exercise to the user.

- startup_script is a list of commands used to set up the environment:
  - the sequence of terminal commands which will be executed in an empty folder in order to create a clean situation where the user can start to execute commands and solve the exercise
  - the startup script must contain only the following commands: git, touch, mkdir, echo
  - every time, it starts with these 3 commands: git config --global user.email "you@example.com", git config --global user.name "Your Name" and git init
- an exercise is made of title, motivation, task, useful_commands:
  - title: a maximum of 10 words summarizing the topic of the exercise
  - motivation: a brief explanation of the topic and why it's important to understand
  - task: a description of the exercise (no more than three paragraphs)
  - useful_commands: a list of commands that may be helpful in the context of the task
```

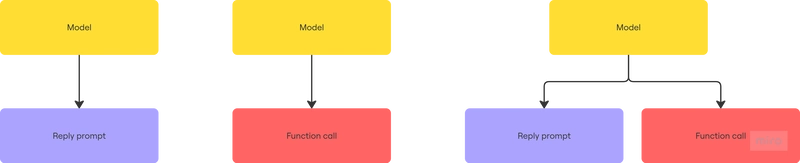
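The STEP 3 rules for `startup_script` can also be enforced in code before anything is executed. A sketch, assuming commands arrive as plain strings like in the earlier `start_exercise` call; the helper names are hypothetical, `shlex` is only used to find the program name, and the operator check is deliberately conservative (it would also reject a `;` inside a quoted commit message):

```python
import shlex
from typing import List

ALLOWED_PROGRAMS = {"git", "touch", "mkdir", "echo"}
REQUIRED_FIRST = [
    'git config --global user.email "you@example.com"',
    'git config --global user.name "Your Name"',
    "git init",
]
# Conservative: reject anything that could chain or substitute commands
FORBIDDEN_SNIPPETS = (";", "&", "|", "`", "$(")


def words(cmd: str) -> List[str]:
    """Shell-like split; an unparsable command (e.g. unbalanced quotes) yields []."""
    try:
        return shlex.split(cmd)
    except ValueError:
        return []


def script_problems(startup_script: List[str]) -> List[str]:
    """List rule violations; an empty list means the script passed these checks."""
    problems = []
    first = sorted(" ".join(words(c)) for c in startup_script[:3])
    expected = sorted(" ".join(words(c)) for c in REQUIRED_FIRST)
    # Order-insensitive, since the script generated earlier put git init first
    if first != expected:
        problems.append("must start with the two git config commands and git init")
    for cmd in startup_script:
        parts = words(cmd)
        if not parts or parts[0] not in ALLOWED_PROGRAMS:
            problems.append(f"command not allowed: {cmd!r}")
        if any(snippet in cmd for snippet in FORBIDDEN_SNIPPETS):
            problems.append(f"shell operator not allowed: {cmd!r}")
    return problems
```

If the list is not empty, the problems can be sent back to the model as the function response so it can retry, instead of executing a questionable script.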

Prompt Design Strategy

To get both a function call and a user-facing message, you need to:

- Clearly instruct the model to generate a response AND call a function

- Define how and when to use the function

- Avoid ambiguity

Without this, the model might do only one of the two or, worse, expose internal implementation details!
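These rules can also be checked after the fact on each model turn. A sketch, again relying only on the `text` and `function_call` attributes of each part (as in `print_chat_turns`), that reports which of the two expected outputs a turn is missing; `missing_outputs` is a hypothetical helper and the stub parts only imitate SDK objects so the snippet runs offline:

```python
from types import SimpleNamespace
from typing import Iterable, List


def missing_outputs(parts: Iterable) -> List[str]:
    """Report whether a model turn lacks user-facing text, a function call, or both."""
    parts = list(parts or [])
    missing = []
    if not any(getattr(p, "text", None) for p in parts):
        missing.append("user-facing text")
    if not any(getattr(p, "function_call", None) for p in parts):
        missing.append("function call")
    return missing


# Hypothetical stand-ins shaped like SDK parts
text_part = SimpleNamespace(text="OK, I will create a basic commit exercise for you.", function_call=None)
call_part = SimpleNamespace(text=None, function_call=SimpleNamespace(name="start_exercise"))
```

A turn like the one in the trace above, with both a message and a `start_exercise` call, yields an empty list; anything else is a signal that the prompt is still ambiguous.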

Kaggle link

Kaggle Notebook

You can find the live version of the trainer here:
