A Quick Guide to Horizontal Scaling for Surging Transactions

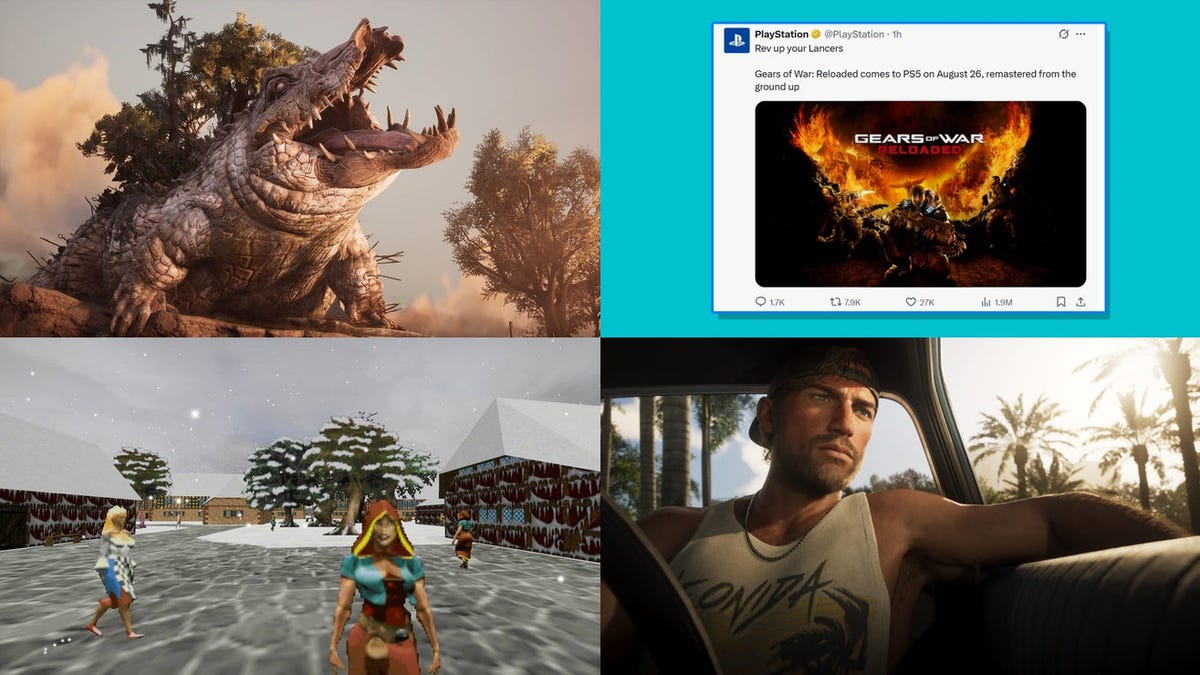

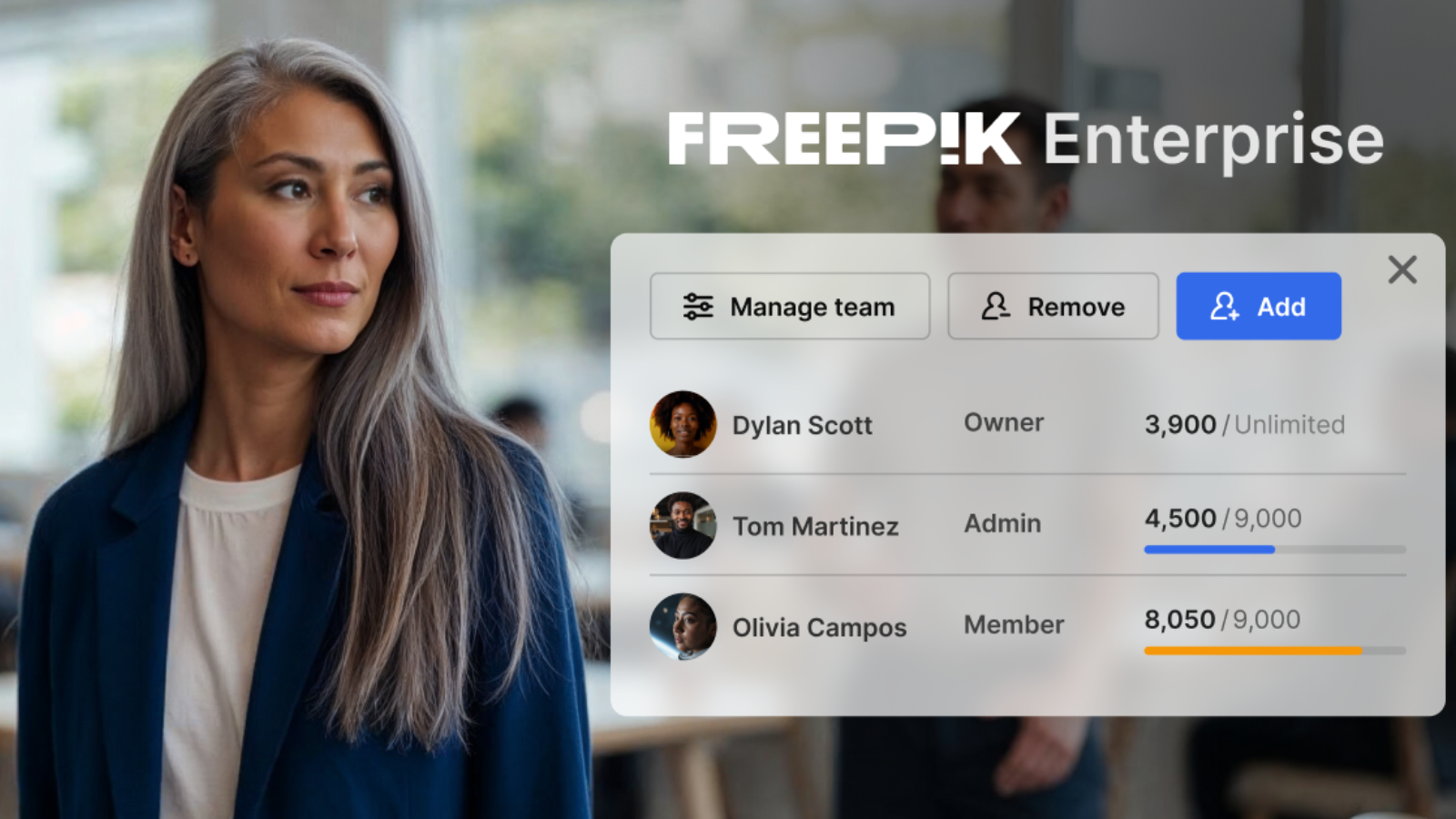

It's Friday afternoon, and your team has spent weeks preparing for the biggest flash sale of the year. Marketing has hyped it up, inventory is stocked, and your product team is confident. Then, 3 p.m. hits, and thousands of customers flood your payment gateway. Transaction failures start piling up, customer complaints pour in, and your biggest sales day becomes a technical nightmare. This scenario is all too common for teams managing payment infrastructure during high-traffic events.

As illustrated in the diagram above, what typically works smoothly during normal conditions can quickly break down during surge traffic.

The difference between success and failure often comes down to how well your systems can scale to handle these sudden transaction surges. This guide will show you strategies to scale your payment infrastructure horizontally. These techniques have been proven at scale in systems processing millions of Naira in transactions during flash sales and Black Friday events. By the end of this guide, you'll have a concrete playbook for handling traffic that's ten times your normal volume without sacrificing transaction integrity or customer experience.

What sets this guide apart is its focus on the specific challenges of payment scaling. We'll cover:

- Maintaining transaction consistency while instances are added and removed.

- Managing database connections efficiently.

- Handling rate limits with external payment processors.

- Implementing scaling triggers that respond proactively.

## What is Horizontal Scaling?

Horizontal scaling refers to the practice of adding more machines to your existing infrastructure rather than upgrading the resources of a single machine. When traffic spikes, you distribute the workload across multiple servers instead of buying a more powerful server.

When a server fails in a horizontally scaled system, the remaining servers keep handling traffic, so one failure doesn't take down the whole service.

Horizontal scaling works through:

- Load balancing: Traffic is intelligently distributed across multiple servers to prevent any single server from becoming overwhelmed.

- Data partitioning: Data is divided across multiple machines, allowing for parallel processing and improved performance.

- Service replication: Critical services are duplicated across multiple nodes to ensure high availability.

## What is Vertical Scaling?

Vertical scaling, also known as "scaling up," refers to the process of enhancing a system's performance by adding more resources to an existing machine or server. Unlike horizontal scaling, which distributes workloads across multiple machines, vertical scaling focuses on upgrading a single server with more processing power, memory, storage capacity, or other hardware components.

Limitations of Vertical Scaling

While vertical scaling offers simplicity, it has notable constraints compared to horizontal scaling. The most significant is that it introduces a single point of failure: if your server experiences a hardware or software failure, your entire workload is affected, which makes fault tolerance harder to achieve. It also has a hard ceiling, because you can only grow as far as the largest machine available.

Differences Between Horizontal and Vertical Scaling

When deciding between horizontal and vertical scaling, consider your application's specific requirements:

| Aspect | Horizontal Scaling | Vertical Scaling |
|---|---|---|
| Implementation | Add more machines | Upgrade existing machine |
| Scalability | Nearly unlimited | Limited by hardware |
| Fault tolerance | High | Limited (single point of failure) |
| Cost structure | Incremental, potentially lower long-term | Higher upfront costs for high-end hardware |
| Complexity | More complex networking and distribution | Simpler architecture |
| Application compatibility | May require redesign | Usually works with existing code |

The Unique Challenges of Scaling a Payment Service

Payment systems present distinct scaling challenges compared to other microservices, and traditional scaling approaches often fail when applied to payments because of their unique requirements. Below are some challenges you might face when scaling a payment service, particularly when choosing between horizontal and vertical scaling:

Stateful vs. Stateless Considerations

Unlike content delivery or product catalog services, payment processing is inherently stateful. Each transaction moves through multiple stages (authorization, capture, settlement) that must stay perfectly consistent. Customers expect a payment to either completely succeed or completely fail; there's no middle ground.

Dealing with stateful applications comes with a specific set of challenges that you must consider.

If any service dies mid-transaction, you risk double-charging customers if retries aren't idempotent, losing transactions if the state isn't externalized, and inconsistent reporting if transactions are partially recorded.

To fix that, payment systems need three key things:

- Externalized transaction state: This means storing all transaction information outside the application, typically in a database, instead of keeping payment details only in the server's memory (which disappears if the server crashes).

- Idempotency keys for all operations: An idempotency key is a unique identifier that lets you safely retry operations without accidentally processing the same transaction twice.

- Distributed tracing to track transactions across services: This is a way to follow a transaction as it moves through different parts of your system.
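To make the idempotency-key idea concrete, here is a small in-memory sketch in Python. The IdempotentProcessor class and the charge_card function used with it are hypothetical names for illustration. Keeping results in a dictionary only protects retries that reach the same instance; in a horizontally scaled service, the key-to-result mapping belongs in your shared database (the externalized state described above), typically enforced with a unique constraint on the key.

```python
import threading
from typing import Any, Callable, Dict


class IdempotentProcessor:
    """Runs each operation at most once per idempotency key (in-memory sketch)."""

    def __init__(self) -> None:
        self._results: Dict[str, Any] = {}
        self._lock = threading.Lock()

    def process(self, key: str, operation: Callable[[], Any]) -> Any:
        # Holding one lock for the whole call stops two concurrent requests with
        # the same key from both running the operation. It also serializes
        # different keys, which is fine for a sketch but not for production.
        with self._lock:
            if key in self._results:
                # Replay the stored result instead of charging again
                return self._results[key]
            # If operation() raises, nothing is stored, so the client can retry
            result = operation()
            self._results[key] = result
            return result
```

A client that times out and retries sends the same key, gets the stored result back, and is not charged a second time.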

Common Payment Bottlenecks During Spikes

Post-mortems of payment systems that failed under load tend to surface the same bottlenecks. These occur most often:

- Transaction lock contention: When multiple instances try to update the same database rows (like account balances or inventory), they queue behind each other's row locks, and those waits cascade into system-wide slowdowns.

- Third-party API rate limits: External payment processors have strict rate limits. One instance might respect those limits, but ten instances calling the same API will hit those limits without coordination.

- Redis/cache saturation: Payment systems rely heavily on caching for fraud checks and customer data. Redis connection pools fill up before CPU limits are hit.

- Timeout cascades: One slow component can trigger a cascade of timeouts across the system. For example, if the authorization service slows down, requests waiting on it pile up, their callers start timing out, and eventually new payment initiations fail too.
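One simple way to coordinate rate limits across instances is to split the processor's limit evenly, so each replica enforces its own share with a token bucket. The sketch below is illustrative; the limit and replica numbers you pass in are assumptions about your setup. Its weakness is that the share is fixed: when the autoscaler changes the replica count you have to recompute it, or move the counter into a shared store such as Redis for exact global limiting.

```python
import threading
import time
from typing import Callable


class TokenBucket:
    """Token bucket that enforces this replica's share of a global rate limit."""

    def __init__(self, global_rate_per_s: float, replicas: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = global_rate_per_s / replicas  # this instance's share, tokens/second
        self.capacity = max(self.rate, 1.0)       # allow bursts of up to ~1 second
        self.tokens = self.capacity
        self.clock = clock
        self.last = clock()
        self._lock = threading.Lock()

    def try_acquire(self) -> bool:
        """Take one token if available; False means queue, back off, or shed the call."""
        with self._lock:
            now = self.clock()
            # Refill in proportion to elapsed time, never beyond capacity
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1.0:
                self.tokens -= 1.0
                return True
            return False
```

For example, TokenBucket(global_rate_per_s=100, replicas=10) lets each of 10 replicas make about 10 processor calls per second, so together they stay within a 100-per-second limit.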

Scaling Out vs. Scaling Up

For payment systems, vertical scaling ("just add more CPU") introduces more problems than it solves: a bigger machine is still a single point of failure and eventually hits a hardware ceiling. Horizontal scaling is the way to go. The following sections provide implementation details for scaling out while addressing the payment-specific challenges above.

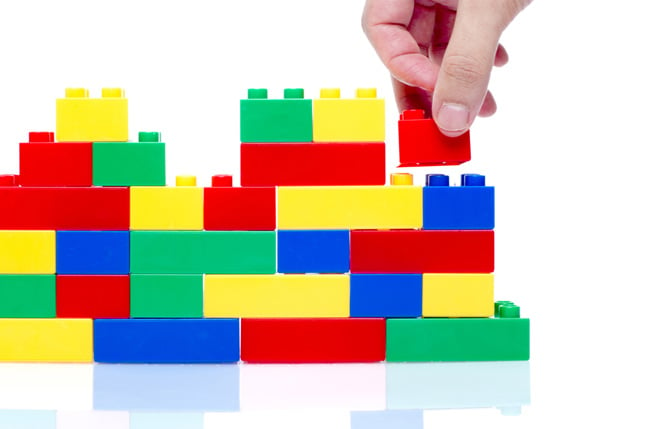

Before jumping into auto-scaling configurations, you need to lay the groundwork. Think of this as building the foundation before adding floors to your building.

Here's what you need to do before you start scaling:

- Containerize your payment services for quick and consistent deployment.

- Externalize any shared state (to databases, caches, etc.).

- Make your operations safely repeatable without side effects (idempotent).

- Configure your load balancer to distribute traffic effectively.

Monitoring and Alerting Setup

You can't improve what you don't measure. Before implementing scaling, set up monitoring for:

- Transaction latency: How long does a payment request take to process?

- Error rates: What percentage of transactions are failing?

- Resource utilization: CPU, memory, connection pools, etc.

- Queue depths: Are requests backing up?

Establish baseline performance during normal operations so you know what “good” looks like.
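A baseline can be as simple as a few latency percentiles plus an error rate measured over a normal period. The sketch below computes them from raw samples using the nearest-rank percentile method; the sample format (latency in milliseconds plus a success flag) is an assumption, and in practice you would usually get these numbers from your monitoring system rather than from application code.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Baseline:
    p50_ms: float
    p95_ms: float
    p99_ms: float
    error_rate: float


def percentile(sorted_values: List[float], p: float) -> float:
    # Nearest-rank: smallest value with at least p% of samples at or below it
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def compute_baseline(samples: Iterable[Tuple[float, bool]]) -> Baseline:
    """samples: (latency_ms, succeeded) pairs collected during normal traffic."""
    samples = list(samples)
    if not samples:
        raise ValueError("need at least one sample")
    latencies = sorted(latency for latency, _ in samples)
    failures = sum(1 for _, ok in samples if not ok)
    return Baseline(
        p50_ms=percentile(latencies, 50),
        p95_ms=percentile(latencies, 95),
        p99_ms=percentile(latencies, 99),
        error_rate=failures / len(samples),
    )
```

Comparing live values against these numbers during a spike tells you whether you are degrading, not just whether you are up.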

For Kubernetes, a combination of Prometheus and Grafana works well. Here’s a minimal Prometheus scrape config that collects metrics from your payment service pods:

```yaml
scrape_configs:
  - job_name: 'payment-service'
    kubernetes_sd_configs:
      - role: pod
    relabel_configs:
      # Keep only pods labeled app=payment-service
      - source_labels: [__meta_kubernetes_pod_label_app]
        action: keep
        regex: payment-service
```

Testing Strategy

Never test in production. Run these tests against a staging environment that mirrors your production setup:

- Load testing: Use Locust or K6 to simulate realistic payment traffic patterns.

- Failover testing: Verify graceful handling when instances are removed.

- Chaos testing: Introduce random failures to verify resilience.

A basic K6 script for payment load testing might look like this:

```javascript
import http from 'k6/http';
import { check, sleep } from 'k6';

export const options = {
  stages: [
    { duration: '1m', target: 50 },  // Ramp up to 50 virtual users
    { duration: '3m', target: 50 },  // Stay at 50 users
    { duration: '1m', target: 200 }, // Spike to 200 users
    { duration: '3m', target: 200 }, // Stay at 200 users
    { duration: '1m', target: 0 },   // Scale back down
  ],
};

export default function () {
  const payload = JSON.stringify({
    amount: 1000,
    currency: 'NGN',
    payment_method: 'card',
    card: {
      number: '4111111111111111', // Well-known test card number; never use real card data
      cvv: '123',
      expiry_month: '01',
      expiry_year: '30', // Future expiry so the test card isn't declined as expired
    },
  });

  const params = {
    headers: {
      'Content-Type': 'application/json',
    },
  };

  const res = http.post('https://staging-api.example.com/payments', payload, params);

  check(res, {
    'status is 200': (r) => r.status === 200,
    'transaction successful': (r) => r.json().status === 'successful',
  });

  // Random think time of 0 to 3 seconds between iterations
  sleep(Math.random() * 3);
}
```

Implementing Auto-scaling for Payment Services

With the groundwork laid, let’s take the next step in making sure our payment service can handle whatever traffic comes its way. We'll focus on Kubernetes, but the principles also apply to AWS ECS.

CPU-Based Auto-scaling Configuration

While many engineers default to CPU-based scaling, it's worth understanding when it really works well for payment services and when it falls short.

CPU-based scaling works best in situations where you're doing a lot of:

- Cryptographic operations (encryption/decryption)

- Fraud detection algorithms

- Signature validation

- Complex fee calculations

Here's a Kubernetes Horizontal Pod Autoscaler (HPA) configuration:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: payment-processor-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: payment-processor
  minReplicas: 3
  maxReplicas: 20
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
  behavior:
    scaleUp:
      stabilizationWindowSeconds: 60
      policies:
        - type: Percent
          value: 100
          periodSeconds: 60
        - type: Pods
          value: 4
          periodSeconds: 60
      selectPolicy: Max
    scaleDown:
      stabilizationWindowSeconds: 300
      policies:
        - type: Percent
          value: 20
          periodSeconds: 60
```

Here are some key configuration parameters to keep in mind:

- Target utilization of 70%: Leaves headroom to absorb a spike while new pods start, while still using resources efficiently. Setting it too high (for example, 90%) means existing pods are already saturated by the time new ones are ready, so performance degrades during scale-up.

- Minimum of three replicas: A production payment service should never drop below three instances, so it can stay highly available across zones.

- Fast scale-up policies: The Percent policy lets the HPA add up to 100% of the current pods every 60 seconds, doubling capacity each minute. The Pods policy allows adding four pods every 60 seconds, which gives a faster minimum rate when the replica count is small. With selectPolicy: Max, the HPA uses whichever policy permits the larger increase.

- Conservative scale-down: You don't want to remove instances that are still processing payments all at once. Limiting scale-down to 20% of pods every 60 seconds removes them gradually, and the five-minute (300-second) stabilization window prevents thrashing when volumes fluctuate.
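To see how these settings interact, here is a simplified Python model of a single HPA decision for the configuration above. It uses the HPA's base formula, desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization), with the default 10% tolerance, then applies the scale-up and scale-down policies and the replica bounds. Treat it as an approximation for reasoning about numbers: the real controller also applies the stabilization windows and measures each policy period against the replica count at the start of that period, and its rounding may differ in edge cases.

```python
import math


def desired_replicas(current: int, cpu_util: float, target_util: float = 70.0,
                     min_replicas: int = 3, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Simplified model of one HPA decision for payment-processor-hpa."""
    ratio = cpu_util / target_util
    # Inside the tolerance band, the HPA leaves the replica count alone
    if abs(ratio - 1.0) <= tolerance:
        return current
    proposed = math.ceil(current * ratio)  # base HPA formula
    if proposed > current:
        # scaleUp: +100% or +4 pods per period; selectPolicy: Max allows the larger
        proposed = min(proposed, max(current * 2, current + 4))
    else:
        # scaleDown: remove at most 20% of pods per period (integer ceiling of 80%)
        proposed = max(proposed, (current * 80 + 99) // 100)
    # minReplicas and maxReplicas always bound the result
    return max(min_replicas, min(max_replicas, proposed))
```

With this model, 3 pods at 140% CPU become 6; 10 pods at 210% CPU would want 30 but are capped at 20 by maxReplicas; and 10 pods at 20% CPU drop only to 8, because at most 20% may be removed per period.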

Memory-Based Scaling Considerations

Memory-based scaling is worth considering if your payment service relies on large in-memory caches or queues:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: payment-processor-memory-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: payment-processor
  minReplicas: 3
  maxReplicas: 20
  metrics:
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80
```

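Memory-based scaling works well when your service:

- Maintains large transaction caches

- Buffers requests or responses

- Uses in-memory queues

If you want to scale on both CPU and memory, don't deploy this HPA alongside the CPU-based one: two HPAs targeting the same Deployment conflict with each other. Instead, list both the cpu and memory resource metrics under metrics in a single HPA. The HPA calculates a desired replica count for each metric and uses the highest.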

Health Checks and Service Discovery

Auto-scaling only works if new instances are properly integrated with your system. This means you need well-designed health checks and service discovery.

Designing Effective Health Checks

Health checks for payment services need special care to prevent routing traffic to instances that aren’t fully ready:

```yaml
livenessProbe:
  httpGet:
    path: /health/liveness
    port: 8080
  initialDelaySeconds: 30
  periodSeconds: 10
  failureThreshold: 3
readinessProbe:
  httpGet:
    path: /health/readiness
    port: 8080
  initialDelaySeconds: 10
  periodSeconds: 5
```

A good readiness endpoint should check for database connectivity, message queue connectivity, third-party payment processor API availability, and required cache access. This makes sure the service is fully operational and ready to process transactions. A good liveness endpoint should focus on internal service health, memory leaks/issues, and deadlocks. This informs service discovery during scaling events so traffic can be routed intelligently to maintain system integrity as instances come and go.

Here’s an example of a health endpoint for payment services:

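```python
from flask import Flask, jsonify

app = Flask(__name__)

# check_db_connection(), check_redis_connection(), check_payment_processor() and
# check_queue_connection() are your own dependency checks; each returns True or False.


@app.route('/health/readiness')
def readiness_check():
    status = {
        'database': check_db_connection(),
        'redis': check_redis_connection(),
        'payment_processor': check_payment_processor(),
        'message_queue': check_queue_connection(),
    }
    # 503 tells Kubernetes to stop routing traffic here until every check passes
    if all(status.values()):
        return jsonify(status), 200
    return jsonify(status), 503
```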
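One risk with a readiness endpoint like this is that a single hung dependency, such as a slow third-party processor, makes the probe itself time out. The sketch below runs the checks in parallel under one shared deadline and treats anything slow or failing as not ready. run_readiness_checks is a hypothetical helper, and it assumes each check is a plain function returning True or False; inside the Flask handler you would call it with a dict such as {'database': check_db_connection, ...}.

```python
import concurrent.futures
from typing import Callable, Dict

# A small shared pool so probes don't create new threads on every request
_CHECK_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=8)


def run_readiness_checks(checks: Dict[str, Callable[[], bool]],
                         timeout_s: float = 1.0) -> Dict[str, bool]:
    """Run dependency checks in parallel; anything slow or failing counts as not ready."""
    futures = {name: _CHECK_POOL.submit(check) for name, check in checks.items()}
    # One shared deadline for all checks, rather than timeout_s per check
    done, _ = concurrent.futures.wait(futures.values(), timeout=timeout_s)
    results: Dict[str, bool] = {}
    for name, future in futures.items():
        if future in done and future.exception() is None:
            results[name] = bool(future.result())
        else:
            # Timed out or raised. A hung check still occupies its worker thread,
            # so each check should also set its own client-level timeout.
            results[name] = False
    return results
```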

Readiness vs. Liveness Probes

Understanding the difference between readiness and liveness probes is key for payment systems. Liveness probes determine if an instance should be restarted, checking for unrecoverable issues like deadlocks or memory leaks while avoiding external dependency checks. Readiness probes determine if an instance should receive traffic by checking all required dependencies and removing an instance from the load balancer if any dependency is unavailable. This ensures your payment system remains stable during scaling events.

For payment processing, failing readiness should remove an instance from the load balancer, but failing liveness should only restart the instance if it’s truly unhealthy. A temporary API outage shouldn’t cause restarts but should prevent new transaction routing.

Service Discovery Patterns

As your payment service scales up and down, other services need to find it. In Kubernetes, this is handled by Services:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: payment-processor
spec:
  selector:
    app: payment-processor
  ports:
    - port: 80
      targetPort: 8080
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 180
```

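The sessionAffinity: ClientIP setting sends requests from the same client IP to the same pod, here with a 180-second affinity timeout, which helps keep the steps of a multi-step payment flow on one instance as much as possible. Treat it as an optimization, not a guarantee. Behind an API gateway or ingress, the client IP that Kubernetes sees is usually the gateway's rather than the customer's, so affinity can concentrate traffic on a few pods. And because transaction state is externalized, any instance should be able to continue a payment flow if its original pod is scaled away.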

Database Scaling Strategies

Your app might scale great, but if your database can’t handle the load, you’ll still fail. Here are some database scaling strategies:

Read Replicas Configuration

For payment systems with heavy read loads (transaction lookups, reporting), read replicas can help:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: payment-processor
spec:
  # Excerpt: the selector, pod labels, image, and other required fields are omitted
  template:
    spec:
      containers:
        - name: payment-processor
          env:
            - name: DB_WRITE_HOST
              value: "payment-db-primary"
            - name: DB_READ_HOST
              value: "payment-db-read"
```

Your app code needs to direct writes to the primary and reads to the replica:

```python
# db_read_pool and db_write_pool are placeholders for connection pools
# configured from DB_READ_HOST and DB_WRITE_HOST respectively.


def get_transaction(transaction_id):
    # Lookups go to the read replica
    return db_read_pool.query(
        "SELECT * FROM transactions WHERE id = %s", (transaction_id,)
    )


def create_transaction(transaction_data):
    # Writes always go to the primary
    return db_write_pool.execute(
        "INSERT INTO transactions (amount, status) VALUES (%s, %s)",
        (transaction_data['amount'], 'pending'),
    )
```
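Read replicas apply writes asynchronously, so a lookup issued right after a write can miss the new row, which matters when a customer checks a payment they just made. One common mitigation is to send reads for recently written transactions to the primary. The sketch below uses hypothetical names and returns "primary" or "replica" so you can map the answer to db_write_pool or db_read_pool. It keeps its pins in process memory, so it only covers reads served by the same instance that did the write; a shared cache would be needed to extend it across instances.

```python
import time
from typing import Callable, Dict


class ReadRouter:
    """Chooses primary or replica for reads; recently written IDs stick to the primary."""

    def __init__(self, pin_seconds: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.pin_seconds = pin_seconds  # should comfortably exceed typical replica lag
        self.clock = clock
        self._last_write: Dict[str, float] = {}

    def record_write(self, transaction_id: str) -> None:
        # Call this after every successful write to the primary
        self._last_write[transaction_id] = self.clock()

    def pool_for_read(self, transaction_id: str) -> str:
        written_at = self._last_write.get(transaction_id)
        if written_at is not None and self.clock() - written_at < self.pin_seconds:
            return "primary"
        # Expired pins no longer matter, so drop them to keep memory bounded
        self._last_write.pop(transaction_id, None)
        return "replica"
```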

Caching Strategies

Not everything in a payment system needs to be a direct database hit. Consider caching:

- Customer information (with short TTLs)

- Product and pricing data

- Configuration settings

- Authentication tokens (with appropriate security)

For Redis-based caching:

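```javascript
const Redis = require('ioredis');

// `db` is assumed to be a node-postgres Pool (or similar) created elsewhere.
const redis = new Redis({
  host: process.env.REDIS_HOST,
  maxRetriesPerRequest: 3,
  retryStrategy(times) {
    // Exponential backoff capped at 2 seconds, plus up to 100 ms of jitter
    return Math.min(50 * 2 ** times, 2000) + Math.random() * 100;
  },
});

async function getTransactionStatus(txId) {
  const key = `tx:status:${txId}`;

  // Try the cache first
  const cachedStatus = await redis.get(key);
  if (cachedStatus) {
    return JSON.parse(cachedStatus);
  }

  // Fall back to the database
  const { rows } = await db.query('SELECT status FROM transactions WHERE id = $1', [txId]);
  if (rows.length === 0) {
    return null; // Don't cache lookups for unknown IDs
  }
  const status = rows[0].status;

  // Cache for 60 seconds
  await redis.set(key, JSON.stringify(status), 'EX', 60);
  return status;
}
```

Because a transaction's status can change within the 60-second TTL (for example, from pending to successful), delete or overwrite the cached key whenever you update the transaction so customers don't see a stale status.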

Load Testing and Validation

Before trusting your auto-scaling in production, validate it thoroughly through load testing.

A good payment load test should have these characteristics:

- Realistic traffic patterns: Include gradual increases, sudden spikes, and sustained plateaus.

- Mixed transaction types: Include various payment methods (card, bank transfer, mobile money).

- Error scenarios: Test declined cards, timeouts, and API failures.

- Sustained duration: Run tests long enough to trigger scaling events.

Using K6, you can define stages to simulate a flash sale:

```javascript
export const options = {
  stages: [
    { duration: '5m', target: 50 },   // Normal traffic
    { duration: '2m', target: 500 },  // Flash sale starts: 10x spike
    { duration: '15m', target: 500 }, // Sustained high traffic
    { duration: '5m', target: 50 },   // Return to normal
  ],
};
```

How to Use Flutterwave to Support Scalable Payment Architecture

If your business handles large transaction volumes during flash sales or high-traffic events, you’ll need infrastructure that can scale fast without losing reliability. You can use Flutterwave to build a payment system that performs under pressure and grows with your business.

Here’s how to apply Flutterwave’s features to solve common scaling problems:

- Use webhooks to scale real-time payment updates without polling.

- Use rate limiting controls to manage spikes without timeouts.

- Adopt a single API to handle cross-border payments without extra overhead.

Use Webhooks to Eliminate Polling Bottlenecks

Polling introduces unnecessary pressure on your servers and database, especially during spikes. You can use Flutterwave’s webhook infrastructure to shift to an event-driven model. Instead of querying for payment updates, your application receives real-time notifications. This reduces your read operations and frees you to scale horizontally without worrying about request coordination or missed status updates.

Use Rate Limiting Strategies to Prevent Timeouts

When scaling payment processing, hitting rate limits can cause cascading failures throughout your system. Two safeguards help when calling Flutterwave's API: set your request timeouts to 25 seconds (below Flutterwave's 28-second window) to prevent hanging connections, and handle 429 responses with automatic retries that back off between attempts.
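A sketch of the 429 handling is below. call_with_retries and its send argument are hypothetical: send stands for one API call made with your 25-second timeout, returning the status code and headers. The sketch honors a Retry-After header if one is present; whether Flutterwave sends it is an assumption to confirm in its documentation. A 429 generally means the request was rejected rather than processed, but pair retries with idempotency keys anyway so a retried payment can never be processed twice.

```python
import random
import time
from typing import Callable, Mapping, Tuple

Response = Tuple[int, Mapping[str, str]]  # (HTTP status code, response headers)


def call_with_retries(send: Callable[[], Response], max_attempts: int = 4,
                      base_delay_s: float = 0.5, max_delay_s: float = 8.0,
                      sleep: Callable[[float], None] = time.sleep) -> Response:
    """Call send(), retrying HTTP 429 responses with capped exponential backoff."""
    attempt = 1
    while True:
        status, headers = send()
        if status != 429 or attempt >= max_attempts:
            return status, headers  # success, non-retryable error, or out of attempts
        retry_after = headers.get("Retry-After")
        if retry_after is not None and retry_after.isdigit():
            # Honor the server's hint (only the delay-in-seconds form is handled here)
            delay = float(retry_after)
        else:
            # Full jitter: random wait up to the capped exponential delay
            delay = random.uniform(0, min(max_delay_s, base_delay_s * 2 ** (attempt - 1)))
        sleep(delay)
        attempt += 1
```

Randomizing the wait matters when many replicas hit the limit at the same moment: without jitter they would all retry in lockstep and trip the limit again.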

Handle Cross-Border Payments with a Single Integration

Payment scaling becomes more complex when dealing with multiple currencies and payment methods. You can simplify cross-border transactions by leveraging Flutterwave's unified API, which allows for:

- Support for multiple currencies and payment methods.

- Cross-border payment collections up to 10 times faster than industry averages.

- Unified API across 30+ markets.

Wrapping up

Horizontal scaling for payment systems comes with its own set of challenges. Focus on the ones that matter most (transaction integrity and database connections) and build on Flutterwave's infrastructure so your payment system inherits its scaling capabilities. That lets you focus on your core business rather than on payment infrastructure.

Ready to build a payment system that scales with your business? Sign up for Flutterwave today and access our developer-friendly APIs, comprehensive documentation, and 24/7 support.
