An LLM for the Raspberry Pi

Microsoft’s latest Phi4 LLM has 14 billion parameters that require about 11 GB of storage. Can you run it on a Raspberry Pi? Get serious. However, the Phi4-mini-reasoning model is a cut-down version with “only” 3.8 billion parameters that requires 3.2 GB. That’s more realistic and, in a recent video, [Gary Explains] tells you how to add this LLM to your Raspberry Pi arsenal.

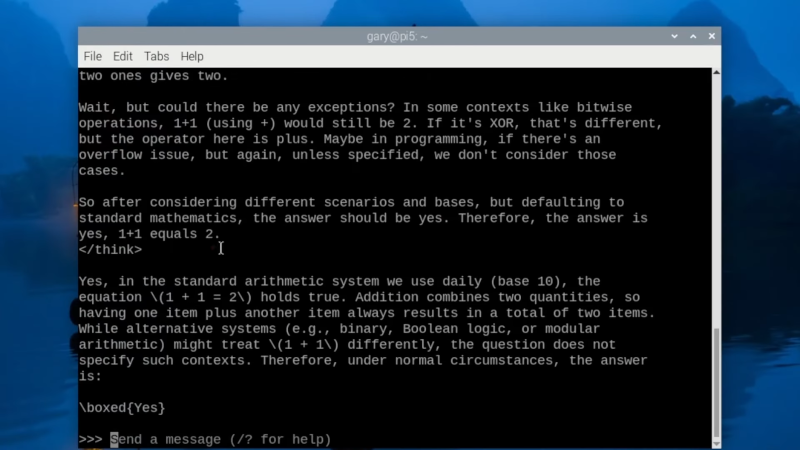

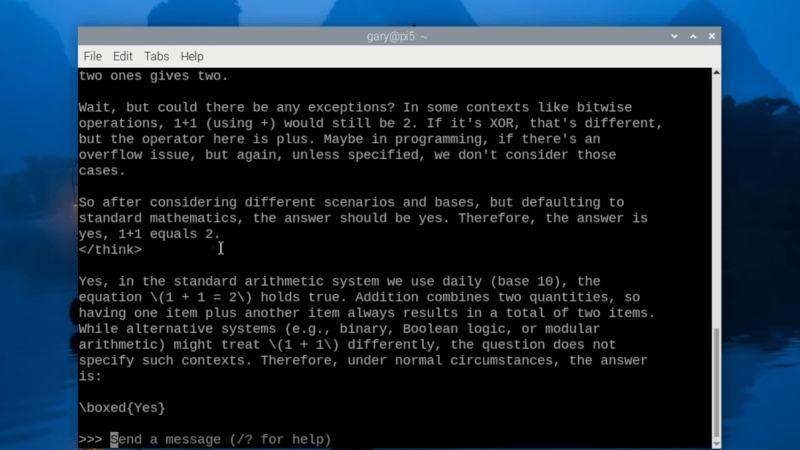

The version [Gary] uses has four-bit quantization and, as you might expect, the performance isn’t going to be stellar. If you aren’t versed in all the LLM lingo, quantization refers to how many bits are used to store each weight, and, in general, the more parameters a model uses, the more things it can figure out.
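As a rough sanity check on the sizes quoted here, this Python sketch compares what bare four-bit weights would need against the stated 3.2 GB and 11 GB figures. The function names are made up for illustration, and it assumes 1 GB means 10^9 bytes.

```python
def nominal_size_gb(params_billion: float, bits_per_weight: int) -> float:
    """Storage for the weights alone if every weight used exactly bits_per_weight bits."""
    # billions of parameters * bits / 8 bits-per-byte = billions of bytes = GB
    return params_billion * bits_per_weight / 8


def effective_bits_per_weight(size_gb: float, params_billion: float) -> float:
    """Average number of bits the stored model spends per parameter."""
    return size_gb * 8 / params_billion


# Figures taken from the article: 3.8 billion parameters in 3.2 GB,
# and 14 billion parameters in about 11 GB.
print(f"{nominal_size_gb(3.8, 4):.1f} GB")               # pure 4-bit weights: 1.9 GB
print(f"{effective_bits_per_weight(3.2, 3.8):.1f} bits")  # Phi4-mini-reasoning: 6.7 bits
print(f"{effective_bits_per_weight(11, 14):.1f} bits")    # Phi4: 6.3 bits
```

The gap between 1.9 GB and 3.2 GB suggests the stored model holds more than bare four-bit weights. The source doesn’t say what accounts for the difference, so treat these numbers as back-of-the-envelope estimates only.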

As a benchmark, [Gary] likes to use what he calls “the Alice question.” In other words, he asks for an answer to this question: “Alice has five brothers and she also has three sisters. How many sisters does Alice’s brother have?” While it probably took you a second to think about it, you almost certainly came up with the correct answer. With this model, a Raspberry Pi can answer it, too.
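If you want the reasoning spelled out, here is a tiny Python sketch. It assumes Alice is female and that all the siblings share the same family. The function name is hypothetical.

```python
def sisters_of_alices_brother(brothers: int, sisters: int) -> int:
    """Count the sisters that any one of Alice's brothers has.

    The number of brothers is a distractor: each brother has all of
    Alice's sisters as his sisters, plus Alice herself.
    """
    return sisters + 1


print(sisters_of_alices_brother(brothers=5, sisters=3))  # prints 4
```

The trap is answering three: it is easy to forget that Alice counts as a sister, too.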

The first run seems fairly speedy, but it is running on a PC with a GPU. He notes that the same question takes about 10 minutes to answer on a Raspberry Pi 5 with 4 cores and 8 GB of RAM.

We aren’t sure what you’d do with a very slow LLM, but it does work. Let us know what you’d use it for, if anything, in the comments.

There are some other small models if you don’t like Phi4.
