CaMeL: A Robust Defense Against LLM Prompt Injection Attacks

Originally published at ssojet Google DeepMind has introduced CaMeL (CApabilities for MachinE Learning) as a defense mechanism against prompt injection attacks, a significant concern for large language models (LLMs). Prompt injection can lead to unauthorized data access or harmful operations by injecting malicious instructions into the model through untrusted data sources. Traditional security measures have proven insufficient, prompting the need for a more robust solution. CaMeL creates a protective layer around LLMs without requiring retraining or modification of existing models. It utilizes a dual-model architecture consisting of a Privileged LLM and a Quarantined LLM. The Privileged LLM manages task orchestration and isolates sensitive operations, while the Quarantined LLM handles potentially harmful data without tool-calling capabilities. This architecture ensures that untrusted inputs do not directly influence decision-making processes. To enforce security, CaMeL assigns metadata or "capabilities" to each data value, defining strict policies on how information can be utilized. A custom Python interpreter enforces these policies, monitoring data provenance and ensuring compliance with control-flow constraints. Initial evaluations using the AgentDojo benchmark indicate that CaMeL successfully mitigated 67% of prompt injection attacks, showcasing significant improvements over traditional defenses. For a detailed exploration of the technical specifications, refer to the original paper Defeating Prompt Injections by Design and further discussions on Simon Willison's insights about CaMeL. Addressing Vulnerabilities in LLMs Prompt injection attacks exploit the concatenation of trusted user prompts with untrusted data, leading to potential breaches of security. This issue has been likened to SQL injection, where malicious instructions can compromise the system. CaMeL's approach minimizes risks by maintaining a clear separation between trusted and untrusted inputs. Simon Willison's proposed Dual LLM pattern is referenced, where the Privileged LLM never interfaces directly with compromised data sources. Instead, it only processes filtered results from the Quarantined LLM, which is designed to handle potentially harmful content. While the Dual LLM pattern provides a security enhancement, CaMeL addresses its flaws by introducing capabilities within a custom interpreter to effectively manage dependencies and security policies. The inclusion of "capabilities" allows for granular control over data manipulation and ensures that sensitive operations are performed only under trusted conditions. For more on the implications of this design, check out further discussions on Simon Willison's blog. Enhancing Security with Fine-Grained Policies The implementation of capabilities in CaMeL allows for precise tracking of data and operations. This method restricts actions based on trust levels assigned to each data source. For instance, while retrieving an email, the system checks whether the derived email address is from a trusted source before proceeding to send any communication. The innovative design not only bolsters security but also offers potential privacy advantages. By allowing users to operate with a less complex Quarantined LLM locally, sensitive data can remain within the user's environment, minimizing exposure to external threats. This approach aligns well with modern needs for enhanced security and privacy in digital interactions. Drawing parallels to the world of IAM (Identity and Access Management), implementing secure SSO (Single Sign-On) and user management is crucial for enterprise clients. SSOJet's API-first platform provides robust features like directory synchronization and supports protocols such as SAML and OIDC, allowing for seamless integration and security. For additional insights into IAM practices, consider exploring SSOJet’s offerings. Conclusion: A Strong Call to Action CaMeL signifies a pivotal advancement in the defense of LLMs against prompt injection attacks. Its architecture not only enhances security but also introduces a new paradigm for handling potentially malicious inputs. As digital ecosystems evolve, the adoption of solutions like CaMeL will be vital for maintaining user trust and ensuring secure interactions. For organizations seeking to enhance their security posture, SSOJet provides an API-first platform tailored for enterprise-level authentication and user management. Explore our services or contact us at ssojet.com to learn how we can help secure your operations with advanced SSO, MFA, and Passkey solutions.

Originally published at ssojet

Google DeepMind has introduced CaMeL (CApabilities for MachinE Learning) as a defense mechanism against prompt injection attacks, a significant concern for large language models (LLMs). Prompt injection can lead to unauthorized data access or harmful operations by injecting malicious instructions into the model through untrusted data sources. Traditional security measures have proven insufficient, prompting the need for a more robust solution.

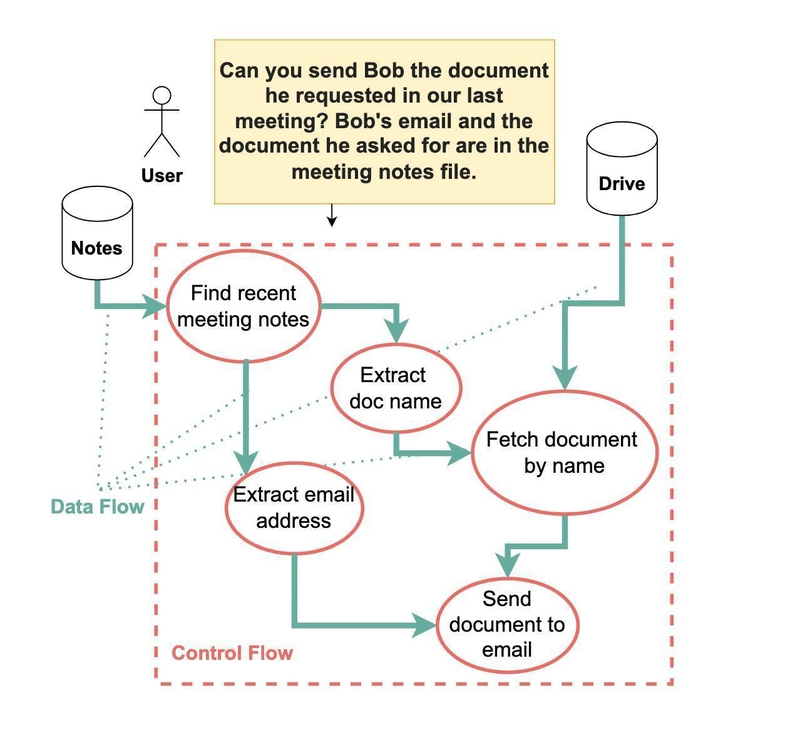

CaMeL creates a protective layer around LLMs without requiring retraining or modification of existing models. It utilizes a dual-model architecture consisting of a Privileged LLM and a Quarantined LLM. The Privileged LLM manages task orchestration and isolates sensitive operations, while the Quarantined LLM handles potentially harmful data without tool-calling capabilities. This architecture ensures that untrusted inputs do not directly influence decision-making processes.

To enforce security, CaMeL assigns metadata or "capabilities" to each data value, defining strict policies on how information can be utilized. A custom Python interpreter enforces these policies, monitoring data provenance and ensuring compliance with control-flow constraints. Initial evaluations using the AgentDojo benchmark indicate that CaMeL successfully mitigated 67% of prompt injection attacks, showcasing significant improvements over traditional defenses.

For a detailed exploration of the technical specifications, refer to the original paper Defeating Prompt Injections by Design and further discussions on Simon Willison's insights about CaMeL.

Addressing Vulnerabilities in LLMs

Prompt injection attacks exploit the concatenation of trusted user prompts with untrusted data, leading to potential breaches of security. This issue has been likened to SQL injection, where malicious instructions can compromise the system.

CaMeL's approach minimizes risks by maintaining a clear separation between trusted and untrusted inputs. Simon Willison's proposed Dual LLM pattern is referenced, where the Privileged LLM never interfaces directly with compromised data sources. Instead, it only processes filtered results from the Quarantined LLM, which is designed to handle potentially harmful content.

While the Dual LLM pattern provides a security enhancement, CaMeL addresses its flaws by introducing capabilities within a custom interpreter to effectively manage dependencies and security policies. The inclusion of "capabilities" allows for granular control over data manipulation and ensures that sensitive operations are performed only under trusted conditions.

For more on the implications of this design, check out further discussions on Simon Willison's blog.

Enhancing Security with Fine-Grained Policies

The implementation of capabilities in CaMeL allows for precise tracking of data and operations. This method restricts actions based on trust levels assigned to each data source. For instance, while retrieving an email, the system checks whether the derived email address is from a trusted source before proceeding to send any communication.

The innovative design not only bolsters security but also offers potential privacy advantages. By allowing users to operate with a less complex Quarantined LLM locally, sensitive data can remain within the user's environment, minimizing exposure to external threats. This approach aligns well with modern needs for enhanced security and privacy in digital interactions.

Drawing parallels to the world of IAM (Identity and Access Management), implementing secure SSO (Single Sign-On) and user management is crucial for enterprise clients. SSOJet's API-first platform provides robust features like directory synchronization and supports protocols such as SAML and OIDC, allowing for seamless integration and security.

For additional insights into IAM practices, consider exploring SSOJet’s offerings.

Conclusion: A Strong Call to Action

CaMeL signifies a pivotal advancement in the defense of LLMs against prompt injection attacks. Its architecture not only enhances security but also introduces a new paradigm for handling potentially malicious inputs. As digital ecosystems evolve, the adoption of solutions like CaMeL will be vital for maintaining user trust and ensuring secure interactions.

For organizations seeking to enhance their security posture, SSOJet provides an API-first platform tailored for enterprise-level authentication and user management. Explore our services or contact us at ssojet.com to learn how we can help secure your operations with advanced SSO, MFA, and Passkey solutions.

![M4 MacBook Air Drops to Just $849 - Act Fast! [Lowest Price Ever]](https://www.iclarified.com/images/news/97140/97140/97140-640.jpg)

![Apple Smart Glasses Not Close to Being Ready as Meta Targets 2025 [Gurman]](https://www.iclarified.com/images/news/97139/97139/97139-640.jpg)

![iPadOS 19 May Introduce Menu Bar, iOS 19 to Support External Displays [Rumor]](https://www.iclarified.com/images/news/97137/97137/97137-640.jpg)

_Olekcii_Mach_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] Koofr Cloud Storage: Lifetime Subscription (1TB) (80% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

.jpg?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)