My Journey Creating a Hate Speech Detection App with Machine Learning

Protecting online conversations from harmful or offensive content is an ongoing challenge for web and app developers. As online communication grows, so do the scale and subtlety of hate speech and harmful language. That's what motivated me to build and share a free online hate speech detection tool, designed to help developers and the wider community apply machine learning to real-world moderation and safety.

Why Build a Hate Speech Detection Tool?

Online spaces are becoming ever more central to our personal, professional, and educational lives. While this offers incredible opportunities, it also brings a persistent spread of hate speech, harassment, and divisive language, even in places that strive to be safe and inclusive. Manual moderation is hard to scale, especially for indie platforms, open-source communities, and the open chat rooms so many of us developers frequent.

Because I care about both AI and social impact, I wanted to build an accessible, privacy-focused platform that uses machine learning to detect hate speech. The idea was to support both moderation of user-generated content and quick self-checks for individuals, all without data leaving the browser.

The Challenge of Hate Speech Online

Hate speech online is complex—not just obvious slurs or insults, but coded language or context-dependent threats that shift over time. Identifying it accurately and at scale means understanding not just words, but intent and context. False positives risk restricting free expression; false negatives mean harmful material slips through.

That tension made the problem appealing as a project: how can a tool balance sensitivity with fairness and still scale to real-world environments?

Machine Learning in Action: Building the Model

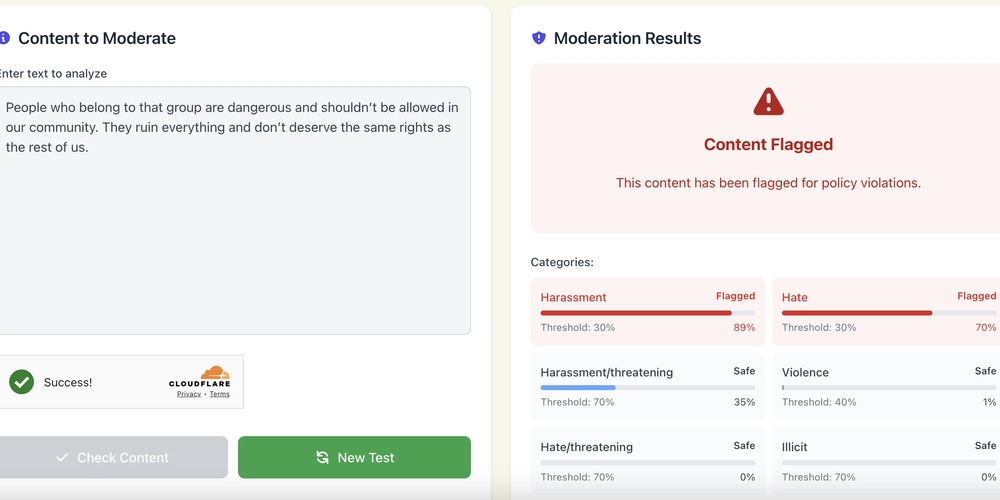

At its core, the tool uses machine learning to detect hate speech. Here’s how the system works:

Data & Preprocessing: I combined public hate speech datasets with balanced samples to build a representative training set. Preprocessing strips extraneous characters, normalizes casing, and tokenizes the text, so the pipeline can handle everything from single-word attacks to subtle phrase patterns.
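As a rough illustration of those cleaning steps, here is a minimal Python sketch. The function name `preprocess` and the exact set of characters it keeps are assumptions for illustration, not the tool's actual code. A transformer-based classifier would normally apply its own subword tokenizer, so the simple word-level split here only demonstrates Unicode normalization, casing normalization, and character stripping.

```python
import re
import unicodedata

# Hypothetical rule: keep word characters, whitespace, and ASCII apostrophes;
# everything else (punctuation runs, symbols, emoji) is replaced by a space.
_DISALLOWED = re.compile(r"[^\w\s']+")


def preprocess(text: str) -> list[str]:
    """Normalize and tokenize raw text (illustrative helper, not the tool's code)."""
    # Fold Unicode compatibility forms, e.g. full-width letters, into plain ones.
    text = unicodedata.normalize("NFKC", text)
    # Normalize casing so "HATE" and "hate" become the same token.
    text = text.casefold()
    # Strip extraneous characters.
    text = _DISALLOWED.sub(" ", text)
    # Word-level tokenization; split() also drops empty strings from extra spaces.
    return text.split()


print(preprocess("  You ARE   so...  WRONG!!! "))  # ['you', 'are', 'so', 'wrong']
```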

Model Choice: The tool relies on OpenAI-based detection, using transformer models flexible enough to capture nuanced expressions. Rather than only checking for specific words, the model also takes sentence structure, tone, and broader context into account.
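The post describes the detector only as "OpenAI-based". Assuming it calls OpenAI's Moderation endpoint, a request could be assembled with nothing but the Python standard library, as in the sketch below. The endpoint URL, the `omni-moderation-latest` model name, and both helper names are assumptions rather than details confirmed by the post. `moderate` performs a real network call and needs a valid API key, while `build_moderation_request` can be inspected offline.

```python
import json
import urllib.request

# Assumed endpoint and model: OpenAI's public Moderation API.
MODERATION_URL = "https://api.openai.com/v1/moderations"
MODERATION_MODEL = "omni-moderation-latest"


def build_moderation_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for the moderation endpoint."""
    body = json.dumps({"model": MODERATION_MODEL, "input": text}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def moderate(text: str, api_key: str, timeout: float = 10.0) -> dict:
    """Send the request and return the first result object from the JSON reply."""
    request = build_moderation_request(text, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    # One result is returned per input; a single string was sent.
    return payload["results"][0]
```

Keeping request construction separate from sending makes the payload easy to check in tests without touching the network.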

Multi-Category Detection: The moderation process checks for hate speech, harassment, explicit or violent content, and self-harm risks. Clear thresholds (which can be tuned for future customization) determine when language crosses into problematic territory.
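A minimal sketch of threshold-based, multi-category flagging follows. It assumes scores between 0 and 1 keyed by category name, which is the shape of the `category_scores` field in OpenAI's moderation response. The threshold values and the `flag_categories` helper are hypothetical placeholders; the post does not publish the values it uses.

```python
# Hypothetical per-category thresholds (placeholders, tune for your community).
DEFAULT_THRESHOLDS = {
    "hate": 0.5,
    "harassment": 0.5,
    "sexual": 0.6,
    "violence": 0.6,
    "self-harm": 0.3,
}


def flag_categories(
    category_scores: dict[str, float],
    thresholds: dict[str, float] = DEFAULT_THRESHOLDS,
) -> dict[str, bool]:
    """Flag each tracked category whose score meets or exceeds its threshold.

    Categories missing from `category_scores` are treated as a score of 0.0.
    The thresholds mapping is only read, never modified.
    """
    return {
        category: category_scores.get(category, 0.0) >= limit
        for category, limit in thresholds.items()
    }
```

Setting a lower threshold for a category, as with `self-harm` here, trades more false positives for fewer missed cases in that category.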

Real-Time Processing: Everything happens instantly—within milliseconds—so moderation can be interactive, immediate, and actionable.

Privacy matters. No text is stored or shared; after moderation, it’s discarded. Users don’t need to sign up or submit any identifiers.

Walkthrough: How the Hate Speech Detection Tool Works

1. Paste your text or enter sample content.
2. Click “Check Content”.
3. The system runs an AI-driven analysis and flags any hate speech, harassment, or other sensitive content, grouped by category.
4. You get instant moderation results: clear, annotated feedback so you can decide how to respond or moderate further.
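The last step, turning raw flags into readable feedback, might look like the sketch below. `format_report` is a hypothetical helper, not the tool's code. It assumes per-category flags and scores like those a thresholding step would produce, and it lists flagged categories from highest to lowest score so the most likely issue comes first.

```python
def format_report(flags: dict[str, bool], scores: dict[str, float]) -> str:
    """Turn per-category flags and scores into short, readable feedback."""
    flagged = [category for category, is_flagged in flags.items() if is_flagged]
    if not flagged:
        return "No problematic content detected."
    lines = ["Flagged categories:"]
    # Highest-scoring categories first so the most likely issue leads.
    for category in sorted(flagged, key=lambda c: scores.get(c, 0.0), reverse=True):
        lines.append(f"- {category}: score {scores.get(category, 0.0):.2f}")
    return "\n".join(lines)


print(format_report({"hate": True, "harassment": False}, {"hate": 0.82, "harassment": 0.41}))
```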

User experience is kept simple, letting community managers, developers, teachers, or concerned individuals check content in seconds.

Ready to see it in action? Try it out: ➡️ Hate Speech Detection tool

Lessons Learned & Development Insights

Building this project surfaced a few important technical and ethical lessons that might resonate with fellow devs:

Balancing accuracy and fairness: Preventing overzealous moderation (false positives) was as important as detecting truly harmful language.

Context sensitivity: Models wrestle with sarcasm and coded speech; real-world deployment always needs a fallback to human judgment for edge cases.

User privacy: Handling moderation without storing or logging data delivers meaningful privacy while retaining tool effectiveness.

UI/UX: Tech is only as impactful as it is accessible. Friction-free design means a teacher, forum admin, or parent can use the service right away.
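The accuracy-fairness and context-sensitivity lessons can be expressed as a simple routing rule: block automatically only when the model is very confident, and send the uncertain middle band to a human moderator. This is a hypothetical sketch; `triage`, `Decision`, and the two cut-off values are illustrative placeholders to tune against labelled examples from your own community.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


def triage(score: float, review_at: float = 0.4, block_at: float = 0.9) -> Decision:
    """Route a single category score to an action.

    Scores in the uncertain band [review_at, block_at) go to a human instead of
    being auto-blocked, limiting false positives on sarcasm or quoted speech.
    """
    if not 0.0 <= review_at <= block_at <= 1.0:
        raise ValueError("expected 0 <= review_at <= block_at <= 1")
    if score >= block_at:
        return Decision.BLOCK
    if score >= review_at:
        return Decision.HUMAN_REVIEW
    return Decision.ALLOW
```

Widening the review band catches more edge cases at the cost of more moderator workload; narrowing it does the opposite.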

Try the Tool—And Share Your Feedback

If you moderate an open-source platform, build chat features, or just care about keeping spaces healthy, I invite you to try my AI-powered hate speech detection tool. Development is ongoing, so community feedback will shape its next stages. ML developers in particular are welcome to fork ideas, share improvements, or raise issues about training data sources.
