Understanding Docker Image Layers: Optimizing Build Performance

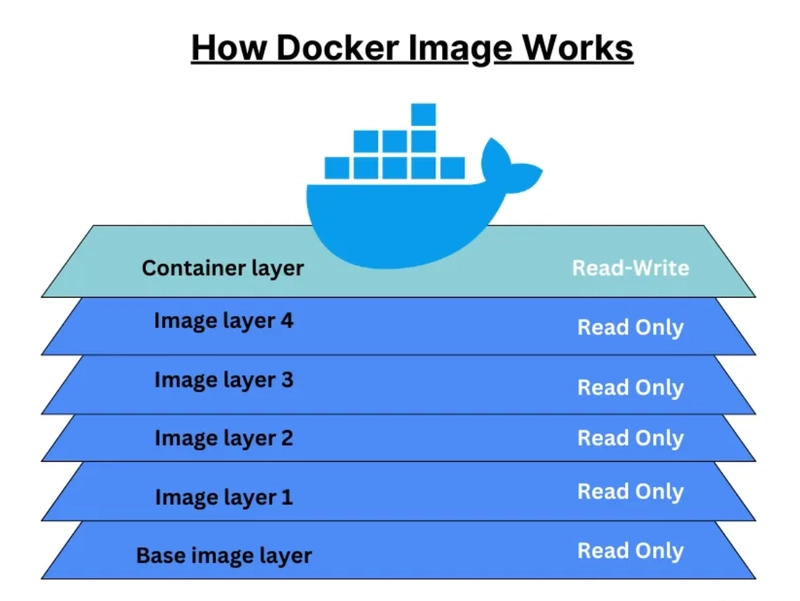

Docker images are layer-based, meaning each instruction in a Dockerfile creates a new layer. This structure allows Docker to efficiently cache unchanged layers, significantly speeding up builds. Understanding how Docker handles image layers can help optimize builds, prevent unnecessary re-executions, and make development more efficient.

What is an Image Layer?

A Docker image consists of multiple layers:

- Each instruction (FROM, RUN, COPY, etc.) in a Dockerfile creates a separate layer.

- Layers are cached, meaning if an instruction remains unchanged, Docker reuses the existing layer instead of rebuilding it.

- If a layer is modified, all subsequent layers are re-executed.
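The cascade described in the last bullet can be pictured with a toy model. The Python sketch below is an illustration under stated assumptions, not Docker's actual implementation: it gives each step a cache key derived from the previous step's key plus the instruction text, so changing one instruction changes every key after it. The function names layer_keys and first_rebuilt_step are hypothetical.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction; each key also hashes its parent key."""
    keys = []
    parent = ""
    for instruction in instructions:
        key = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(key)
        parent = key  # the next step's key depends on this one
    return keys


def first_rebuilt_step(old, new):
    """Index of the first step in `new` that misses the cache, or None if all hit."""
    pairs = zip(layer_keys(old), layer_keys(new))
    for index, (old_key, new_key) in enumerate(pairs):
        if old_key != new_key:
            return index
    # Extra trailing steps in `new` have no cached counterpart at all.
    return len(old) if len(new) > len(old) else None


before = ["FROM node", "WORKDIR /app", "RUN npm install", "EXPOSE 80"]
after = ["FROM node", "WORKDIR /src", "RUN npm install", "EXPOSE 80"]

print(first_rebuilt_step(before, before))  # None: nothing changed, fully cached
print(first_rebuilt_step(before, after))   # 1: WORKDIR changed, steps 1 to 3 rerun
```

Even though RUN npm install and EXPOSE 80 are textually identical in both lists, their keys differ in the second build because they inherit the changed parent key.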

Example: Running Docker Build Multiple Times

If you run:

docker build .

twice, the second time will execute much faster because Docker retrieves cached layers instead of re-running instructions.

But if you change the source code and rebuild:

docker build .

You'll notice that all dependencies are reinstalled, even though they haven't changed!

This happens because subsequent layers are invalidated when any layer before them changes.

Common Inefficiencies in Layer Usage

Consider this Dockerfile:

FROM node

WORKDIR /app

# Copies everything, including package.json

COPY . /app

# Installs dependencies

RUN npm install

EXPOSE 80

CMD ["node", "server.js"]

Issue:

- If any file in the source code changes, Docker invalidates the COPY layer.

- Since dependencies (npm install) are installed after COPY, the RUN npm install step is re-executed, wasting time!

Optimized Dockerfile for Faster Builds

Instead, restructure the Dockerfile to separate dependencies from source code:

FROM node

WORKDIR /app

# First, copy only package.json to leverage caching

COPY package.json /app

# Install dependencies

RUN npm install

# Copy remaining source code separately

COPY . /app

EXPOSE 80

CMD ["node", "server.js"]

Why is this better?

✅ Improved Layer Caching

- npm install happens before copying source code, so dependencies remain cached unless package.json changes.

- If only the source code changes, Docker reuses cached dependencies, avoiding unnecessary re-installation.

✅ Faster Builds

- Changes to source code won’t trigger redundant dependency installations.

- Dependencies are only rebuilt when package.json changes.
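To make the difference between the two Dockerfiles concrete, here is a small Python sketch that compares which steps would rerun after editing only server.js. It is a simplified model, not Docker's real cache logic: it assumes a step's cache key is a hash of its parent key, its instruction text, and the contents of any files it copies. The helper names (build_keys, rerun) and the sample file contents are hypothetical, and EXPOSE and CMD are left out for brevity.

```python
import hashlib


def digest(text):
    return hashlib.sha256(text.encode()).hexdigest()


def build_keys(steps, files):
    """Cache key per step: parent key + instruction + contents of copied files."""
    keys, parent = [], ""
    for instruction, copied in steps:
        # "*" stands for `COPY .`: every file in the build context feeds the key.
        names = sorted(files) if copied == "*" else copied
        content = "".join(name + files[name] for name in names)
        parent = digest(parent + instruction + content)
        keys.append(parent)
    return keys


def rerun(steps, old_files, new_files):
    """Instructions whose cache key changed between two builds."""
    old_keys = build_keys(steps, old_files)
    new_keys = build_keys(steps, new_files)
    return [step[0] for step, a, b in zip(steps, old_keys, new_keys) if a != b]


naive = [
    ("FROM node", []),
    ("WORKDIR /app", []),
    ("COPY . /app", "*"),
    ("RUN npm install", []),
]
optimized = [
    ("FROM node", []),
    ("WORKDIR /app", []),
    ("COPY package.json /app", ["package.json"]),
    ("RUN npm install", []),
    ("COPY . /app", "*"),
]

old = {"package.json": '{"dependencies": {}}', "server.js": "console.log('v1')"}
new = {**old, "server.js": "console.log('v2')"}  # only the source code changes

print(rerun(naive, old, new))      # ['COPY . /app', 'RUN npm install']
print(rerun(optimized, old, new))  # ['COPY . /app']
```

In this model the optimized ordering reruns only the final COPY, while the naive ordering drags npm install along with every source edit.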

Image vs Container: Key Differences

- Image: Contains your application and dependencies.

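- Container: A running instance of an image. Docker adds a thin writable layer on top of the image's read-only layers, so each container runs independently and its changes do not alter the image.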
