Weekly Insights: AI, Cloud, and Quantum Advances (Apr 27 - May 3, 2025)

Last week, breakthroughs surged across artificial intelligence, cloud computing, and quantum technologies, reshaping how developers build, deploy, and envision the future of computing. From Meta’s ambitious bid to democratize powerful AI tools to Google’s massive bet on multi-cloud cybersecurity and IonQ’s groundbreaking quantum network, we’ve seen an electrifying shift: technologies once thought futuristic are rapidly becoming today’s developer toolkits. Here’s why these advancements matter and why tech professionals should pay close attention.

AI: Advancements in Models, Tools, and Funding

Meta’s LlamaCon empowers open-source AI

Meta hosted its first-ever LlamaCon developer conference (April 29th) dedicated to its Llama AI models. Meta announced the Llama API: a customizable, “no-lock-in” API (now in preview) that lets developers integrate Llama models with just one line of code.

The API features one-click API key creation, an interactive playground for trying out models, and compatibility with OpenAI’s SDK, making it easy to plug Llama into existing apps. This emphasis on developer experience (speedy integration and full model flexibility) signals Meta’s commitment to an open AI ecosystem. Meta also revealed that Llama models have been downloaded over 1.2 billion times by the community and even launched a standalone “Meta AI” assistant app for end-users.
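To make the “compatibility with OpenAI’s SDK” point concrete, here is a minimal sketch of what such compatibility usually implies: the service accepts the same chat-completions request shape, so an existing client mostly needs a different base URL and key. The base URL and model name below are placeholders invented for illustration, not values from Meta’s documentation, and the request is only assembled as data, never sent.

```python
import json

# Hypothetical placeholders for illustration; not taken from Meta's documentation.
BASE_URL = "https://llama-api.example.invalid/v1"
MODEL = "example-llama-model"


def build_chat_request(prompt: str, api_key: str) -> dict:
    """Assemble an OpenAI-style chat-completions HTTP request as plain data."""
    return {
        "method": "POST",
        "url": f"{BASE_URL}/chat/completions",
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        # The body follows the chat-completions shape: a model name plus a
        # list of role/content messages.
        "body": json.dumps({
            "model": MODEL,
            "messages": [{"role": "user", "content": prompt}],
        }),
    }


request = build_chat_request("Summarize LlamaCon in one sentence.", "YOUR_API_KEY")
print(request["url"])
```

If the compatibility claim holds as described, swapping providers would come down to changing `BASE_URL`, `MODEL`, and the key, which is the practical meaning of “no lock-in” for application code.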

These moves lower barriers to experimenting with state-of-the-art models (avoiding infrastructure headaches and vendor lock-in) and foster a vibrant open-source AI community. Essentially, Meta is trying to build a “real developer ecosystem” around Llama (complete with platforms, tools, and community support) rather than just dropping model weights on the internet. This could accelerate innovation by enabling developers to customize and deploy advanced AI models in their own products with greater ease and without dependency on a single cloud provider.

OpenAI adds shopping savvy to ChatGPT

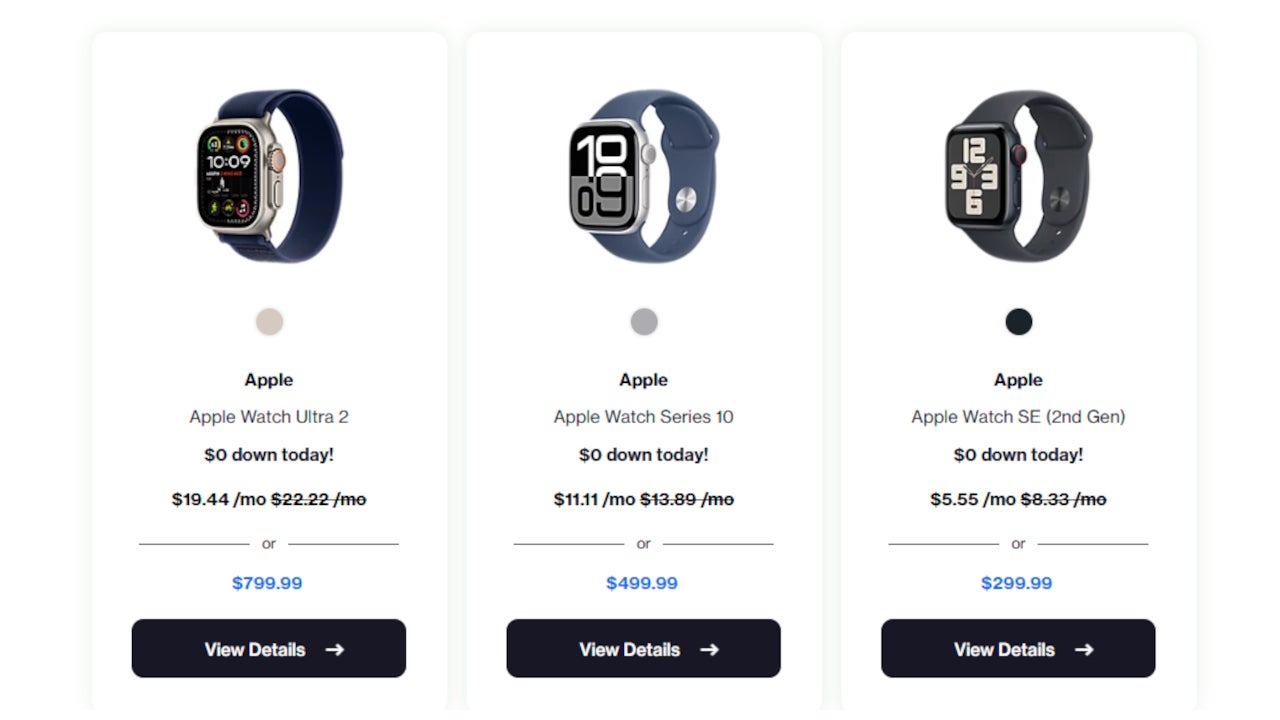

OpenAI rolled out a significant update that enhances ChatGPT’s web browsing capabilities for online shopping assistance. Announced April 28, the update enables ChatGPT to provide personalized product recommendations complete with images, reviews, and direct purchase links when users ask for shopping advice.

OpenAI is not serving ads or affiliate links in these results; the recommendations are purely based on user queries and third-party product metadata (price, descriptions, reviews) rather than paid placement. This feature positions ChatGPT as a user-centric alternative to traditional search engines, which often prioritize advertising.

This development showcases an AI system seamlessly integrating with live web data and structured results for a practical use case (personal shopping assistant). It also hints at new opportunities for AI-driven features that combine natural language understanding with domain-specific data. The fact that ChatGPT handled over 1 billion web searches in the past week underscores the demand for such AI-enhanced search experiences. In practical terms, this update could inspire developers to incorporate similar AI-driven recommendation or search features in their own applications, improving user experience by delivering context-aware results without invasive ads, and pushing the envelope in the AI-as-search trend that directly challenges the status quo of web search.

Microsoft to host Musk’s Grok model on Azure

In a notable partnership, Microsoft announced plans to host Elon Musk’s upcoming “Grok” AI model on Azure. (Musk’s new AI startup, xAI, has been developing Grok as a competitor in the large language model space.) This Azure-xAI collaboration is striking because Microsoft is already deeply invested in OpenAI, yet it’s extending support to another AI venture. The deal suggests that Azure aims to be a neutral, go-to cloud platform for any cutting-edge AI models, not just OpenAI’s: a sign of how cloud providers are competing to attract top AI workloads.

For developers and AI startups, Microsoft’s cloud infrastructure backing means greater access to scalable compute power for training and deploying advanced models. It also implies more choice of AI models available as hosted services on Azure. In the bigger picture, this move underscores the convergence of AI and cloud. Cloud platforms are racing to offer the best hardware, GPUs, and tools to attract leading AI projects, which in turn drive developer adoption. Consequently, developers can expect improved performance, easier AI model deployment, and potentially more interoperable frameworks. In short, Azure securing Grok’s deployment is exciting because it fosters a more open AI marketplace where developers might soon access multiple high-end AI models (OpenAI, Meta, xAI, etc.) under one roof, picking the best tool for their use case.

AI meets enterprise automation via UiPath

The week also saw AI making inroads into enterprise software via UiPath’s new AI-powered automation platform. UiPath, a leader in robotic process automation (RPA), launched “UiPath Maestro” with built-in AI agents, aiming to blend advanced AI into RPA workflows. This means the bots that automate repetitive tasks can now incorporate AI-driven decision-making, computer vision, and natural language understanding to handle more complex and unstructured tasks.

For example, an AI agent might observe how human employees handle exceptions or interpret documents, and then replicate those actions autonomously. This promises to supercharge developer productivity in enterprise settings: routine processes (from invoice processing to customer support workflows) could become self-driving to a greater extent, freeing developers and IT teams from writing brittle rules for every edge case.

UiPath’s integration points to improved performance and scalability of automation. It brings advanced AI capabilities (like language understanding) directly into the tools that many businesses use daily, potentially enabling new use cases (e.g. automated customer email triage or intelligent document processing) with minimal additional development effort. The goal is bots that can adapt to new scenarios using AI, rather than breaking when something unexpected happens. However, industry experts have urged caution, voicing skepticism about the ROI and scalability of such AI-RPA fusions. It’s a reminder that while the technology is exciting, enterprises will need best practices to ensure these AI-enhanced workflows are reliable and cost-effective at scale.

Thinking Machines’ record AI funding

The AI boom continued to attract enormous investment, signalling optimism for new AI tools and platforms. Notably, Mira Murati, the former CTO of OpenAI, made headlines as her new startup Thinking Machines Lab is reportedly seeking a $2 billion seed funding round. (For perspective, that scale is usually seen in late-stage investments, not seed rounds.) If secured, this would value the fledgling company at over $10 billion.

Thinking Machines, founded by a cadre of OpenAI veterans (including researchers who helped build ChatGPT and DALL-E), aims to develop next-generation multimodal AI models with advanced reasoning capabilities. Such a massive war chest for a startup underscores the huge expectations around AI, with investors betting that delivering more powerful and general AI systems will unlock transformative new applications (and markets).

This trend means a proliferation of AI platforms and services in the near future, beyond the familiar big-tech offerings. A well-funded startup like Murati’s could, for example, produce a new model or developer framework that becomes part of a programmer’s toolkit. In addition, competition from richly funded newcomers pushes incumbents (Google, OpenAI, Meta, etc.) to accelerate their own innovations, which we’re already seeing in the rapid rollout of model improvements and APIs. In short, the flood of capital into AI (venture funding in AI startups exceeded $73 billion in 2025’s first months, globally) will likely yield more choices and better tools for developers, from specialized models tuned to industries, to improved AI reasoning and reliability that make building on AI easier and more robust.

Evolving Cloud Landscape

Google’s $32B Wiz acquisition

Google Cloud signed a definitive agreement to acquire Wiz, Inc. for $32 billion, one of the largest cybersecurity acquisitions in history. Wiz is a fast-growing cloud security startup known for its platform that secures workloads across AWS, Azure, and Google Cloud environments. By connecting to all major clouds and even on-prem code, Wiz provides a unified view of security risks in cloud applications. Google’s investment here is a strategic push to “accelerate improved cloud security and multicloud capabilities in the AI era.” In other words, Google Cloud is doubling down on tools that let customers run applications across multiple clouds with strong, automated security, something increasingly important as companies avoid single-vendor lock-in.

For developers and DevOps teams, this promises easier management of security across hybrid and multi-cloud deployments. Google + Wiz will aim to “vastly improve how security is designed, operated and automated” for cloud apps, including lowering the cost and complexity of implementing defences.

The deal also highlights how AI and cloud are intersecting: Wiz’s tools will benefit from Google’s AI expertise to detect threats and misconfigurations, and one stated goal is to protect against new threats emerging due to AI. In practical terms, developers might soon see more built-in security scanning and automated remediation in Google Cloud (and possibly as standalone tools) that work across their entire stack. The acquisition also underscores a trend: as cloud providers compete, they’re expanding beyond raw infrastructure into value-add services (like security, data analytics, AI APIs), effectively giving developers more integrated capabilities out-of-the-box. Bottom line: expect more secure-by-design cloud platforms, where much of the heavy security lifting is handled by the platform, enabling devs to focus on building features rather than fighting exploits.

IBM & BNP Paribas forge a 10-year cloud+AI partnership

In the enterprise cloud arena, IBM renewed and expanded its cloud partnership with European banking giant BNP Paribas for another 10 years. Announced April 29, this partnership will see BNP Paribas dedicate a new area of its own data centers for IBM Cloud, extending the private/dedicated IBM Cloud infrastructure it has hosted since 2019. The aim is to bolster the bank’s operational resilience and compliance: the setup is designed for uninterrupted continuity of critical banking services (like payments), with full control over data security to meet strict European regulations (notably the EU’s Digital Operational Resilience Act).

What’s exciting is that beyond just traditional hosting, generative AI is a key focus of this collaboration: the bank will leverage GPUs on IBM Cloud to develop AI models and applications securely within its regulated environment. Essentially, BNP Paribas wants to adopt AI (for things like risk analysis, customer service, fraud detection) but in a way that maintains compliance and trust. IBM’s solution gives them cloud scalability and advanced hardware on-premises, plus likely access to IBM’s AI software stack, all under the bank’s control.

For developers in finance and other regulated industries, this is an encouraging sign: cloud providers are tailoring offerings to overcome compliance hurdles, meaning you can use modern cloud tools (containers, serverless, AI services) and meet governance requirements. This deal exemplifies how multi-cloud strategies are being adopted by enterprises: BNP will use IBM Cloud alongside other providers, choosing the right tool for each workload. The implication for devs is more freedom to innovate (e.g. deploying a new microservice with AI capabilities) without being blocked by IT policy, because the cloud infrastructure itself is being engineered to satisfy those policies.

Partnerships like this often lead to new solutions (e.g. finance-specific cloud services, better encryption or auditing features) that eventually become available to other customers. In short, IBM’s win here highlights the maturing of cloud for mission-critical, AI-driven workloads in banking, a trend likely to expand to healthcare, government, and other sensitive sectors.

Cloud giants’ growth face-off – Azure surges, AWS steady

The latest earnings reports underscored an ongoing shift in the cloud provider landscape. Microsoft Azure posted impressive growth (approximately 33% year-over-year increase in revenue for its cloud services), significantly outpacing AWS’s 17% YoY growth in the same quarter. (Google Cloud also grew around 28% YoY, above the market average.)
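To put those growth rates in perspective, here is a rough back-of-the-envelope sketch. It assumes each year-over-year rate stays constant, which is a strong simplification that real cloud businesses will not follow, and asks how long revenue would take to double at that pace, using the standard formula ln 2 / ln(1 + g).

```python
import math


def doubling_time_years(yoy_growth: float) -> float:
    """Years for revenue to double if the yearly growth rate stayed fixed."""
    return math.log(2) / math.log(1 + yoy_growth)


# Approximate growth rates quoted above, expressed as fractions.
rates = {"Azure": 0.33, "Google Cloud": 0.28, "AWS": 0.17}

for provider, growth in rates.items():
    print(f"{provider}: about {doubling_time_years(growth):.1f} years to double")
# Azure: about 2.4 years to double
# Google Cloud: about 2.8 years to double
# AWS: about 4.4 years to double
```

The figures say nothing about absolute revenue, where AWS still leads; they only show how quickly a gap in growth rate compounds.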

While AWS remains the largest cloud provider by market share, the faster growth of Azure (and to an extent, Google) indicates that many organizations are spreading out their cloud investments, often motivated by Azure’s strengths in enterprise integration and the infusion of AI services. Microsoft’s CEO Satya Nadella highlighted that they are “infusing AI across every layer” of the tech stack: Azure’s OpenAI Service and other AI offerings have been a big draw, potentially contributing to that surge in usage.

For developers and CTOs, this intense competition is largely positive. For one, it’s driving a rapid rollout of new features. We’re seeing cloud vendors constantly one-upping each other with new capabilities (from AI/ML toolkits to database improvements to developer experience enhancements), and the surge in demand for GPU instances and AI platforms is being met with expanded cloud offerings for AI training and inference. Second, competitive pressure helps on pricing and flexibility (e.g., discounts, free tiers, and favourable licensing to attract startups and projects). Notably, Amazon’s slight slowdown has prompted it to emphasize efficiency and price-performance (as evidenced by AWS’s cost-optimization tools and new Graviton3 instances), whereas Microsoft and Google are touting high-end services that deliver more value per dollar (like advanced analytics, AI APIs, etc.). Another implication of this multi-cloud momentum for developers is the rise of interoperability tools such as Kubernetes and Terraform, as companies demand the freedom to move workloads.

The bottom line is that the cloud “big three” are in a heated race, and developers stand to benefit from better services: whether it’s more global regions to deploy in, improved performance (e.g. faster networks, specialized silicon), or scalability features that simplify building globally distributed applications. The numbers this quarter suggest that whoever delivers the best blend of performance and developer-friendly features (especially around emerging needs like AI) will win market share, so we can expect all providers to keep innovating aggressively.

Quantum: Bridging Theory and Implementation

IonQ & EPB create a first-of-its-kind Quantum Hub in the U.S.

In a milestone for real-world quantum adoption, IonQ announced a $22 million deal with EPB of Chattanooga (Tennessee) to establish America’s first quantum computing and networking hub. EPB is a municipal utility known for its citywide fibre-optic network, and now that fibre will underpin a quantum network connecting a state-of-the-art IonQ Forte Enterprise quantum computer at the new EPB Quantum Center. This means Chattanooga will host a dedicated quantum datacenter + quantum communication network available for commercial and research use. The hub will allow businesses, startups, and researchers to experiment with quantum computing on-site (rather than via the cloud only) and leverage a quantum network for ultra-secure communications (quantum key distribution) across the city.
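For readers new to quantum key distribution, the toy simulation below sketches the key-sifting step of BB84, the textbook QKD protocol. The announcement does not say which protocol the Chattanooga network uses, so BB84 is swapped in here purely as a familiar example. The simulation is entirely classical and assumes an ideal channel with no noise and no eavesdropper.

```python
import random


def bb84_sift(n_bits: int, seed: int = 0):
    """Simulate BB84 sifting on an ideal channel and return both parties' keys."""
    rng = random.Random(seed)

    # Alice picks random bits and a random encoding basis for each one.
    alice_bits = [rng.randint(0, 1) for _ in range(n_bits)]
    alice_bases = [rng.choice("+x") for _ in range(n_bits)]

    # Bob measures each incoming qubit in a randomly chosen basis.
    bob_bases = [rng.choice("+x") for _ in range(n_bits)]

    # Matching basis: Bob reads Alice's bit. Mismatched basis: a coin flip.
    bob_bits = [
        bit if a_basis == b_basis else rng.randint(0, 1)
        for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases)
    ]

    # Bases are compared publicly; only positions where they agree are kept.
    keep = [i for i in range(n_bits) if alice_bases[i] == bob_bases[i]]
    alice_key = [alice_bits[i] for i in keep]
    bob_key = [bob_bits[i] for i in keep]
    return alice_key, bob_key


alice_key, bob_key = bb84_sift(256, seed=1)
print(len(alice_key), alice_key == bob_key)
```

On average about half of the transmitted bits survive sifting. In a real deployment the two parties would also compare a sample of the key to estimate the error rate, since an eavesdropper measuring in the wrong basis introduces detectable errors.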

Importantly, IonQ and EPB are also investing in developing a quantum-ready workforce: IonQ will open an office there and provide training, while both partners will work with local institutions to educate developers in quantum programming. They’re even collaborating on quantum algorithms for optimizing the energy grid, aligning with EPB’s utility focus.

This hub will bring quantum tech out of the lab and into a community, essentially creating a living testbed for quantum applications. Developers in the region (and via remote collaborations) will get hands-on experience with a 36-qubit trapped-ion quantum computer and a live quantum network, something previously only accessible in select research labs. The implications are significant: we’ll learn how quantum systems perform in a sustained, real-world environment, and uncover practical use cases (and challenges) of integrating quantum computing with classical infrastructure (for example, hooking the quantum computer into cloud workflows or using it for specific optimization tasks in tandem with EPB’s classical supercomputing resources).

For the broader tech ecosystem, this initiative demonstrates a model that other cities or companies might follow, combining regional strengths (like Chattanooga’s fibre network) with quantum tech to create innovation hubs. It’s a step toward making quantum computing tangible for developers, who can start developing quantum algorithms for real problems (energy distribution, material science, finance, etc.) in a semi-production setting, gaining skills for the coming quantum era.

Cisco’s vision: networked quantum data centres for scalable QaaS

On the cutting-edge infrastructure front, Cisco shared details of its ambitious plans to enable quantum computing as a cloud service by networking many quantum processors. At a recent Quantum Summit, Cisco’s head of quantum research, Dr. Reza Nejabati, argued that trying to build a single mega–quantum computer with millions of qubits is not practical in the near term; instead, he proposed an approach where smaller quantum computers are interconnected in a data centre via high-speed quantum networks. Cisco introduced QFabric, a specialized quantum network using standard fibre-optic infrastructure to distribute entangled photons between quantum nodes. This would allow multiple quantum computers to function as one large virtual quantum machine, coordinating via entanglement and classical sync, much like distributed computing in classical cloud, but for qubits.

They are also developing Quantum Orchestra software to orchestrate entanglement routing and resource allocation across this network. If realized, this approach could make scaling quantum power more modular: data centers could add quantum “tiles” and link them, rather than waiting for leaps in single-chip qubit counts.

For developers, the prospect of cloud-based quantum computing that scales like cloud VMs is thrilling: it means that down the road one could request quantum computing time with certain specs (a couple hundred qubits, a particular entanglement topology), much as we request GPU instances today.
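As a thought experiment only, the modular idea can be sketched as a resource-allocation problem: given a pool of networked quantum nodes, pick the fewest nodes whose combined qubits cover a request. Everything here is hypothetical, including the `QuantumNode` type and the `allocate` function; it does not reflect Cisco’s software, and it ignores the real overhead of entangling qubits across nodes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuantumNode:
    """A hypothetical networked quantum processor ("tile")."""
    name: str
    qubits: int


def allocate(nodes, requested_qubits):
    """Greedily pick the largest nodes until the requested qubits are covered."""
    chosen, total = [], 0
    for node in sorted(nodes, key=lambda n: n.qubits, reverse=True):
        if total >= requested_qubits:
            break
        chosen.append(node)
        total += node.qubits
    if total < requested_qubits:
        raise ValueError("not enough networked qubits for this request")
    return chosen


# Made-up pool of nodes with made-up capacities.
pool = [QuantumNode("a", 36), QuantumNode("b", 64), QuantumNode("c", 100)]
print([node.name for node in allocate(pool, 150)])  # ['c', 'b']
```

Taking the largest nodes first minimizes the number of nodes involved, which in a real system would matter because every extra link between nodes costs entanglement resources.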

Cisco’s vision also emphasizes heterogeneity and flexibility: in their scenario, different types of quantum processors (superconducting, photonic, trapped-ion, etc.) could be connected as needed, and developers could tap into the strengths of each for different tasks. Moreover, security is a built-in benefit: such a network would inherently support quantum key distribution for ultra-secure communication between nodes and clients. While Cisco’s plans are forward-looking (much of this work is still in simulation and R&D), they underscore a key trend that has shown up several times through this article: massive convergence with the cloud. It hints that the future of cloud computing might include quantum resources as first-class citizens, orchestrated alongside classical compute.

Keeping an eye on efforts like QFabric is worthwhile, as they will shape the APIs and frameworks with which developers might interact with quantum computers at scale (perhaps via extensions to cloud SDKs or new quantum network programming models). In short, Cisco is preparing the groundwork so that once quantum hardware is more mature, it can be deployed akin to cloud infrastructure (scalable, shared, and accessible), bringing quantum capabilities to developers worldwide without each needing a PhD in quantum physics.

Proof of new quantum advantage – a breakthrough in research

A notable scientific development last week provided a fresh example of a provable quantum speed-up, offering guidance on useful problems quantum computers can tackle. A Caltech-led research team (with collaborators from the AWS Quantum Computing Center) reported in Nature Physics a quantum algorithm that can efficiently find the lowest-energy states of certain materials, a common physics and chemistry problem, whereas all known classical algorithms would take exponentially longer for the same task. In simpler terms, they identified a problem in simulating how a material cools (finding its “local minima” energy configurations) where a quantum computer can outperform classical computers in principle.
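To illustrate what a “local minimum” energy configuration means, here is a purely classical toy: a short chain of spins in which each spin is flipped only if the flip lowers the total energy. This is not the researchers’ quantum algorithm, and the model and couplings are invented for illustration. It only shows that a system can settle into a state that no single small change improves, even when that state is not the lowest possible.

```python
import random


def energy(spins, couplings):
    """Energy of an open spin chain: -sum of J_i * s_i * s_(i+1)."""
    return -sum(j * spins[i] * spins[i + 1] for i, j in enumerate(couplings))


def descend_to_local_minimum(spins, couplings):
    """Flip single spins while doing so strictly lowers the energy."""
    spins = list(spins)
    improved = True
    while improved:
        improved = False
        for i in range(len(spins)):
            before = energy(spins, couplings)
            spins[i] = -spins[i]          # try the flip
            if energy(spins, couplings) < before:
                improved = True           # keep it: energy went down
            else:
                spins[i] = -spins[i]      # undo it: no improvement
    return spins


rng = random.Random(0)
couplings = [rng.choice([-1, 1]) for _ in range(9)]   # made-up couplings
start = [rng.choice([-1, 1]) for _ in range(10)]      # random starting state
settled = descend_to_local_minimum(start, couplings)
print(energy(start, couplings), "->", energy(settled, couplings))
```

The loop always terminates because every accepted flip strictly lowers the energy and there are finitely many configurations. Where it stops depends on where it started, which is exactly what makes local minima hard to escape and interesting to study.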

This result is significant because most prior proofs of “quantum advantage” were either contrived mathematical scenarios or the famous case of factoring large numbers (Shor’s algorithm) which threatens cryptography but isn’t a routine industrial problem. Here, the problem (stabilizing states of a physical system) is directly relevant to materials science, chemistry, and even optimization. The researchers developed a novel quantum algorithm and showed theoretically that as the problem size grows, a quantum machine would handle it efficiently while a classical one would bog down infeasibly.

This kind of result illuminates which kinds of real-world problems could see breakthroughs from quantum computing. It encourages software developers in those domains to start formulating their challenges in ways that are compatible with quantum algorithms. For example, finding ground states is analogous to optimization tasks (like optimizing a supply chain or machine learning model), so quantum approaches could eventually contribute there as well. This research also underscores the importance of quantum algorithms work happening ahead of hardware availability: by the time quantum computers with enough qubits and low error rates exist to run such algorithms, we could already have a library of quantum routines ready to solve meaningful problems.

In summary, the Caltech result is a reminder that quantum isn’t just about hardware; it’s also about clever algorithms. Each new proven advantage builds confidence that quantum computers will eventually deliver unique value, guiding developers on what applications to prepare for (in this case, complex simulations and optimizations that classical computers struggle with).

Surge in quantum funding signals confidence

The broader quantum industry is enjoying a wave of investment momentum. Over $1.2 billion in private funding poured into quantum computing startups in the first quarter of 2025 alone (a 125% increase year-over-year). Last week, for instance, saw news of large funding rounds being secured: Quantum Machines, which makes control systems for quantum hardware, raised $170 million in fresh capital, and Alphabet’s spin-off SandboxAQ (focused on post-quantum cryptography and AI) added $150 million from investors including Google and NVIDIA. The fact that investors, including tech giants, are willing to put such sizeable bets on quantum tech indicates a strong belief that commercial payoffs are on the horizon.

This influx of funding is promising: it means rapid progress on the supporting technology that makes quantum computing usable: better software development kits, more stable hardware, cloud access to quantum processors, and robust error-correction techniques. We’re likely to see startups accelerating the availability of developer-friendly quantum tools, such as higher-level quantum programming languages, libraries for specific domains (quantum machine learning, quantum chemistry), and cloud platforms that let you integrate quantum workflows into classical applications. Increased funding also fosters a healthy competitive environment where multiple approaches (superconducting qubits, trapped ions, photonic qubits, etc.) are explored in parallel, raising the odds of breakthroughs that improve performance (more qubits, less error) and scalability of quantum systems.

In short, the rising tide of investment suggests that the industry expects quantum computing to transition from experimental to practical in the coming years. For tech professionals, now is a great time to start familiarizing oneself with quantum development, as the tools will rapidly evolve and opportunities to innovate (or even join well-funded ventures) are growing. The confidence shown by investors adds pressure and incentive for quantum tech companies to deliver on real use cases sooner than later, meaning the long-term promises of quantum may start materializing in tangible ways that developers can leverage, perhaps faster than many anticipated.

The week’s developments across AI, Cloud, and Quantum demonstrate an increasingly intertwined tech landscape: AI advancements are driving cloud adoption and innovation, cloud platforms are essential for training and deploying AI (and one day quantum) at scale, and quantum computing is emerging as the next frontier that both AI and cloud fields are preparing to integrate. For developers, keeping abreast of these trends is crucial. The exciting product launches (like Meta’s Llama API or OpenAI’s new ChatGPT capabilities) offer new tools to build smarter applications right now. The strategic partnerships and cloud evolutions (multi-cloud security, industry-specific clouds) mean more reliable and scalable infrastructure to deploy those applications globally. And the breakthroughs in research and the surge in funding hint at the technologies on the horizon (from quantum algorithms to new AI startups) that could become part of our everyday development toolkit in the near future.

It’s an exhilarating time to be a tech professional, as each week brings innovations that can fundamentally enhance how we design, build, and secure systems for the world.

DJ Leamen is a Machine Learning and Generative AI Developer and Computer Science student with an interest in emerging technology and ethical development.
