Three Ways AI Can Weaken Your Cybersecurity

Even before generative AI arrived on the scene, companies struggled to adequately secure their data, applications, and networks. In the never-ending cat-and-mouse game between the good guys and the bad guys, the bad guys win their share of battles. However, the arrival of GenAI brings new cybersecurity threats, and adapting to them is the only hope for survival.

AI and machine learning interact with cybersecurity in a wide variety of ways, some of them good and some of them bad. But in terms of what’s new to the game, three patterns stand out and deserve particular attention: slopsquatting, prompt injection, and data poisoning.

Slopsquatting

“Slopsquatting” is a fresh AI take on “typosquatting,” where ne’er-do-wells spread malware to unsuspecting Web travelers who happen to mistype a URL. With slopsquatting, the bad guys are spreading malware through software development libraries that have been hallucinated by GenAI.

We know that large language models (LLMs) are prone to hallucinations. The tendency to create things out of whole cloth is not so much a bug of LLMs, but a feature that’s intrinsic to the way LLMs are developed. Some of these confabulations are humorous, but others can be serious. Slopsquatting falls into the latter category.

Large companies have reportedly recommended Python libraries that were hallucinated by GenAI. In a recent story in The Register, Bar Lanyado, security researcher at Lasso Security, explained that Alibaba recommended that users install a fake version of the legitimate library, called “huggingface-cli.”

While it is still unclear whether the bad guys have weaponized slopsquatting yet, GenAI’s tendency to hallucinate software libraries is perfectly clear. Last month, researchers published a paper that concluded that GenAI recommends Python and JavaScript libraries that don’t exist as often as one-fifth of the time, depending on the model.

“Our findings reveal that the average percentage of hallucinated packages is at least 5.2% for commercial models and 21.7% for open-source models, including a staggering 205,474 unique examples of hallucinated package names, further underscoring the severity and pervasiveness of this threat,” the researchers wrote in the paper, titled “We Have a Package for You! A Comprehensive Analysis of Package Hallucinations by Code Generating LLMs.”

Out of the 205,000+ instances of package hallucination, the names appeared to be inspired by real packages 38% of the time, were the results of typos 13% of the time, and were completely fabricated 51% of the time.
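One way to picture a guardrail against hallucinated dependencies is to screen an LLM-generated requirements file against a list of packages a team has already vetted. The sketch below is illustrative only: the `VETTED_PACKAGES` set and the helper names are hypothetical, and the parser handles only simple `requirements.txt` lines. It uses an allowlist rather than a registry lookup, on the assumption that a name an attacker has already registered would pass a simple "does it exist?" check.

```python
import re
from typing import Optional

# Hypothetical allowlist: packages this team has reviewed and approved.
VETTED_PACKAGES = {"requests", "numpy", "huggingface-hub"}


def normalize(name: str) -> str:
    """Normalize a project name the way PEP 503 does (case and separators)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def package_name(requirement_line: str) -> Optional[str]:
    """Extract the project name from a simple requirements.txt line."""
    line = requirement_line.split("#", 1)[0].strip()
    if not line or line.startswith("-"):
        return None  # blank line, comment, or pip option such as -r / -e
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def unvetted(requirements: str, vetted: set = VETTED_PACKAGES) -> list:
    """Return the normalized names that are not on the allowlist."""
    allowed = {normalize(v) for v in vetted}
    names = (package_name(line) for line in requirements.splitlines())
    return [n for n in names if n is not None and n not in allowed]


if __name__ == "__main__":
    generated = "requests==2.31.0\nhuggingface-cli\nNumPy>=1.26\n"
    print(unvetted(generated))
```

Anything the function returns would go to a human for review before installation, rather than being installed automatically.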

Prompt Injection

Just when you thought it was safe to venture onto the Web, a new threat emerged: prompt injection.

Like the SQL injection attacks that plagued early Web 2.0 warriors who didn’t adequately validate database input fields, prompt injections involve the surreptitious injection of a malicious prompt into a GenAI-enabled application to achieve some goal, ranging from information disclosure to code execution rights.

Mitigating these sorts of attacks is difficult because of the nature of GenAI applications. Instead of inspecting code for malicious entities, organizations must investigate the entirety of a model, including all of its weights. That’s not feasible in most situations, forcing them to adopt other techniques, says data scientist Ben Lorica.

“A poisoned checkpoint or a hallucinated/compromised Python package named in an LLM‑generated requirements file can give an attacker code‑execution rights inside your pipeline,” Lorica writes in a recent installment of his Gradient Flow newsletter. “Standard security scanners can’t parse multi‑gigabyte weight files, so additional safeguards are essential: digitally sign model weights, maintain a ‘bill of materials’ for training data, and keep verifiable training logs.”
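As a rough illustration of the first safeguard, the sketch below pins a weights file to a known SHA-256 digest and refuses anything that does not match. This is checksum pinning, a simpler stand-in for the digital signing Lorica describes: it detects tampering only if the expected digest itself comes from a trusted source. The function names and file path are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so multi-gigabyte weights never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_weights(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned value."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A loader might call `verify_weights(Path("model.bin"), pinned_digest)` and abort when it returns `False`, before any deserialization of the file takes place.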

A twist on the prompt injection attack was recently described by researchers at HiddenLayer, who call their technique “policy puppetry.”

“By reformulating prompts to look like one of a few types of policy files, such as XML, INI, or JSON, an LLM can be tricked into subverting alignments or instructions,” the researchers write in a summary of their findings. “As a result, attackers can easily bypass system prompts and any safety alignments trained into the models.”

The company says its approach to spoofing policy prompts enables it to bypass model alignment and produce outputs that are in clear violation of AI safety policies, including CBRN (Chemical, Biological, Radiological, and Nuclear), mass violence, self-harm, and system prompt leakage.
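To make the idea of "prompts that look like policy files" concrete, here is a deliberately naive heuristic that flags user input shaped like JSON, INI, or XML. It is not HiddenLayer’s technique or a real defense, since pattern checks like these are easy to evade. The regular expressions and the function name are assumptions made for illustration, and a match would at most justify routing the input to closer review.

```python
import json
import re

# Illustrative patterns only; a real attacker can trivially vary the format.
INI_SECTION = re.compile(r"^\s*\[[^\]\n]+\]\s*$", re.MULTILINE)
INI_KEY_VALUE = re.compile(r"^\s*[\w.-]+\s*=\s*\S", re.MULTILINE)
XML_ELEMENT = re.compile(r"<([A-Za-z][\w.-]*)[^>]*>.*?</\1\s*>", re.DOTALL)


def looks_like_policy_file(user_text: str) -> bool:
    """Flag input that is structured like a JSON object, INI file, or XML."""
    text = user_text.strip()
    if text.startswith("{"):
        try:
            if isinstance(json.loads(text), dict):
                return True
        except json.JSONDecodeError:
            pass  # not valid JSON; fall through to the other checks
    if INI_SECTION.search(text) and INI_KEY_VALUE.search(text):
        return True
    return XML_ELEMENT.search(text) is not None
```

Ordinary questions such as "What is the capital of France?" pass through unflagged, while input like `[policy]` followed by `blocked = none` is marked for inspection.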

Data Poisoning

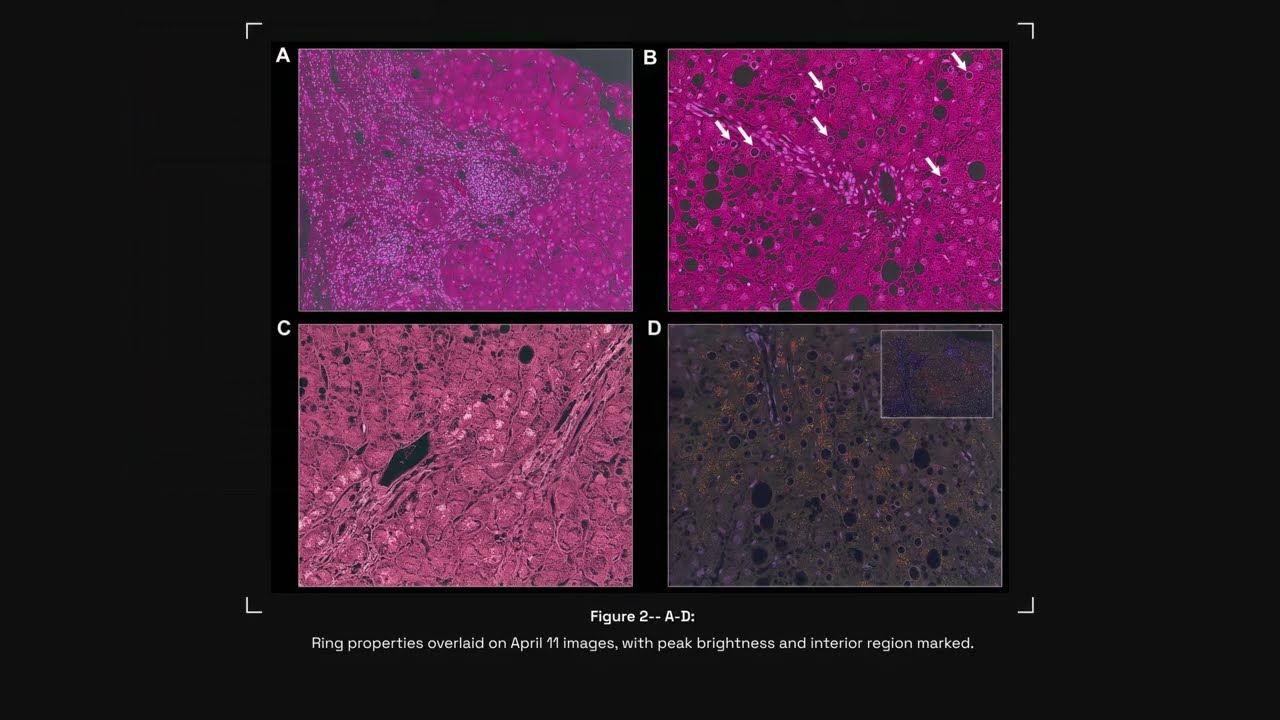

Data lies at the heart of machine learning and AI models. So if a malicious user can inject, delete, or change the data that an organization uses to train an ML or AI model, then he or she can potentially skew the learning process and force the ML or AI model to generate an adverse result.

Data poisoning, also called data manipulation, is a form of adversarial AI attack that poses a serious risk to organizations that rely on AI. According to the security firm CrowdStrike, data poisoning is a risk to healthcare, finance, automotive, and HR use cases, and can even potentially be used to create backdoors.

“Because most AI models are constantly evolving, it can be difficult to detect when the dataset has been compromised,” the company says in a 2024 blog post. “Adversaries often make subtle but potent changes to the data that can go undetected. This is especially true if the adversary is an insider and therefore has in-depth information about the organization’s security measures and tools as well as their processes.”

Data poisoning can be either targeted or non-targeted. In either case, there are telltale signs that security professionals can look for that indicate whether their data has been compromised.
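The article does not enumerate those signs, so the following is only one plausible example: a sudden shift in the mix of labels between a trusted baseline and a newly collected batch. The sketch measures that shift with total variation distance. The `threshold` value and the function names are assumptions, and a flagged batch would still need human investigation, because legitimate drift looks the same.

```python
from collections import Counter


def label_distribution(labels):
    """Map each label to its share of the dataset."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def total_variation_distance(baseline, candidate):
    """Half the sum of absolute differences between two label distributions."""
    p = label_distribution(baseline)
    q = label_distribution(candidate)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


def looks_shifted(baseline, candidate, threshold=0.1):
    """Flag a batch whose label mix differs from the baseline by more than threshold."""
    return total_variation_distance(baseline, candidate) > threshold
```

For instance, a baseline that is 10% "fraud" compared against a batch that is 30% "fraud" gives a distance of about 0.2, which exceeds the assumed threshold of 0.1.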

AI Attacks as Social Engineering

These three AI attack vectors (slopsquatting, prompt injection, and data poisoning) aren’t the only ways that cybercriminals can attack organizations via AI. But they are three avenues that AI-using organizations should be aware of to thwart the potential compromise of their systems.

Unless organizations take pains to adapt to the new ways that hackers can compromise systems through AI, they run the risk of becoming victims. Because LLMs behave probabilistically instead of deterministically, they are much more susceptible to social engineering types of attacks than traditional systems, Lorica says.

“The result is a dangerous security asymmetry: exploit techniques spread rapidly through open-source repositories and Discord channels, while effective mitigations demand architectural overhauls, sophisticated testing protocols, and comprehensive staff retraining,” Lorica writes. “The longer we treat LLMs as ‘just another API,’ the wider that gap becomes.”

This article first appeared on BigDATAwire.
