A Data Scientist’s Guide to Docker Containers

How to enable your ML model to run anywhere. The post A Data Scientist’s Guide to Docker Containers appeared first on Towards Data Science.

However, the production machine is usually different from the one you developed the model on. So, you ship the model to the production machine, but somehow the model doesn’t work anymore. That’s weird, right? You tested everything on your local machine and it worked fine. You even wrote unit tests.

What happened? Most likely the production machine differs from your local machine. Perhaps it does not have all the needed dependencies installed to run your model. Perhaps installed dependencies are on a different version. There can be many reasons for this.

How can you solve this problem? One approach could be to exactly replicate the production machine. But that is very inflexible as for each new production machine you would need to build a local replica.

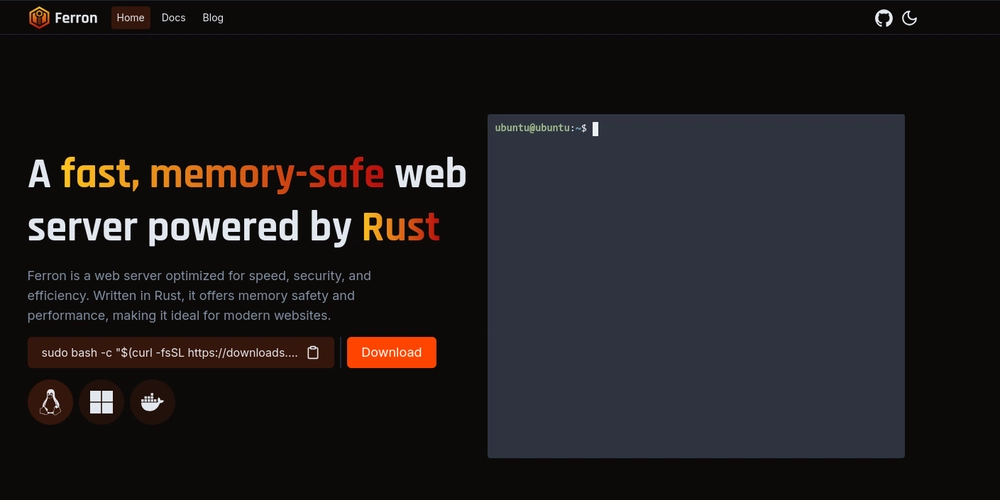

A much nicer approach is to use Docker containers.

Docker is a tool that helps us create, manage, and run code and applications in containers. A container is a small, isolated computing environment in which we can package an application with all its dependencies. In our case, that is our ML model with all the libraries it needs to run. With this, we do not need to rely on what is installed on the host machine. A Docker Container enables us to separate applications from the underlying infrastructure.

For example, we package our ML model locally and push it to the cloud. With this, Docker helps us to ensure that our model can run anywhere and anytime. Using Docker has several advantages for us. It helps us to deliver new models faster, improve reproducibility, and make collaboration easier. All because we have exactly the same dependencies no matter where we run the container.

As Docker is widely used in the industry, Data Scientists need to be able to build and run containers using Docker. Hence, in this article, I will go through the basic concept of containers and show you what you need to know about Docker to get started. After we have covered the theory, I will show you how you can build and run your own Docker container.

What is a container?

A container is a small, isolated environment in which everything is self-contained. The environment packages up all code and dependencies.

A container has five main features.

- self-contained: A container isolates the application from its environment and infrastructure. Due to this isolation, we do not need to rely on any pre-installed dependencies on the host machine. Everything we need is part of the container. This ensures that the application can run regardless of the infrastructure.

- isolated: The container has a minimal influence on the host and other containers and vice versa.

- independent: We can manage containers independently. Deleting a container does not affect other containers.

- portable: As a container isolates the software from the hardware, we can run it seamlessly on any machine. With this, we can move it between machines without a problem.

- lightweight: Containers are lightweight as they share the host machine’s OS kernel. As they do not require their own full OS, we do not need to partition the hardware resources of the host machine.

This might sound similar to virtual machines. But there is one big difference. The difference is in how they use their host computer’s resources. Virtual machines are an abstraction of the physical hardware. They partition one server into multiple. Thus, a VM includes a full copy of the OS which takes up more space.

In contrast, containers are an abstraction at the application layer. All containers share the host’s OS kernel but run as isolated processes. Because containers do not bundle a full guest OS, they have less overhead and use the underlying system and its resources more efficiently.

Now we know what containers are. Let’s get some high-level understanding of how Docker works. I will briefly introduce the technical terms that are used often.

What is Docker?

To understand how Docker works, let’s have a brief look at its architecture.

Docker uses a client-server architecture containing three main parts: A Docker client, a Docker daemon (server), and a Docker registry.

The Docker client is the primary way to interact with Docker through commands. We use the client to communicate through a REST API with as many Docker daemons as we want. Commonly used commands are docker run, docker build, docker pull, and docker push. I will explain later what they do.

The Docker daemon manages Docker objects, such as images and containers. The daemon listens for Docker API requests. Depending on the request the daemon builds, runs, and distributes Docker containers. The Docker daemon and client can run on the same or different systems.

The Docker registry is a centralized location that stores and manages Docker images. We can use a registry to share images and make them accessible to others.

Sounds a bit abstract? No worries, once we get started it will be more intuitive. But before that, let’s run through the needed steps to create a Docker container.

What do we need to create a Docker container?

It is simple. We only need to do three steps:

- create a Dockerfile

- build a Docker Image from the Dockerfile

- run the Docker Image to create a Docker container

Let’s go step-by-step.

A Dockerfile is a text file that contains instructions on how to build a Docker Image. In the Dockerfile we define what the application looks like and its dependencies. We also state what process should run when launching the Docker container. The instructions in the Dockerfile produce layers, each representing a portion of the image’s file system. Each layer either adds, removes, or modifies files from the layer below it.

Based on the Dockerfile we create a Docker Image. The image is a read-only template with instructions to run a Docker container. Images are immutable. Once we create a Docker Image we cannot modify it anymore. If we want to make changes, we can only add changes on top of existing images or create a new image. When we rebuild an image, Docker is clever enough to rebuild only layers that have changed, reducing the build time.

A Docker Container is a runnable instance of a Docker Image. The container is defined by the image and any configuration options that we provide when creating or starting the container. When we remove a container, all changes to its internal state are removed as well, unless they are stored in persistent storage.

Using Docker: An example

With all the theory, let’s get our hands dirty and put everything together.

As an example, we will package a simple ML model with Flask in a Docker container. We can then run requests against the container and receive predictions in return. We will train a model locally and only load the artifacts of the trained model in the Docker Container.

I will go through the general workflow needed to create and run a Docker container with your ML model. I will guide you through the following steps:

- build model

- create requirements.txt file containing all dependencies

- create Dockerfile

- build docker image

- run container

Before we get started, we need to install Docker Desktop. We will use it to view and run our Docker containers later on.

1. Build a model

First, we will train a simple RandomForestClassifier on scikit-learn’s Iris dataset and then store the trained model.

Second, we build a script that makes our model available through a REST API, using Flask. The script is also simple and contains three main steps:

- extract and convert the data we want to pass into the model from the payload JSON

- load the model artifacts, create an ONNX session, and run the model

- return the model’s predictions as JSON

I took most of the code from here and here and made only minor changes.
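The three steps above could be sketched roughly as follows. This is a minimal illustration, not the original script: the "data" key in the payload, the "predictions" key in the response, the model.onnx file name, and the helper names are all assumptions. The /invocations route and port 8080 match the request sent later in this article. Flask, NumPy, and ONNX Runtime are imported inside create_app so that the two helper functions can be used without them.

```python
def parse_payload(payload):
    """Step 1: extract the feature rows from the request JSON.

    Assumes a hypothetical payload shape: {"data": [[5.1, 3.5, 1.4, 0.2], ...]}
    """
    return [[float(value) for value in row] for row in payload["data"]]


def format_predictions(predictions):
    """Step 3: convert the predicted labels into a JSON-serializable dict."""
    return {"predictions": [int(label) for label in predictions]}


def create_app(model_path="model.onnx"):
    """Build the Flask app; model_path is an assumed artifact name."""
    import numpy as np
    import onnxruntime as ort
    from flask import Flask, jsonify, request

    app = Flask(__name__)

    # Step 2a: load the model artifact once and create an ONNX session
    session = ort.InferenceSession(model_path)
    input_name = session.get_inputs()[0].name

    @app.route("/invocations", methods=["POST"])
    def invocations():
        features = parse_payload(request.get_json())
        # Step 2b: run the model; assumes the first output holds the labels
        inputs = {input_name: np.asarray(features, dtype=np.float32)}
        outputs = session.run(None, inputs)
        return jsonify(format_predictions(outputs[0]))

    return app
```

With the packages installed and a model file in place, calling create_app().run(host="0.0.0.0", port=8080) would start the server on the port used in the rest of this example.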

2. Create requirements

Once we have created the Python file we want to execute when the Docker container is running, we must create a requirements.txt file containing all dependencies. In our case, it looks like this:
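The exact contents depend on your project. One way to produce such a file with exact versions is to read them from the local environment, so the container installs the same versions you tested with. The sketch below uses Python's standard importlib.metadata module; the function names and the package names in the usage note are assumptions for illustration.

```python
from importlib import metadata


def pinned_requirements(packages, get_version=metadata.version):
    """Return 'name==version' lines based on the locally installed versions."""
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}=={get_version(name)}")
        except metadata.PackageNotFoundError:
            # Leave packages that are not installed unpinned instead of guessing
            lines.append(name)
    return lines


def write_requirements(packages, path="requirements.txt"):
    """Write the pinned lines to a requirements file."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(pinned_requirements(packages)) + "\n")
```

For example, write_requirements(["flask", "numpy", "onnxruntime"]) would write one line per package, pinned to whatever version is installed locally.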

3. Create Dockerfile

The last thing we need to prepare before being able to build a Docker Image and run a Docker container is to write a Dockerfile.

The Dockerfile contains all the instructions needed to build the Docker Image. The most common instructions are

- FROM: this specifies the base image that the build will extend.

- WORKDIR: this instruction specifies the “working directory” or the path in the image where files will be copied and commands will be executed.

- COPY: this instruction tells the builder to copy files from the host and put them into the container image.

- RUN: this instruction tells the builder to run the specified command.

- ENV: this instruction sets an environment variable that a running container will use.

- EXPOSE: this instruction sets the configuration on the image that indicates a port the image would like to expose.

- USER: this instruction sets the default user for all subsequent instructions.

- CMD ["<command>", "<arg1>"]: this instruction sets the default command a container using this image will run.

With these, we can create the Dockerfile for our example. We need to follow the following steps:

- Determine the base image

- Install application dependencies

- Copy in any relevant source code and/or binaries

- Configure the final image

Let’s go through them step by step. Each of these steps results in a layer in the Docker Image.

First, we specify the base image that we then build upon. As we have written the example in Python, we will use a Python base image.

Second, we set the working directory into which we will copy all the files we need to be able to run our ML model.

Third, we refresh the package index files to ensure that we have the latest available information about packages and their versions.

Fourth, we copy in and install the application dependencies.

Fifth, we copy in the source code and all other files we need. Here, we also expose port 8080, which we will use for interacting with the ML model.

Sixth, we set a user, so that the container does not run as the root user.

Seventh, we define that the example.py file will be executed when we run the Docker container. With this, we create the Flask server to run our requests against.
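To make the seven steps concrete, here is a hedged sketch of what the resulting Dockerfile could contain. It is written as a small Python script that assembles and writes the file, to stay in the same language as the rest of the example code. The base image tag python:3.12-slim, the /app directory, and the appuser name are assumptions; port 8080 and example.py come from the steps above.

```python
from pathlib import Path

# Each entry pairs one of the seven steps with its Dockerfile instruction(s).
# Assumed values: the base image tag, the /app directory, and the user name.
DOCKERFILE_STEPS = [
    ("1. base image", "FROM python:3.12-slim"),
    ("2. working directory", "WORKDIR /app"),
    ("3. refresh the package index", "RUN apt-get update"),
    ("4. copy in and install the dependencies",
     "COPY requirements.txt .\nRUN pip install --no-cache-dir -r requirements.txt"),
    ("5. copy in the source code and expose the port", "COPY . .\nEXPOSE 8080"),
    ("6. switch to a non-root user",
     "RUN useradd --create-home appuser\nUSER appuser"),
    ("7. default command", 'CMD ["python", "example.py"]'),
]


def render_dockerfile(steps=DOCKERFILE_STEPS):
    """Join the steps into Dockerfile text, with one comment line per step."""
    blocks = [f"# {title}\n{instructions}" for title, instructions in steps]
    return "\n\n".join(blocks) + "\n"


def write_dockerfile(directory="."):
    """Write the rendered text to a file named Dockerfile and return its path."""
    path = Path(directory) / "Dockerfile"
    path.write_text(render_dockerfile(), encoding="utf-8")
    return path
```

Copying requirements.txt and installing the dependencies before copying the rest of the source code means that a code-only change does not invalidate the dependency layer, so rebuilds stay fast.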

Besides creating the Dockerfile, we can also create a .dockerignore file to improve the build speed. Similar to a .gitignore file, we can exclude directories from the build context.

If you want to know more, please go to docker.com.

4. Create Docker Image

We have now created all the files we need to build the Docker Image.

To build the image we first need to open Docker Desktop. You can check if Docker Desktop is running by running docker ps in the command line. This command shows you all running containers.

To build a Docker Image, we need to be in the same directory as our Dockerfile and requirements.txt file. We can then run docker build -t our_first_image . The -t flag sets the name of the image, i.e., our_first_image, and the . tells Docker to use the current directory as the build context.

Once we have built the image, we can do several things. We can

- view the image by running docker image ls

- view the history or how the image was created by running docker image history <image_name>

- push the image to a registry by running docker push <image_name>

5. Run Docker Container

Once we have built the Docker Image, we can run our ML model in a container.

For this, we only need to execute docker run -p 8080:8080 <image_name> in the command line. With -p 8080:8080 we connect the local port (8080) with the port in the container (8080).

If the Docker Image doesn’t expose a port, we could simply run docker run <image_name>. Instead of using the image_name, we can also use the image_id.
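If you prefer to script the build and run steps, for example in a small automation script, the two commands could be wrapped in Python roughly like this. The function names are hypothetical; the commands they assemble are the docker build and docker run calls described above, and they only work on a machine where Docker is installed and running.

```python
import subprocess


def build_command(image_name, context="."):
    """Assemble: docker build -t <image_name> <context>"""
    return ["docker", "build", "-t", image_name, context]


def run_command(image_name, host_port=None, container_port=None):
    """Assemble: docker run [-p <host_port>:<container_port>] <image_name>"""
    command = ["docker", "run"]
    if host_port is not None and container_port is not None:
        command += ["-p", f"{host_port}:{container_port}"]
    return command + [image_name]


def execute(command):
    """Run the command; check=True raises CalledProcessError on a non-zero exit."""
    return subprocess.run(command, check=True)
```

For instance, execute(build_command("our_first_image")) followed by execute(run_command("our_first_image", 8080, 8080)) would build the image from the current directory and start a container with the port mapping used in this article.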

Okay, once the container is running, let’s run a request against it. For this, we will send a payload to the endpoint by running curl -X POST http://localhost:8080/invocations -H "Content-Type:application/json" -d @./path/to/sample_payload.json
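The same request can also be sent from Python using only the standard library. This is a sketch under assumptions: the function names are hypothetical, and the payload in the usage note is an invented example with one row of four Iris features. The actual payload must match whatever your Flask script expects.

```python
import json
import urllib.request


def build_request(payload, url="http://localhost:8080/invocations"):
    """Create a POST request carrying the payload as a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(request, timeout=10):
    """Send the request to the running container and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, send(build_request({"data": [[5.1, 3.5, 1.4, 0.2]]})) would return the decoded JSON response from the endpoint.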

Conclusion

In this article, I showed you the basics of Docker Containers, what they are, and how to build them yourself. Although I only scratched the surface it should be enough to get you started and be able to package your next model. With this knowledge, you should be able to avoid the “it works on my machine” problems.

I hope that you find this article useful and that it will help you become a better Data Scientist.

See you in my next article and/or leave a comment.
