An Unbiased Review of Snowflake’s Document AI

Or, how we spared a human from manually inspecting 10,000 flu shot documents. The post An Unbiased Review of Snowflake’s Document AI appeared first on Towards Data Science.

As data

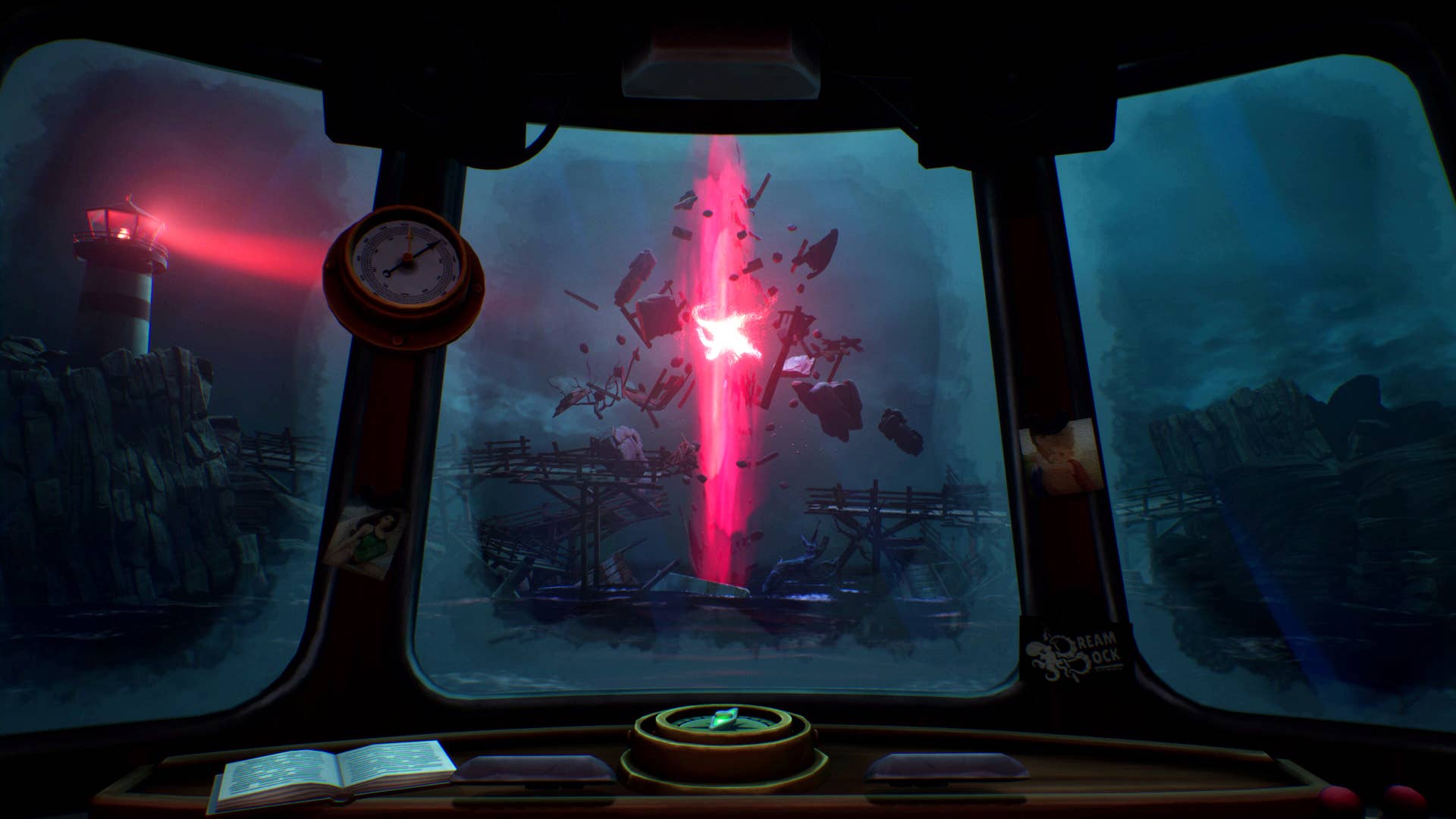

We can also handle words, json, xml feeds, and pictures of cats. But what about a cardboard box full of things like this?

The info on this receipt wants so badly to be in a tabular database somewhere. Wouldn’t it be great if we could scan all these, run them through an LLM, and save the results in a table?

Lucky for us, we live in the era of Document AI. Document AI combines OCR with LLMs and allows us to build a bridge between the paper world and the digital database world.

All the major cloud vendors have some version of this…

- Google (Document AI)

- Microsoft (Document Intelligence)

- AWS (Intelligent Document Processing)

- Snowflake (Document AI)

Here I’ll share my thoughts on Snowflake’s Document AI. Aside from using Snowflake at work, I have no affiliation with Snowflake. They didn’t commission me to write this piece and I’m not part of any ambassador program. All of that is to say I can write an unbiased review of Snowflake’s Document AI.

What is Document AI?

Document AI allows users to quickly extract information from digital documents. When we say “documents” we mean pictures with words. Don’t confuse this with niche NoSQL things.

The product combines OCR and LLM models so that a user can create a set of prompts and execute those prompts against a large collection of documents all at once.

LLMs and OCR both have room for error. Snowflake solved this by (1) banging their heads against OCR until it’s sharp — I see you, Snowflake developer — and (2) letting me fine-tune my LLM.

Fine-tuning the Snowflake LLM feels a lot more like glamping than some rugged outdoor adventure. I review 20+ documents, hit the “train model” button, then rinse and repeat until performance is satisfactory. Am I even a data scientist anymore?

Once the model is trained, I can run my prompts on 1000 documents at a time. I like to save the results to a table, but you could do whatever you want with the results in real time.
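As a rough sketch of the "run the prompts and save the results to a table" step, the Python below only assembles the SQL text. It assumes Snowflake's documented pattern of calling a model build with `!PREDICT` on a presigned URL for each file in a stage's directory table. The model, stage, and table names are hypothetical placeholders, and the function does not validate or escape identifiers.

```python
def build_predict_query(model_name: str, stage: str, version: int, target_table: str) -> str:
    """Build a CREATE TABLE AS SELECT statement that runs the model on every staged file.

    All identifiers are hypothetical placeholders and are trusted as-is (no escaping).
    """
    return (
        f"CREATE OR REPLACE TABLE {target_table} AS\n"
        f"SELECT relative_path,\n"
        f"       {model_name}!PREDICT(\n"
        f"           GET_PRESIGNED_URL(@{stage}, relative_path), {version}\n"
        f"       ) AS extraction\n"
        f"FROM DIRECTORY(@{stage})"
    )


# Hypothetical names, for illustration only.
query = build_predict_query("flu_shot_model", "flu_shot_stage", 1, "flu_shot_extractions")
print(query)
```

The resulting string can be pasted into a worksheet or handed to whichever Snowflake client you already use.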

Why does it matter?

This product is cool for several reasons.

- You can build a bridge between the paper and digital world. I never thought the big box of paper invoices under my desk would make it into my cloud data warehouse, but now it can. Scan the paper invoice, upload it to Snowflake, run my Document AI model, and wham! I have my desired information parsed into a tidy table.

- It’s frighteningly convenient to invoke a machine-learning model via SQL. Why didn’t we think of this sooner? In the old days this took a few hundred lines of code to load the raw data (SQL >> python/spark/etc.), clean it, engineer features, train/test split, train a model, make predictions, and then often write the predictions back into SQL.

- To build this in-house would be a major undertaking. Yes, OCR has been around a long time but can still be finicky. Fine-tuning an LLM obviously hasn’t been around too long, but is getting easier by the week. To piece these together in a way that achieves high accuracy for a variety of documents could take a long time to hack on your own. Months and months of polish.

Of course some elements are still built in house. Once I extract information from the document I have to figure out what to do with that information. That’s relatively quick work, though.

Our Use Case — Bring on Flu Season:

I work at a company called IntelyCare. We operate in the healthcare staffing space, which means we help hospitals, nursing homes, and rehab centers find quality clinicians for individual shifts, extended contracts, or full-time/part-time engagements.

Many of our facilities require clinicians to have an up-to-date flu shot. Last year, our clinicians submitted over 10,000 flu shots in addition to hundreds of thousands of other documents. We manually reviewed all of these to ensure validity. Part of the joy of working in the healthcare staffing world!

Spoiler Alert: Using Document AI, we were able to reduce the number of flu-shot documents needing manual review by ~50% and all in just a couple of weeks.

To pull this off, we did the following:

- Uploaded a pile of flu-shot documents to Snowflake.

- Massaged the prompts, trained the model, massaged the prompts some more, retrained the model some more…

- Built out the logic to compare the model output against the clinician’s profile (e.g. do the names match?). Definitely some trial and error here with formatting names, dates, etc.

- Built out the “decision logic” to either approve the document or send it back to the humans.

- Tested the full pipeline on a bigger pile of manually reviewed documents. Took a close look at any false positives.

- Repeated until our confusion matrix was satisfactory.
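The comparison and decision steps in the list above might look something like the sketch below. This is not our production logic. The field names (`patient_name`, `expiration_date`) are hypothetical, the extracted date is assumed to be parsed into a `datetime.date` already, and the name matching is deliberately crude.

```python
import re
from datetime import date


def normalize_name(name: str) -> str:
    """Lowercase, drop punctuation, and sort tokens so 'Doe, Jane' matches 'Jane Doe'."""
    tokens = re.findall(r"[a-z]+", name.lower())
    return " ".join(sorted(tokens))


def decide(extracted: dict, profile_name: str, today: date) -> str:
    """Return 'approve' only when every check passes; anything doubtful goes to a human."""
    name = extracted.get("patient_name")        # hypothetical field name
    expires = extracted.get("expiration_date")  # hypothetical field, a date or None
    if not name or expires is None:
        return "human_review"  # missing key information
    if normalize_name(name) != normalize_name(profile_name):
        return "human_review"  # name on the document does not match the profile
    if expires < today:
        return "human_review"  # document already expired
    return "approve"


result = decide(
    {"patient_name": "Doe, Jane", "expiration_date": date(2025, 6, 30)},
    profile_name="Jane Doe",
    today=date(2025, 1, 15),
)
print(result)
```

Defaulting to `human_review` on any doubt keeps the mistakes on the harmless side: a wrongly rejected document just gets the same manual look it would have gotten anyway.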

For this project, false positives pose a business risk. We don’t want to approve a document that’s expired or missing key information. We kept iterating until the false-positive rate hit zero. We’ll have some false positives eventually, but fewer than what we have now with a human review process.

False negatives, however, are harmless. If our pipeline doesn’t like a flu shot, it simply routes the document to the human team for review. If they go on to approve the document, it’s business as usual.

The model does well with the clean/easy documents, which account for ~50% of all flu shots. If it’s messy or confusing, it goes back to the humans as before.
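To make the false-positive and false-negative bookkeeping concrete, here is a small sketch that compares pipeline decisions against the human reviewers' decisions on the same documents. "Approved" is treated as the positive class, and the sample data is made up for illustration.

```python
def confusion_counts(pipeline_approved, human_approved):
    """Count outcomes, treating 'approved' as the positive class."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for predicted, actual in zip(pipeline_approved, human_approved):
        if predicted and actual:
            counts["tp"] += 1  # both approved
        elif predicted and not actual:
            counts["fp"] += 1  # pipeline approved a document humans rejected (the risky case)
        elif not predicted and actual:
            counts["fn"] += 1  # pipeline deferred a document humans later approved (harmless)
        else:
            counts["tn"] += 1  # both declined to approve
    return counts


def false_positive_rate(counts):
    """Share of human-rejected documents that the pipeline approved anyway."""
    negatives = counts["fp"] + counts["tn"]
    return counts["fp"] / negatives if negatives else 0.0


# Made-up decisions for five documents.
pipeline = [True, False, False, True, False]
humans = [True, True, False, True, False]
counts = confusion_counts(pipeline, humans)
print(counts, false_positive_rate(counts))
```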

Things we learned along the way

- The model does best at reading the document, not making decisions or doing math based on the document.

Initially, our prompts attempted to determine validity of the document.

Bad: Is the document already expired?

We found it far more effective to limit our prompts to questions that could be answered by looking at the document. The LLM doesn’t determine anything. It just grabs the relevant data points off the page.

Good: What is the expiration date?

Save the results and do the math downstream.
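A minimal sketch of "doing the math downstream": the model only returns the expiration date as text, and plain Python decides whether it has passed. The list of date formats is an assumption about what might show up on documents, not an exhaustive one, and anything unparseable is left undecided so it can go to a human.

```python
from datetime import date, datetime
from typing import Optional

# Assumed formats; extend this list based on what your documents actually contain.
DATE_FORMATS = ("%m/%d/%Y", "%m/%d/%y", "%Y-%m-%d", "%B %d, %Y", "%b %d, %Y")


def parse_date(raw: str) -> Optional[date]:
    """Try each known format in turn; return None if none of them fit."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date()
        except ValueError:
            continue
    return None


def is_expired(raw_expiration: str, today: date) -> Optional[bool]:
    """True or False when the date parses, None when a human should take a look."""
    parsed = parse_date(raw_expiration)
    if parsed is None:
        return None
    return parsed < today


today = date(2025, 1, 15)
print(is_expired("06/30/2025", today))
print(is_expired("2024-08-31", today))
print(is_expired("sometime next fall", today))
```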

- You still need to be thoughtful about training data

We had a few duplicate flu shots from one clinician in our training data. Call this clinician Ben. One of our prompts was, “what is the patient’s name?” Because “Ben” was in the training data multiple times, any remotely unclear document would return with “Ben” as the patient name.

So overfitting is still a thing. Over/under sampling is still a thing. We tried again with a more thoughtful collection of training documents and things did much better.

Document AI is pretty magical, but not that magical. Fundamentals still matter.
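One simple guard against the "Ben" problem is to cap how many documents any single clinician contributes to the training set. The sketch below assumes each training document is a dict with a hypothetical `clinician_id` key. It is one possible approach, not the exact procedure we followed.

```python
from collections import defaultdict


def cap_per_clinician(documents, max_per_clinician=1):
    """Keep at most `max_per_clinician` documents per clinician, preserving input order."""
    kept = []
    seen = defaultdict(int)
    for doc in documents:
        key = doc["clinician_id"]  # hypothetical field name
        if seen[key] < max_per_clinician:
            kept.append(doc)
            seen[key] += 1
    return kept


# Made-up training candidates: three documents from "ben", one from "ana".
candidates = [
    {"clinician_id": "ben", "file": "flu_1.pdf"},
    {"clinician_id": "ben", "file": "flu_2.pdf"},
    {"clinician_id": "ana", "file": "flu_3.pdf"},
    {"clinician_id": "ben", "file": "flu_4.pdf"},
]
training_set = cap_per_clinician(candidates)
print([doc["file"] for doc in training_set])
```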

- The model could be fooled by writing on a napkin.

To my knowledge, Snowflake does not have a way to render the document image as an embedding. You can create an embedding from the extracted text, but that won’t tell you if the text was written by hand or not. As long as the text is valid, the model and downstream logic will give it a green light.

You could fix this pretty easily by comparing image embeddings of submitted documents to the embeddings of accepted documents. Any document with an embedding way out in left field is sent back for human review. This is straightforward work, but you’ll have to do it outside Snowflake for now.
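Here is a rough sketch of that outlier check, assuming you already have image embeddings from some model outside Snowflake (the tiny three-dimensional vectors are invented for illustration). It compares each new document to the centroid of accepted documents using cosine similarity. The `min_similarity` threshold is a hypothetical value you would need to tune on real data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def centroid(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def needs_human_review(embedding, accepted_embeddings, min_similarity=0.8):
    """Flag documents whose embedding sits far from the typical accepted document."""
    return cosine_similarity(embedding, centroid(accepted_embeddings)) < min_similarity


# Invented embeddings: accepted documents cluster together, the napkin does not.
accepted = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.1], [1.0, 0.0, 0.1]]
typical_doc = [0.95, 0.1, 0.05]
napkin = [0.0, 0.1, 1.0]
print(needs_human_review(typical_doc, accepted), needs_human_review(napkin, accepted))
```

A single centroid is the simplest possible reference point. If accepted documents come in several distinct layouts, comparing against the nearest accepted example would likely work better.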

- Not as expensive as I was expecting

Snowflake has a reputation of being spendy. And for HIPAA compliance concerns we run a higher-tier Snowflake account for this project. I tend to worry about running up a Snowflake tab.

In the end we had to try extra hard to spend more than $100/week while training the model. We ran thousands of documents through the model every few days to measure its accuracy while iterating on the model, but never managed to break the budget.

Better still, we’re saving money on the manual review process. The cost of AI reviewing 1000 documents (approving ~500 of them) is ~20% of what we spend on humans reviewing the remaining 500. All in, a 40% reduction in costs for reviewing flu shots.
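The arithmetic behind that 40% figure, worked through with costs normalized so that a human review of one document costs 1 unit. The normalization is an assumption for illustration; the ratios come from the numbers above.

```python
def cost_reduction(total_docs, auto_approved_share, ai_cost_ratio):
    """Fractional savings versus having humans review every document.

    ai_cost_ratio is the AI cost for the whole batch divided by the human cost
    of reviewing the documents the AI could not approve.
    """
    baseline = total_docs * 1.0  # humans review everything at 1 unit per document
    human_cost = total_docs * (1 - auto_approved_share) * 1.0  # documents still needing review
    ai_cost = ai_cost_ratio * human_cost
    return 1 - (human_cost + ai_cost) / baseline


# 1000 documents, ~50% auto-approved, AI cost ~20% of the remaining human cost.
savings = cost_reduction(1000, 0.5, 0.2)
print(f"{savings:.0%}")
```

In units: humans alone cost 1000, while the new process costs 500 (humans) plus 100 (AI), or 600 in total.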

Summing up

I’ve been impressed with how quickly we could complete a project of this scope using Document AI. We’ve gone from months to days. I give it 4 stars out of 5, and am open to giving it a 5th star if Snowflake ever gives us access to image embeddings.

Since flu shots, we’ve deployed similar models for other documents with similar or better results. And with all this prep work, instead of dreading the upcoming flu season, we’re ready to bring it on.
