A Farewell to APMs — The Future of Observability is MCP tools

Like many other fields, the world of observability is about to be turned upside down. The post A Farewell to APMs — The Future of Observability is MCP tools appeared first on Towards Data Science.

The past

It would be shortsighted to believe that this revolution stops at simply generating code, however. With AI agents on the loose and the ecosystem opening up to new integrations, the foundations of how we monitor, understand, and optimize software are being upended as well. The tools that served us well in a human-centric world, built around concepts such as manual alerts, datagrids, and dashboards, are becoming irrelevant and obsolete. Application Performance Monitoring (APM) platforms and, in particular, the way they leverage logs, metrics, and traces, will need to acknowledge that the human user with the time to browse, filter, and set thresholds is no longer available; the team has already delegated much of that work to AI.

Intelligent agents are becoming integral to the SDLC (Software Development Lifecycle), autonomously analyzing, diagnosing, and improving systems in real time. This emerging paradigm requires a new take on an old problem. For observability data to make agents and teams more productive, it must be structured for machines, not for humans. One recent technology that makes this possible has also rightfully received a lot of buzz lately: the Model Context Protocol (MCP).

MCPs in a nutshell

Initially introduced by Anthropic, the Model Context Protocol (MCP) represents a communication tier between AI agents and other applications, allowing agents to access additional data sources and perform actions as they see fit. More importantly, MCPs open up new horizons for the agent to intelligently choose to act beyond its immediate scope and thereby broaden the range of use cases it can address.
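To make the idea of a communication tier more concrete, here is a minimal sketch of how an application might expose one capability as a tool that an agent can discover and call. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are real method names in the protocol, but everything else here is an assumption for illustration: the in-memory registry, the `get_slow_endpoints` tool, and the sample data are hypothetical, and the tool listing is simplified (a real server would also publish an input schema and would normally be built on an MCP SDK).

```python
import json
from typing import Any, Callable

# Hypothetical in-memory tool registry; a real server would use an MCP SDK.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str) -> Callable:
    """Register a function as a callable tool under the given name."""
    def decorator(fn: Callable) -> Callable:
        TOOLS[name] = {"description": description, "handler": fn}
        return fn
    return decorator


@tool("get_slow_endpoints", "List endpoints whose p95 latency exceeds a threshold (ms).")
def get_slow_endpoints(threshold_ms: float) -> list[dict[str, Any]]:
    # Made-up sample data standing in for an observability backend.
    stats = [
        {"endpoint": "GET /orders", "p95_ms": 840.0},
        {"endpoint": "GET /health", "p95_ms": 12.0},
    ]
    return [s for s in stats if s["p95_ms"] > threshold_ms]


def handle_request(raw: str) -> str:
    """Dispatch a JSON-RPC 2.0 request for 'tools/list' or 'tools/call'."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        # Simplified listing: name and description only.
        result = {
            "tools": [
                {"name": name, "description": entry["description"]}
                for name, entry in TOOLS.items()
            ]
        }
    elif req["method"] == "tools/call":
        params = req["params"]
        handler = TOOLS[params["name"]]["handler"]
        output = handler(**params.get("arguments", {}))
        # Tool results are returned as a list of content items.
        result = {"content": [{"type": "text", "text": json.dumps(output)}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})
```

An agent that first calls `tools/list` learns that `get_slow_endpoints` exists and can then decide, on its own, whether calling it is relevant to the task at hand.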

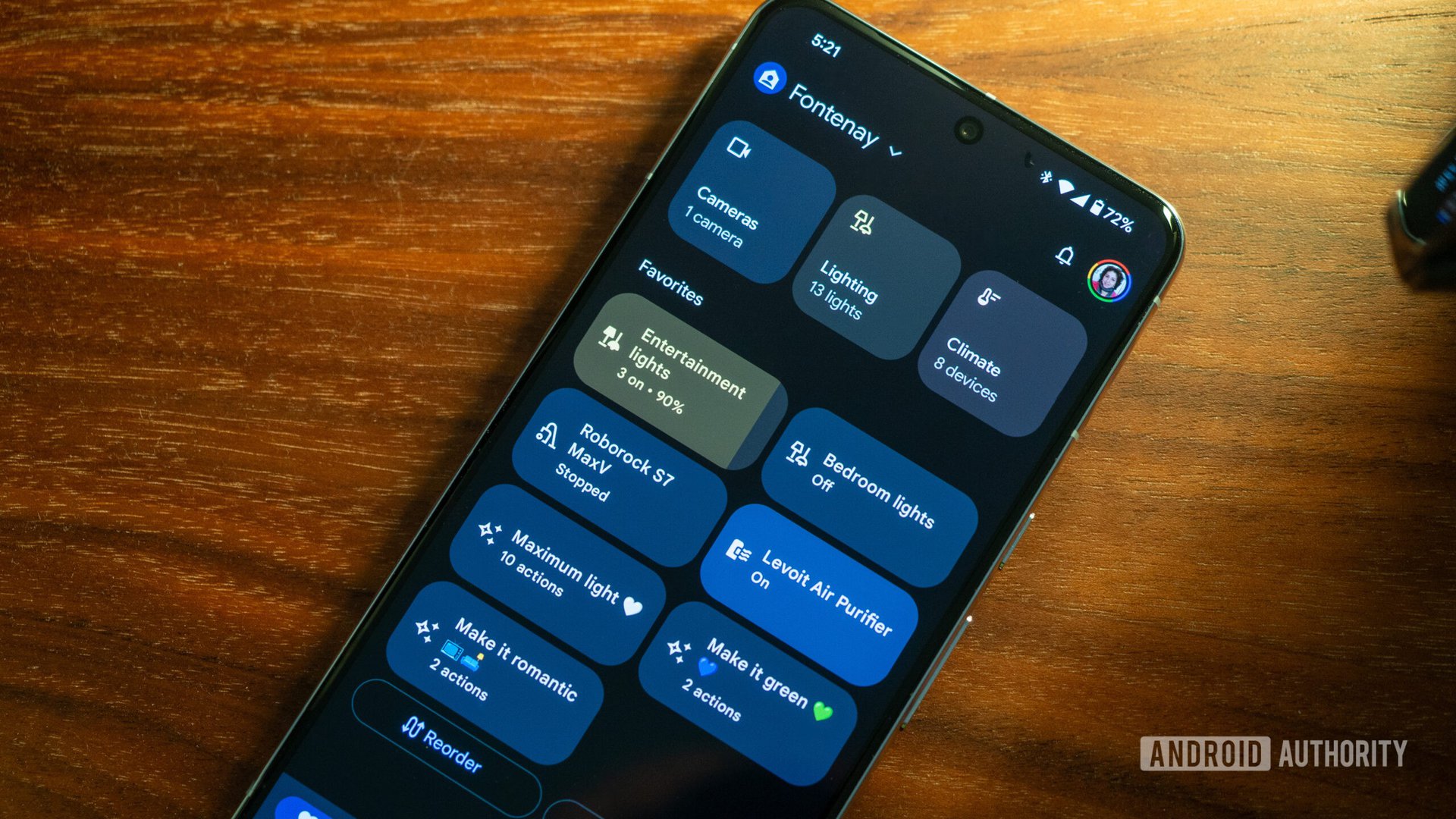

The technology is not new, but the ecosystem is. In my mind, it is the equivalent of evolving from custom mobile application development to having an app store. It is not by chance that it is currently experiencing growth of Cambrian proportions, as simply having a rich and standardized ecosystem opens up the market for new opportunities. More broadly speaking, MCPs represent an agent-centric model for creating new products that can transform how applications are built and the way in which they deliver value to end users.

The limitations of a human-centric model

Most software applications are built around humans as their primary users. Generally speaking, a vendor decides to invest in developing certain product features that it believes will match the requirements and needs of end users. The users then try to use that given set of features to fulfill their specific needs.

There are three main limitations to this approach, which are becoming more of an impediment as teams adopt AI agents to streamline their processes:

- Fixed interface— Product managers have to anticipate and generalize the use case to create the right interfaces in the application. The UI or API set is fixed and cannot adapt itself to each unique need. Consequently, users may find that some features are completely useless to their specific requirements. Other times, even with a combination of features, the user can’t get everything they need.

- Cognitive load — The process of interacting with the application data to get to the information the user needs requires manual effort, resources, and sometimes expertise. Taking APMs as an example, understanding the root cause of a performance issue and fixing it might take some investigation, as each issue is different. Lack of automation and reliance on voluntary manual processes often means that the data is not utilized at all.

- Limited scope — Each product often only holds a part of the picture needed to solve the specific requirement. For example, the APM might have the tracing data, but no access to the code, the GitHub history, Jira trends, infrastructure data, or customer tickets. It is left to the user to triage using multiple sources to get to the root of each problem.

Agent-centric MCPs — The inverted application

With the advent of MCPs, software developers now have the choice of adopting a different model for developing software. Instead of focusing on a specific use case, trying to nail the right UI elements for hard-coded usage patterns, applications can transform into a resource for AI-driven processes. This describes a shift from supporting a handful of predefined interactions to supporting numerous emergent use cases. Rather than investing in a specific feature, an application can now choose to lend its domain expertise to the AI agent via data and actions that can be used opportunistically whenever they are relevant, even if indirectly so.

As this model scales, the agent can seamlessly consolidate data and actions from different applications and domains, such as GitHub, Jira, observability platforms, analytics tools, and the codebase itself. The agent can then automate the analysis process itself as a part of synthesizing the data, removing the manual steps and the need for specialized expertise.

Observability is not a web application; it’s data expertise

Let’s take a look at a practical example that can illustrate how an agent-centric model opens up new neural pathways in the engineering process.

Every developer knows code reviews require a lot of effort; to make matters worse, the reviewer is often context-switched away from their other tasks, further draining the team’s productivity. On the surface, this would seem like an opportunity for observability applications to shine. After all, the code under review has already accumulated meaningful data running in testing and pre-production environments. Theoretically, this information can help decipher more about the changes, what they are impacting, and how they have possibly altered the system behavior. Unfortunately, the high cost of making sense of all of that data across multiple applications and data streams makes it next to useless.

In an agent-centric flow, however, whenever an engineer asks an AI agent to assist in reviewing the new code, that entire process becomes completely autonomous. In the background, the agent will orchestrate the investigative steps across multiple applications and MCPs, including observability tools, to bring back actionable insights about the code changes. The agent can access relevant runtime data (e.g., traces and logs from staging runs), analytics on feature usage, GitHub commit metadata, and even Jira ticket history. It then correlates the diff with the relevant runtime spans, flags latency regressions or failed interactions, and points out recent incidents that might relate to the modified code.
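One step in that flow, correlating the diff with runtime spans and flagging latency regressions, can be sketched as follows. This is only an illustration under stated assumptions: the `SpanStats` shape, the function names, and the 20% threshold are hypothetical, and a real agent would obtain the changed functions and span statistics from source control and observability tools rather than from literals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpanStats:
    function: str            # code location the span maps to (hypothetical naming)
    baseline_p95_ms: float   # p95 latency before the change
    current_p95_ms: float    # p95 latency in a staging run with the change


def flag_regressions(changed_functions, stats, min_increase=0.2):
    """Return (function, relative increase) pairs for changed functions
    whose p95 latency grew by at least min_increase, worst first."""
    changed = set(changed_functions)
    findings = []
    for s in stats:
        # Only consider code touched by the diff, and skip empty baselines.
        if s.function not in changed or s.baseline_p95_ms <= 0:
            continue
        increase = (s.current_p95_ms - s.baseline_p95_ms) / s.baseline_p95_ms
        if increase >= min_increase:
            findings.append((s.function, round(increase, 2)))
    return sorted(findings, key=lambda f: f[1], reverse=True)


# Made-up example: two functions changed in the diff, three observed at runtime.
changed = ["orders.create", "orders.list"]
stats = [
    SpanStats("orders.create", 100.0, 150.0),
    SpanStats("orders.list", 200.0, 210.0),
    SpanStats("users.get", 50.0, 200.0),  # slower, but untouched by this diff
]
regressions = flag_regressions(changed, stats)
```

Restricting the comparison to functions touched by the diff is what keeps the result relevant to the review: `users.get` also got slower in this made-up data, but it is not reported because the change under review did not modify it.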

In this scenario, the developer doesn’t need to sift through different tools or tabs or spend time trying to connect the dots; the agent brings it all together behind the scenes, identifying issues as well as possible fixes. The response itself is dynamically generated: it may begin with a concise textual summary, expand into a table showing metrics over time, include a link to the affected file in GitHub with highlighted changes, and even embed a chart visualizing the timeline of errors before and after the release.

While the above workflow was organically produced by an agent, some AI clients allow the user to cement a desired workflow by adding rules to the agent’s memory. For example, I am currently using a memory file with Cursor to ensure that all code review prompts consistently trigger checks against the test environment and check for usage based on production.

Death by a thousand use cases

The code review scenario is just one of many emergent use cases that demonstrate how AI can quietly make use of relevant MCP data to help the user accomplish their goals. More importantly, the user does not need to be aware of the applications that are being used autonomously by the agent. From the user’s perspective, they just need to describe their need.

Emergent use cases can enhance user productivity across the board with data that cannot be made accessible otherwise. Here are a few other examples where observability data can make a huge difference, without anyone having to visit a single APM web page:

- Test generation based on real usage

- Selecting the right areas to refactor based on code issues affecting performance the most

- Preventing breaking changes when code is still checked out

- Detecting unused code

Products need to change

Making observability useful to the agent, however, is a little more involved than slapping an MCP adapter onto an APM. Indeed, many of the current generation of tools, in rushing to support the new technology, took that very route, not taking into consideration that AI agents also have their limitations.

While smart and powerful, agents cannot instantly replace any application interacting with any data, on demand. In their current iteration, at least, they are bound by the size of the dataset and stop short of applying more complex ML algorithms or even higher-order math. If the observability tool is to become an effective data provider to the agent, it must prepare the data in advance in light of these limitations. More broadly speaking, this defines the role of products in the age of AI: providing islands of nontrivial domain expertise to be utilized in an AI-driven process.

There are many posts on the best way to prepare data for use by generative AI agents, and I have included some links at the end of this post. However, we can describe some of the requirements of a good MCP output in broad strokes:

- Structured (schema-consistent, typed entities)

- Preprocessed (aggregated, deduplicated, tagged)

- Contextualized (grouped by session, lifecycle, or intent)

- Linked (references across code spans, logs, commits, and tickets)
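As a rough illustration of those four properties, the sketch below models a single finding as typed entities and serializes it with a stable key order. All of the names here (`Finding`, `Links`, the field names, and the sample values) are hypothetical and are not taken from any particular product's schema.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class Links:
    """Linked: references that let the agent jump to related sources."""
    code_location: str
    commit: Optional[str] = None
    ticket: Optional[str] = None
    sample_trace_ids: List[str] = field(default_factory=list)


@dataclass
class Finding:
    """Structured: one typed entity per issue, with a consistent schema."""
    kind: str               # e.g. "latency_regression"
    endpoint: str
    occurrences: int        # Preprocessed: already aggregated and deduplicated
    affected_share: float   # fraction of traces showing the issue
    environment: str        # Contextualized: where the issue was observed
    links: Links


def to_tool_output(findings: List[Finding]) -> str:
    """Serialize findings with sorted keys so the schema stays stable."""
    return json.dumps({"findings": [asdict(f) for f in findings]}, sort_keys=True)


# Made-up example values for illustration only.
example = Finding(
    kind="latency_regression",
    endpoint="GET /orders",
    occurrences=1250,
    affected_share=0.05,
    environment="staging",
    links=Links(code_location="orders/service.py", sample_trace_ids=["t-1", "t-2"]),
)
payload = to_tool_output([example])
```

Optional links default to `None` instead of being omitted, so the agent sees the same keys in every finding and can tell "no linked ticket" apart from a missing field.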

Instead of surfacing raw telemetry, an MCP must feed a coherent data narrative to the agent, post-analysis. The agent is not just a dashboard view to be rendered. At the same time, the MCP must also make the relevant raw data available on demand to allow further investigation and to support the agent’s autonomous reasoning actions.

Given simple access to raw data, it would be next to impossible for an agent to identify an issue manifesting in the trace internals of only 5% of the millions of available traces, let alone prioritize that problem based on its system impact or determine whether that pattern is anomalous.
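The kind of preprocessing this calls for can be sketched as a simple aggregation: group traces by a detected pattern, compute the share of traces affected, and rank by total time spent so the agent receives a short prioritized list instead of millions of rows. The trace fields used here (`trace_id`, `endpoint`, `pattern`, `duration_ms`) are assumptions for illustration, and the pattern detection itself, which is the hard part, is assumed to have happened upstream.

```python
from collections import defaultdict


def summarize_patterns(traces, max_samples=3):
    """Group traces by (endpoint, pattern) and rank the groups by the
    total time spent in affected traces, highest impact first."""
    total = len(traces)
    if total == 0:
        return []
    groups = defaultdict(lambda: {"count": 0, "total_ms": 0.0, "samples": []})
    for t in traces:
        if t["pattern"] is None:  # no issue was detected in this trace
            continue
        g = groups[(t["endpoint"], t["pattern"])]
        g["count"] += 1
        g["total_ms"] += t["duration_ms"]
        # Keep a few raw trace ids so the agent can drill down on demand.
        if len(g["samples"]) < max_samples:
            g["samples"].append(t["trace_id"])
    summary = [
        {
            "endpoint": endpoint,
            "pattern": pattern,
            "affected_share": g["count"] / total,
            "total_ms": g["total_ms"],
            "sample_trace_ids": g["samples"],
        }
        for (endpoint, pattern), g in groups.items()
    ]
    return sorted(summary, key=lambda s: s["total_ms"], reverse=True)


# Made-up data: 100 traces, 5 with a costly pattern, 2 with a cheap one.
traces = [
    {"trace_id": f"t-{i}", "endpoint": "GET /orders", "pattern": None, "duration_ms": 40.0}
    for i in range(93)
]
traces += [
    {"trace_id": f"n-{i}", "endpoint": "GET /orders", "pattern": "n_plus_one_query", "duration_ms": 900.0}
    for i in range(5)
]
traces += [
    {"trace_id": f"s-{i}", "endpoint": "GET /users", "pattern": "slow_serialization", "duration_ms": 100.0}
    for i in range(2)
]
ranked = summarize_patterns(traces)
```

Ranking by total time rather than by frequency alone is one possible proxy for system impact; a real product would likely weigh in other signals as well, such as error rates or how critical the endpoint is.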

To bridge that gap, many products will likely evolve into ‘AI preprocessors’, bringing forth dedicated ML processes and high-level statistical analysis as well as domain expertise.

Farewell to APMs

Ultimately, APMs are not legacy tools — they are representative of a legacy mindset that is slowly but surely being replaced. It might take more time for the industry to realign, but it will ultimately impact many of the products we currently use, especially in the software industry, which is racing to adopt generative AI.

As AI becomes more dominant in developing software, it will also no longer be limited to human-initiated interactions. Generative AI reasoning will be used as a part of the CI process and, in some cases, will even run indefinitely as background processes that continuously check data and perform actions. With that in mind, more and more tools will introduce an agent-centric model to complement, and sometimes replace, their direct-to-human approach, or risk being left out of their clients’ new AI SDLC stack.

Links and resources

- Airbyte: Normalization is key — schema consistency and relational linking improve cross-source reasoning.

- Harrison Clarke: Preprocessing must hit the sweet spot — rich enough for inference, structured enough for precision.

- DigitalOcean: Aggregation by semantic boundaries (user sessions, flows) unlocks better chunking and story-based reasoning.

Want to Connect? You can reach me on Twitter at @doppleware or via LinkedIn.

Follow my MCP for dynamic code analysis using observability at https://github.com/digma-ai/digma-mcp-server

