AI Agents To Drive Scientific Discovery Within a Year, Altman Predicts

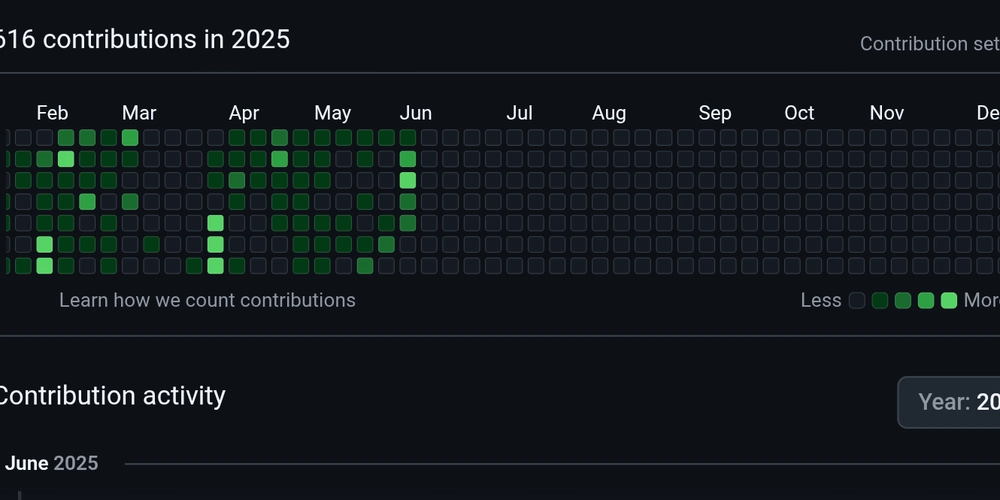

At the current pace of AI development, AI agents will be able to drive scientific discovery and solve tough technical and engineering problems within a year, OpenAI CEO and co-founder Sam Altman said at the Snowflake Summit 25 conference in San Francisco Monday.

“I would bet next year that in some limited cases, at least in some small ways, we start to see agents that can help us discover new knowledge or can figure out solutions to business problems that are kind of very non-trivial,” Altman said in a fireside conversation with Snowflake CEO Sridhar Ramaswamy and moderator Sarah Guo.

“Right now, it’s very much in the category of, okay, if you’ve got some repetitive cognitive work, you can automate it at a kind of a low-level on a short time horizon,” Altman said. “And as that expands to longer time horizons and higher and higher levels, at some point you get to add a scientist, an AI agent, that can go discover new science. And that would be kind of a significant moment in the world.”

We’re not far from being able to ask AI models to work on our hardest problems, and the models will actually be able to solve them, Altman said.

“If you’re a chip design company, say go design me a better chip than I could have possibly had before,” he said. “If you’re a biotech company trying to cure some disease state, just go work on this for me. Like, that’s not so far away.”

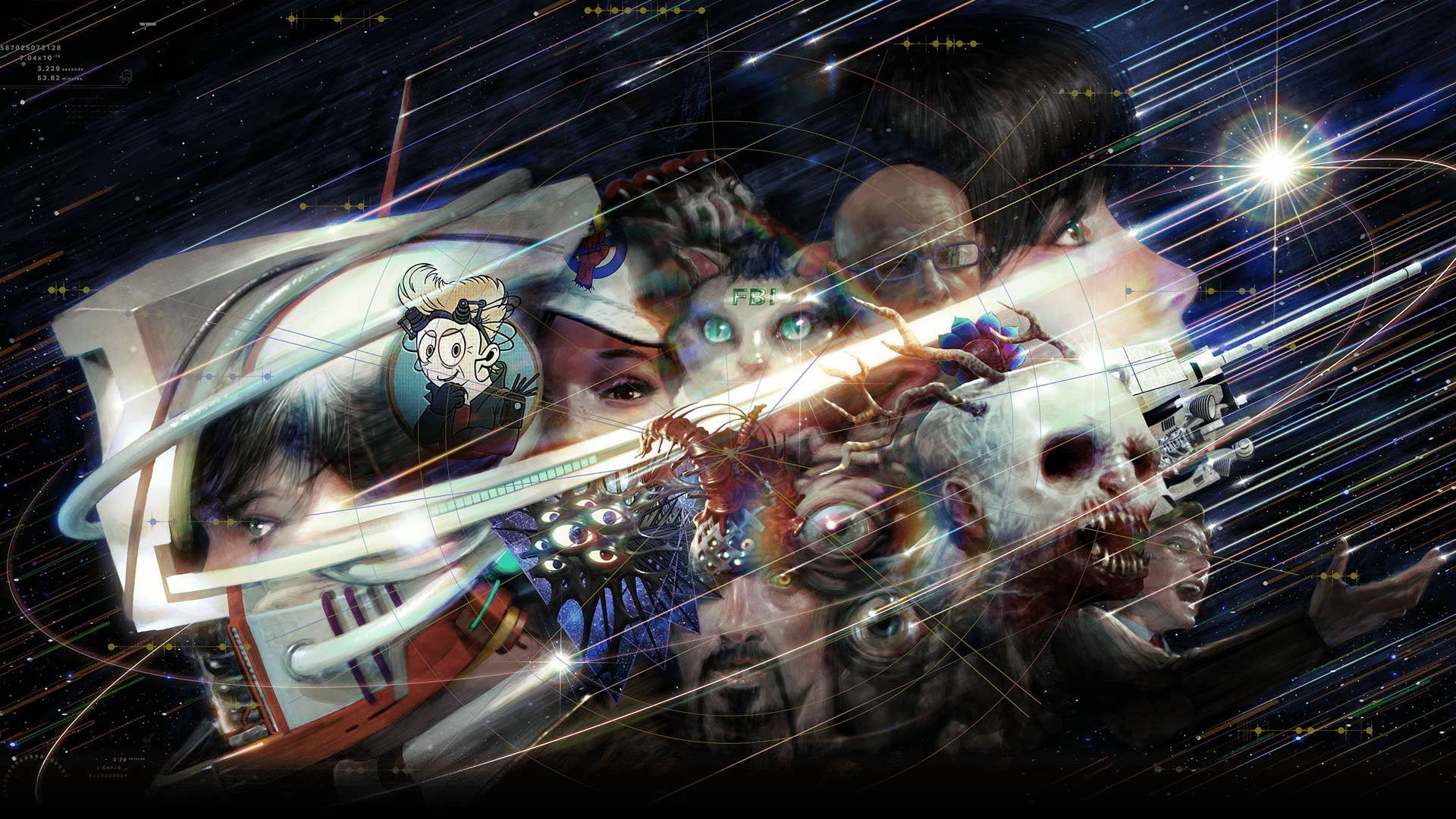

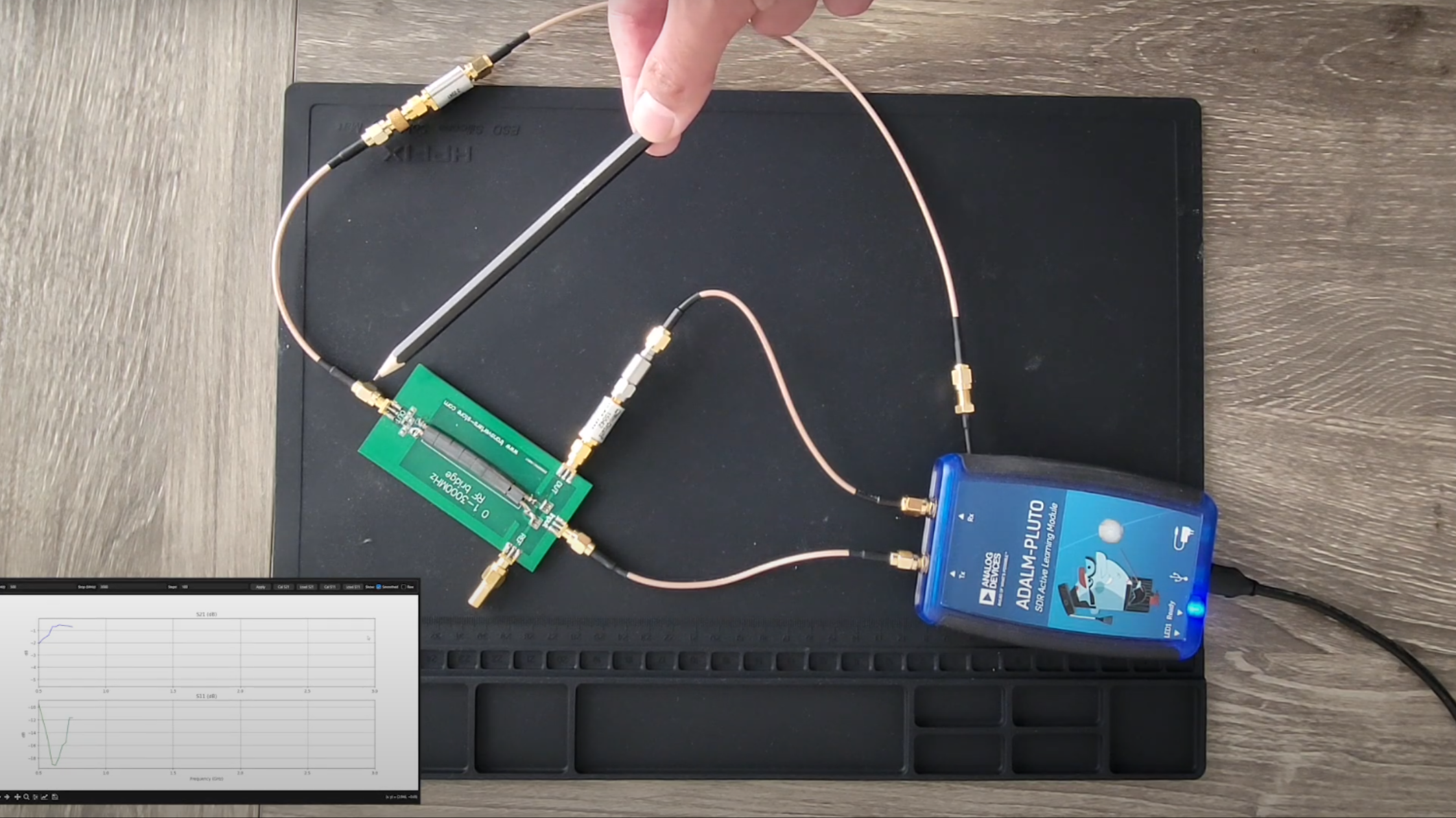

Sam Altman (left) talks with Sarah Guo (center) and Sridhar Ramaswamy during the opening keynote for Snowflake Summit 25 on June 2, 2025.

The potential for AI to assist with scientific discovery is indeed enticing. Many private and public computing labs are experimenting with AI models to determine how they can be applied to tackle humanity’s toughest problems. Many of these researchers will be attending the Trillion Parameter Consortium’s conference next month to share their progress. The TPC25 All Hands Hackathon and Conference will be held in San Jose July 28-31.

The progress over the next year or two will be “quite breathtaking,” Altman said. “There’s a lot of progress ahead of us, a lot of improvement to come,” he said. “And like we have seen in the previous big jumps from GPT3 to GPT4, businesses can just do things that totally weren’t possible with the previous generation of models.”

Guo, who is the founder of the venture capital firm Conviction, also asked Altman and Ramaswamy about AGI, or artificial general intelligence. Altman said the definition of AGI keeps changing. If you could travel back in time to 2020 and give people access to ChatGPT as it exists today, they would say that it has definitely reached AGI, Altman said.

While we hit the training wall for AI in 2024, we continue to make progress on the inference side of things. The emergence of reasoning models, in particular, is improving the accuracy of generative AI and raising the difficulty of the problems we’re asking AI to help solve.

Ramaswamy, who arrived at Snowflake in 2023 when his neural search firm Neeva was acquired, talked about the “aha” moment he had working with GPT-3.

“When you saw this problem of abstractive summarization actually get tackled nicely by GPT, which is basically taking a block that’s 1,500 words and writing three sentences to describe it–it’s really hard,” he said. “People struggle with doing this, and these models all of a sudden were doing it…That was a bit of a moment when it came to, oh my God, there is incredible power here. And of course it’s kept adding up.”

With the proper context setting, there is nothing to stop today’s AI models from solving bigger and tougher problems, he said. Does that mean we’ll hit AGI soon? At some level, the question is absurd, Ramaswamy told Guo.

“I see these models as having incredible capabilities,” he said. “Any person looking at what things are going to be like in 2030, we just declare that that’s AGI. But remember, you and I, to Sam’s point, would say the same thing in 2020 about what we are saying in ’25. To me, it’s the rate of progress that is truly astonishing. And I sincerely believe that many great things are going to come out of it.”

Altman concurred. While context is a human concept that’s infinite, the potential to improve AI by sharing more and better context with the models will drive tremendous improvement in the capability of AI over the next year or two, Altman said.

“These models’ ability to understand all the context you want to possibly give them, connect to every tool, every system, whatever, and then go think really hard, like, really brilliant reasoning and come back with an answer and have enough robustness that you can trust them to go off and do some work autonomously like that–I don’t know if I thought that would feel so close, but it feels really close,” he said.

If you hypothetically had 1,000 times more compute to throw at a problem, you probably wouldn’t spend that on training a better model. But with today’s reasoning models, that could potentially have an impact, according to Altman.

“If you try more times on a hard problem, you can get much better answers already,” he said. “And a business that just said I’m going to throw a thousand times more compute at every problem would get some amazing results. Now you’re not literally going to do that. You don’t have 1000 X compute. But the fact that that’s now possible, I think, does point [to an] interesting thing people could do today, which is say, okay, I’m going to really treat this as a power law and be willing to try a lot more compute for my hardest problems or most valuable things.”
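Altman’s point about trying more times on a hard problem can be made concrete with a little arithmetic. The sketch below is illustrative only and is not a method Altman described: it assumes each attempt succeeds independently with the same probability, and that a trustworthy scoring function exists to pick the best candidate. The names `success_probability`, `best_of_n`, `generate`, and `score` are hypothetical.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def success_probability(p_single: float, attempts: int) -> float:
    """Chance that at least one of `attempts` tries succeeds.

    Assumes every try is independent and succeeds with probability
    `p_single`; real model samples are usually correlated, so this is
    an optimistic estimate.
    """
    return 1.0 - (1.0 - p_single) ** attempts


def best_of_n(generate: Callable[[], T], score: Callable[[T], float], attempts: int) -> T:
    """Call `generate` `attempts` times and keep the highest-scoring result.

    `generate` stands in for one model call and `score` for a verifier
    (a test suite, a simulator, a human check); both are placeholders.
    """
    candidates = [generate() for _ in range(attempts)]
    return max(candidates, key=score)


if __name__ == "__main__":
    # How the odds move when a problem is solved only 1% of the time per try.
    for n in (1, 10, 100, 1000):
        print(f"{n:>4} attempts: {success_probability(0.01, n):.3f}")
```

Under these assumptions the gains are steep at first and then flatten, which is one reason to reserve the extra compute for the hardest or most valuable problems, as Altman suggests. The scheme also depends entirely on the scorer: without a reliable way to recognize a good answer, more attempts do not help.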

AI training has hit a wall; users are pushing more compute resources to inference. (Source: Gorodenkoff/Shutterstock)

What people really mean when they say AGI isn’t passing the Turing Test, which today’s GenAI models have already passed. What they really mean is the moment at which AI models achieve consciousness, Guo said.

For Altman, the better question might be: When do AI models achieve superhuman capabilities? He gave an interesting description of what that would look like.

“The framework that I like to think about–this is not something we’re about to ship–but like the Platonic ideal is a very tiny model that has superhuman reasoning capabilities,” he said. “It can run ridiculously fast, and 1 trillion tokens of context and access to every tool you can possibly imagine. And so it doesn’t matter what the problem is. It doesn’t matter whether the model has the knowledge or the data in it or not. Using these models as databases is sort of ridiculous. It’s a very slow, expensive, very broken database. But the amazing thing is they can reason. And if you think of it as this reasoning engine that we can then throw like all of the possible context of a business or a person’s life into and any tool that they need for that physics simulator or whatever else, that’s like quite amazing what people can do. And I think directionally we’re headed there.”

This article first appeared on BigDATAwire.
