Meta’s Full-stack HHVM optimizations for GenAI

The post Meta’s Full-stack HHVM optimizations for GenAI appeared first on Engineering at Meta.

As Meta has launched new, innovative products leveraging generative AI (GenAI), we need to make sure the underlying infrastructure components evolve along with them. Applying infrastructure knowledge and optimizations has allowed us to adapt to changing product requirements, delivering a better product along the way. Ultimately, our infrastructure systems need to balance our need to ship high-quality experiences with a need to run systems sustainably.

Splitting GenAI inference traffic out into a dedicated WWW tenant, which allows specialized runtime and warm-up configuration, has enabled us to meet both of those goals while delivering a 30% improvement in latency.

Who we are

As the Web Foundation team, we operate Meta’s monolithic web tier, running Hack. The team is composed of cross-functional engineers who make sure the infrastructure behind the web tier is healthy and well designed. We jump into incident response, work on some of the most complex areas of the infrastructure, and help build whatever we need to keep the site happily up and running.

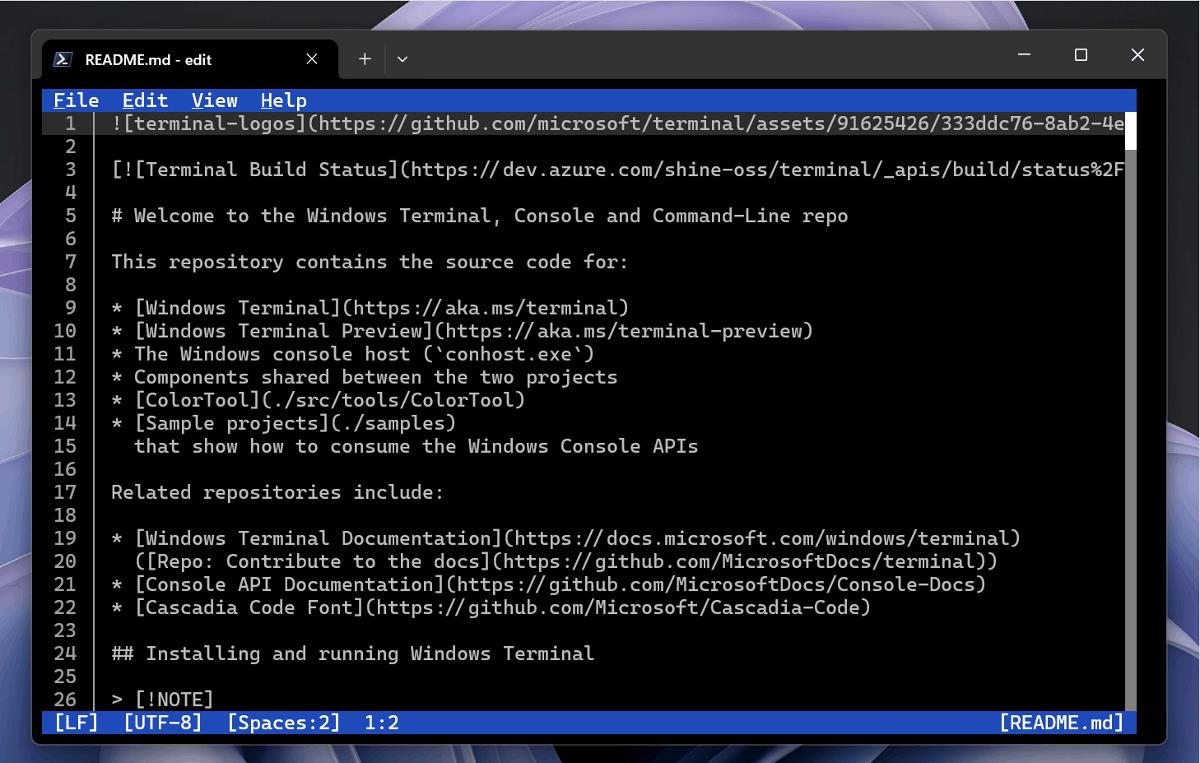

To accomplish this, we have established a series of best practices on being a “good citizen” of the shared tier. We need to ensure that all requests comply with these guidelines to prevent issues from spilling over and affecting other teams’ products. One core rule is the request runtime—limiting a request to 30 seconds of execution. This is a consequence of the HHVM (HipHop Virtual Machine) runtime—each request has a corresponding worker thread, of which there is a finite number. To ensure there are always threads available to serve incoming requests, we need to balance the resources available on each host with its expected throughput. If requests are taking too long, there will be fewer available threads to process new requests, leading to user-visible unavailability.

The changing landscape

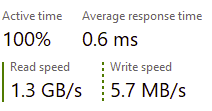

Classically, webservers at Meta are optimized for serving front-end requests—rendering webpages and serving GraphQL queries. These requests’ latency is typically measured in hundreds of milliseconds to seconds (substantially below the 30-second limit), which enables hosts to process approximately 500 queries per second.
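As a rough back-of-the-envelope check, Little's law (average requests in flight = throughput × average latency) shows why request duration matters so much when each request holds a worker thread. The sketch below is illustrative only: the 500 queries per second and the 30-second limit come from the text, while the 0.4-second average latency and the 200-thread pool are assumed values.

```python
def concurrent_requests(qps: float, avg_latency_s: float) -> float:
    """Little's law: average in-flight requests = arrival rate * time in system."""
    return qps * avg_latency_s


def max_qps(worker_threads: int, avg_latency_s: float) -> float:
    """Upper bound on throughput when every request occupies one thread."""
    return worker_threads / avg_latency_s


# 500 QPS is from the text; 0.4 s average latency is an assumption.
front_end_in_flight = concurrent_requests(qps=500, avg_latency_s=0.4)

# Assumed pool of 200 threads, with every request running for the full 30 s limit.
slow_request_qps = max_qps(worker_threads=200, avg_latency_s=30.0)

print(f"front-end requests in flight: {front_end_in_flight:.0f}")
print(f"throughput ceiling with 30 s requests: {slow_request_qps:.1f} QPS")
```

Under these assumptions, the same pool that comfortably serves short front-end requests can sustain only a handful of long-running requests per second.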

Additionally, a web server spends about two-thirds of its time doing input/output (I/O) and the remaining third doing CPU work. This has influenced the design of the Hack language, which supports asyncio, a type of cooperative multitasking. All the core libraries support these primitives, which increases performance and decreases the amount of time the CPU sits idle waiting for I/O.
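To make the idea of cooperative multitasking concrete, here is a minimal sketch using Python's `asyncio` as an analogy; it is not Hack code, and the `fake_io` helper and its delays are hypothetical stand-ins for real network or storage calls. Three independent waits overlap, so the request takes roughly as long as the slowest one rather than the sum of all three.

```python
import asyncio
import time
from typing import List, Tuple


async def fake_io(name: str, delay_s: float) -> str:
    # Stand-in for a network or storage call; awaiting yields to the event loop.
    await asyncio.sleep(delay_s)
    return name


async def handle_request() -> List[str]:
    # The three fetches are independent, so they can wait concurrently.
    results = await asyncio.gather(
        fake_io("user", 0.1),
        fake_io("feed", 0.1),
        fake_io("ads", 0.1),
    )
    return list(results)


def run_demo() -> Tuple[List[str], float]:
    start = time.perf_counter()
    results = asyncio.run(handle_request())
    elapsed_s = time.perf_counter() - start
    return results, elapsed_s


if __name__ == "__main__":
    names, elapsed = run_demo()
    print(names, f"{elapsed:.2f}s")
```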

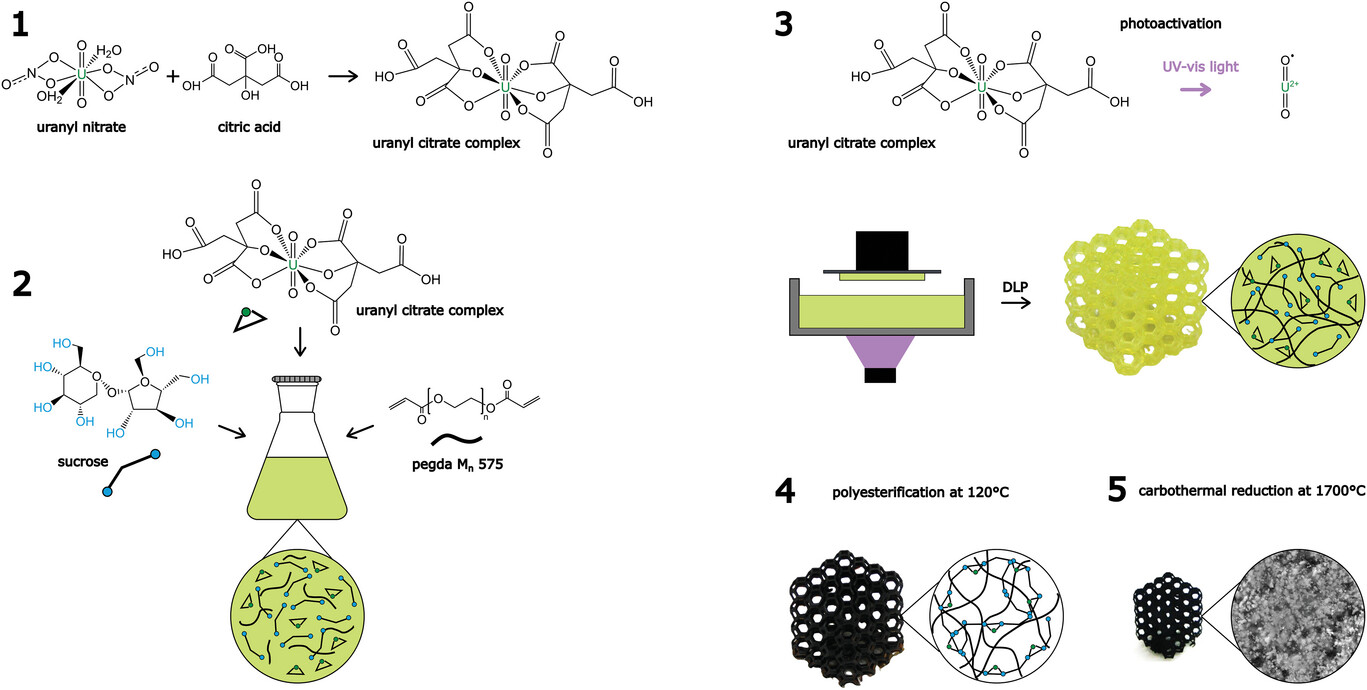

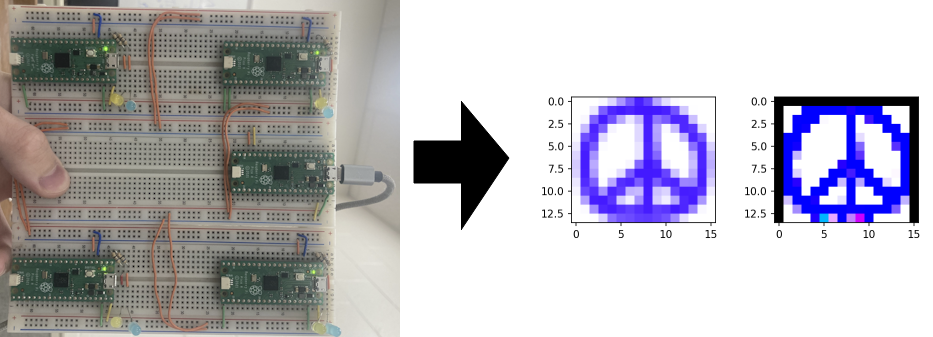

GenAI products, especially LLMs, have a different set of requirements. These are driven by the core inference flow: The model responds with a stream of tokens that can take seconds or minutes to complete. A user may see this as a chatbot “typing” a response. This isn’t an effect to make our products seem friendlier; it’s the speed at which our models think! After a user submits a query to the model, we need to start streaming these responses back to the user as fast as possible. On top of that, the total latency of the request is now substantially longer (measured in seconds to minutes). These properties place two demands on the infrastructure: minimal overhead on the critical path before calling the LLM, and support for a long duration for the rest of the request, most of which is spent waiting on I/O. (See Figures 1 and 2 below.)
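The two latencies that matter here, time to first token and total request duration, can be illustrated with a small Python sketch. The `fake_llm_stream` generator and its per-token delay are hypothetical; a real model client would stream tokens over the network.

```python
import time
from typing import Iterator, List, Optional, Tuple


def fake_llm_stream(tokens: List[str], delay_s: float) -> Iterator[str]:
    # Hypothetical model: emits one token every delay_s seconds.
    for token in tokens:
        time.sleep(delay_s)
        yield token


def measure_stream(stream: Iterator[str]) -> Tuple[Optional[float], float, str]:
    """Return (time to first token, total time, full text) for a token stream."""
    start = time.perf_counter()
    first_token_s: Optional[float] = None
    parts: List[str] = []
    for token in stream:
        if first_token_s is None:
            # What the user perceives as responsiveness.
            first_token_s = time.perf_counter() - start
        parts.append(token)
    total_s = time.perf_counter() - start
    return first_token_s, total_s, "".join(parts)


if __name__ == "__main__":
    ttft, total, text = measure_stream(fake_llm_stream(["Hel", "lo", "!"], 0.01))
    print(f"first token after {ttft:.3f}s, done after {total:.3f}s: {text}")
```

Any work done before the model is called adds directly to the first number, while the worker thread stays occupied for the whole of the second.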

A series of optimizations

This shift in requirements allowed Web Foundation to reexamine the rules of running the monolithic web tier. We then launched a dedicated web tenant (a standalone deployment of WWW) that allowed custom configuration, which we could better tune to the needs of the workload.

Request timeout

First, running on an isolated web tier allowed us to increase the runtime limit for GenAI requests. This is a straightforward change, but it allowed us to isolate the longer-running traffic to avoid adverse impacts on the rest of the production tier. This way, we can avoid requests timing out if inference takes longer than 30 seconds.
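A minimal sketch of the effect of a per-tenant runtime limit, again using Python's `asyncio` as a stand-in for the real runtime. The durations and limits are scaled-down, assumed values chosen so the example runs quickly; they are not Meta's configuration.

```python
import asyncio
from typing import Tuple


async def fake_inference(duration_s: float) -> str:
    # Hypothetical long-running inference call.
    await asyncio.sleep(duration_s)
    return "done"


async def run_with_limit(duration_s: float, limit_s: float) -> str:
    # Enforce a runtime limit on the request; report a timeout instead of raising.
    try:
        return await asyncio.wait_for(fake_inference(duration_s), timeout=limit_s)
    except asyncio.TimeoutError:
        return "timed out"


def demo() -> Tuple[str, str]:
    # Same request, two tenants: a short shared-tier limit and a longer dedicated one.
    shared = asyncio.run(run_with_limit(duration_s=0.05, limit_s=0.01))
    dedicated = asyncio.run(run_with_limit(duration_s=0.05, limit_s=1.0))
    return shared, dedicated


if __name__ == "__main__":
    print(demo())
```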

Thread-pool sizing

Running requests for longer means there is reduced availability of worker threads (which, remember, map 1:1 with processed requests). To compensate, we increased the size of the thread pool. Since webservers have a finite amount of memory, we can divide the total memory available by the per-request memory limit to get the peak number of requests a host can execute simultaneously, and therefore how many worker threads it can support. We ended up running with approximately 1,000 threads on GenAI hosts, compared to a couple of hundred on normal webservers.
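The sizing rule described above is a single division. In the sketch below, the 64 GiB of available memory and the 64 MiB per-request limit are assumed values, picked only so that the result lands near the roughly 1000 threads mentioned in the text; the actual host and limit sizes are not given.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def max_worker_threads(available_memory_bytes: int, per_request_limit_bytes: int) -> int:
    """Peak simultaneous requests = memory available / per-request memory limit."""
    if per_request_limit_bytes <= 0:
        raise ValueError("per-request memory limit must be positive")
    # Floor division: a partial request slot cannot be used.
    return available_memory_bytes // per_request_limit_bytes


# Assumed values, not figures from Meta.
genai_threads = max_worker_threads(64 * GIB, 64 * MIB)
print(genai_threads)
```

The bound assumes every request may reach its memory limit at the same time, which makes it a conservative ceiling rather than a typical operating point.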

JIT cache and “jumpstart”

HHVM uses a just-in-time (JIT) compiler, which means the first time a given function executes, the machine needs to compile it to lower-level machine code for execution. Additionally, a technique called Jump-Start allows a webserver to seed its JIT cache with outputs from a previously warmed server. By allowing GenAI hosts to use Jump-Start profiles from the main web tier, we are able to greatly speed up execution, even though the two tiers do not execute exactly the same code.
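The following toy model, written in Python, illustrates why a borrowed profile helps even when it does not match the workload exactly. It is not how HHVM implements its JIT or Jump-Start: the `ToyJit` class, its method names, and the function names are all hypothetical, and "compiling" is reduced to a counted one-time step.

```python
from typing import Callable, Dict, Iterable


class ToyJit:
    """Toy model: each function is 'compiled' once, then served from a cache."""

    def __init__(self, sources: Dict[str, str]) -> None:
        self.sources = sources  # function name -> expression over x
        self.cache: Dict[str, Callable[[int], int]] = {}
        self.compiles_while_serving = 0

    def _compile(self, name: str) -> Callable[[int], int]:
        code = compile(self.sources[name], name, "eval")
        return lambda x: eval(code, {"x": x})

    def jump_start(self, profile: Iterable[str]) -> None:
        # Precompile functions that a warmed server reported as hot.
        # Names this server does not know about are simply skipped.
        for name in profile:
            if name in self.sources:
                self.cache[name] = self._compile(name)

    def call(self, name: str, x: int) -> int:
        if name not in self.cache:  # cold path: compile on first use
            self.compiles_while_serving += 1
            self.cache[name] = self._compile(name)
        return self.cache[name](x)


sources = {"render": "x + 1", "auth": "x * 2", "llm_prompt": "x - 3"}
jit = ToyJit(sources)
jit.jump_start(["render", "auth", "feed_only"])  # profile from another tier

shared_result = jit.call("auth", 5)        # already compiled at startup
genai_result = jit.call("llm_prompt", 5)   # not in the profile, compiled now
```

Only the function missing from the borrowed profile pays the compile cost while serving; everything the two tiers share is already warm.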

Request warm-up

HHVM also supports executing dummy requests at server startup and discarding their results. The intent here is to warm non-code caches within the webserver. Configuration values and service discovery info are normally fetched inline the first time they are needed and then cached within the webserver. By fetching and caching this information in warm-up requests, we prevent our users from observing the latency of these initial fetches.
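A minimal Python sketch of the warm-up idea, under stated assumptions: `LazyCache`, `handle`, and `warm_up` are hypothetical names, and the fetch function stands in for real configuration and service-discovery lookups. Warm-up requests run the normal handler and throw the response away, leaving the cache populated before user traffic arrives.

```python
from typing import Callable, Dict, Iterable


class LazyCache:
    """Fetches a value inline on first use, then serves it from memory."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch
        self._values: Dict[str, str] = {}
        self.inline_fetches = 0  # fetches paid for inside a request

    def get(self, key: str) -> str:
        if key not in self._values:
            self.inline_fetches += 1
            self._values[key] = self._fetch(key)
        return self._values[key]


def handle(cache: LazyCache, path: str) -> str:
    region = cache.get("config:region")
    backend = cache.get("discovery:" + path)
    return f"{path} via {backend} in {region}"


def warm_up(cache: LazyCache, paths: Iterable[str]) -> None:
    for path in paths:
        handle(cache, path)  # response is discarded; only the cache fill matters
    cache.inline_fetches = 0  # from here on, count only fetches seen by users


cache = LazyCache(fetch=lambda key: key.upper())  # stand-in for a slow remote fetch
warm_up(cache, ["/chat"])
first_user_response = handle(cache, "/chat")
```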

Shadow traffic

Finally, Meta heavily uses real-time configuration to control feature rollouts, which means that Jump-Start profiles consumed at startup time might not cover all future code paths the server will execute. To maintain coverage in the steady state, we also added request shadowing, so that code paths enabled by gating changes are still covered in the JIT cache.
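One way to picture this is the toy Python model below. How shadowing is actually wired up is not described here, so the `Host` class, the gate name, and the every-Nth sampling rule are all assumptions made for illustration. A gate flips after startup, shadowed copies of requests exercise the new code path with their responses discarded, and the next real user request finds that path already compiled.

```python
from typing import Dict, Iterable, Set


class Host:
    def __init__(self, warm_paths: Iterable[str]) -> None:
        self.compiled: Set[str] = set(warm_paths)
        self.user_visible_cold_hits = 0

    def execute(self, code_path: str, shadow: bool = False) -> None:
        if code_path not in self.compiled:
            if not shadow:
                self.user_visible_cold_hits += 1  # a real user paid the compile cost
            self.compiled.add(code_path)


def code_path_for(gates: Dict[str, bool]) -> str:
    # Real-time configuration decides which code runs for a request.
    return "new_flow" if gates.get("new_flow") else "old_flow"


def shadow_traffic(num_requests: int, host: Host, gates: Dict[str, bool], every_nth: int) -> int:
    """Replay every Nth request as a shadow request; responses are discarded."""
    shadowed = 0
    for i in range(num_requests):
        if i % every_nth == 0:
            host.execute(code_path_for(gates), shadow=True)
            shadowed += 1
    return shadowed


host = Host(warm_paths=["old_flow"])   # coverage from the startup profile
gates = {"new_flow": True}             # gate flipped after startup
shadowed_count = shadow_traffic(10, host, gates, every_nth=5)
host.execute(code_path_for(gates))     # first real user request on the new path
```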

