Enhancing AI Integrations with MCP and Azure API Management


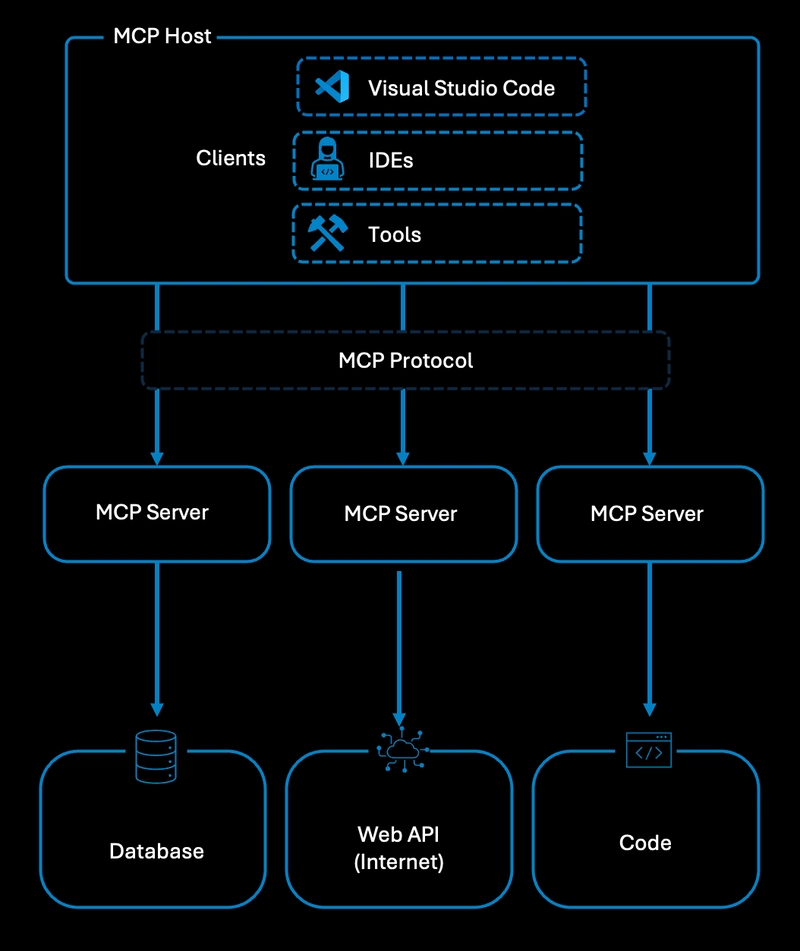

As AI agents and assistants become increasingly central to modern applications and experiences, the need for seamless, secure integration with external tools and data sources is more critical than ever. The Model Context Protocol (MCP) is emerging as a key open standard enabling these integrations - allowing AI models to interact with APIs, databases, and other services in a consistent, scalable way.

Understanding MCP

MCP uses a client-host-server architecture built on JSON-RPC 2.0 for messaging. Communication between clients and servers occurs over defined transport layers, primarily:

stdio: Standard input/output, suitable for efficient communication when the client and server run on the same machine.

HTTP with Server-Sent Events (SSE): Uses HTTP POST for client-to-server messages and SSE for server-to-client messages, enabling communication over networks, including remote servers.
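To make the messaging layer concrete, here is a minimal sketch of what JSON-RPC 2.0 requests for MCP tool discovery and tool invocation could look like, independent of the transport used. The `tools/list` and `tools/call` method names come from the MCP specification; the helper name and the `owner`/`repo` argument names are assumptions made for illustration.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request ids; JSON-RPC uses the id to match responses to requests.
_request_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object (hypothetical helper)."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_request = make_request("tools/list")

# Invoke one tool by name; the argument names are illustrative only.
call_request = make_request(
    "tools/call",
    {"name": "list_issues", "arguments": {"owner": "octo-org", "repo": "demo"}},
)

# Over stdio this JSON would be written to the server's standard input;
# over HTTP with SSE it would be the body of an HTTP POST.
print(json.dumps(list_request))
print(json.dumps(call_request))
```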

Why MCP Matters

While Large Language Models (LLMs) are powerful, their utility is often limited by their inability to access real-time or proprietary data. Traditionally, integrating new data sources or tools required custom connectors or implementations and significant engineering effort. MCP addresses this by providing a unified protocol for connecting agents to both local and remote data sources, which streamlines integrations.

Leveraging Azure API Management for remote MCP servers

Azure API Management is a fully managed platform for publishing, securing, and monitoring APIs. By treating MCP server endpoints like any other backend API, organizations can apply familiar governance, security, and operational controls. As MCP adoption grows, the need for robust management of these backend services will intensify. API Management retains a vital role in governing these underlying assets by:

- Applying security controls to protect the backend resources.

- Ensuring reliability.

- Enabling effective monitoring and troubleshooting by tracing requests and context flow.

In this blog post, I will walk you through a practical example: hosting an MCP server behind Azure API Management, configuring credential management, and connecting with GitHub Copilot.

A Practical Example: Automating Issue Triage

To follow along with this scenario, please check out our Model Context Protocol (MCP) lab available at AI-Gateway/labs/model-context-protocol

Let's move from theory to practice by exploring how MCP, Azure API Management (APIM) and GitHub Copilot can transform a common engineering workflow. Imagine you're an engineering manager aiming to streamline your team's issue triage process - reducing manual steps and improving efficiency.

Example workflow:

- Engineers log bugs and feature requests as GitHub issues.

- Following a manual review, a corresponding incident ticket is generated in ServiceNow.

This manual handoff is inefficient and error-prone. Let's see how we can automate this process - securely connecting GitHub and ServiceNow, enabling an AI agent (GitHub Copilot in VS Code) to handle triage tasks on your behalf.

A significant challenge in this integration involves securely managing delegated access to backend APIs, like GitHub and ServiceNow, from your MCP Server. Azure API Management's credential manager solves this by centralizing secure credential storage and facilitating the secure creation of connections to your third-party backend APIs.

Build and deploy your MCP server(s)

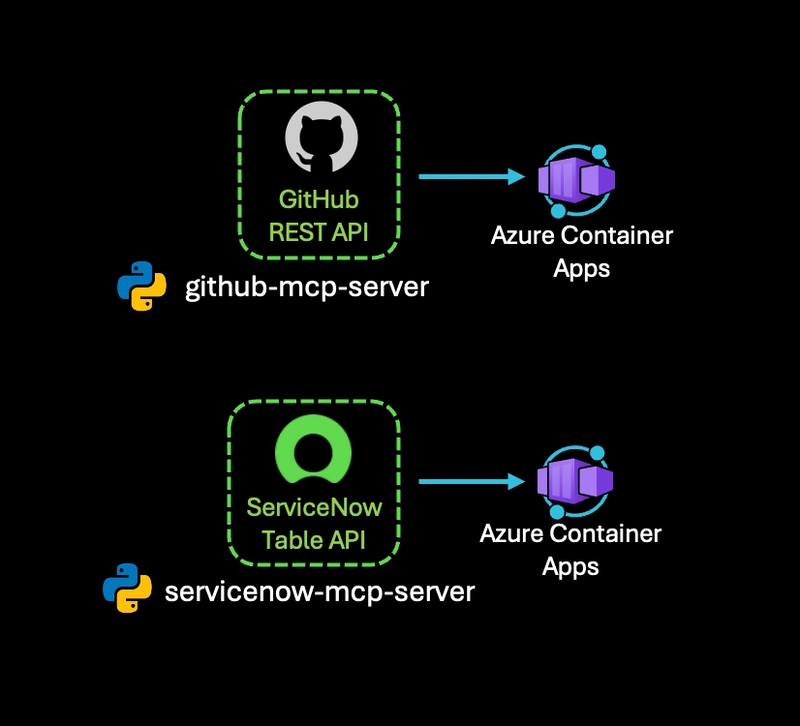

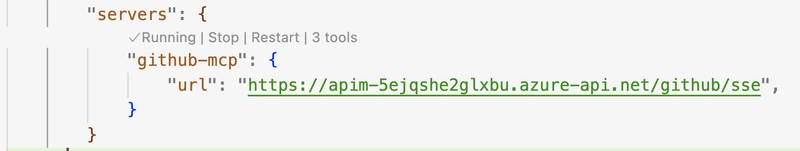

We'll start by building two MCP servers:

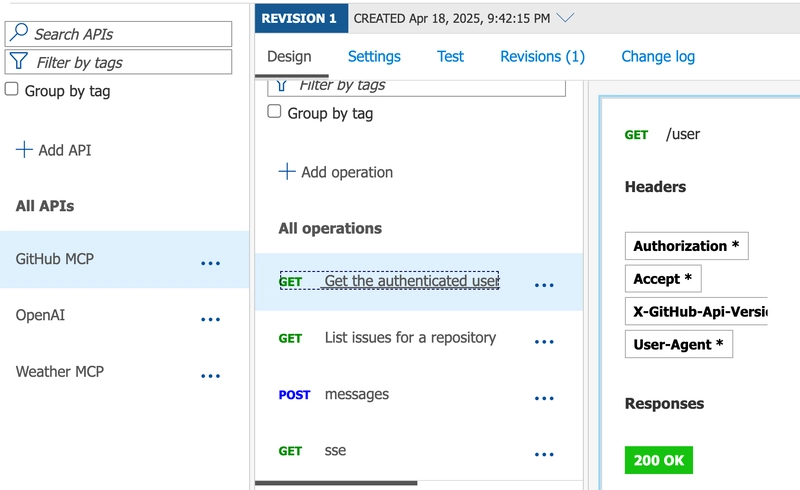

- GitHub Issues MCP Server

  - Provides tools to authenticate on GitHub (authorize_github), retrieve user information (get_user) and list issues for a specified repository (list_issues).

- ServiceNow Incidents MCP Server

  - Provides tools to authenticate with ServiceNow (authorize_servicenow), list existing incidents (list_incidents) and create new incidents (create_incident).
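As a rough illustration of how a server might expose named tools like these, the following sketch registers plain Python functions in a dictionary and dispatches calls by name. It deliberately avoids any MCP SDK: the `tool` decorator, the `call_tool` helper, the argument names, and the stubbed return values are all hypothetical, and the lab's actual server is implemented differently.

```python
from typing import Callable, Dict

# Registry mapping tool names to the functions that implement them.
TOOLS: Dict[str, Callable[..., object]] = {}


def tool(func: Callable[..., object]) -> Callable[..., object]:
    """Register a function as a tool under its own name (hypothetical)."""
    TOOLS[func.__name__] = func
    return func


@tool
def authorize_github() -> str:
    # A real server would start the OAuth flow and return a login URL.
    return "login URL placeholder"


@tool
def get_user() -> dict:
    # Stubbed response; a real server would call the GitHub API.
    return {"login": "example-user"}


@tool
def list_issues(owner: str, repo: str) -> list:
    # Stubbed response standing in for the repository's issues.
    return [{"number": 1, "title": f"Example issue in {owner}/{repo}"}]


def call_tool(name: str, arguments: dict) -> object:
    """Dispatch a tool call by name, as a server would for a tool request."""
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    return TOOLS[name](**arguments)


print(sorted(TOOLS))
print(call_tool("list_issues", {"owner": "octo-org", "repo": "demo"}))
```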

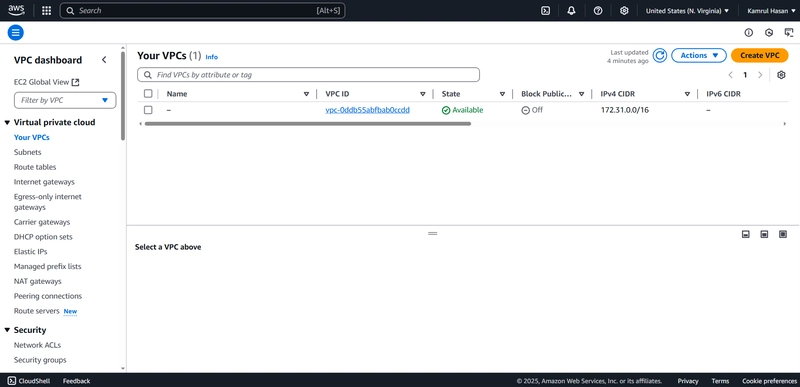

We are using Azure API Management to secure and protect both MCP servers, which are built using Azure Container Apps. Azure API Management's credential manager centralizes secure credential storage and facilitates the secure creation of connections to your backend third-party APIs.

Client Auth:

- You can leverage API Management subscriptions to generate subscription keys, enabling client access to these APIs.

- Optionally, to further secure /sse and /messages endpoints, we apply the validate-jwt policy to ensure that only clients presenting a valid JWT can access these endpoints, preventing unauthorized access. (see: AI-Gateway/labs/model-context-protocol/src/github/apim-api/auth-client-policy.xml)
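From the client's side, the two options above amount to sending the right headers. The sketch below builds them, assuming the API uses API Management's default subscription key header (`Ocp-Apim-Subscription-Key`) and that the validate-jwt policy is configured to read a bearer token from the `Authorization` header. The function name and the sample values are placeholders.

```python
from typing import Dict, Optional


def build_client_headers(subscription_key: str, jwt: Optional[str] = None) -> Dict[str, str]:
    """Headers an MCP client might send to the gateway (illustrative only)."""
    # Default header name API Management uses for subscription keys.
    headers = {"Ocp-Apim-Subscription-Key": subscription_key}
    if jwt:
        # Assumes validate-jwt is set up to inspect the Authorization header.
        headers["Authorization"] = f"Bearer {jwt}"
    return headers


key_only = build_client_headers("my-subscription-key")
key_and_jwt = build_client_headers("my-subscription-key", jwt="header.payload.signature")
print(key_only)
print(key_and_jwt)
```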

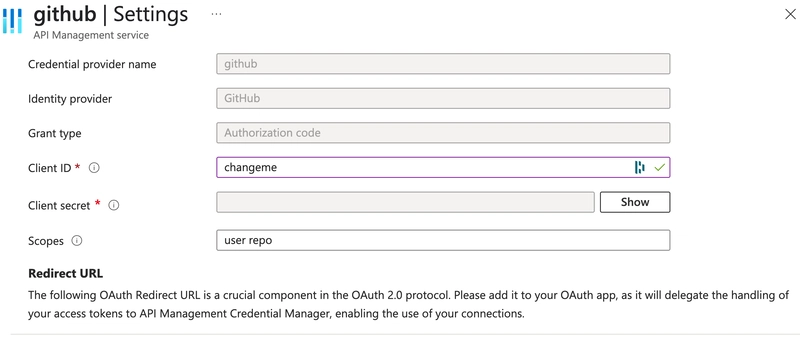

After registering OAuth applications in GitHub and ServiceNow, we update APIM's credential manager with the respective Client IDs and Client Secrets. This enables APIM to perform OAuth flows on behalf of users, securely storing and managing tokens for backend calls to GitHub and ServiceNow.

Connecting your MCP Server in VS Code

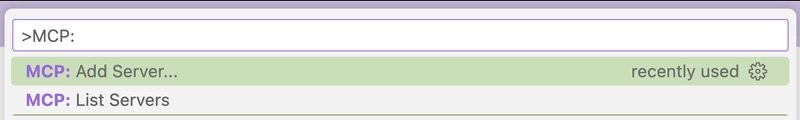

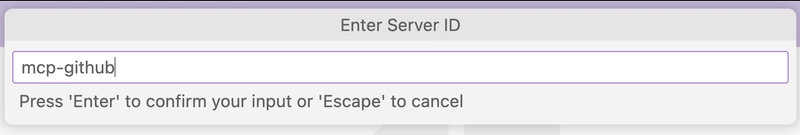

With your MCP servers deployed and secured behind Azure API Management, the next step is to connect them to your development workflow. Visual Studio Code now supports MCP, enabling GitHub Copilot's agent mode to connect to any MCP-compatible server and extend its capabilities.

- Open the Command Palette and type MCP: Add Server...

- Select the server type HTTP (HTTP or Server-Sent Events)

- Paste in the Server URL

- Provide a Server ID

This process automatically updates your settings.json with the MCP server configuration.

Once added, GitHub Copilot can connect to your MCP servers and access the defined tools, enabling agentic workflows such as issue triage and automation. You can repeat these steps to add the ServiceNow MCP Server.
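For orientation, the entry that MCP: Add Server writes might resemble the structure generated below. This is a sketch under assumptions: the `mcp` / `servers` / `type` / `url` keys reflect the settings shape VS Code used when MCP support was introduced and may differ in your version, and the server ID and URL are placeholders. Compare it with what the command actually writes on your machine.

```python
import json


def mcp_server_settings(server_id: str, url: str) -> dict:
    """Build a settings.json fragment for one SSE-based MCP server (assumed shape)."""
    return {"mcp": {"servers": {server_id: {"type": "sse", "url": url}}}}


# Placeholder ID and URL; use the values from your own API Management instance.
settings_fragment = mcp_server_settings(
    "github-mcp", "https://example.azure-api.net/github/sse"
)
print(json.dumps(settings_fragment, indent=2))
```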

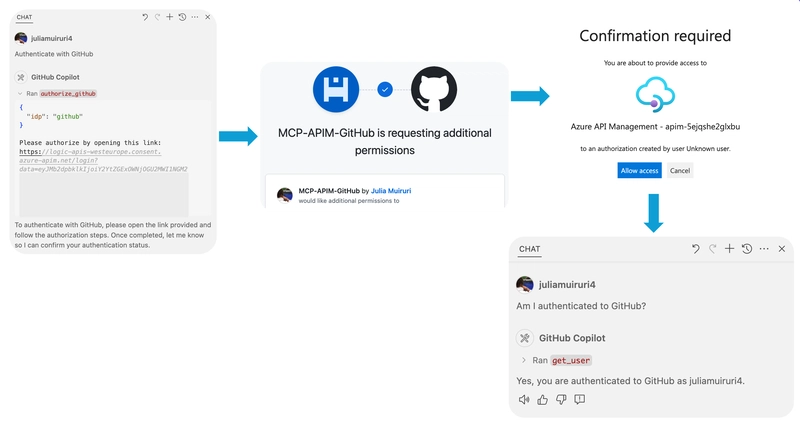

Understanding Authentication and Authorization with Credential Manager

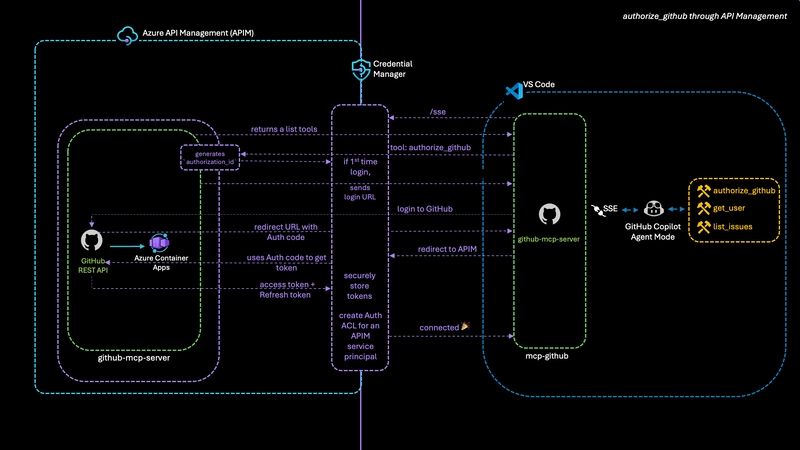

When a user initiates an authentication workflow (e.g., via the authorize_github tool), GitHub Copilot triggers the MCP server to generate an authorization request and a unique login URL. The user is redirected to a consent page, where their registered OAuth application requests permission to access their GitHub account. Azure API Management acts as a secure intermediary, managing the OAuth flow and token storage.

Flow of authorize_github:

Step 1 - Connection initiation:

- GitHub Copilot Agent opens an SSE connection to API Management via the MCP Client (VS Code)

Step 2 - Tool Discovery:

- APIM forwards the request to the GitHub MCP Server, which responds with available tools.

Step 3 - Authorization Request:

- GitHub Copilot selects and executes the authorize_github tool. The MCP server generates an authorization_id for the chat session.

Step 4 - User Consent:

- If it's the first login, APIM requests a login redirect URL from the MCP Server

- The MCP Server sends the Login URL to the client, prompting the user to authenticate with GitHub

- Upon successful login, GitHub redirects the client with an authorization code

Step 5 - Token Exchange and Storage:

- The MCP Client sends the authorization code to API Management

- APIM exchanges the code for access and refresh tokens from GitHub

- APIM securely stores the tokens and creates an Access Control List (ACL) for the service principal.

Step 6 - Confirmation:

- APIM confirms successful authentication to the MCP Client, and the user can now perform authenticated actions, such as accessing private repositories.

Check out the Python logic for how to implement it: AI-Gateway/labs/model-context-protocol/src/github/mcp-server/mcp-server.py
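Separately from the lab code, the toy model below may help in following steps 3 to 6. It is an in-memory stand-in for the gateway's role, not the API Management credential manager API: the class, its method names, the `state` query parameter, and the fake token format are all invented for illustration, and no real OAuth exchange takes place.

```python
import secrets
from typing import Dict, Optional
from urllib.parse import urlencode


class CredentialStoreSketch:
    """Toy model of steps 3-6: issue an id, hand out a login URL, keep a token."""

    def __init__(self, login_base_url: str) -> None:
        self.login_base_url = login_base_url
        self._tokens: Dict[str, str] = {}

    def start_authorization(self) -> Dict[str, str]:
        # Step 3: create an authorization_id for the chat session.
        authorization_id = secrets.token_hex(8)
        # Step 4: build the login URL the user is asked to open.
        query = urlencode({"state": authorization_id})
        return {
            "authorization_id": authorization_id,
            "login_url": f"{self.login_base_url}?{query}",
        }

    def complete_authorization(self, authorization_id: str, code: str) -> None:
        # Step 5: a real gateway would exchange the code with GitHub here;
        # this sketch only records a fake token against the id.
        self._tokens[authorization_id] = f"fake-token-for-{code}"

    def token_for(self, authorization_id: str) -> Optional[str]:
        # Step 6 and later calls: look up the stored token, if any.
        return self._tokens.get(authorization_id)


store = CredentialStoreSketch("https://example.invalid/login")
session = store.start_authorization()
before_login = store.token_for(session["authorization_id"])
store.complete_authorization(session["authorization_id"], code="abc123")
after_login = store.token_for(session["authorization_id"])
print(before_login, after_login)
```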

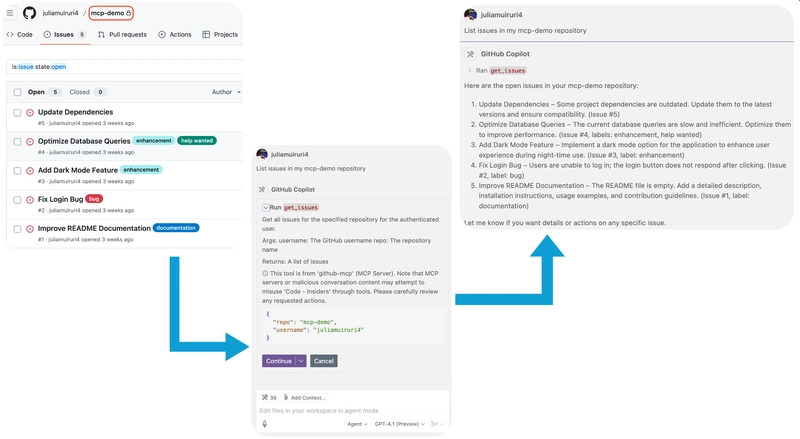

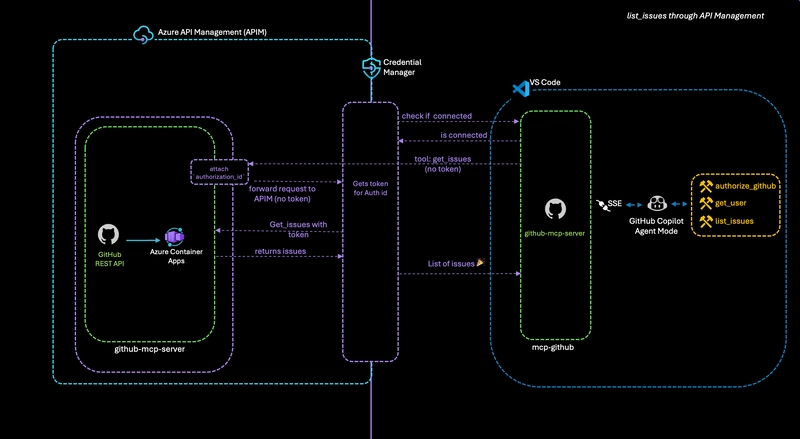

Understanding Tool Calling with underlying APIs in API Management

Using the list_issues tool:

Connection confirmed:

- APIM confirms the connection to the MCP Client

Issue retrieval:

- The MCP Client requests issues from the MCP server

- The MCP Server attaches the authorization_id as a header and forwards the request to APIM

- The list of issues is returned to the agent
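The forwarding step in this flow can be pictured as the MCP server building an HTTP request that carries the authorization_id in a header, so that the gateway can look up the matching token. The sketch below only constructs the request and never sends it. The header name `authorization-id`, the base URL, and the function name are assumptions; the `/repos/{owner}/{repo}/issues` path follows GitHub's REST API.

```python
from urllib.request import Request


def build_issues_request(apim_base_url: str, owner: str, repo: str, authorization_id: str) -> Request:
    """Build (but do not send) the request an MCP server might forward via the gateway."""
    url = f"{apim_base_url}/repos/{owner}/{repo}/issues"
    # Hypothetical header name; the gateway would map this id to a stored token.
    return Request(url, headers={"authorization-id": authorization_id}, method="GET")


request = build_issues_request(
    "https://example.azure-api.net/github", "octo-org", "demo", "abc123"
)
print(request.get_method(), request.full_url)
print(request.header_items())
```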

You can use the same process to add the ServiceNow MCP Server. With both servers connected, GitHub Copilot Agent can extract issues from a private repo in GitHub and create new incidents in ServiceNow, automating your triage workflow. You can define additional tools, such as suggest_assignee, assign_engineer, update_incident_status, notify_engineer, request_feedback and others, to demonstrate a truly closed-loop, automated engineering workflow - from issue creation to resolution and feedback.

Take a look at this brief demo showcasing the entire end-to-end process:

Summary

Azure API Management (APIM) is an essential tool for enterprise customers looking to integrate AI models with external tools using the Model Context Protocol (MCP). In this blog, we demonstrated how Azure API Management's credential manager handles the secure creation of connections to your backend APIs. By integrating MCP servers with VS Code and leveraging APIM for OAuth flows and token management, you can enable secure, agentic automation across your engineering tools. This approach not only streamlines workflows like issue triage and incident creation but also ensures enterprise-grade security and governance for all APIs.

Additional Resources

Credential manager helps you manage OAuth 2.0 tokens for backend services.

Client Auth for remote MCP servers:

- AZD up: https://aka.ms/mcp-remote-apim-auth

- AI lab Client Auth: AI-Gateway/labs/mcp-client-authorization/mcp-client-authorization.ipynb

If you have any questions or would like to learn more about how MCP and Azure API Management can benefit your organization, feel free to reach out to us. We are always here to help and provide further insights.

Connect with us on LinkedIn (Julia Kasper & Julia Muiruri) and follow for more updates, insights, and discussions on AI integrations and API management.
