How I Built an AI-Generated News Site - and What I Learned

Hi, I'm a software engineer working in AI, and recently I built Agentic Tribune. It's a fully automated news site that publishes original, fact-based reporting each day. It has been a fun, eye-opening side project.

Why I Built It

I've long been thinking about the challenges and pressures on traditional media. Social media has siphoned off ad revenue, leading to layoffs and closures. To survive, news outlets have often resorted to clickbait and polarization.

I started to wonder: could I build a news site where every step was performed by AI? More importantly, could it be useful and credible?

The goal was neutral, factual reporting, grounded in real-world sources: government releases, legal rulings, financial reports, etc. No persuasion, just clean summaries of what actually happened.

The Pipeline

The system runs automatically every day via cron jobs. It creates:

- ~22 articles/day on weekdays

- ~12/day on weekends

The process is:

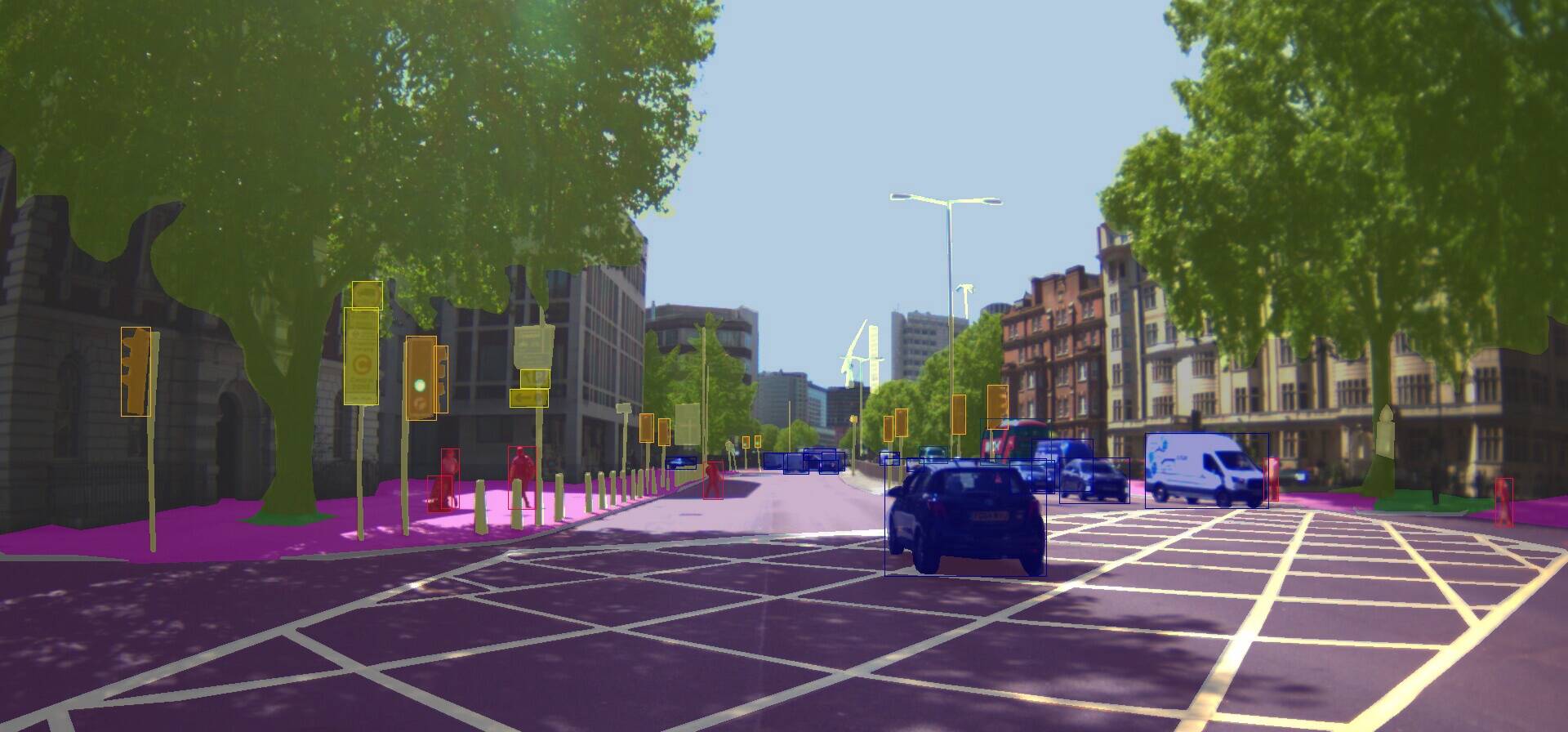

- Scan for leads — pull from structured feeds and recent news headlines

- Score/rank leads — recency, quality, newsworthiness, novelty

- Select top candidates

- Do background research via AI + search

- Write an article

- Self-critique and revise

- Format, tag, and publish
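The steps above can be sketched as a chain of small stages. This is a minimal illustration, not the site's actual code: the `Lead` and `Article` types, the stage function names, and the placeholder leads are all assumptions, and the model-backed stages are passed in as plain callables so the flow can be run without any API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Lead:
    # Hypothetical shape of a lead; the real fields are not described in the post.
    title: str
    source_url: str
    score: float = 0.0


@dataclass
class Article:
    lead: Lead
    research: str = ""
    draft: str = ""
    tags: list[str] = field(default_factory=list)


def scan_leads() -> list[Lead]:
    # Placeholder data standing in for structured feeds and recent headlines.
    return [
        Lead("Agency publishes quarterly report", "https://example.com/a"),
        Lead("Court issues ruling", "https://example.com/b"),
        Lead("Company files earnings", "https://example.com/c"),
    ]


def score_leads(leads: list[Lead], scorer: Callable[[Lead], float]) -> list[Lead]:
    # Score every lead, then rank from best to worst.
    for lead in leads:
        lead.score = scorer(lead)
    return sorted(leads, key=lambda lead: lead.score, reverse=True)


def select_top(ranked: list[Lead], limit: int) -> list[Lead]:
    return ranked[:limit]


def run_pipeline(
    scorer: Callable[[Lead], float],
    research: Callable[[Lead], str],
    write: Callable[[Lead, str], str],
    revise: Callable[[str], str],
    limit: int,
) -> list[Article]:
    articles = []
    for lead in select_top(score_leads(scan_leads(), scorer), limit):
        article = Article(lead=lead)
        article.research = research(lead)              # background research
        article.draft = write(lead, article.research)  # first draft
        article.draft = revise(article.draft)          # self-critique and revise
        article.tags = ["news"]                        # placeholder tagging step
        articles.append(article)
    return articles
```

In a real run, each callable would wrap a separate model or search call; a cron job would then invoke `run_pipeline` once per day with the daily article limit.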

Each article costs around $0.25–0.30 in API usage. That adds up, but it's scalable in both directions. If the site gains traction, I can expand it. If not, I can scale it down without much pain.

Managing LLM Behavior

LLMs are capable, but prone to distraction and unexpected behavior. Here are some challenges I encountered:

- If I asked the model to rate a lead on a series of metrics like recency, newsworthiness, and redundancy, it would sometimes "reward" redundancy just because a high score looked good. I had to normalize the direction of each metric so that higher always meant better.

- If I combined too many tasks into one prompt (e.g. "rate the leads and write an article about the best one"), it would leak details between them. Articles would contain bits from unrelated leads.

- Similarly, if I asked it to rate the 'recency' of events being considered and only select recent events, it would hallucinate incorrect recent dates for events to defend its own decision.

- Being too explicit often backfired. Simpler prompts often worked better than elaborate, rule-filled ones.

- In general, LLMs seemed to respond better to positive requirements than negative ones.

- When I asked it to write in AP style, it presented itself as the Associated Press. Fixing issues like this takes careful prompt tweaking.

- If I could explain what I wanted clearly enough, often the LLM could write a better prompt for itself.
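The metric-direction problem from the first bullet can also be handled in code, after the model returns raw scores. The sketch below is one possible approach, not necessarily what the site does: the 0-10 scale, the metric names, and the "higher is better" flags are all assumptions made for illustration.

```python
# Assumed scale and metric directions; adjust to match the real prompts.
SCALE_MAX = 10.0
HIGHER_IS_BETTER = {
    "recency": True,
    "newsworthiness": True,
    "redundancy": False,  # a high redundancy score is bad, so it gets flipped
}


def normalize(raw: dict[str, float]) -> dict[str, float]:
    """Map every metric to 0..1 where higher always means better."""
    normalized = {}
    for name, value in raw.items():
        fraction = value / SCALE_MAX
        normalized[name] = fraction if HIGHER_IS_BETTER[name] else 1.0 - fraction
    return normalized


def combined_score(raw: dict[str, float]) -> float:
    """Unweighted mean of the direction-normalized metrics."""
    normalized = normalize(raw)
    return sum(normalized.values()) / len(normalized)
```

An alternative that avoids the flip entirely is to ask the model for "novelty" instead of "redundancy", so every requested metric already points the same way.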

To manage these issues, I split everything into clean stages with hard isolation between steps. Prompts are concise and focused. Unnecessary data is stripped between phases.
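One simple way to strip data between phases is to give each stage an explicit allow-list of fields and pass along only those. This is a sketch under assumed names: the stage names and field names below are hypothetical and only illustrate the idea.

```python
# Hypothetical allow-lists: each stage sees only the fields named here.
STAGE_FIELDS = {
    "score": ("title", "summary", "published"),
    "research": ("title", "summary", "source_url"),
    "write": ("title", "research_notes"),
}


def view_for(stage: str, record: dict) -> dict:
    """Return a copy of the record containing only what this stage may see."""
    allowed = STAGE_FIELDS[stage]
    return {key: record[key] for key in allowed if key in record}
```

With this in place, the writing prompt never receives scores or details from other leads, so there is nothing for the model to leak into the article.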

I also relied on OpenAI’s function calling APIs, which were helpful in constraining responses to structured data and avoiding format drift.
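As an illustration of how function calling constrains output, here is a sketch of a tool definition and a validator for the arguments the model sends back. The dictionary follows the general shape of a function tool in OpenAI's Chat Completions API as I understand it, so check it against the current documentation. The `rate_lead` name, the three metrics, and the 0-10 range are assumptions, and no API call is made here.

```python
import json

# Hypothetical tool definition; the name and metrics are illustrative only.
RATE_LEAD_TOOL = {
    "type": "function",
    "function": {
        "name": "rate_lead",
        "description": "Record scores for one news lead.",
        "parameters": {
            "type": "object",
            "properties": {
                "recency": {"type": "integer", "minimum": 0, "maximum": 10},
                "newsworthiness": {"type": "integer", "minimum": 0, "maximum": 10},
                "novelty": {"type": "integer", "minimum": 0, "maximum": 10},
            },
            "required": ["recency", "newsworthiness", "novelty"],
            "additionalProperties": False,
        },
    },
}


def parse_rating(arguments_json: str) -> dict[str, int]:
    """Parse and validate the JSON argument string from a tool call."""
    data = json.loads(arguments_json)  # invalid JSON raises a ValueError subclass
    properties = RATE_LEAD_TOOL["function"]["parameters"]["properties"]
    if not isinstance(data, dict) or set(data) != set(properties):
        raise ValueError("unexpected or missing fields")
    for name, spec in properties.items():
        value = data[name]
        is_int = isinstance(value, int) and not isinstance(value, bool)
        if not is_int or not spec["minimum"] <= value <= spec["maximum"]:
            raise ValueError(f"{name} is out of range or not an integer")
    return data
```

Validating on the receiving side is still worthwhile, since a schema reduces format drift but does not guarantee that every value is sensible.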

Brave Search: Better Than Expected

Though I started with only OpenAI's search-preview API for research, I've also been experimenting with Brave Search for parts of the pipeline. It’s surprisingly easy to plug into a lightweight RAG system and have the model cite recent headlines or summaries.

Using a custom search-enabled RAG system allows for more flexibility, and is cheaper than using the search-preview models.
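A lightweight search-backed RAG step can be as small as "fetch results, number them, paste them into the prompt". The sketch below assumes the Brave web search endpoint, the `X-Subscription-Token` header, and the `web.results` response shape with `title`, `url`, and `description` fields. These match my understanding of the Brave Search API but should be verified against its documentation, and the prompt wording is purely illustrative.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint; confirm against the Brave Search API documentation.
BRAVE_ENDPOINT = "https://api.search.brave.com/res/v1/web/search"


def brave_search(query: str, api_key: str, count: int = 5) -> list[dict]:
    """Fetch web results for a query (requires a valid API key and network)."""
    params = urllib.parse.urlencode({"q": query, "count": count})
    request = urllib.request.Request(
        f"{BRAVE_ENDPOINT}?{params}",
        headers={"Accept": "application/json", "X-Subscription-Token": api_key},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload.get("web", {}).get("results", [])


def build_context(results: list[dict], max_items: int = 5) -> str:
    """Turn search results into numbered snippets the model can cite."""
    blocks = []
    for index, result in enumerate(results[:max_items], start=1):
        header = f"[{index}] {result.get('title', '')} ({result.get('url', '')})"
        blocks.append(f"{header}\n{result.get('description', '')}")
    return "\n\n".join(blocks)


def build_prompt(question: str, results: list[dict]) -> str:
    """Assemble a research prompt grounded in the numbered sources."""
    return (
        "Answer using only the numbered sources below and cite them like [1].\n\n"
        f"Sources:\n{build_context(results)}\n\n"
        f"Question: {question}"
    )
```

Because the retrieval and prompt-building steps are separate functions, the search provider can be swapped without touching the rest of the pipeline.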

The Stack

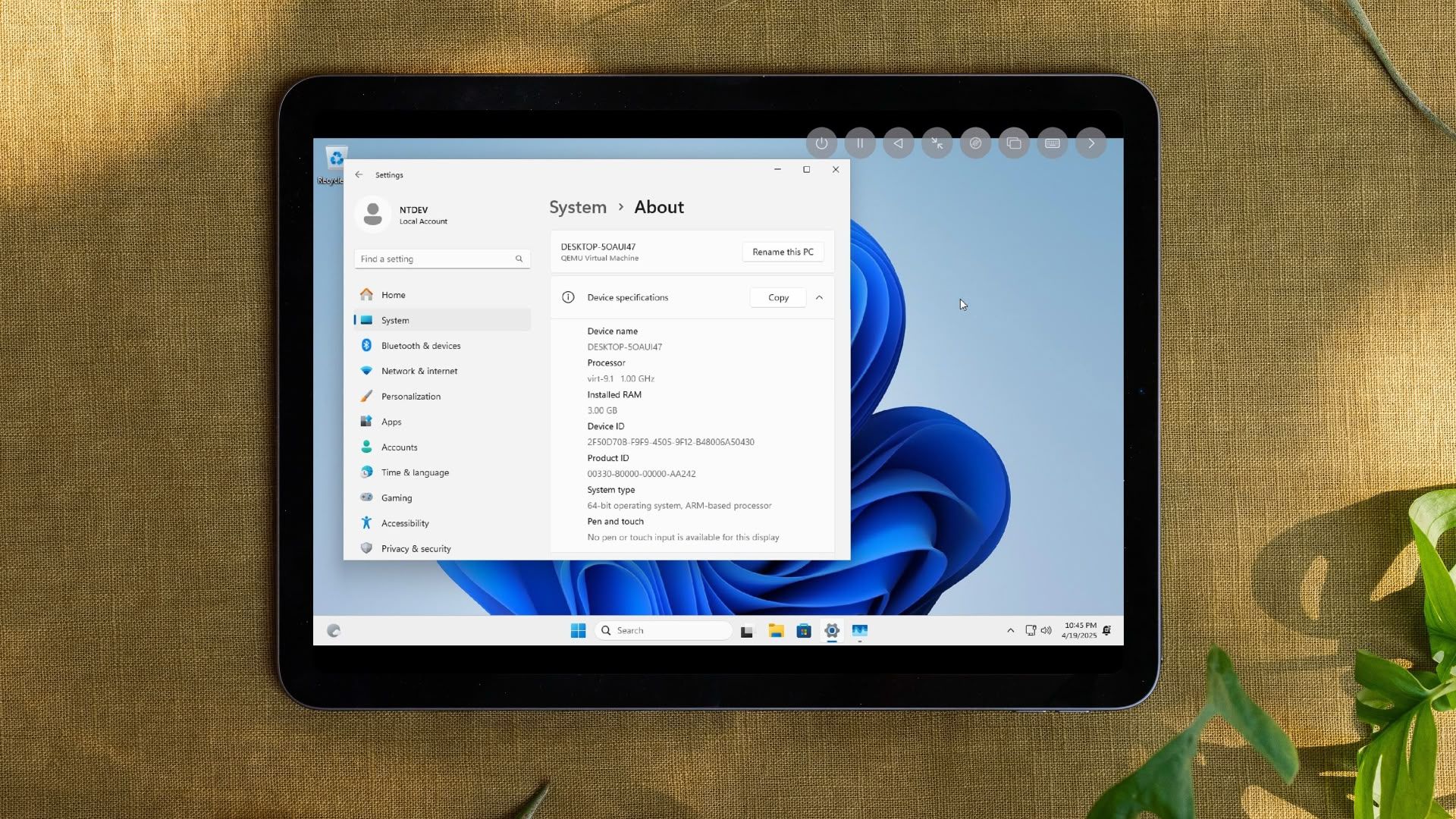

- Backend: Flask, SQLite, cron jobs and Python scripts, with prompts generated from Jinja2 templates

- Metrics: Umami

- Frontend: Static HTML and Jinja2 templates

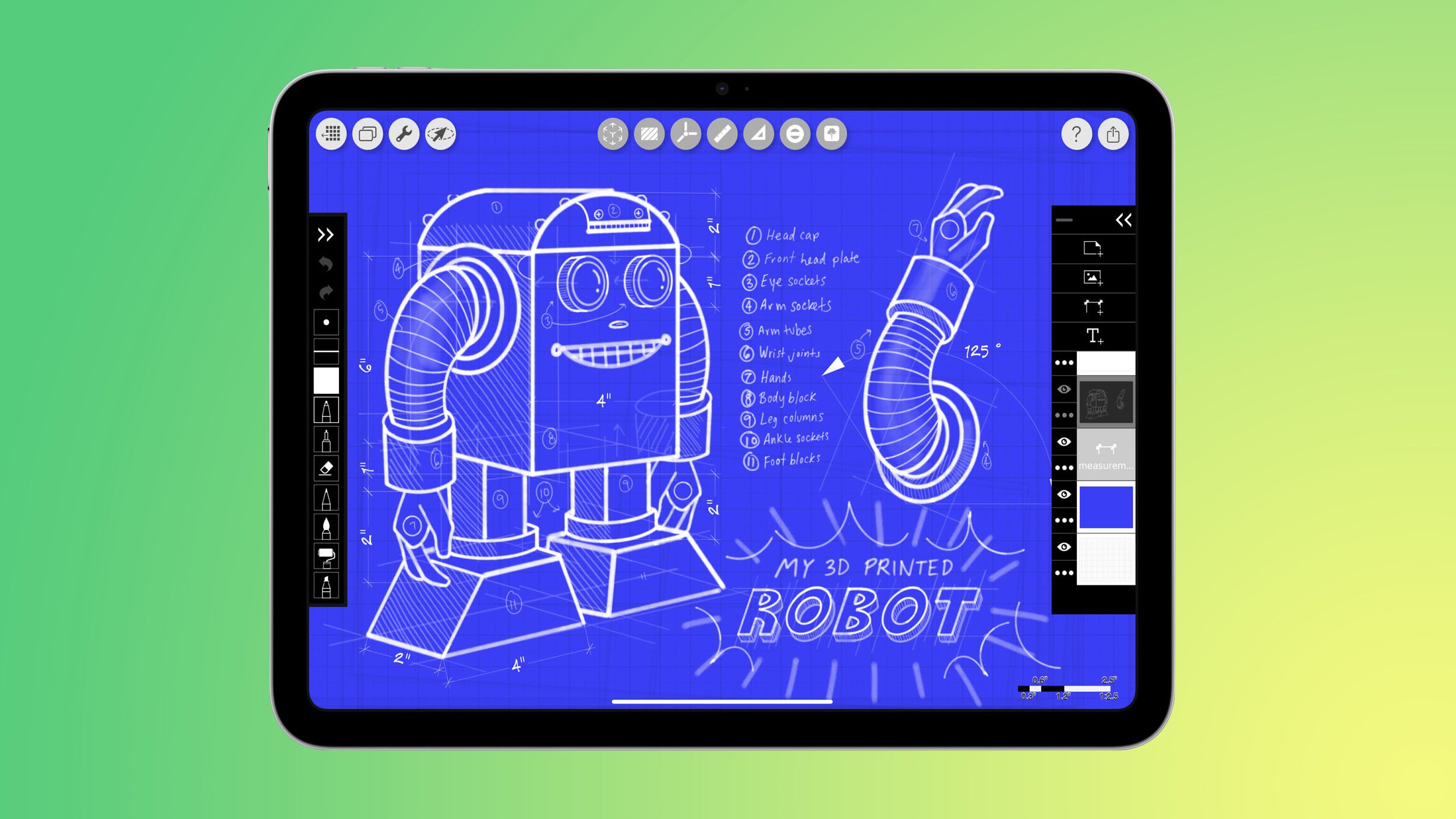

- Icons: All generated by ChatGPT

- Deployment: EC2 with Nginx and gunicorn

It’s not fancy, but with help from ChatGPT, I was able to build a complete site from scratch, completely solo, in about 2–3 weeks of evenings and weekends outside of work.

I didn't use IDE-integrated AI assistants. My workflow was just to copy and paste code to and from a chat window and edit it myself.

I chose SQLite because it's easy to manage. It's not optimized for write-heavy workloads, but this is a read-heavy application. Flask is simple, and I've always liked Jinja2-style templating.
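To show why SQLite fits a read-heavy site like this, here is a minimal sketch using Python's built-in `sqlite3` module. The `articles` table, its columns, and the helper names are assumptions for illustration, not the site's real schema.

```python
import sqlite3

# Hypothetical schema: one row per published article.
SCHEMA = """
CREATE TABLE IF NOT EXISTS articles (
    id INTEGER PRIMARY KEY,
    slug TEXT NOT NULL UNIQUE,
    section TEXT NOT NULL,
    title TEXT NOT NULL,
    body TEXT NOT NULL,
    published_at TEXT NOT NULL  -- ISO 8601 timestamps sort correctly as text
);
CREATE INDEX IF NOT EXISTS idx_articles_section_date
    ON articles (section, published_at DESC);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row  # allow access to columns by name
    conn.executescript(SCHEMA)
    return conn


def publish(conn, slug, section, title, body, published_at) -> None:
    # Writes are rare (a few dozen per day), so one transaction each is fine.
    with conn:
        conn.execute(
            "INSERT INTO articles (slug, section, title, body, published_at) "
            "VALUES (?, ?, ?, ?, ?)",
            (slug, section, title, body, published_at),
        )


def latest(conn, section: str, limit: int = 10) -> list:
    # The common read path: newest articles in a section, served by the index.
    return conn.execute(
        "SELECT slug, title, published_at FROM articles "
        "WHERE section = ? ORDER BY published_at DESC LIMIT ?",
        (section, limit),
    ).fetchall()
```

With only the daily publishing job writing and every page view reading, a single database file and one index cover the main query.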

Without AI, I would have spent months just on the frontend and design alone. AI doesn't replace me as a software engineer, but the productivity boost is massive. I also never would have been able to create the icons and images on my own.

Cost and Scale

Right now, I’m spending over $100/month just on API calls for a site that might be useful, or might be something only I read. It's a price I'm willing to pay for a hobby project for now, and I can always scale down if needed.
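As a rough check on that figure, the monthly bill can be estimated from the numbers given earlier: about 22 articles per weekday, about 12 per weekend day, and $0.25–0.30 per article. The month shape used here (22 weekdays and 8 weekend days) is an assumption, and the inputs are approximate.

```python
# Figures from the post; all are approximate.
WEEKDAY_ARTICLES = 22
WEEKEND_ARTICLES = 12
COST_LOW, COST_HIGH = 0.25, 0.30  # dollars per article


def monthly_articles(weekdays: int = 22, weekend_days: int = 8) -> int:
    """Article count for an assumed 30-day month."""
    return weekdays * WEEKDAY_ARTICLES + weekend_days * WEEKEND_ARTICLES


def monthly_cost_range(weekdays: int = 22, weekend_days: int = 8) -> tuple[float, float]:
    """Low and high monthly API cost estimates in dollars."""
    count = monthly_articles(weekdays, weekend_days)
    return count * COST_LOW, count * COST_HIGH
```

Under those assumptions, this works out to 580 articles and roughly $145 to $174 per month, which is consistent with "over $100/month".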

Custom thumbnails would cost an extra ~$0.04/article, so for now I'm just using static images by "section." Posting to Twitter via API would cost another $100/month, so automatic posts only go to Bluesky.

There's plenty I could do to improve the quality of articles with higher spend too -- increasing the depth of research it does for each article, evaluating a broader set of leads, more iterations of revision, etc.

What I'm Still Figuring Out

I want to add features like:

- Article upvotes or reactions

- Comment threads (and spam filtering)

- Highlight-to-comment functionality

- Basic analytics beyond Umami

- Email subscriptions

- Better customization of article-generation pipelines per-section

But I'm taking it slow and balancing other time commitments. Comments and voting introduce a lot of architectural complexity and moderation overhead. They would also need a database better suited to write-heavy workloads. For now, I’m watching traffic patterns, improving content quality, and adding structure like tags and search.

More importantly, I want to improve the quality of the content. To do so, I need a way to get feedback, and that's where I need your input.

Implications for Journalism

Agentic Tribune can't replace journalism — it can only report on what has already been made public. There’s no substitute for investigative reporters, war correspondents, or interviews that hold powerful people accountable.

If this site, or others like it, can provide trustworthy summaries of events at low cost, they might help free up human journalists to focus on the reporting only humans can do.

If a site like this were successful, it would still need a responsible way to fund primary sources of information, beyond what is already available publicly. What does that future look like? Can this be done ethically? Will society be more or less informed?

Launching

I’m planning a soft launch on Product Hunt and Indie Hackers soon. I also post selected headlines to Bluesky (automated) and Twitter (manual, for now).

Final Thoughts

This is a fun hobby, but it’s not free. I’m spending time and money on something that might be useful.

I’d love your feedback: Is the site helpful? Would you read it regularly? What’s missing? Would you trust AI-generated articles that show their sources? Would you prefer a different writing style?

Is AI-generated news a kind of inevitable market progress, or an unethical misunderstanding of journalism? Or both?

**Check it out here:** https://agentictribune.com and comment below.

If you like it, follow on Bluesky or Twitter, or just bookmark it. Fresh articles published daily.
