How to Build an Asynchronous AI Agent Network Using Gemini for Research, Analysis, and Validation Tasks

The post How to Build an Asynchronous AI Agent Network Using Gemini for Research, Analysis, and Validation Tasks appeared first on MarkTechPost.

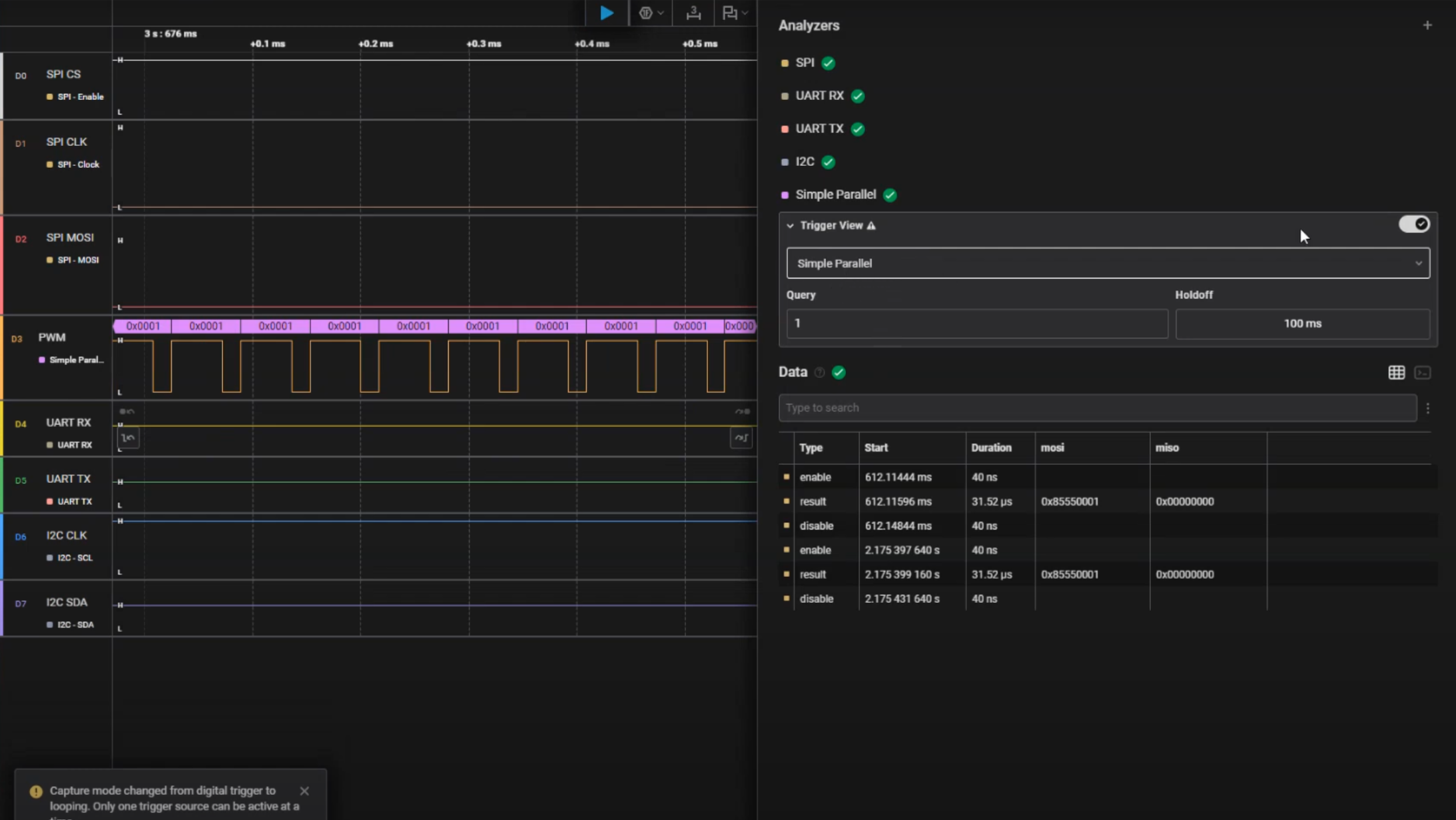

In this tutorial, we introduce the Gemini Agent Network Protocol, a flexible framework for coordinating specialized AI agents. Built on Google's Gemini models, the protocol lets agents with distinct roles (Analyzer, Researcher, Synthesizer, and Validator) exchange messages dynamically. Users will learn to set up and configure an asynchronous agent network that distributes tasks automatically, supports collaborative problem-solving, and keeps a short memory of each agent's recent exchanges. The framework suits in-depth research, complex data analysis, and information validation, where splitting one problem across role-specific agents lets each contribute from its own perspective.

```python
import asyncio
import json
import random
from dataclasses import dataclass, asdict
from typing import Dict, List, Optional, Any
from enum import Enum

import google.generativeai as genai
```

We use asyncio for concurrent execution, dataclasses for structured messages, and Google's Generative AI SDK (google.generativeai) to let multiple AI-driven agents interact. Together, these imports provide the building blocks for typed messages, distinct agent roles, and non-blocking model calls.
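The concurrency claim rests on one pattern: SDK calls such as `generate_content` block, so each is pushed onto a worker thread with `asyncio.to_thread` (Python 3.9+) while the event loop keeps serving other agents. The sketch below is illustrative only; `blocking_generate` is a hypothetical stand-in that sleeps instead of calling Gemini, so the overlap can be observed offline.

```python
import asyncio
import time
from typing import List


def blocking_generate(prompt: str) -> str:
    """Hypothetical stand-in for a blocking SDK call such as model.generate_content."""
    time.sleep(0.2)  # simulate network latency
    return f"reply to {prompt!r}"


async def ask_all(prompts: List[str]) -> List[str]:
    # Each blocking call runs in the default thread pool, so the calls overlap
    # instead of running back to back, and the event loop stays responsive.
    return await asyncio.gather(
        *(asyncio.to_thread(blocking_generate, p) for p in prompts)
    )


if __name__ == "__main__":
    start = time.perf_counter()
    replies = asyncio.run(ask_all(["analyze", "research", "validate"]))
    print(replies, f"{time.perf_counter() - start:.2f}s")
```

Three 0.2 s calls finish in roughly 0.2 s rather than 0.6 s, which is why the agent class wraps its model call in `asyncio.to_thread`.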

```python
# Set this to your Gemini API key. With the google.generativeai SDK, the key
# must also be registered via genai.configure(api_key=API_KEY) before any model call.
API_KEY = None

try:
    import google.colab
    IN_COLAB = True
except ImportError:
    IN_COLAB = False
```

We initialize API_KEY and detect whether the code is running in Colab. If the google.colab module imports successfully, the IN_COLAB flag is set to True; otherwise it is False, so the script can adjust its behavior accordingly.
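Rather than hard-coding the key, one option is to look it up at startup. The sketch below is a hedged example: the secret/environment-variable name `GEMINI_API_KEY` and the helper `resolve_api_key` are assumptions, not part of the tutorial. Inside Colab it tries the notebook's Secrets panel through `google.colab.userdata.get`; elsewhere it falls back to the environment.

```python
import os
from typing import Optional


def resolve_api_key(name: str = "GEMINI_API_KEY") -> Optional[str]:
    """Return a Gemini API key from Colab Secrets or the environment, else None.

    The default name GEMINI_API_KEY is an assumption; use whatever name you stored.
    """
    key = None
    try:
        # Only importable inside Google Colab; reads the notebook's Secrets panel.
        from google.colab import userdata
        key = userdata.get(name)
    except Exception:
        # Not running in Colab, or the secret is missing or access was not granted.
        key = None
    if not key:
        key = os.environ.get(name)
    key = (key or "").strip()
    return key or None  # blank values count as "not configured"


if __name__ == "__main__":
    API_KEY = resolve_api_key()
    print("API key found" if API_KEY else "API key not set")
```

Returning `None` for a missing key keeps the tutorial's `if not API_KEY` guard working unchanged; when a key is found, pass it to `genai.configure(api_key=API_KEY)`.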

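```python
class AgentType(Enum):
    ANALYZER = "analyzer"
    RESEARCHER = "researcher"
    SYNTHESIZER = "synthesizer"
    VALIDATOR = "validator"


@dataclass
class Message:
    sender: str                      # ID of the sending agent
    receiver: str                    # ID of the receiving agent
    content: str                     # message text
    msg_type: str                    # e.g. "task" or "broadcast"
    metadata: Optional[Dict] = None  # optional extra fields
```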

We define the core structures for agent interaction. The AgentType enum sorts agents into four roles (Analyzer, Researcher, Synthesizer, and Validator), each with a specific function in the collaborative network. The Message dataclass defines the format for inter-agent communication: sender and receiver IDs, message content, message type, and optional metadata.
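Because messages are plain dataclasses, the `asdict` helper imported above can turn them into JSON for logging or transport. This is a sketch under one assumption that differs from the tutorial: `metadata` uses `field(default_factory=dict)` instead of a `None` default, so every message gets its own empty dict. The helpers `to_json`/`from_json` and the agent IDs are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict


@dataclass
class Message:
    sender: str
    receiver: str
    content: str
    msg_type: str
    # default_factory builds a fresh dict per instance, so messages never share metadata
    metadata: Dict[str, Any] = field(default_factory=dict)


def to_json(msg: Message) -> str:
    """Serialize a Message via dataclasses.asdict (hypothetical helper)."""
    return json.dumps(asdict(msg))


def from_json(raw: str) -> Message:
    """Rebuild a Message from the JSON produced by to_json (hypothetical helper)."""
    return Message(**json.loads(raw))


if __name__ == "__main__":
    msg = Message("analyzer_1", "researcher_1", "Find sources on topic X", "task", {"priority": 1})
    wire = to_json(msg)
    print(wire)
    print(from_json(wire) == msg)
```

The round trip works as long as metadata holds only JSON-compatible values (strings, numbers, lists, dicts).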

```python
class GeminiAgent:
    def __init__(self, agent_id: str, agent_type: AgentType, network: 'AgentNetwork'):
        self.id = agent_id
        self.type = agent_type
        self.network = network
        self.model = genai.GenerativeModel('gemini-2.0-flash')
        self.inbox = asyncio.Queue()   # incoming Message objects
        self.context_memory = []       # rolling log of recent exchanges
        # One system prompt per role; the agent's type selects which one it uses
        self.system_prompts = {
            AgentType.ANALYZER: "You are a data analyzer. Break down complex problems into components and identify key patterns.",
            AgentType.RESEARCHER: "You are a researcher. Gather information and provide detailed context on topics.",
            AgentType.SYNTHESIZER: "You are a synthesizer. Combine information from multiple sources into coherent insights.",
            AgentType.VALIDATOR: "You are a validator. Check accuracy and consistency of information and conclusions."
        }

    async def process_message(self, message: Message):
        """Process incoming message and generate response"""
        if not API_KEY:
            return "API key not configured. Please set API_KEY variable."

        prompt = f"""
        {self.system_prompts[self.type]}

        Context from previous interactions: {json.dumps(self.context_memory[-3:], indent=2)}

        Message from {message.sender}: {message.content}

        Provide a focused response (max 100 words) that adds value to the network discussion.
        """

        try:
            # generate_content blocks, so run it in a worker thread to keep the event loop free
            response = await asyncio.to_thread(
                self.model.generate_content, prompt
            )
            return response.text.strip()
        except Exception as e:
            return f"Error processing: {str(e)}"

    async def send_message(self, receiver_id: str, content: str, msg_type: str = "task"):
        """Send message to another agent"""
        message = Message(self.id, receiver_id, content, msg_type)
        await self.network.route_message(message)

    async def broadcast(self, content: str, exclude_self: bool = True):
        """Broadcast message to all agents in network"""
        for agent_id in self.network.agents:
            if exclude_self and agent_id == self.id:
                continue
            await self.send_message(agent_id, content, "broadcast")

    async def run(self):
        """Main agent loop"""
        while True:
            try:
                # Wait up to 1 s for a message; on timeout, loop and wait again
                message = await asyncio.wait_for(self.inbox.get(), timeout=1.0)
                response = await self.process_message(message)

                self.context_memory.append({
                    "from": message.sender,
                    "content": message.content,
                    "my_response": response
                })
                # Keep only the 10 most recent exchanges
                if len(self.context_memory) > 10:
                    self.context_memory = self.context_memory[-10:]

                # Log the reply (this exact output format is assumed)
                print(f"[{self.id} | {self.type.value}] {response}")
            except asyncio.TimeoutError:
                continue
```
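GeminiAgent relies on an `AgentNetwork` object that exposes an `agents` mapping and a `route_message` coroutine, but that class is not shown in this excerpt. The following is a minimal, hypothetical sketch of such a router, not the tutorial's implementation: `EchoAgent`, `add_agent`, and `demo` are invented names, and the agent echoes instead of calling Gemini so the routing, inbox, and polling loop can run offline. It also swaps the list-slicing trim for `collections.deque(maxlen=10)`, which discards old entries automatically.

```python
import asyncio
from collections import deque
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Message:
    sender: str
    receiver: str
    content: str
    msg_type: str


class EchoAgent:
    """Hypothetical stand-in for GeminiAgent: same inbox/loop shape, no model call."""

    def __init__(self, agent_id: str, network: "AgentNetwork"):
        self.id = agent_id
        self.network = network
        self.inbox: asyncio.Queue = asyncio.Queue()
        self.context_memory: deque = deque(maxlen=10)  # keeps only the 10 newest entries
        self.log: List[str] = []

    async def process_message(self, message: Message) -> str:
        return f"{self.id} saw: {message.content}"

    async def run(self) -> None:
        while True:
            try:
                message = await asyncio.wait_for(self.inbox.get(), timeout=1.0)
            except asyncio.TimeoutError:
                continue  # nothing arrived within 1 s; poll again
            try:
                response = await self.process_message(message)
                self.context_memory.append({"from": message.sender, "my_response": response})
                self.log.append(response)
            finally:
                self.inbox.task_done()  # lets inbox.join() know this item is finished


class AgentNetwork:
    """Hypothetical router; the tutorial's real AgentNetwork is not shown."""

    def __init__(self):
        self.agents: Dict[str, EchoAgent] = {}

    def add_agent(self, agent_id: str) -> EchoAgent:
        agent = EchoAgent(agent_id, self)
        self.agents[agent_id] = agent
        return agent

    async def route_message(self, message: Message) -> None:
        # Deliver into the receiver's inbox; unknown receivers are silently dropped
        target = self.agents.get(message.receiver)
        if target is not None:
            await target.inbox.put(message)


async def demo() -> Dict[str, List[str]]:
    net = AgentNetwork()
    for name in ("analyzer_1", "researcher_1", "validator_1"):
        net.add_agent(name)
    tasks = [asyncio.create_task(a.run()) for a in net.agents.values()]

    # Broadcast from analyzer_1 to every other agent, mirroring GeminiAgent.broadcast
    for agent_id in net.agents:
        if agent_id != "analyzer_1":
            await net.route_message(Message("analyzer_1", agent_id, "hello", "broadcast"))

    # Wait until every inbox is drained, then stop the infinite loops
    await asyncio.gather(*(a.inbox.join() for a in net.agents.values()))
    for t in tasks:
        t.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return {a.id: a.log for a in net.agents.values()}


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Cancelling the tasks is needed because each `run` loop never exits on its own; `Queue.join` plus `task_done` gives a clean point at which all delivered messages have been handled.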

