Nvidia Touts Next Generation GPU Superchip and New Photonic Switches

Nvidia used its GTC conference today to introduce new GPU superchips, including the second generation of its current Grace Blackwell chip, as well as the next generation, dubbed the Vera Rubin. Jensen Huang, the company’s founder and CEO, also touted new DGX systems and discussed how the power crunch is driving Nvidia to use photonics to move data more efficiently.

Nvidia is a GPU company, so naturally everyone at the GPU Technology Conference (GTC) wanted to hear what Nvidia had up its GPU sleeves. Huang delivered that, and more, during a two-hour-plus keynote address at a packed SAP Center in downtown San Jose, California.

Expected in the second half of 2026, Rubin will sport 288GB of high-bandwidth memory 4 (HBM4) versus the HBM3e found in the Blackwell Ultra, which the company also announced today. It will be manufactured by TSMC using a 3nm process, as Huang first disclosed back in 2024.

Nvidia will pair Rubin GPUs with CPUs dubbed Vera. Nvidia’s newest CPU will sport 88 custom Arm cores and feature 4.2 times the RAM of Grace (its current CPU) and 2.4 times the memory bandwidth. Overall, Vera will deliver twice the performance of Grace, Nvidia said.

Nvidia will combine the Vera and Rubin chips, just as it has with Grace Blackwell, to deliver a superchip that has one CPU and two fused GPUs. Nvidia plans to deliver the first generation of its Vera Rubin superchip in the second half of 2026. It plans to follow that up with a second-generation Vera Rubin superchip, dubbed Vera Rubin Ultra, which sports four GPUs.

The new generation of chips, including Blackwell Ultra and Rubin, will deliver big increases in compute capacity. Compared to the previous generation of Hopper chips, Blackwell is delivering a 68x speedup, whereas Rubin will deliver a 900x speedup, according to Huang. In terms of price-performance, Blackwell comes in at 0.13 of Hopper’s cost, while Rubin will push that ratio down to 0.03.
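A quick back-of-the-envelope conversion makes these ratios easier to read. The Python sketch below assumes that the 0.13 and 0.03 figures are costs relative to Hopper (Hopper = 1.0) for an equivalent amount of work; that reading, along with the dictionary and function names, is an illustrative assumption rather than anything Nvidia published.

```python
# Relative figures Huang cited at GTC, normalized so that Hopper = 1.0.
# ASSUMPTION: "cost" is read as relative cost for the same amount of work.
SPEEDUP = {"Hopper": 1.0, "Blackwell": 68.0, "Rubin": 900.0}
RELATIVE_COST = {"Hopper": 1.0, "Blackwell": 0.13, "Rubin": 0.03}


def improvement_over_hopper(cost_ratio: float) -> float:
    """Turn a relative cost (Hopper = 1.0) into an 'x times cheaper' factor."""
    return 1.0 / cost_ratio


def generational_ratio(figures: dict, newer: str, older: str) -> float:
    """How many times larger the newer chip's figure is than the older one's."""
    return figures[newer] / figures[older]


if __name__ == "__main__":
    for chip in ("Blackwell", "Rubin"):
        factor = improvement_over_hopper(RELATIVE_COST[chip])
        print(f"{chip}: {SPEEDUP[chip]:.0f}x speedup, about {factor:.1f}x cheaper than Hopper")
    rubin_vs_blackwell = generational_ratio(SPEEDUP, "Rubin", "Blackwell")
    print(f"Rubin vs. Blackwell speedup: about {rubin_vs_blackwell:.1f}x")
```

Under that reading, Blackwell works out to roughly 7.7 times cheaper than Hopper and Rubin to roughly 33 times cheaper, while Rubin’s cited speedup is about 13 times Blackwell’s.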

Nvidia is chasing burgeoning generative AI workloads, including training massive AI models as well as running inference workloads. While training an AI model may take weeks or months and require huge amounts of data, inference workloads are expected to demand many times more compute than training.

The emergence of agentic AI, whereby reasoning models handle more complex tasks on behalf of humans, will drive significant demand for the GPU maker’s compute, Huang said. “Compute for agentic AI is 100x what we thought we needed last year,” he said during the keynote.

How companies build data centers to support agentic AI is also changing. According to Huang, data centers are becoming AI factories that generate tokens.

“We’re seeing the inflection point happening in the data center buildouts,” Huang said. “They’re AI factories because it has one job and one job only: Generating these incredible tokens that we then reconstitute into music, into words, into videos, into research, into chemicals and proteins.”

The huge demands of these AI factories will bump up against energy supplies, thereby driving demand for greater efficiency. One way that Nvidia plans to boost efficiency is by adopting optical networking technology to move data between GPUs.

Huang demonstrated new photonic hardware, including serializers/deserializers (SerDes), lasers, and glass, that Nvidia co-developed with ecosystem partners for DGX systems. The hardware will move bits at a fraction of the cost of straight copper. The first of the new photonic switches, Quantum-X Photonics for InfiniBand, will ship in the second half of 2025. The Ethernet version, Spectrum-X Photonics, will ship in the second half of 2026.

“This is really crazy technology, crazy, crazy technology,” Huang said during the keynote. “It is the world’s first 1.6 terabit per second CPO [co-packaged optics]. It is based on a technology called micro ring resonator modulator, and it is completely built with this incredible process technology at TSMC that we’ve been working with for some time.”

Stay tuned for more coverage of Nvidia’s GTC conference.

This article first appeared on BigDATAwire.

![Apple C1 vs Qualcomm Modem Performance [Speedtest]](https://www.iclarified.com/images/news/96767/96767/96767-640.jpg)

![Apple Studio Display On Sale for $1249 [Lowest Price Ever]](https://www.iclarified.com/images/news/96770/96770/96770-640.jpg)

![Alleged Case for Rumored iPhone 17 Air Surfaces Online [Image]](https://www.iclarified.com/images/news/96763/96763/96763-640.jpg)

![Google Home camera history adds double-tap to quick seek [U]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2023/08/nest-cam-google-home-app-1.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Galaxy Tab S10 FE leak reveals Samsung’s 10.9-inch and 13.1-inch tablets in full [Gallery]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/03/galaxy-tab-s10-fe-wf-10.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

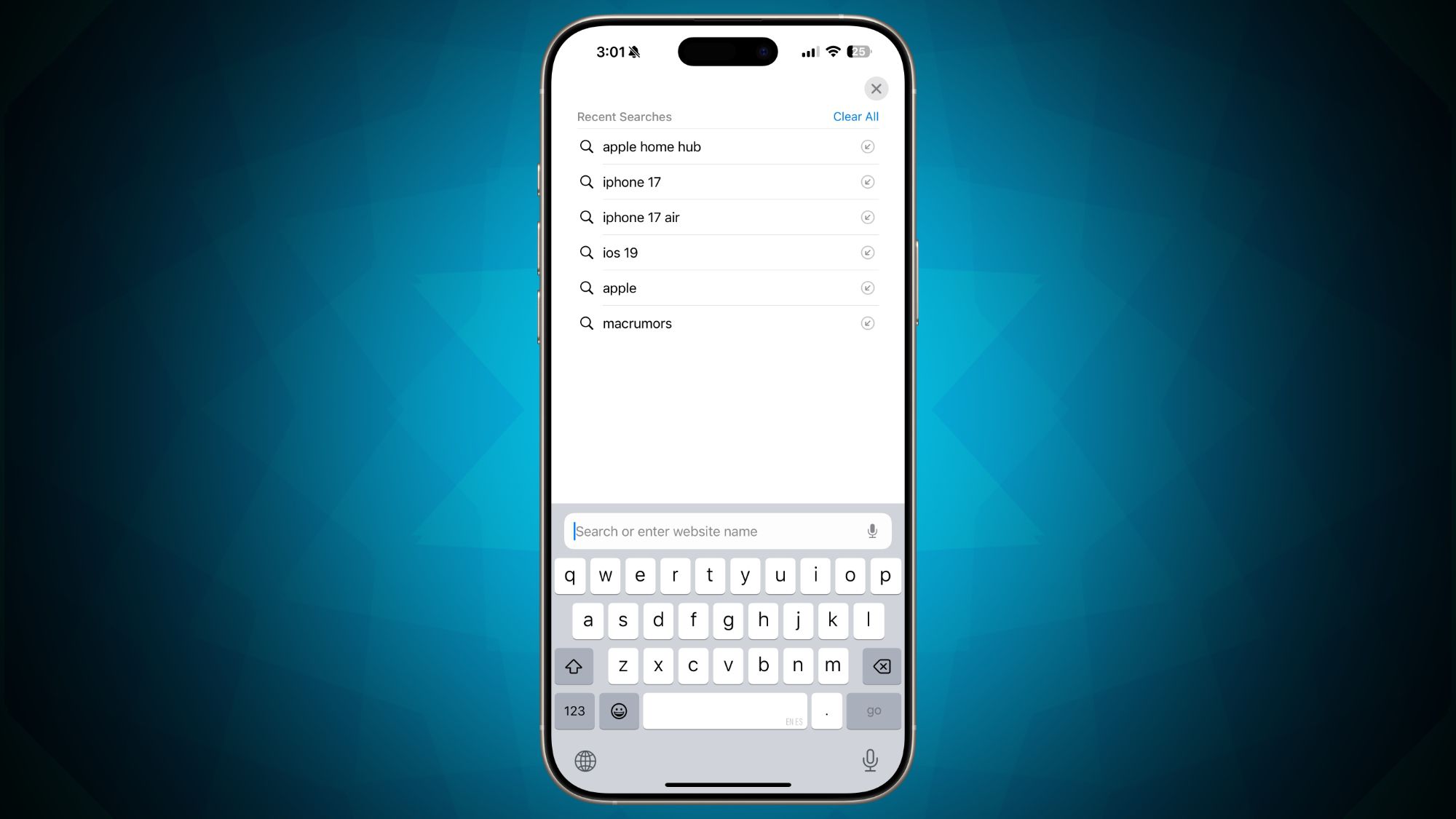

![iOS 18.4 makes your Safari search history way more visible, for better or worse [U]](https://i0.wp.com/9to5mac.com/wp-content/uploads/sites/6/2023/02/New-iPhone-browsers.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

_NicoElNino_Alamy.png?#)

_Kjetil_Kolbjørnsrud_Alamy.jpg?#)

![[The AI Show Episode 139]: The Government Knows AGI Is Coming, Superintelligence Strategy, OpenAI’s $20,000 Per Month Agents & Top 100 Gen AI Apps](https://www.marketingaiinstitute.com/hubfs/ep%20139%20cover-2.png)

![[The AI Show Episode 138]: Introducing GPT-4.5, Claude 3.7 Sonnet, Alexa+, Deep Research Now in ChatGPT Plus & How AI Is Disrupting Writing](https://www.marketingaiinstitute.com/hubfs/ep%20138%20cover.png)

-I-Interviewed-Niantic-about-Selling-Pokémon-GO-to-Scopely-00-14-13.png?width=1920&height=1920&fit=bounds&quality=80&format=jpg&auto=webp#)

.jpg?#)