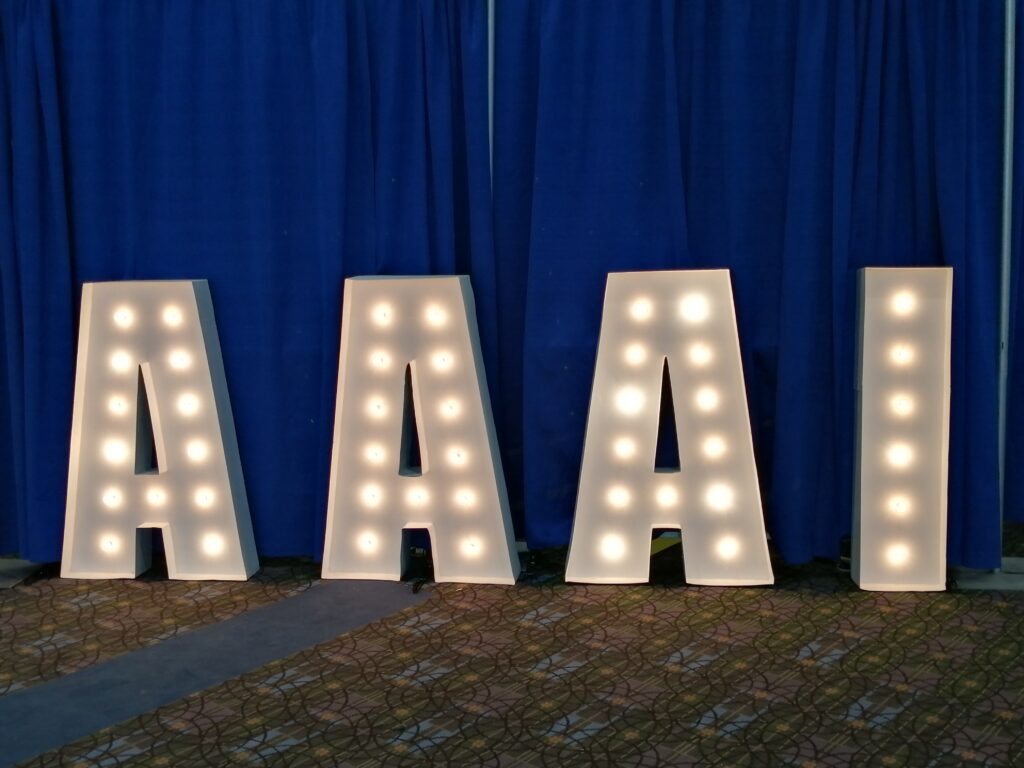

#AAAI2025 workshops round-up 1: Artificial intelligence for music, and towards a knowledge-grounded scientific research lifecycle

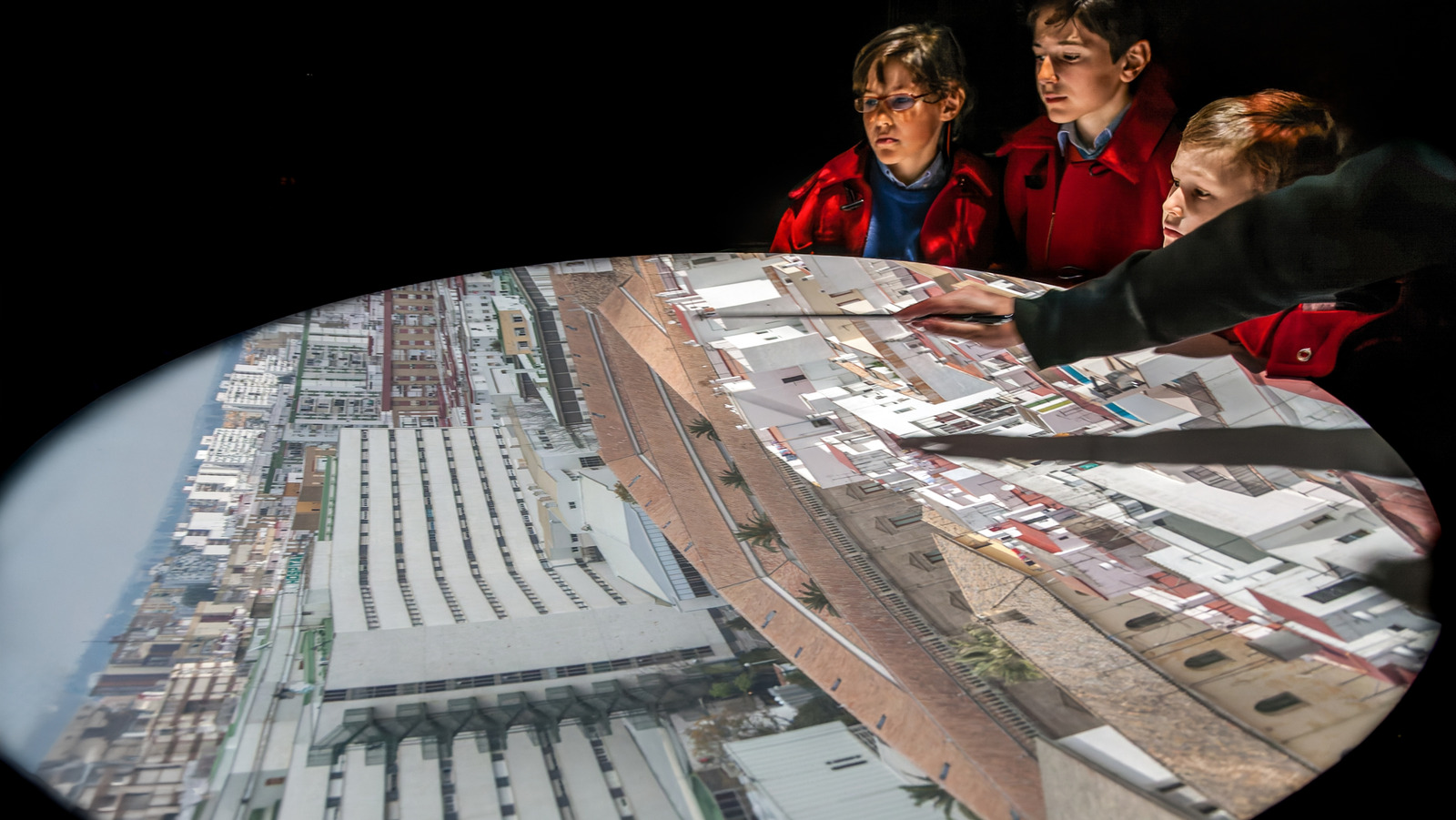


Top: Group shot from the workshop “Artificial Intelligence for Music”. Bottom: Two best paper award winners at the workshop: “AI4Research: Towards a Knowledge-grounded Scientific Research Lifecycle”.


In a series of articles, we’ll be publishing summaries with some of the key takeaways from a few of the workshops held at the 39th Annual AAAI Conference on Artificial Intelligence (AAAI 2025). In this first round-up, we hear from the organisers of the workshops on:

- AI4Research: Towards a Knowledge-grounded Scientific Research Lifecycle

- Artificial Intelligence for Music

AI4Research: Towards a Knowledge-grounded Scientific Research Lifecycle

By Qingyun Wang

Organisers: Qingyun Wang, Wenpeng Yin, Lifu Huang, May Fung, Xinya Du, Carl Edwards, Tom Hope

This workshop focused on grounding AI methods in existing scientific publications and experimental datasets to discover potential “Sleeping Beauties”.

The three main takeaways from the event were:

- The workshop featured 20 accepted papers across diverse application areas, including five oral presentations and 15 posters. Research topics ranged from agent debate evaluation and taxonomy expansion to hypothesis generation, AI4Research benchmarks, caption generation, drug discovery, and financial auditing. Additionally, the workshop hosted a dedicated mentoring session for early-career researchers.

- The workshop had six inspiring invited talks from academic and industry experts covering a wide range of research topics. Professor Wei Wang (UCLA) presented work on multimodal scientific foundation models for knowledge extraction and synthesis. Next, Professor Marinka Zitnik (Harvard & Broad Institute) shared recent progress on “AI Scientists”, which apply diffusion models and large language models to biomedical discovery. Professor Doug Downey (AI2 & Northwestern) presented ScholarQA, a state-of-the-art literature-based long-form question-answering assistant from AI2, and highlighted possible future directions. Professor Aviad Levis (University of Toronto) introduced physics-constrained neural fields for 3D imaging in astronomy. Professor Jinho Choi (Emory) gave a talk about AI-assisted scientific writing and explored potential solutions to the growing peer-review crisis in academic publishing. Finally, Dr Cong Lu (DeepMind) described work on fully autonomous, open-ended scientific discovery in the machine learning domain.

- Professor Doug Downey, Professor Aviad Levis, Professor Jinho Choi, and Dr Cong Lu took part in an insightful panel discussion on emerging methods, challenges, and ethical considerations in AI4Research, including the potential social impact of replacing incremental research with automatic AI systems. The panelists also discussed potential copyright issues in using LLM tools to help researchers discover new hypotheses, solutions to the existing peer-review crisis, and the exponential growth of papers in the machine learning domain.

Artificial Intelligence for Music

By Yung-Hsiang Lu

Organisers: Yung-Hsiang Lu, Kristen Yeon-Ji Yun, George K. Thiruvathukal, Benjamin Shiue-Hal Chou

This workshop explored the dynamic intersection of artificial intelligence and music. It covered topics including the impact of AI on music education and musicians’ careers, AI-driven music composition, AI-assisted sound design, AI-generated audio and video, and legal and ethical considerations of AI in music.

The three main takeaways from the event were:

- Artificial Intelligence technologies should be designed for users. Technologists should collaborate with musicians and understand users’ needs.

- AI technologies can provide many benefits to musicians and music students, including composition, error detection, adaptive accompaniment, transcription, generating video from music, and generating music from video.

- Major barriers to further improvements include (1) a lack of training data, (2) a lack of widely accepted metrics for evaluation, and (3) the difficulty of finding experts in both technology and music.
