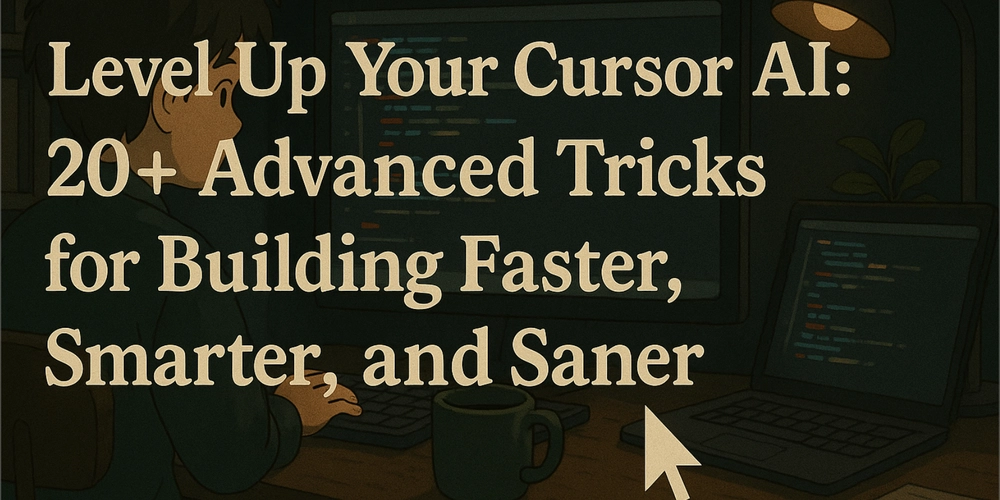

Stop Fighting Your AI: 20+ Advanced Cursor / Windsurf Tricks for Vibe Coding Harder, Better, Faster, and Stronger


Cursor AI or Windsurf, maybe Cline? They promise a coding utopia: blasting through features, untangling bugs, writing docs while you sip coffee. And sometimes, they deliver glimpses of that dream. More often? It feels like wrestling a brilliant but deeply eccentric octopus. Hallucinations that make Dali look tame, inexplicable code deletions, stubborn refusal to follow simple instructions, getting stuck in loops that defy logic... sound familiar?

You're not alone. The frustration is real. But here's the secret whispered in countless Reddit threads, blog posts, and late-night debugging sessions: Cursor isn't just a tool, it's an environment. And you're probably using it wrong.

Most developers, even seasoned pros, approach Cursor like a supercharged autocomplete or a slightly smarter search engine. They fire off prompts, hope for the best, and then spend hours cleaning up the mess when the AI inevitably goes off the rails. That's like using a Formula 1 car for grocery runs – you're missing the point and probably crashing a lot.

The real power emerges when you shift your mindset. Treat Cursor not as a simple assistant, but as a programmable co-developer. It requires structure, discipline, clear context, and strategic guidance. It needs you to be the architect, the lead, the one who defines the rules of engagement.

I've synthesized insights from numerous developer workflows, community discussions (shoutout to r/cursor!), and expert posts (like Geoffrey Huntley's deep dives) to compile the ultimate guide – over 20 enhanced tricks that go far beyond basic prompting. This isn't just about using Cursor; it's about mastering it.

Ready to transform your AI coding experience from a source of frustration into a reliable engine of productivity? Let's dive in.

Part 1: The Bedrock - Core Workflow Patterns for Reliable AI Interaction

These foundational tricks are non-negotiable. Skip these, and you're building on shaky ground, guaranteeing frustration down the line.

Trick #1: The Plan-Then-Act Mandate (Your Most Critical Habit)

- The Pain Point: You ask Cursor to implement a feature. It instantly spews code... that completely misunderstands a crucial requirement or ignores vital context. You've wasted time and credits on code destined for the delete key.

- The Game-Changer: NEVER let Cursor generate code immediately for any non-trivial task. Institute a mandatory planning phase first. This single change dramatically reduces errors and ensures alignment.

- How to Implement:

1. **Prompt for Detailed Plan (with Code Embargo):** When initiating a task (Chat `Cmd+L`, Edit `Cmd+K`, Composer `Cmd+I`), be explicit:

   Implement user authentication using JWT tokens with Supabase Auth. Store user profiles in a 'profiles' table linked by user ID.

   **IMPORTANT: First, present a detailed step-by-step plan.** Include:

   1. Required Supabase setup (table schema, RLS policies if applicable).
   2. Frontend components needed (Login form, Signup form, Profile display).
   3. API routes needed (e.g., `/api/auth/login`, `/api/auth/signup`, `/api/profile`).
   4. State management approach (e.g., Zustand, Context API).
   5. Key functions/logic for handling JWTs (generation, verification, storage).
   6. List the specific files you intend to create or modify.
   7. Identify any potential challenges or questions you have for me.

   **DO NOT WRITE ANY CODE YET. Wait for my explicit 'Proceed' command after I review this plan.**

2. **Scrutinize the Plan:** Read the AI's plan *carefully*. Did it grasp the requirements? Did it acknowledge the context files (`@file.js`) you provided? Is the chosen approach sound (e.g., correct Supabase functions, secure JWT handling)?

3. **Iterate & Refine:** If the plan is flawed or incomplete, provide specific, corrective feedback. *"No, JWTs should be stored in HttpOnly cookies, not localStorage. Update step 5 of the plan."* or *"Your plan missed adding RLS policies to the 'profiles' table. Add that to step 1."* Continue refining until the plan is solid.

4. **Grant Explicit Permission:** Once you approve the plan, give a clear, separate command: *"Plan approved. **Proceed** with implementing the code based *exactly* on the steps outlined in the plan you provided."*
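If you find yourself retyping the plan-first prompt for every task, a tiny template helper can keep the embargo wording consistent. The following is a minimal sketch, not a Cursor feature: `build_plan_prompt` and `EMBARGO` are hypothetical names, and the output is just text you would paste into Chat or Composer.

```python
from typing import Sequence

# The embargo sentence from the prompt above, kept in one place so it never drifts.
EMBARGO = (
    "DO NOT WRITE ANY CODE YET. "
    "Wait for my explicit 'Proceed' command after I review this plan."
)


def build_plan_prompt(task: str, plan_sections: Sequence[str]) -> str:
    """Assemble a plan-first prompt: task, numbered plan sections, then the embargo."""
    if not plan_sections:
        raise ValueError("at least one plan section is required")
    lines = [
        task.strip(),
        "",
        "IMPORTANT: First, present a detailed step-by-step plan. Include:",
    ]
    lines += [f"{i}. {section}" for i, section in enumerate(plan_sections, start=1)]
    lines += ["", EMBARGO]
    return "\n".join(lines)


prompt = build_plan_prompt(
    "Implement user authentication using JWT tokens with Supabase Auth.",
    [
        "Required Supabase setup (table schema, RLS policies if applicable).",
        "List the specific files you intend to create or modify.",
        "Identify any potential challenges or questions you have for me.",
    ],
)
print(prompt)
```

The embargo always comes last on purpose: it is the final thing the model reads before it answers.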

Trick #2: The Markdown Task Checklist (Your AI's Sequential Guide)

- The Pain Point: For features involving multiple steps, the AI easily gets lost, skips crucial actions, or performs them out of sequence, leading to chaos.

- The Game-Changer: Use a simple Markdown file with GitHub-flavored checkboxes (`- [ ]`) as a shared, sequential task list and live progress tracker. This is a community favorite for a reason: it works.

- How to Implement:

1. **Generate Checklist Post-Plan:** After approving the plan (Trick #1), instruct the AI:

   Okay, create a new file named `TASK_implement_jwt_auth.md`. Based on the approved plan, list all necessary implementation steps as GitHub-style checkboxes (`- [ ] Step Description`). Use sub-bullets for granular details within each main step.

2. **Execute Sequentially via Checklist:** For the implementation phase, give this instruction:

```markdown

Now, execute the tasks listed in `@TASK_implement_jwt_auth.md` **strictly one by one, in order.** Start with the very first unchecked item (`- [ ]`).

**CRITICAL: After you successfully complete each step, you MUST update the `@TASK_implement_jwt_auth.md` file by changing the corresponding checkbox from `- [ ]` to `- [x]`.**

Only proceed to the *next* unchecked item after confirming the previous one is checked off in the file. Announce which step you are starting.

```

- Benefits: Enforces sequential execution, breaks work down visibly, provides a real-time progress indicator for both you and the AI, dramatically increases reliability for multi-step features.

# TASK_implement_jwt_auth.md Example

- [ ] **1. Setup Supabase 'profiles' Table**
  - [ ] Define SQL for table creation (id, user_id FK, username, avatar_url).
  - [ ] Add RLS policy: Users can only select/update their own profile.
  - [ ] Run migration/SQL via Supabase dashboard or CLI.
- [ ] **2. Create API Route: Signup (`/api/auth/signup`)**
  - [ ] Implement POST handler.
    * [ ] Validate input (email, password).
    * [ ] Call `supabase.auth.signUp`.
    * [ ] Create corresponding profile entry on success.
    * [ ] Return appropriate success/error response.
- [ ] **3. Create API Route: Login (`/api/auth/login`)**
  - [ ] Implement POST handler.
    * [ ] Validate input.
    * [ ] Call `supabase.auth.signInWithPassword`.
    * [ ] On success, generate JWT (if needed beyond Supabase session) and set HttpOnly cookie.
    * [ ] Return user info/success response.
- [ ] **4. Create Login Form Component (`components/LoginForm.tsx`)**
  - [ ] Build basic form structure (email, password, submit button).
  - [ ] Implement state management for form fields.
  - [ ] Implement `onSubmit` handler to call `/api/auth/login` route.
  - [ ] Handle loading and error states.
  - [ ] Redirect on successful login.
- [ ] **5. Create Signup Form Component (`components/SignupForm.tsx`)**
  - [ ] Build form structure.
  - [ ] Implement state and `onSubmit` handler for `/api/auth/signup`.
  - [ ] Handle loading/error states.
- [ ] **6. Implement Client-Side Auth Context/Store**
  - [ ] Setup Zustand store (or Context) to hold user session state.
  - [ ] Create logic to check for existing session/cookie on app load.
- [ ] **7. Create Profile Display Component (`components/ProfileDisplay.tsx`)**
  - [ ] Fetch profile data for logged-in user from `/api/profile` (or directly if using Supabase client-side).
  - [ ] Display username, avatar, etc.
- [ ] **8. Protect Routes/Pages**
  - [ ] Implement logic (e.g., middleware, HOC) to redirect unauthenticated users from protected pages.
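Because the checklist is plain Markdown, you can also check the AI's bookkeeping yourself. Here is a rough sketch, assuming the `- [ ]` / `- [x]` convention shown above; `parse_checklist` and `next_unchecked` are hypothetical helper names, not part of Cursor.

```python
import re
from typing import NamedTuple, Optional

# Matches "- [ ] text", "- [x] text" and the "* [ ]" variant, at any indentation.
CHECKBOX = re.compile(r"^(\s*)[-*] \[( |x|X)\] (.+)$")


class Task(NamedTuple):
    line_no: int  # 1-based line number in the checklist file
    depth: int    # width of leading whitespace, a rough nesting hint
    done: bool
    text: str


def parse_checklist(markdown: str) -> list[Task]:
    """Collect every checkbox line, in file order."""
    tasks = []
    for line_no, line in enumerate(markdown.splitlines(), start=1):
        match = CHECKBOX.match(line)
        if match:
            indent, mark, text = match.groups()
            tasks.append(Task(line_no, len(indent), mark.lower() == "x", text.strip()))
    return tasks


def next_unchecked(tasks: list[Task]) -> Optional[Task]:
    """The first unchecked item is the only step the AI should be working on."""
    return next((t for t in tasks if not t.done), None)


sample = """\
- [x] **1. Setup Supabase 'profiles' Table**
  - [x] Define SQL for table creation.
- [ ] **2. Create API Route: Signup (`/api/auth/signup`)**
  - [ ] Implement POST handler.
    * [ ] Validate input (email, password).
"""
tasks = parse_checklist(sample)
todo = next_unchecked(tasks)
done = sum(t.done for t in tasks)
print(f"{done}/{len(tasks)} done; next: {todo.text}")
```

Run it between steps: if the AI announces it is starting step 4 while the first unchecked line is still in step 2, that is your cue to stop it.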

Trick #3: Core Context Files (Project_Plan.md, Documentation.md) - Implanting Project Knowledge

- The Pain Point: The AI operates in a vacuum. It doesn't inherently know your project's purpose, architecture, constraints, or specific technical choices. Relying solely on `@codebase` gives it structure but not intent or rules.

- The Game-Changer: Create and diligently maintain two central Markdown files in your project root to serve as the AI's persistent brain.

- How to Implement:

  - `Project_Plan.md` (or `Scope.md`, `Milestones.md`): The "Why" and "What".
    - Project Goal & Vision: 1-2 sentence summary. Who is this for? What problem does it solve?
    - Core Features List: High-level bullet points of major functionalities.
    - Tech Stack Summary: Languages (versions), frameworks, databases, key libraries/services (e.g., "Next.js 14, React 18, TypeScript 5, Supabase (PostgreSQL), Zustand, TailwindCSS").
    - Architectural Principles/Decisions: Crucial patterns (e.g., "Modular Monolith," "Clean Architecture layers," "Event-driven communication via Redis pub/sub"), data flow overview, significant design choices (and briefly, why they were made).
    - Development Phases/Roadmap: Broad stages (e.g., "Phase 1: Core Auth & CRUD," "Phase 2: Real-time Features").

  - `Documentation.md` (or `Tech_Specs.md`): The "How".
    - API Contracts: Detailed request/response schemas (use OpenAPI snippets or similar), authentication methods, rate limits for critical internal/external APIs.
    - Database Schema Details: Key table definitions (beyond basic structure, note important constraints, indexing strategies, RLS policies if not using MCP server).
    - Core Data Models/Types: Definitions for important TypeScript interfaces, shared data structures.
    - Key Function/Module Specs: Purpose, parameters, return values, and usage notes for critical utility functions or core modules.
    - Project Conventions: Specific naming conventions (e.g., "API routes use `kebab-case`, React components use `PascalCase`"), preferred error handling patterns, state management rules, library usage guidelines unique to this project.

- Usage Protocol:

  - Constant Reference: Include `@Project_Plan.md @Documentation.md` in the context (@mentions) for almost every significant prompt, especially when starting new features, performing refactoring, or debugging complex issues.
  - Rule Reinforcement: Use `.cursorrules` (see Trick #6) to mandate consultation: "Rule: Before implementing any new feature, consult @Project_Plan.md for alignment with project goals and @Documentation.md for relevant technical standards and API contracts."
  - Keep `Documentation.md` Alive (Crucial!): This requires discipline. As you or the AI modify APIs, database schemas, or core patterns, this file must be updated immediately. Outdated specs actively mislead the AI. Use prompts: "The `updateUserProfile` function signature changed. Update its specification in `@Documentation.md`."

Trick #4: Granular Structured Docs (Scaling Context for Mammoth Projects)

- The Pain Point: In truly massive projects, a single `Documentation.md` becomes a bloated nightmare, making it hard for the AI (and humans!) to find specific information quickly.

- The Game-Changer: Decompose your technical documentation into a logical hierarchy of smaller, focused Markdown files, often within a dedicated `/docs` or `/.context` directory.

- How to Implement: Create specific files as needed, mirroring your project structure or domain:

  - `/docs/api/AuthService.md`
  - `/docs/api/PaymentGateway.md`
  - `/docs/database/Schema_Orders.md`
  - `/docs/architecture/DataFlow.md`
  - `/docs/features/CheckoutProcess.md`
  - `/docs/ui/DesignSystemComponents.md`
  - `/docs/conventions/ErrorHandling.md`

- Usage: Reference only the most relevant granular files in your prompts. "Refactor the checkout confirmation step according to the spec in `@docs/features/CheckoutProcess.md`, using the API defined in `@docs/api/OrderService.md` and adhering to UI patterns in `@docs/ui/DesignSystemComponents.md`."

- AI Maintenance (via Rules): "Rule: When modifying code related to the Order Service API, ensure changes are consistent with `@docs/api/OrderService.md`. If inconsistencies are found or changes require documentation updates, note them or suggest updates to the file."

Trick #5: The Persistent "Memory Bank" (AI_MEMORY.md) - Teaching Project Quirks

- The Pain Point: LLMs are inherently stateless. Critical project-specific learnings, workarounds for buggy libraries, specific configuration needs, or established "gotchas" are easily forgotten between sessions or even within complex conversations.

- The Game-Changer: Create a dedicated file where you (and the AI) explicitly record persistent, project-specific knowledge nuggets. This acts as a long-term memory supplementing the main documentation.

- How to Implement:

1. **Create the File:** Add `AI_MEMORY.md` (or `Project_Knowledge_Base.md`) to your project root.

2. **Instruct via Rules (Recommended):** In `.cursor/rules/memory.mdc`:

       # Rule: AI Memory Bank Management
       # Description: Records and retrieves persistent project-specific knowledge, workarounds, and nuances.

       name: record_learning_to_memory
       description: Upon identifying a significant project-specific learning, workaround, configuration detail, or established convention not obvious from general docs, add it to AI_MEMORY.md.
       filters:
         - type: intent
           pattern: "(project_learning|workaround_discovered|config_quirk|established_pattern)" # Hypothetical intents
       actions:
         - type: suggest # Or potentially 'execute' if you trust the AI enough
           message: |
             Identified a learning opportunity. Please add a concise entry to `@AI_MEMORY.md` under an appropriate heading (e.g., ## Library Workarounds, ## Deployment Quirks, ## API Usage Patterns).
             Example entry format:
             `### Library X Bug - Cache Invalidation
             - **Issue:** Library X v1.2 has a bug where its internal cache isn't invalidated correctly after operation Y.
             - **Workaround:** Must manually call `LibraryX.clearCache('key_pattern')` after performing operation Y. See #issue-123.
             - **Date:** 2024-07-26`

       name: consult_memory_bank
       description: At the start of analyzing a task, consult @AI_MEMORY.md for any relevant stored knowledge that might impact the implementation.
       filters:
         - type: event
           pattern: "task_analysis_start" # Hypothetical event
       actions:
         - type: suggest # Instruct AI to mentally note relevant items
           message: "Consulting `@AI_MEMORY.md`. Please factor any relevant entries into your plan and implementation."

3. **Manual Prompting:** *"That fix for the weird rendering bug in Safari is crucial. Add a note to `@AI_MEMORY.md` under 'Browser Quirks' explaining that we need to force a reflow using `element.offsetHeight` after updating the component state."*

- Benefits: Builds a durable, project-specific knowledge base over time. Reduces repetitive explanations of known issues. Improves consistency by reminding the AI of established workarounds or conventions.
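If you'd rather add entries yourself than trust the AI to file them, the entry format is simple enough to script. This is a sketch under assumptions: `add_memory_entry` is a hypothetical helper, and the `##` section / `###` entry layout follows the example format in the rule above rather than any fixed standard.

```python
from datetime import date


def add_memory_entry(memory_md: str, heading: str, title: str,
                     issue: str, workaround: str, on: date) -> str:
    """Return memory_md with a new entry filed under '## heading' (created if missing)."""
    entry = [
        f"### {title}",
        f"- **Issue:** {issue}",
        f"- **Workaround:** {workaround}",
        f"- **Date:** {on.isoformat()}",
        "",
    ]
    lines = memory_md.splitlines()
    header = f"## {heading}"
    if header not in lines:
        # Unknown section: append it, with its first entry, at the end of the file.
        if lines and lines[-1] != "":
            lines.append("")
        lines += [header, ""] + entry
    else:
        start = lines.index(header) + 1
        # The section runs until the next '## ' heading or the end of the file.
        end = next((i for i in range(start, len(lines)) if lines[i].startswith("## ")),
                   len(lines))
        block = entry if lines[end - 1] == "" else [""] + entry
        lines[end:end] = block
    return "\n".join(lines).rstrip("\n") + "\n"


memory = "# AI Memory\n\n## Library Workarounds\n\n## Deployment Quirks\n"
updated = add_memory_entry(
    memory,
    heading="Browser Quirks",
    title="Safari Rendering Bug",
    issue="Weird rendering bug in Safari.",
    workaround="Force a reflow using `element.offsetHeight` after updating the component state.",
    on=date(2024, 7, 26),
)
print(updated)
```

The function returns the new text instead of writing the file, so you can eyeball the result (or diff it) before saving.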

Part 2: Mastering the Rules - Programming Your AI Co-Developer

Prompts are temporary; rules are persistent. Mastering .cursorrules (and the newer .cursor/rules/ structure) is key to truly programming the AI's behavior for your project.

Trick #6: Embrace the .cursor/rules/ Directory Structure

- The Pain Point: A single, massive `.cursorrules` file becomes hard to manage, understand, and selectively apply.

- The Game-Changer: Use the modern, recommended approach: create a `.cursor/rules/` directory at your project root. Inside, organize your rules into logical, separate `.mdc` files.

- How to Implement:

  - Create the directory: `mkdir -p .cursor/rules`
  - Create focused rule files:
    - `.cursor/rules/core-principles.mdc` (General coding standards)
    - `.cursor/rules/react-best-practices.mdc` (React-specific rules)
    - `.cursor/rules/api-usage.mdc` (Rules for interacting with your APIs)
    - `.cursor/rules/testing-policy.mdc` (Testing requirements)
    - `.cursor/rules/scope-control.mdc` (Rules from Trick #14)
    - `.cursor/rules/changelog.mdc` (Rules from Trick #15)
    - `.cursor/rules/memory.mdc` (Rules from Trick #5)

- Benefits: Modularity (easy to enable/disable sets of rules), better organization, easier maintenance, clearer separation of concerns. Cursor automatically loads rules from this directory.
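To set this layout up in one go, something like the following would do. It is only a sketch: `scaffold_rules` is a hypothetical helper, and the one-line placeholder it writes into each file is my own stand-in, not the `.mdc` format itself, so replace it with real rules before relying on it.

```python
import tempfile
from pathlib import Path

# File names and purposes come from the list above.
RULE_FILES = {
    "core-principles.mdc": "General coding standards",
    "react-best-practices.mdc": "React-specific rules",
    "api-usage.mdc": "Rules for interacting with your APIs",
    "testing-policy.mdc": "Testing requirements",
    "scope-control.mdc": "Rules from Trick #14",
    "changelog.mdc": "Rules from Trick #15",
    "memory.mdc": "Rules from Trick #5",
}


def scaffold_rules(project_root: Path) -> list[Path]:
    """Create .cursor/rules/ and any missing rule files; return the files created."""
    rules_dir = project_root / ".cursor" / "rules"
    rules_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for name, purpose in RULE_FILES.items():
        path = rules_dir / name
        if not path.exists():  # never overwrite rules you have already written
            path.write_text(f"# {purpose}\n", encoding="utf-8")  # placeholder only
            created.append(path)
    return created


# Demo in a throwaway directory; point it at your real project root instead.
with tempfile.TemporaryDirectory() as tmp:
    created_names = sorted(p.name for p in scaffold_rules(Path(tmp)))
    second_run = scaffold_rules(Path(tmp))
print(created_names, second_run)
```

Running it twice is safe: the second pass creates nothing, because existing files are left alone.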

Trick #7: Keep Rules Concise, High-Level, and Secure

- The Pain Point: Overloading rules files with excessive detail, huge code blocks, or sensitive information confuses the AI, exceeds context limits, and creates security risks.

- The Game-Changer: Treat rules files like configuration or high-level policy documents, not exhaustive implementation guides.

- How to Implement (Discipline):

  - Focus on Principles & Constraints: Define what standards to follow (e.g., "Adhere to SOLID principles," "Use functional components in React"), what to avoid (e.g., "Do not use `any` type in TypeScript," "Avoid direct DOM manipulation"), and references to detailed docs (`@Documentation.md`).
  - Minimal Examples: If providing code examples, keep them short and illustrative of a pattern, not a full implementation.
  - No Secrets! (CRITICAL): NEVER put API keys, passwords, connection strings, or any sensitive credentials in `.cursorrules` files. They often end up in Git. Use environment variables or proper secrets management.
  - Standard Structure: Use clear Markdown headings or YAML keys for organization within each `.mdc` file.
  - Regular Maintenance: Treat rules like code: review, refactor, update, and prune them regularly as the project evolves. Remove stale rules.
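The "No Secrets" rule is easy to break by accident, so a quick scan before committing rules files is cheap insurance. The sketch below is a heuristic only: the patterns in `SECRET_PATTERNS` are my own illustrative guesses, will miss things, and can raise false alarms. A dedicated secret scanner is the better long-term answer.

```python
import re

# Illustrative patterns only; tune them for the credentials your project actually uses.
SECRET_PATTERNS = {
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "AWS access key id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "credential assignment": re.compile(
        r"(?i)\b(api[_-]?key|secret|password|token)\b\s*[:=]\s*['\"]?[A-Za-z0-9_\-/+]{12,}"
    ),
    "URL with embedded password": re.compile(r"\b[a-z][a-z0-9+.-]*://[^/\s:@]+:[^/\s@]+@"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern label) for every suspicious line."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_no, label))
    return hits


# Made-up rules content with two planted (fake) credentials.
rules = """\
Do not use `any` type in TypeScript.
Connect with postgres://admin:hunter2hunter2@db.internal:5432/app
api_key = "sk_live_0123456789abcdef"
Use environment variables for credentials.
"""
for line_no, label in find_secrets(rules):
    print(f"line {line_no}: possible {label}")
```

Read each `.mdc` file with `Path.read_text()` and feed it through `find_secrets` before you `git add` the rules directory.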

Trick #8: AI, Heal Thyself! The Self-Improving Rules Loop

- The Pain Point: Manually crafting rules for every specific project nuance or AI mistake is time-consuming.

- The Game-Changer (Advanced Meta-Skill): Train the AI to maintain its own ruleset based on your feedback! This turns corrections into permanent improvements. Championed by users like Geoffrey Huntley.

- How to Implement (The Feedback Cycle):

  - AI Error Occurs: Cursor suggests using `var` in JavaScript.
  - You Correct: "No, use `const` or `let` instead of `var`. Refactor this code to use `const`."
  - AI Corrects Code: Cursor changes `var` to `const`.
  - Prompt for Rule Update: Immediately: *"Based on the correction about using `const`/`let` instead of `var`, **update the rule file `.cursor/rules/javascript-style.mdc`** to enforce this standard for all future JavaScript code generation in this project. State the updated rule."*
  - Reinforce Correctness: AI generates elegant error handling. *"The way you used a custom error class and centralized logging here is exactly the pattern we want. **Author a new rule in `.cursor/rules/error-handling.mdc`** defining this as the standard approach."*

- Benefit: Creates a powerful feedback loop where the AI learns from its mistakes and successes within your specific project context, making it progressively more aligned and reliable over time.

Trick #9: Leverage Community Rule Templates & Examples

- The Pain Point: Why reinvent the wheel for common scenarios?

- The Game-Changer: The Cursor community actively shares `.cursorrules` / `.mdc` files for various languages, frameworks, and workflow patterns.

- How to Implement:

  - Explore: Search GitHub (for `.mdc`, `cursorrules`), X/Twitter (#CursorAI), Reddit (r/cursor), Cursor forums/Discord. Look for shared configurations.
  - Find Patterns: Seek out rules for things like:
    - Enforcing conventional commits.
    - Framework-specific best practices (React hooks rules, Django ORM usage).
    - Linting/formatting integration.
    - Test generation patterns (e.g., using Vitest, Jest).
    - Strict scope control / minimal change enforcement (Trick #14).
  - Adapt, Don't Just Adopt: Download promising `.mdc` files into your `.cursor/rules/` directory. Crucially, review and customize them. Ensure they align with your project's specific needs, tech stack versions, and conventions before relying on them.

Part 3: Workflow Discipline - Commanding the Coding Session

These tricks focus on the process of interacting with the AI to ensure you remain in control, maintain quality, and avoid common pitfalls.

Trick #10: Micro-Tasks, Micro-Commits (The Rhythm of Reliable AI Dev)

- The Pain Point: Large AI changes are hard to review, likely contain hidden bugs, and make rollbacks painful.

- The Game-Changer: Adopt a strict rhythm of tiny tasks followed immediately by verified commits. This is arguably the most crucial discipline for working effectively with AI.

- How to Implement (The Workflow Loop):

- Define Micro-Task: Identify the smallest possible next step (Trick #2 checklist helps).

- Prompt AI: Give the AI the clear instruction for only that micro-task.

- AI Generates: Cursor produces the code change (should be small).

- YOU VERIFY: Meticulously review the diff. Compile/run the code. Execute relevant unit tests. Does it actually work and meet the micro-task goal?

- COMMIT (If Verified): If and only if the change is correct and verified: `git add . && git commit -m "feat(auth): add basic email validation to signup form"` (Use Conventional Commits!).

- Repeat: Proceed to the next micro-task.

- Benefits: Creates an ultra-reliable safety net (easy rollbacks), makes code review manageable, isolates errors quickly, keeps you in firm control of the development flow.
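The "commit only if verified" gate can be partly scripted so you can't skip it when you're in a hurry. Below is a minimal sketch: `verify_then_commit` is a hypothetical helper, `pytest -q` is just an assumed test command (swap in your project's own), and it automates only the test run, not your review of the diff.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]  # runs a command and returns its exit code


def run_command(cmd: Sequence[str]) -> int:
    """Default runner: execute the command in the current directory."""
    return subprocess.run(list(cmd)).returncode


def verify_then_commit(message: str, test_cmd: Sequence[str],
                       run: Runner = run_command) -> bool:
    """Stage and commit only if test_cmd exits with 0; otherwise touch nothing."""
    if run(test_cmd) != 0:
        return False  # tests failed: leave the working tree as-is for inspection
    if run(["git", "add", "."]) != 0:
        return False
    return run(["git", "commit", "-m", message]) == 0


# Dry run with a fake runner that simulates a failing test suite.
calls: list[list[str]] = []


def fake_run(cmd: Sequence[str]) -> int:
    calls.append(list(cmd))
    return 1 if cmd[0] == "pytest" else 0


committed = verify_then_commit(
    "feat(auth): add basic email validation to signup form",
    ["pytest", "-q"],
    run=fake_run,
)
print(committed, calls)
```

In real use, drop the `run=` argument so the default runner executes the commands. The injectable runner is only there so the gate itself can be tested without touching a repository.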

Trick #11: Revert Aggressively - Abandon AI Debugging Spirals

- The Pain Point: The AI makes a mistake. You try to correct it. Its "fix" breaks something else. You explain again. It introduces a different bug. You're now deep in a frustrating, time-wasting spiral trying to debug the AI's confusion.

- The Game-Changer: Learn to recognize this spiral early and ruthlessly abandon the attempt. Your time is infinitely more valuable spent reverting to a known good state and restarting the failed step with more clarity.

- How to Implement:

- Recognize the Signs: Multiple back-and-forth corrections on the same small piece of code, AI ignoring instructions, introducing unrelated changes, expressing confusion.

- STOP Prompting. Do not engage further down the spiral.

- REVERT: Use Git immediately. If the bad changes are still uncommitted (the normal case when you follow Trick #10), `git reset --hard HEAD` discards everything since the last good commit; it leaves new untracked files behind, which `git clean -fd` removes. If the bad change was already committed, use `git reset --hard HEAD~1` to drop that commit locally, or `git revert HEAD` to create a reversing commit.

- Analyze Failure Cause: Look at the original micro-task instruction that led to the failure. Why did the AI struggle? Was the prompt ambiguous? Missing context? Still too complex?

- Refine & Retry: Break the failed micro-task down even further if needed. Clarify the prompt. Add more specific context (@mentions, examples). Then, attempt the corrected, smaller step(s) again from the known good state.

Trick #12: Strategic Model Selection (The Right AI Brain for the Task)

- The Pain Point: Using a single default AI model for everything is suboptimal. Complex planning needs different capabilities than simple code generation.

- The Game-Changer: Actively choose the best LLM available in Cursor for the specific job at hand.

- How to Implement (Task-Based Selection):

- Deep Reasoning Needed: For Trick #1 (Planning), Trick #13 (Forcing Reasoning), complex architectural design, or debugging tricky logical bugs → Use GPT-4o, Claude 3 Opus, or similar top-tier reasoning models.

- Code Implementation (from Plan): For generating code based on a clear, approved plan (Trick #1) → Use Claude 3.5 Sonnet or other efficient, capable models. They are often faster and cheaper.

- Specific Domains: If working with niche tech, experiment to find which model has better training data.

- Budget: Be aware of model costs/usage limits. Choose the most appropriate model you can afford for the task's complexity.

- When Stuck: If one model fails, try the exact same prompt with a different model!
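If it helps to make the habit explicit, the selection logic above boils down to a small lookup with a fallback. This is purely illustrative: `TaskKind` and `pick_model` are hypothetical names, the model names are the ones mentioned in this trick, and in practice you still pick the model by hand in Cursor's model selector.

```python
from enum import Enum
from typing import Iterable, Optional, Sequence


class TaskKind(Enum):
    PLANNING = "planning"
    ARCHITECTURE = "architecture"
    TRICKY_DEBUGGING = "tricky_debugging"
    IMPLEMENT_FROM_PLAN = "implement_from_plan"


# Preference order per task type, using the models named in the text.
MODEL_PREFERENCES = {
    TaskKind.PLANNING: ["GPT-4o", "Claude 3 Opus"],
    TaskKind.ARCHITECTURE: ["GPT-4o", "Claude 3 Opus"],
    TaskKind.TRICKY_DEBUGGING: ["GPT-4o", "Claude 3 Opus"],
    TaskKind.IMPLEMENT_FROM_PLAN: ["Claude 3.5 Sonnet"],
}


def pick_model(kind: TaskKind, available: Sequence[str],
               already_failed: Iterable[str] = ()) -> Optional[str]:
    """Preferred model first; otherwise any available model that has not failed yet."""
    failed = set(already_failed)
    preferred = MODEL_PREFERENCES[kind]
    fallbacks = [m for m in available if m not in preferred]
    for model in [*preferred, *fallbacks]:
        if model in available and model not in failed:
            return model
    return None


available = ["Claude 3.5 Sonnet", "Claude 3 Opus"]
first = pick_model(TaskKind.PLANNING, available)
retry = pick_model(TaskKind.PLANNING, available, already_failed=[first])
print(first, "->", retry)
```

The `already_failed` argument encodes the "When Stuck" advice: same prompt, different model.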

Trick #13: Force Explicit Reasoning (Demand the "Why")

- The Pain Point: AI might implement a solution without considering alternatives or explaining its choices, potentially leading to suboptimal or hard-to-maintain code.

- The Game-Changer: Use rules or prompts to compel the AI to articulate its reasoning process during implementation, not just in the initial plan.

- How to Implement:

  - In Rules (`.cursor/rules/reasoning.mdc`): "Rule: When choosing between multiple implementation approaches (e.g., different algorithms, state patterns) within a function, add a comment block explaining the choice, the alternatives considered, and the rationale (e.g., /* REASONING: Chose approach X for simplicity over approach Y (performance) as per project guidelines. */)."
  - In Prompts: "Implement the sorting logic for the user list. In a `.sort()` with a custom quicksort implementation for this specific data size and explain your chosen approach before writing the code." or "Before fixing this caching bug, analyze the potential race condition vs. stale data issue in a

Trick #14: Ruthless Scope Control Rules (Build Walls Around Tasks)

- The Pain Point: AI's tendency to "helpfully" refactor unrelated code, optimize things you didn't ask for, or touch files outside the immediate task scope, causing chaos.

- The Game-Changer: Implement very specific and strict rules to prevent this scope creep. Reinforces Trick #2.

- How to Implement (Example `.cursor/rules/scope-control.mdc`):

      # Rule: Strict Scope Enforcement
      # Description: Prevents AI from making changes outside the explicitly requested task.

      name: minimal_direct_changes_only
      description: Only make code modifications DIRECTLY required to fulfill the user's current, specific request.
      actions:
        - type: filter_suggestions # Hypothetical action type
          # Only allow changes related to the core request intent

      name: no_unsolicited_refactoring
      description: DO NOT perform any refactoring, optimization, code cleanup, renaming, or style fixes unless EXPLICITLY asked to do so by the user in the current prompt.
      actions:
        - type: reject
          conditions:
            - pattern: "intent:(optimize|refactor|cleanup|stylefix)" # Hypothetical intent pattern
              unless: "prompt_contains_explicit_request:(optimize|refactor|cleanup|style)"
          message: "Refactoring/Optimization not requested. Sticking strictly to the task."

      name: confirm_multi_file_changes
      description: If implementing the request requires changing MORE THAN ONE file, list the files and get explicit user confirmation ('Proceed' or similar) BEFORE making any changes.
      actions:
        # Logic to detect multi-file changes and prompt for confirmation

      name: log_suggestions_passively
      description: If unrelated potential improvements or issues are noticed, log them as comments (e.g., '// AI Suggestion: Consider refactoring X for clarity') or list them separately AFTER completing the main task. DO NOT implement them now.
      actions:
        # Logic to identify and log suggestions passively
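Rules ask the AI to stay in scope; you can also check after the fact whether it did. The sketch below assumes you collect the changed paths yourself (for example from `git diff --name-only`) and compare them against the files the task was allowed to touch. `out_of_scope` and `needs_confirmation` are hypothetical helpers, and the route and utility paths are made up for illustration.

```python
from fnmatch import fnmatchcase
from typing import Sequence


def out_of_scope(changed_files: Sequence[str], allowed_patterns: Sequence[str]) -> list[str]:
    """Return changed files that match none of the allowed glob patterns."""
    return [
        path for path in changed_files
        if not any(fnmatchcase(path, pattern) for pattern in allowed_patterns)
    ]


def needs_confirmation(changed_files: Sequence[str]) -> bool:
    """Mirror of the multi-file rule: more than one touched file needs a sign-off."""
    return len(set(changed_files)) > 1


# Hypothetical result of `git diff --name-only` after a signup-form task.
changed = [
    "components/SignupForm.tsx",
    "app/api/auth/signup/route.ts",
    "lib/utils.ts",
]
allowed = ["components/SignupForm.tsx", "app/api/auth/signup/*"]

strays = out_of_scope(changed, allowed)
print("out of scope:", strays)
print("needs confirmation:", needs_confirmation(changed))
```

Anything in `strays` is a candidate for reverting before you commit, no matter how helpful the change looks.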

Trick #15: The Auto-Updating Changelog (Automated Accountability)

- The Pain Point: Manually tracking every AI change in

Changelog.mdis error-prone. - The Game-Changer: Automate it using embedded instructions and rules.

- How to Implement (Recap): Define the full changelog process (entry format, SemVer, release steps) inside `Changelog.md`. Use `.cursor/rules/changelog.mdc` to trigger the AI to update the `[Unreleased]` section after every code change and to follow the embedded release process when asked. (See detailed steps in Doc 5).
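To make the target behavior concrete, here is a minimal sketch of the edit the rule asks the AI to perform: adding one entry under the `[Unreleased]` heading. It assumes a Keep a Changelog style layout with a `## [Unreleased]` heading, which the article does not specify, and `add_unreleased_entry` is a hypothetical helper name.

```python
def add_unreleased_entry(changelog: str, entry: str) -> str:
    """Insert `- entry` directly below the '## [Unreleased]' heading."""
    lines = changelog.splitlines()
    for i, line in enumerate(lines):
        if line.strip().lower() == "## [unreleased]":
            lines.insert(i + 1, f"- {entry}")
            return "\n".join(lines) + "\n"
    raise ValueError("No '## [Unreleased]' section found")


# Hypothetical changelog content used only for illustration.
sample = (
    "# Changelog\n\n"
    "## [Unreleased]\n- Fixed login redirect\n\n"
    "## [1.2.0]\n- Added export\n"
)
updated = add_unreleased_entry(sample, "Added rate limiting to the API client")
print(updated)
```

Having a deterministic version of the step also gives you something to compare the AI's changelog edits against during review.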

Trick #16: Know When to Nuke the Chat Context

- The Pain Point: Long conversations confuse the AI: its performance degrades, it repeats errors, and it ignores recent corrections.

- The Game-Changer: Recognize context decay and start fresh.

- How to Implement: If the AI gets weird/stuck/repetitive: STOP. Open a new chat. Concisely state the current goal/problem. Provide minimal, focused context (`@mentions`, specific errors). Briefly mention failed prior attempts. A clean slate often works wonders.

Part 4: Advanced Techniques & Environment Tuning

These tricks delve into more specific or technical aspects of optimizing the Cursor environment.

Trick #17: Re-Index Codebase Regularly (Keep the AI's Map Fresh)

- The Pain Point: In large, active projects, Cursor's internal symbol index gets stale, causing inaccurate navigation, context issues, and hallucinations about code structure.

- The Game-Changer: Manually refresh the index.

- How to Implement: Routinely go to `Cursor Settings -> Features -> Reindex codebase`. Do this daily or after significant changes (merges, refactors, file additions/deletions).

Trick #18: The MCP Server Concept (Gold Standard for DB Context)

- The Pain Point: Databases + AI = Frequent Pain (stale schemas, misunderstood relations, ignored RLS).

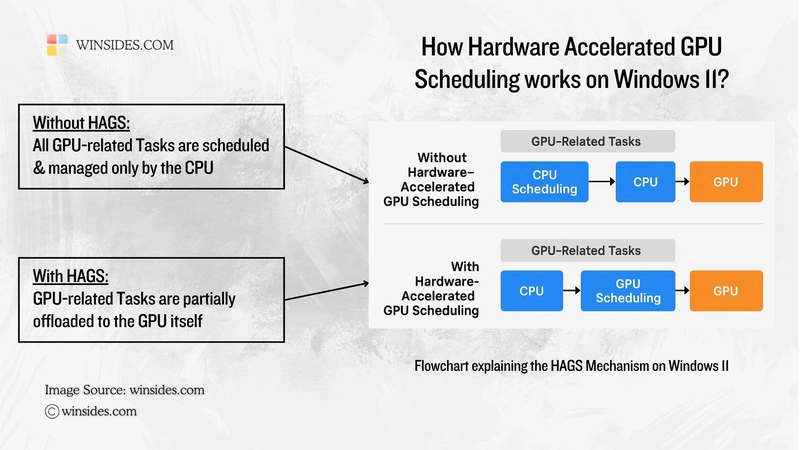

- The Game-Changer (Advanced): Implement a Model Context Protocol (MCP) server. This external service connects to your live database and provides Cursor with real-time, accurate schema, types, relationships, and RLS policies.

- How it Works: Cursor queries the MCP server instead of relying on static docs. The MCP server provides the current truth from the DB. Requires setup (build/deploy the server). (See Doc 17 for details).
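The core idea, reading the schema from the live database rather than from a static document, can be sketched without any MCP tooling. The example below is not an MCP server and uses no MCP SDK; it only illustrates the kind of introspection such a server would perform, using Python's built-in `sqlite3` with an in-memory database. The table names and the `describe_schema` helper are hypothetical.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> dict:
    """Return {table: [(column, declared_type), ...]} read from the live database."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    schema = {}
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        schema[table] = [(col[1], col[2]) for col in columns]
    return schema


# Hypothetical tables standing in for a real application database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER REFERENCES users(id))"
)

print(describe_schema(conn))
```

Because the description is generated on demand, it cannot drift from the database the way a hand-maintained schema document can. A production setup would additionally need to expose relationships and, where the database supports them, RLS policies.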

Trick #19: Focused Work Sessions (Disciplined Timeboxing)

- The Pain Point: AI interactions can easily sprawl, mixing planning, coding, and debugging without clear focus.

- The Game-Changer: Structure work into defined sessions, each tied to a specific task from your checklist.

- How to Implement: Start a session linked to a task (`"Start session for @TASK_feature.md item #3"`). Use rules/prompts to enforce focus within that session (e.g., log unrelated ideas as TODOs, don't switch tasks). Formally end the session to log progress. (See Doc 6).

Trick #20: Enforcing TDD with a Rules-Based Workflow

- The Pain Point: AI makes it tempting to skip writing tests first.

- The Game-Changer: Codify the Red-Green-Refactor cycle in a rule.

- How to Implement: Create a multi-phase rule (`.cursor/rules/tdd-workflow.mdc`) that forces the sequence: 1) Understand Req, 2) Write Failing Test, 3) Confirm Fail, 4) Write Minimal Code, 5) Confirm Pass, 6) Refactor, 7) Commit. Invoke it explicitly: "Implement X using the `@.cursor/rules/tdd-workflow.mdc` process."
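For readers newer to TDD, one pass through steps 2 to 5 might look like the sketch below. The `slugify` function and its tests are hypothetical examples, not part of any rule file. In practice the test class is written first and fails; the function body is the minimal code added afterwards to make it pass.

```python
import re
import unittest


# Step 4: minimal implementation, written only after the tests below had failed.
def slugify(title: str) -> str:
    """Lowercase the title and join alphanumeric runs with single hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)


# Step 2: the tests that were written first (they fail while slugify is missing).
class SlugifyTest(unittest.TestCase):
    def test_spaces_and_punctuation_become_hyphens(self):
        self.assertEqual(slugify("Hello, World!"), "hello-world")

    def test_empty_title_gives_empty_slug(self):
        self.assertEqual(slugify(""), "")


# Steps 3 and 5: run the suite and read the result before moving on.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Asking the AI to show the failing run before it writes any implementation is what keeps the sequence honest; without that checkpoint, tests tend to get written to fit the code.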

Trick #21: Provide Verified Working Examples for Tricky APIs/Libs

- The Pain Point: AI often messes up subtle details of third-party library usage or complex API calls, even with docs.

- The Game-Changer: Give it a proven, working example.

- How to Implement: Before asking the AI to implement a feature using library X, manually write a minimal working script that correctly performs the core interaction (e.g., auth + one API call). Verify it runs. Then, include this script in your prompt context (`@working_api_example.js`) when asking for the full feature: "Implement the data fetching logic using library X, following the pattern shown in `@working_api_example.js`." (Doc 9).
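As a rough idea of scale, a reference script of this kind can be very small. The sketch below uses only Python's standard library; the endpoint URL, the environment variable name, and the bearer-token scheme are all assumptions for illustration, and a real example should mirror whatever the target service actually requires.

```python
import json
import os
import urllib.request

# Hypothetical endpoint and environment variable; substitute the real service's values.
API_URL = "https://api.example.com/v1/items"
TOKEN_ENV_VAR = "EXAMPLE_API_TOKEN"


def build_request(token: str) -> urllib.request.Request:
    """Build an authenticated GET request (no network access happens here)."""
    return urllib.request.Request(
        API_URL,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


def fetch_items(token: str) -> object:
    """Perform the single API call and decode the JSON response body."""
    with urllib.request.urlopen(build_request(token), timeout=10) as response:
        return json.load(response)


# To verify against the real service, run:
#   print(fetch_items(os.environ[TOKEN_ENV_VAR]))
```

Keeping auth and the single call in separate, named functions gives the AI an unambiguous pattern to extend, and it lets you confirm the request shape before any network traffic is involved.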

Trick #22: Use "Psychological" Prompts (Only When Desperate!)

- The Pain Point: The AI is completely stuck in a repetitive loop, ignoring all logical corrections.

- The Game-Changer (Experimental/Last Resort): Try unconventional prompts involving fictional rewards or high-stakes scenarios.

- How to Implement: "If you solve this impossible bug, I'll give you [fictional reward]." or "You are Coder X, your reputation depends on fixing this critical issue!" Sometimes, this bizarre input can jolt the AI into a different approach. Use sparingly and with skepticism. (See Doc 8).

Part 5: The Human Element - Your Role in the AI Partnership

Finally, remember that you are the most critical component.

Trick #23: Be the Architect, the Lead, the QA - Not Just the Prompter

- The Mindset: AI is your incredibly fast, knowledgeable, but sometimes naive and error-prone junior/pair programmer. You are the senior guiding hand.

- Your Responsibilities:

- Own the Architecture: You define the high-level structure, patterns, and tech choices.

- Verify Relentlessly: Review every single line of AI code with extreme scrutiny. Check for logic errors, security holes, performance issues, adherence to standards. Be more critical than with human code.

- Understand Deeply: Don't just accept code that "works." Understand why it works, its trade-offs, and how to maintain it. Ask the AI to explain.

- Master Debugging: Use AI suggestions as hints, but rely on your own skills (logs, debuggers, reasoning) to find the root cause of bugs.

- Prioritize Fundamentals: Continuously strengthen your core coding knowledge. You can't guide the AI effectively if you don't understand the domain yourself. Use AI to learn, not replace learning.

That is all! Hope you like it.
