What Is MCP?

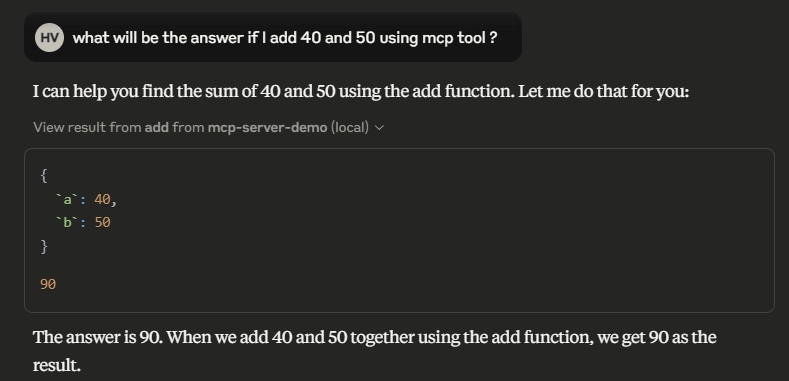

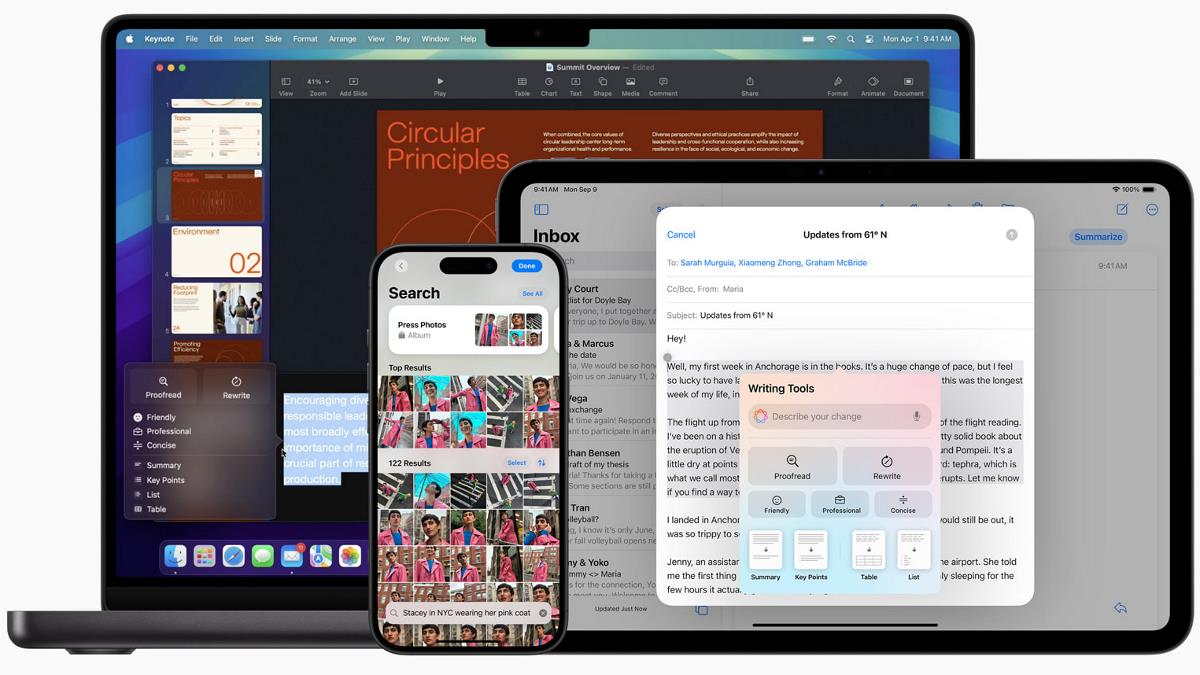

Have you ever wondered what MCP is and why it’s gaining so much attention in the AI community? You might have seen posts about different MCP servers, and the term is slowly but surely becoming more widely discussed. But why is this happening now?

Let’s break it down.

Before we dive into the details of what MCP is, it’s important to understand why it’s suddenly becoming a key focus in the AI community.

Why Do We Need to Understand MCP?

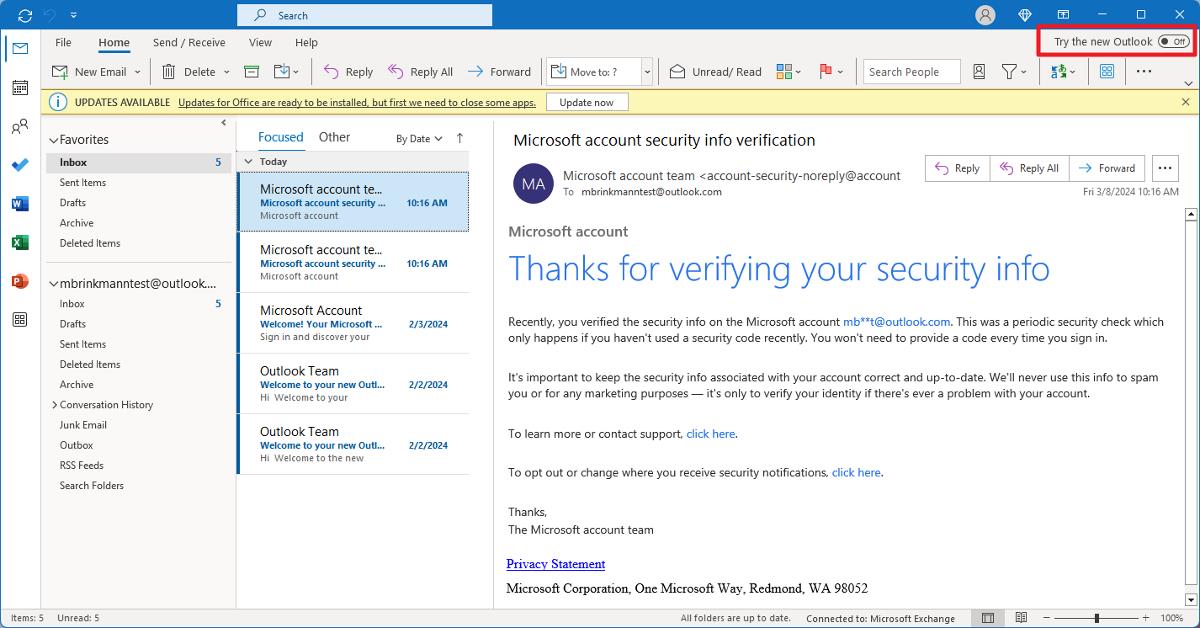

Imagine this scenario: A client of yours sends you some changes to their requirements, but they do so informally. You need to send these changes to your team in a more formal manner. To help you with this, you use a large language model (LLM) to draft a formal email.

Now, here’s the catch: while the LLM can draft the email for you, it can't actually send it. You still need to copy, paste, format the email, and manually select each team member to send the message.

But what if there was a way to automate this? What if an MCP server could securely access your Gmail or Outlook account, so after the LLM drafts the email, it could automatically send it to your team? This would save you time and effort!

There are a couple of MCP servers available which do make it possible, one of which is Gmail MCP Server.

This is just one small example, but the possibilities expand far beyond that. Consider other applications such as:

- Managing your Discord or Slack community and extracting insights from past conversations

- Automating browser tasks via MCP

- Managing your GitHub repository and other tasks

With this, you can see how MCP has the potential to enhance productivity by automating a variety of tasks.

How MCP Came into the Picture

On November 25, 2024, Anthropic, the developers behind Claude, introduced a new protocol called Model Context Protocol (MCP). They released comprehensive documentation and examples to encourage the community to engage with this innovative concept.

This announcement marked the beginning of MCP's journey. You can trace it back to this blog post by Anthropic. Think of this moment as something similar to the release of the paper "Attention Is All You Need", which revolutionized the world of AI.

You might wonder, why link MCP with Transformers? The reason is simple: MCP could be the next big thing after the transformation that LLMs brought to the AI world.

The Potential of MCP

Imagine this: you give a prompt to any AI host (like Claude, Cursor, etc.) saying, "Design a prototype for my portfolio website. Go through my GitHub, LinkedIn, Twitter, and Dev.to profiles to gather more information about me and create the design on Figma. Make it aesthetic and reflect a modern coder vibe."

Note: "This might become a reality" may sound like a hedge, but MCP holds the potential to make it happen.

Sounds crazy, right? That’s because it is, but that’s the exciting part!

Why Can’t We Do This Right Now?

The main reason we can’t do this yet is that current AI hosts lack the ability to interact directly with services like GitHub, LinkedIn, Twitter, Figma, and others.

MCP addresses this gap.

What Exactly is MCP?

- In simple terms, you can think of MCP as a bridge between AI hosts (like Claude or Cursor) and external services (such as GitHub, LinkedIn, Twitter, Figma, and Dev.to). It allows AI hosts to securely access these services and expand the functionality of existing LLMs.

MCP lets us turn instructions generated by LLMs into actions that automate the services we already use.

Technical Definition of MCP

- In technical terms, Model Context Protocol (MCP) is a set of rules that defines how communication between an MCP client and an MCP server should be managed to utilize the services.
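To make "a set of rules" a little more concrete: MCP messages are JSON-RPC 2.0 objects, and a client asks a server to run a tool with a tools/call request. The sketch below builds such a request and a matching reply in plain Python. The helper names (make_tool_call, make_text_result), the tool name add, and its arguments are illustrative assumptions, and the reply is a simplified shape rather than output captured from a real server.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def make_text_result(request_id: int, text: str) -> dict:
    """Build a simplified successful reply carrying a single text item."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,  # a reply echoes the id of the request it answers
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


# Illustrative exchange: ask a hypothetical "add" tool to add 2 and 3.
request = make_tool_call(1, "add", {"a": 2, "b": 3})
response = make_text_result(request["id"], "5")

print(json.dumps(request, indent=2))
print(json.dumps(response, indent=2))
```

The point is only that both sides agree on message shapes ahead of time, which is what allows any MCP client to talk to any MCP server.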

Now, you may be wondering: What are MCP clients and servers?

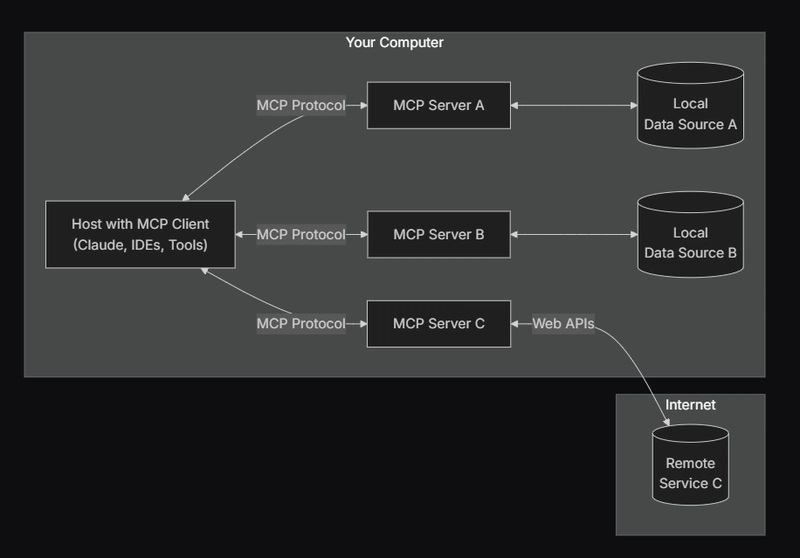

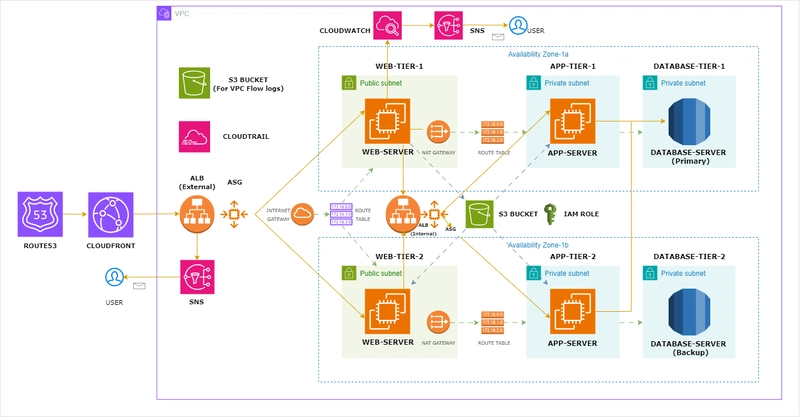

Breaking Down MCP Architecture

Let’s take a look at the key components of MCP:

- MCP Host: This is the interface that the end user directly interacts with. Examples include AI hosts like Claude, Cursor, IDE tools, and any other platforms that support MCP.

- MCP Client: The client manages the 1:1 communication between the host and the server.

- MCP Server: This is the lightweight code that you (or someone else) write. It enables the host to securely access various local and internet-based services such as GitHub, Twitter, Slack, email, databases, and more.
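The relationship between the three components can be sketched with a toy, in-process model. Everything here (ToyHost, ToyClient, ToyServer and their methods) is hypothetical and not part of any MCP SDK; a real client would serialize requests and send them over a transport instead of calling the server directly. The sketch only shows that a host keeps one client per connected server.

```python
from typing import Callable, Dict


class ToyServer:
    """Stands in for an MCP server: a named collection of callable tools."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., object]]):
        self.name = name
        self.tools = tools

    def call_tool(self, tool_name: str, arguments: dict) -> object:
        return self.tools[tool_name](**arguments)


class ToyClient:
    """Stands in for an MCP client: bound to exactly one server (1:1)."""

    def __init__(self, server: ToyServer):
        self.server = server

    def call_tool(self, tool_name: str, arguments: dict) -> object:
        # A real client would send this request over a transport.
        return self.server.call_tool(tool_name, arguments)


class ToyHost:
    """Stands in for the host application: one client per connected server."""

    def __init__(self) -> None:
        self.clients: Dict[str, ToyClient] = {}

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def use_tool(self, server_name: str, tool_name: str, arguments: dict) -> object:
        return self.clients[server_name].call_tool(tool_name, arguments)


host = ToyHost()
host.connect(ToyServer("math", {"add": lambda a, b: a + b}))
host.connect(ToyServer("text", {"shout": lambda s: s.upper()}))

print(host.use_tool("math", "add", {"a": 2, "b": 3}))
print(host.use_tool("text", "shout", {"s": "hello"}))
```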

An MCP server contains a number of MCP tools. An MCP tool is nothing more than a simple function written in a programming language of your choice.

    # An addition MCP tool (mcp is a FastMCP server instance, created in the demo below)
    @mcp.tool()
    def add(a: int, b: int) -> int:
        """Add two numbers"""
        return a + b
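Why bother with the type hints and the docstring? A decorator can read them and describe the function to the outside world. The toy decorator below illustrates that general idea using only the standard library. TOOLS and tool are hypothetical names invented for this sketch; the real @mcp.tool() decorator is more involved and is not reproduced here.

```python
import inspect
from typing import Callable, Dict, get_type_hints

TOOLS: Dict[str, dict] = {}  # hypothetical registry, not part of the MCP SDK


def tool(func: Callable) -> Callable:
    """Record a function's name, docstring, and parameter types."""
    hints = get_type_hints(func)
    parameters = {}
    for name in inspect.signature(func).parameters:
        hint = hints.get(name, object)
        # Fall back to str() for hints that have no __name__ attribute.
        parameters[name] = getattr(hint, "__name__", str(hint))
    TOOLS[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "parameters": parameters,
        "function": func,
    }
    return func  # the function itself stays callable as usual


@tool
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


entry = TOOLS["add"]
print(entry["description"], entry["parameters"])
print(entry["function"](2, 3))
```

With a description and parameter types on record, a host has enough information to decide when a tool is relevant and which arguments to pass.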

Local and Internet-based Functionalities

- The local services may include tasks like allowing the host to run commands in the command prompt or accessing local files. On the other hand, internet-based services could involve allowing the host to securely make changes to a GitHub repository, send messages over Slack, or even draft and send emails on its own.

One example of a local-service MCP server is Desktop Commander MCP, which lets the host control the terminal.

The possibilities are endless. MCP could enable a range of functionalities as long as the service has an API that allows for interaction.

An Example to Build a Demo

Let’s now think about how we can build a simple demo using MCP.

Prerequisite:

- Install uv (see its official installation instructions). It is an extremely fast Python package and project manager, written in Rust, and it is essential for managing the server and dependencies in this project.

Follow along:

1) Initialise the project by running:

    uv init mcp-server-demo
    cd mcp-server-demo

2) Add MCP to your project dependencies:

    uv add "mcp[cli]"

3) Create a server.py file and add the following content.

    from mcp.server.fastmcp import FastMCP

    # Create an MCP server
    mcp = FastMCP("Demo")


    # Add an addition tool
    @mcp.tool()
    def add(a: int, b: int) -> int:
        """Add two numbers"""
        return a + b


    if __name__ == "__main__":
        # Serve over standard input/output (stdio)
        mcp.run(transport="stdio")

4) Configure Claude Desktop:

To integrate the server with Claude Desktop, you will need to modify the Claude configuration file. The commands below open it in VS Code, but any text editor works. Follow the instructions for your operating system:

- For macOS (the ~/Library path below is macOS-specific):

      code ~/Library/Application\ Support/Claude/claude_desktop_config.json

- For Windows (PowerShell):

      code $env:AppData\Claude\claude_desktop_config.json

In the configuration file, locate the mcpServers section, and replace the placeholder paths with the absolute paths to your uv installation and the mcp-server-demo project directory. It should look like this:

    {
      "mcpServers": {
        "mcp-server-demo": {
          "command": "ABSOLUTE/PATH/TO/.local/bin/uv",
          "args": [
            "--directory",
            "ABSOLUTE/PATH/TO/YOUR-MCP-SERVER-DEMO-REPO",
            "run",
            "server.py"
          ]
        }
      }
    }
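If you would rather not type the absolute paths by hand, a small script can work them out for you. The sketch below is one way to do it under a few assumptions: it is run from inside the mcp-server-demo directory, uv is on your PATH (otherwise the placeholder from above is kept), and the helper names build_server_entry and merge_into_config are made up for this example. It only prints the JSON, so you can review it before pasting it into the configuration file.

```python
import json
import shutil
from pathlib import Path


def build_server_entry(project_dir: Path, uv_path: str) -> dict:
    """Return the mcpServers entry for the demo server."""
    return {
        "command": uv_path,
        "args": ["--directory", str(project_dir.resolve()), "run", "server.py"],
    }


def merge_into_config(config: dict, name: str, entry: dict) -> dict:
    """Add or replace one server entry without touching other servers."""
    merged = dict(config)
    servers = dict(merged.get("mcpServers", {}))
    servers[name] = entry
    merged["mcpServers"] = servers
    return merged


# shutil.which returns None when uv is not on PATH; keep the placeholder then.
uv_path = shutil.which("uv") or "ABSOLUTE/PATH/TO/.local/bin/uv"
entry = build_server_entry(Path("."), uv_path)
config = merge_into_config({}, "mcp-server-demo", entry)

print(json.dumps(config, indent=2))
```

Merging into the existing mcpServers mapping, rather than overwriting the file, keeps any other servers you have already configured.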

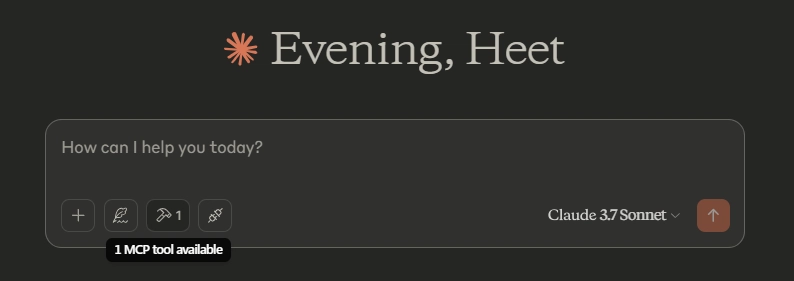

- Once the file is saved, restart Claude Desktop so it connects to the MCP server. You should then see one MCP tool listed.

- Ask Claude to add two values and you will see it call the MCP tool and use its output.

Congratulations, you’ve created your first MCP server! Stay tuned for more exciting ideas and tutorials on MCP!

For Reference

- GitHub repo of MCP: modelcontextprotocol/modelcontextprotocol

- Documentation: Model Context Protocol Documentation

- Train ML model using Claude: Linear Regression MCP Tutorial

Conclusion

MCP is an exciting new concept that could revolutionize how we interact with AI models. It serves as a bridge between AI hosts and the services we already use daily, expanding the capabilities of language models in ways we’ve only dreamed of. As the technology continues to evolve, we might soon see AI taking over more routine tasks, giving us more time to focus on creative and high-level work.
