Why I Chose Service Discovery Over Service Connect for ECS Inter-Service Communication

If you've ever looked at an infrastructure setup and thought, "Why on earth is it built this way?" — you're not alone.

In my case, I discovered that two ECS applications running in separate VPCs were communicating via the public internet. Since they were in different VPCs, traffic between ECS tasks flowed through NAT Gateways and an ALB — a setup that was suboptimal in terms of performance, security, and cost.

TL;DR: I Dropped Service Connect and Went with Service Discovery

Despite AWS documentation and the ECS console strongly recommending Service Connect, I ended up going with Cloud Map-based Service Discovery — which initially felt like the "old way" of doing things.

Why Service Connect Wasn't an Option: Incompatible with Blue/Green Deployments

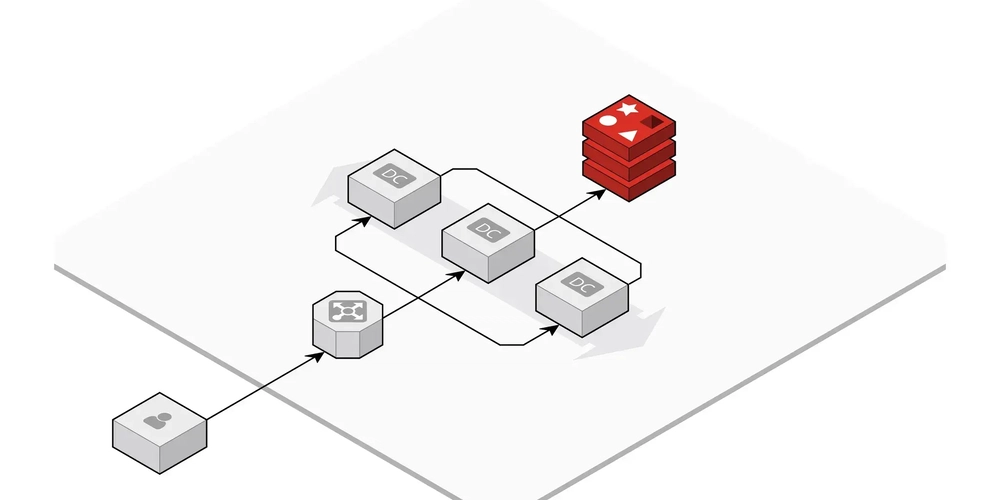

In this architecture, ECS Service A communicates with ECS Service B — a supporting microservice. Service A is exposed externally via an ALB and uses Blue/Green deployments with CodeDeploy for safe rollbacks and minimal downtime.

When I tried to enable Service Connect in the ECS service definition, I got the following error:

InvalidParameterException: DeploymentController#type CODE_DEPLOY is not supported by ECS Service Connect.

Turns out, Service Connect doesn't support Blue/Green deployments via CodeDeploy.

So, that was the deal-breaker for me. I had to abandon Service Connect.
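For readers who manage ECS with Terraform, the rejected combination looks roughly like the sketch below. It is illustrative only and is not the configuration used in this setup: the cluster and task definition references are hypothetical, and the load balancer and network settings are omitted for brevity.

```hcl
# Hypothetical ECS Service A; resource names and references are illustrative.
resource "aws_ecs_service" "service_a" {
  name            = "service-a"
  cluster         = aws_ecs_cluster.main.id               # assumed to exist
  task_definition = aws_ecs_task_definition.service_a.arn # assumed to exist
  desired_count   = 2

  # Blue/Green deployments are driven by CodeDeploy ...
  deployment_controller {
    type = "CODE_DEPLOY"
  }

  # ... and enabling Service Connect on the same service is what ECS
  # rejects with the InvalidParameterException shown above.
  service_connect_configuration {
    enabled   = true
    namespace = "service.local"
  }
}
```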

The Appeal of Service Connect

Service Connect automatically injects an Envoy sidecar proxy into each ECS task and provides modern service mesh features like:

- Simplified DNS with clientAliases
- Service graph visualization
- Enhanced security with mTLS and policy control

However, Envoy consumes additional CPU and memory, which can increase costs — especially on Fargate, where resources directly affect pricing.

In my case, since Service B was a minor supporting service and I didn't need traffic insights, I opted for a simpler solution: Service Discovery.

Quick Comparison: Service Connect vs. Service Discovery

| Feature | Service Connect | Service Discovery |
|---|---|---|
| Sidecar | Required (Envoy) | Not needed |
| Communication Path | ECS A → Envoy A → Envoy B → ECS B | ECS A → ECS B |
| Resource Usage | Higher (sidecar overhead) | Lower (direct connection) |
| Blue/Green Deployment | Not supported | Supported |
| DNS Simplicity | clientAliases (short) | service.service.local (long) |

For a deeper dive, I recommend this article:

Comparing 5 Methods of ECS Interservice Communication Including VPC Lattice

How to Set Up Service Discovery (Cloud Map)

Here’s how I configured the Service Discovery architecture using Cloud Map to enable ECS Service A to resolve and communicate with ECS Service B.

1. Create a Cloud Map Namespace

The first step is to create a namespace in Cloud Map. Think of a namespace as a private DNS domain — for example, service.local.

If you're using Terraform:

    resource "aws_service_discovery_private_dns_namespace" "service_local" {
      name        = "service.local"
      description = "Private DNS namespace"
      vpc         = <vpc_id>
    }

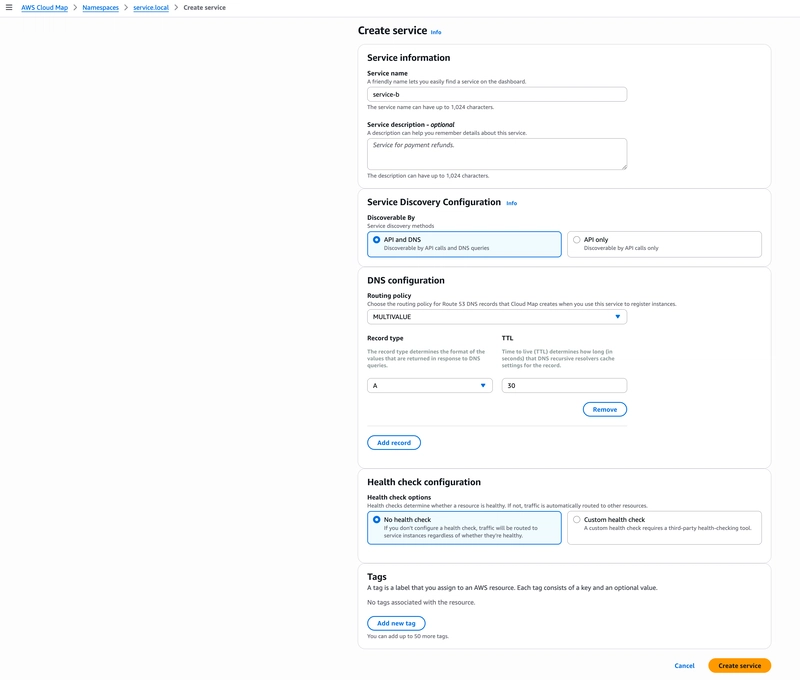

2. Create a Service within the Namespace

Next, register a service for ECS B within the newly created namespace.

    resource "aws_service_discovery_service" "service_b" {
      name         = "service-b"
      namespace_id = aws_service_discovery_private_dns_namespace.service_local.id

      dns_config {
        namespace_id = aws_service_discovery_private_dns_namespace.service_local.id

        dns_records {
          type = "A"
          ttl  = 30
        }

        routing_policy = "MULTIVALUE"
      }

      health_check_custom_config {
        failure_threshold = 3
      }
    }

3. Create Security Groups

For ECS A (Caller)

    resource "aws_security_group" "service_a_ecs_sg" {
      name        = "service-a-ecs-sg"
      description = "Security group for ECS A (caller)"
      vpc_id      = <vpc-id>
    }

    resource "aws_security_group_rule" "service_a_to_b_egress" {
      type                     = "egress"
      from_port                = 8080
      to_port                  = 8080
      protocol                 = "tcp"
      security_group_id        = aws_security_group.service_a_ecs_sg.id
      source_security_group_id = aws_security_group.service_b_ecs_sg.id
    }

For ECS B (Callee)

    resource "aws_security_group" "service_b_ecs_sg" {
      name        = "service-b-ecs-sg"
      description = "Security group for ECS B (callee)"
      vpc_id      = <vpc-id>
    }

    resource "aws_security_group_rule" "service_b_from_a_ingress" {
      type                     = "ingress"
      from_port                = 8080
      to_port                  = 8080
      protocol                 = "tcp"
      security_group_id        = aws_security_group.service_b_ecs_sg.id
      source_security_group_id = aws_security_group.service_a_ecs_sg.id
    }

    resource "aws_security_group_rule" "service_b_egress_all" {
      type              = "egress"
      from_port         = 0
      to_port           = 0
      protocol          = "-1"
      security_group_id = aws_security_group.service_b_ecs_sg.id
      cidr_blocks       = ["0.0.0.0/0"]
    }
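The A-to-B pair of rules can also be written with the per-rule resources available in recent versions of the AWS provider. This is only a sketch of an alternative, assuming the two security groups defined above. The resource labels are made up for illustration.

```hcl
# Sketch: allow ECS A to call ECS B on port 8080 using per-rule resources.
resource "aws_vpc_security_group_egress_rule" "service_a_to_b" {
  security_group_id            = aws_security_group.service_a_ecs_sg.id
  referenced_security_group_id = aws_security_group.service_b_ecs_sg.id
  ip_protocol                  = "tcp"
  from_port                    = 8080
  to_port                      = 8080
}

resource "aws_vpc_security_group_ingress_rule" "service_b_from_a" {
  security_group_id            = aws_security_group.service_b_ecs_sg.id
  referenced_security_group_id = aws_security_group.service_a_ecs_sg.id
  ip_protocol                  = "tcp"
  from_port                    = 8080
  to_port                      = 8080
}
```

Pick one style per rule. Declaring the same rule through both resource types would produce a duplicate.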

4. Update the Application Endpoint

From ECS A, you can now access ECS B using:

http://service-b.service.local:8080

Note: The port number must be specified explicitly, because the A record registered in Cloud Map resolves only to the task's IP address.
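One way to hand this endpoint to the application is an environment variable on ECS A's task definition. The sketch below assumes Fargate and the Cloud Map resources defined earlier. The variable name SERVICE_B_URL, the container name, the image placeholder, and the CPU and memory sizes are all hypothetical.

```hcl
# Sketch: pass the Cloud Map DNS name of ECS B to ECS A.
resource "aws_ecs_task_definition" "service_a" {
  family                   = "service-a"
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc"
  cpu                      = 256
  memory                   = 512

  container_definitions = jsonencode([
    {
      name      = "app"
      image     = "<service-a-image>" # placeholder
      essential = true
      environment = [
        {
          # Resolves to http://service-b.service.local:8080
          name  = "SERVICE_B_URL"
          value = "http://${aws_service_discovery_service.service_b.name}.${aws_service_discovery_private_dns_namespace.service_local.name}:8080"
        }
      ]
    }
  ])
}
```

Building the URL from the Terraform resources keeps the endpoint in sync if the service or namespace is ever renamed.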

5. Update ECS B’s Service and Task Definitions

Service Definition

In ECS B’s service definition, associate the service with the Cloud Map service you created. If you're using ecspresso:

    "serviceRegistries": [
      {
        "registryArn": "arn:aws:servicediscovery:ap-northeast-1:*********:service/srv-*********"
      }
    ]
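If the ECS service itself is managed in Terraform rather than ecspresso, the same association might look like the sketch below. The cluster, task definition, and subnet variable are hypothetical, and the Fargate launch type is an assumption.

```hcl
# Sketch: ECS Service B registering its tasks in Cloud Map.
resource "aws_ecs_service" "service_b" {
  name            = "service-b"
  cluster         = aws_ecs_cluster.main.id               # assumed to exist
  task_definition = aws_ecs_task_definition.service_b.arn # assumed to exist
  desired_count   = 1
  launch_type     = "FARGATE"

  network_configuration {
    subnets         = var.private_subnet_ids # hypothetical variable
    security_groups = [aws_security_group.service_b_ecs_sg.id]
  }

  # Equivalent of the serviceRegistries entry above.
  service_registries {
    registry_arn = aws_service_discovery_service.service_b.arn
  }
}
```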

Task Definition

Since the ALB is no longer in front of ECS B, the task must run its own container health check:

    "containerDefinitions": [
      {
        // ...
        "portMappings": [
          {
            "containerPort": 8080,
            "protocol": "tcp"
          }
        ],
        "healthCheck": {
          "command": [
            "CMD-SHELL",
            "curl -f http://localhost:8080/health || exit 1"
          ],
          "interval": 30,
          "timeout": 5,
          "retries": 3,
          "startPeriod": 10
        }
      }
    ]

If your container image doesn’t include curl, make sure to install it in your Dockerfile:

RUN apk add --no-cache curl
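If you would rather not add curl to the image, a BusyBox-based image such as Alpine already ships a wget applet that can do the same job. The sketch below shows that variant as a Terraform task definition. It assumes Fargate and a /health endpoint on port 8080 as above. The container name, image placeholder, and sizing are hypothetical.

```hcl
# Sketch: ECS B task definition with a wget-based container health check.
resource "aws_ecs_task_definition" "service_b" {
  family                   = "service-b"
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc"
  cpu                      = 256
  memory                   = 512

  container_definitions = jsonencode([
    {
      name      = "app"
      image     = "<service-b-image>" # placeholder
      essential = true
      portMappings = [
        { containerPort = 8080, protocol = "tcp" }
      ]
      healthCheck = {
        # wget exits non-zero on connection failures and HTTP error responses.
        command     = ["CMD-SHELL", "wget -q -O /dev/null http://localhost:8080/health || exit 1"]
        interval    = 30
        timeout     = 5
        retries     = 3
        startPeriod = 10
      }
    }
  ])
}
```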

How to Set Up Service Connect (for Reference)

Although I didn’t end up using Service Connect due to Blue/Green incompatibility, here’s how you would set it up.

1. Create a Cloud Map Namespace

Same as with Service Discovery — create a private DNS namespace like service.local.

Note: With Service Connect, you don't need to register services manually — ECS does that for you during deployment.

2. Configure Security Groups

Service Connect routes traffic through Envoy (TCP), so you still need appropriate security group rules.

For ECS A (Caller)

    resource "aws_security_group_rule" "service_a_to_b_egress" {
      type                     = "egress"
      from_port                = 8080
      to_port                  = 8080
      protocol                 = "tcp"
      security_group_id        = aws_security_group.service_a_ecs_sg.id
      source_security_group_id = aws_security_group.service_b_ecs_sg.id
    }

For ECS B (Callee)

    resource "aws_security_group_rule" "service_b_from_a_ingress" {
      type                     = "ingress"
      from_port                = 8080
      to_port                  = 8080
      protocol                 = "tcp"
      security_group_id        = aws_security_group.service_b_ecs_sg.id
      source_security_group_id = aws_security_group.service_a_ecs_sg.id
    }

    resource "aws_security_group_rule" "service_b_egress_all" {
      type              = "egress"
      from_port         = 0
      to_port           = 0
      protocol          = "-1"
      security_group_id = aws_security_group.service_b_ecs_sg.id
      cidr_blocks       = ["0.0.0.0/0"]
    }

3. Update ECS A’s Service Definition

Enable Service Connect for the service in the ECS console. In ecspresso, add the following:

    "serviceConnectConfiguration": {
      "enabled": true,
      "namespace": "service.local",
      "services": [
        {
          "portName": "http",
          "discoveryName": "service",
          "clientAliases": [
            {
              "port": 80,
              "dnsName": "service"
            }
          ]
        }
      ]
    }

Warning: Service Connect cannot be used with Blue/Green deployments via CodeDeploy. You’ll get:

DeploymentController#type CODE_DEPLOY is not supported

Update the Application

Just use the alias as your target:

http://service

No need to specify a port number.

4. Update ECS B’s Service Definition

Enable Service Connect for ECS B in the same way. Using ecspresso:

    "serviceConnectConfiguration": {
      "enabled": true,
      "namespace": "service.local",
      "services": [
        {
          "portName": "http",
          "discoveryName": "service",
          "clientAliases": [
            {
              "port": 80,
              "dnsName": "service"
            }
          ]
        }
      ]
    }
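For comparison, the same Service Connect settings expressed in Terraform might look like the sketch below. It was not deployed as part of this setup. The cluster, task definition, and subnet variable are hypothetical, the Fargate launch type is an assumption, and the resource label is chosen only to keep it apart from the Service Discovery variant.

```hcl
# Sketch: ECS Service B publishing itself through Service Connect.
resource "aws_ecs_service" "service_b_with_connect" {
  name            = "service-b"
  cluster         = aws_ecs_cluster.main.id               # assumed to exist
  task_definition = aws_ecs_task_definition.service_b.arn # assumed to exist
  desired_count   = 1
  launch_type     = "FARGATE"

  network_configuration {
    subnets         = var.private_subnet_ids # hypothetical variable
    security_groups = [aws_security_group.service_b_ecs_sg.id]
  }

  service_connect_configuration {
    enabled   = true
    namespace = "service.local"

    service {
      # Must match the name of a port mapping in the task definition.
      port_name      = "http"
      discovery_name = "service"

      client_alias {
        port     = 80
        dns_name = "service"
      }
    }
  }
}
```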

5. Update ECS B’s Task Definition

Just like with Service Discovery, ECS B must respond to internal health checks. The port mapping also needs a name that matches the portName used in the Service Connect configuration:

    "containerDefinitions": [
      {
        // ...
        "portMappings": [
          {
            "name": "http",
            "containerPort": 8080,
            "protocol": "tcp"
          }
        ],
        "healthCheck": {
          "command": [
            "CMD-SHELL",
            "curl -f http://localhost:8080/health || exit 1"
          ],
          "interval": 30,
          "timeout": 5,
          "retries": 3,
          "startPeriod": 10
        }
      }
    ]

Install curl in your Dockerfile if needed:

RUN apk add --no-cache curl

Conclusion

Service Connect comes with clear benefits like simplified DNS, traffic visibility, and future-ready service mesh capabilities. However, its current incompatibility with CodeDeploy-based Blue/Green deployments can be a blocker.

For smaller-scale setups — like mine — where Blue/Green is essential and sidecar overhead isn’t justifiable, Service Discovery is the better fit.

But if you're working in a mid- to large-scale microservices environment with strict security and observability needs, Service Connect is worth serious consideration.
