Darwin Gödel Machine: A Self-Improving AI Agent That Evolves Code Using Foundation Models and Real-World Benchmarks

This article first appeared on MarkTechPost.

Introduction: The Limits of Traditional AI Systems

Conventional artificial intelligence systems are limited by their static architectures. These models operate within fixed, human-engineered frameworks and cannot autonomously improve after deployment. In contrast, human scientific progress is iterative and cumulative—each advancement builds upon prior insights. Taking inspiration from this model of continuous refinement, AI researchers are now exploring evolutionary and self-reflective techniques that allow machines to improve through code modification and performance feedback.

Darwin Gödel Machine: A Practical Framework for Self-Improving AI

Researchers from Sakana AI, the University of British Columbia, and the Vector Institute have introduced the Darwin Gödel Machine (DGM), a self-modifying AI system designed to improve itself autonomously. The original Gödel Machine is a theoretical construct that accepts only self-modifications it can formally prove to be beneficial. DGM instead relies on empirical validation: it continuously edits its own code, guided by measured performance on real-world coding benchmarks such as SWE-bench and Polyglot.

Foundation Models and Evolutionary AI Design

To drive this self-improvement loop, DGM relies on frozen foundation models, meaning their weights are never updated; all improvement happens in the agent's own code. The process starts from a single base coding agent that can edit its own code. At each step, DGM takes agents from an archive and has them modify their own code, producing new variants. These variants are evaluated on the benchmarks and added to the archive if they compile successfully and retain the ability to edit code. This open-ended search is inspired by biological evolution: because the archive preserves a diverse set of variants, including ones that initially score poorly, previously suboptimal designs can later become the basis for breakthroughs.
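
The archive-based loop described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not DGM's implementation: an "agent" is a tuple of integers standing in for source code, and `benchmark_score`, `self_modify`, and `is_valid` are hypothetical stand-ins for benchmark evaluation, foundation-model-driven self-editing, and the "compiles and can still edit code" check. Parents are drawn uniformly from the archive purely for simplicity.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Hypothetical hidden "ideal" configuration that the toy benchmark rewards.
TARGET = (3, 1, 4, 1, 5)


@dataclass
class Agent:
    genome: tuple[int, ...]    # toy stand-in for the agent's source code
    score: float               # toy stand-in for a benchmark solve rate in [0, 1]
    parent: int | None = None  # archive index of the agent it was derived from


def benchmark_score(genome: tuple[int, ...]) -> float:
    """Hypothetical benchmark: fraction of positions that match TARGET."""
    hits = sum(1 for g, t in zip(genome, TARGET) if g == t)
    return hits / len(TARGET)


def self_modify(genome: tuple[int, ...], rng: random.Random) -> tuple[int, ...]:
    """Hypothetical stand-in for a frozen foundation model editing the agent's code.

    Rewrites one position at random; the value -1 models an edit that breaks the agent.
    """
    i = rng.randrange(len(genome))
    edited = list(genome)
    edited[i] = rng.randrange(-1, 6)
    return tuple(edited)


def is_valid(genome: tuple[int, ...]) -> bool:
    """Hypothetical stand-in for 'compiles and can still edit code'."""
    return all(g >= 0 for g in genome)


def run_archive_search(generations: int = 200, seed: int = 0) -> list[Agent]:
    rng = random.Random(seed)
    base = (0, 0, 0, 0, 0)
    archive = [Agent(base, benchmark_score(base))]
    for _ in range(generations):
        # Any archived agent can be a parent, not only the current best one.
        parent_idx = rng.randrange(len(archive))
        child = self_modify(archive[parent_idx].genome, rng)
        if not is_valid(child):
            continue  # broken variants are discarded and never archived
        # Valid children are kept even if they score worse than their parent,
        # so weaker variants remain available as stepping stones.
        archive.append(Agent(child, benchmark_score(child), parent_idx))
    return archive


archive = run_archive_search()
best = max(archive, key=lambda a: a.score)
print(f"{len(archive)} agents archived; best score {best.score:.1f}")
```

Because every valid child is archived, the search can branch from any earlier agent. Replacing the uniform draw with "always pick the highest-scoring agent" would turn this sketch into greedy hill climbing.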

Benchmark Results: Validating Progress on SWE-bench and Polyglot

DGM was tested on two well-known coding benchmarks:

- SWE-bench: Performance improved from 20.0% to 50.0%

- Polyglot: Accuracy increased from 14.2% to 30.7%
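
The reported figures are percentages of benchmark tasks solved, so the gains can be read both as absolute percentage points and as relative multipliers. A quick calculation using only the numbers listed above (the `gains` helper is illustrative):

```python
# Benchmark figures as reported above (percent of tasks solved: before, after).
results = {
    "SWE-bench": (20.0, 50.0),
    "Polyglot": (14.2, 30.7),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative multiplier)."""
    return after - before, after / before


for name, (before, after) in results.items():
    delta, ratio = gains(before, after)
    print(f"{name}: +{delta:.1f} points, {ratio:.2f}x")
```

This prints a 30.0-point (2.50x) gain for SWE-bench and a 16.5-point (2.16x) gain for Polyglot.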

These results highlight DGM’s ability to evolve its architecture and reasoning strategies without human intervention. The study also compared DGM with simplified variants that lacked self-modification or exploration capabilities, confirming that both elements are critical for sustained performance improvements. Notably, DGM even outperformed hand-tuned systems like Aider in multiple scenarios.
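
The exploration ablation comes down to how new work is distributed across the archive. Below is a minimal sketch of one plausible parent-selection rule, offered as an assumption for illustration; the exact rule DGM uses may differ. It favors high-scoring agents through a sigmoid of the score but down-weights agents that have already produced many children, so every archived agent keeps a nonzero chance of being extended. `selection_probabilities`, `greedy_parent`, `sharpness`, and `midpoint` are hypothetical names and values; `greedy_parent` roughly corresponds to a variant without open-ended exploration.

```python
import math


def selection_probabilities(scores, child_counts, sharpness=10.0, midpoint=0.5):
    """Hypothetical parent-selection rule for an archive of agents.

    scores: benchmark score of each archived agent, in [0, 1]
    child_counts: number of children each agent has already produced
    Returns one selection probability per agent; every probability is > 0.
    """
    weights = []
    for score, n_children in zip(scores, child_counts):
        # Sigmoid of the score: stronger agents are favored...
        performance = 1.0 / (1.0 + math.exp(-sharpness * (score - midpoint)))
        # ...but agents that have already been expanded often are down-weighted.
        novelty = 1.0 / (1.0 + n_children)
        weights.append(performance * novelty)
    total = sum(weights)
    return [w / total for w in weights]


def greedy_parent(scores):
    """Greedy alternative with no exploration: always extend the top-scoring agent."""
    return max(range(len(scores)), key=lambda i: scores[i])


scores = [0.2, 0.5, 0.5]
child_counts = [0, 0, 4]
probs = selection_probabilities(scores, child_counts)
print([round(p, 3) for p in probs])
print(greedy_parent(scores))
```

Under this rule, the two equally scored agents are no longer treated alike: the one that has already produced four children is sampled less often, which pushes the search toward less-explored branches. The greedy variant, by contrast, always returns the same agent.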

Technical Significance and Limitations

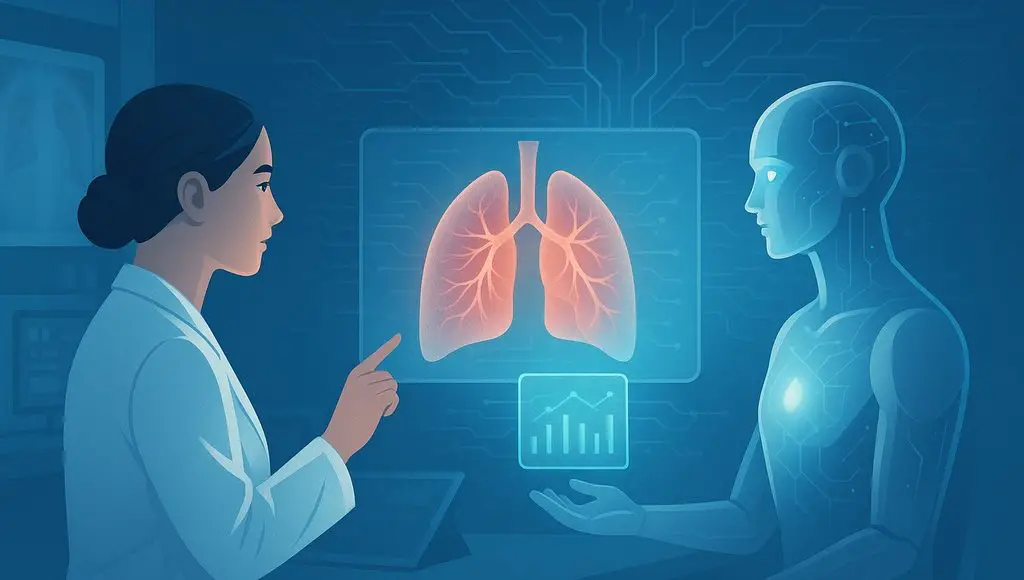

DGM represents a practical reinterpretation of the Gödel Machine by shifting from logical proof to evidence-driven iteration. It treats AI improvement as a search problem—exploring agent architectures through trial and error. While still computationally intensive and not yet on par with expert-tuned closed systems, the framework offers a scalable path toward open-ended AI evolution in software engineering and beyond.

Conclusion: Toward General, Self-Evolving AI Architectures

The Darwin Gödel Machine shows that AI systems can autonomously refine themselves through a cycle of code modification, evaluation, and selection. By integrating foundation models, real-world benchmarks, and evolutionary search principles, DGM demonstrates meaningful performance gains and lays the groundwork for more adaptable AI. While current applications are limited to code generation, future versions could expand to broader domains—moving closer to general-purpose, self-improving AI systems aligned with human goals.
