LLM Agent Internals as a Graph - Tutorial for Dummies


Ever wondered how AI agents actually work behind the scenes? This guide breaks down how agent systems are built as simple graphs - explained in the most beginner-friendly way possible!

Note: This is a super-friendly, step-by-step version of the official PocketFlow Agent documentation. We've expanded all the concepts with examples and simplified explanations to make them easier to understand.

Have you been hearing about "LLM agents" everywhere but feel lost in all the technical jargon? You're not alone! While companies are racing to build increasingly complex AI agents like GitHub Copilot, Perplexity AI, and AutoGPT, most explanations make them sound like rocket science.

Good news: they're not. In this beginner-friendly guide, you'll learn:

- The surprisingly simple concept behind all AI agents

- How agents actually make decisions (in plain English!)

- How to build your own simple agent with just a few lines of code

- Why most frameworks overcomplicate what's actually happening

In this tutorial, we'll use PocketFlow - a tiny 100-line framework that strips away all the complexity to show you how agents really work under the hood. Unlike other frameworks that hide the important details, PocketFlow lets you see the entire system at once.

Why Learn Agents with PocketFlow?

Most agent frameworks hide what's really happening behind complex abstractions that look impressive but confuse beginners. PocketFlow takes a different approach - it's just 100 lines of code that lets you see exactly how agents work!

Benefits for beginners:

- Crystal clear: No mysterious black boxes or complex abstractions

- See everything: The entire framework fits in one readable file

- Learn fundamentals: Perfect for understanding how agents really operate

- No baggage: No massive dependencies or vendor lock-in

Instead of trying to understand a gigantic framework with thousands of files, PocketFlow gives you the fundamentals so you can build your own understanding from the ground up.

The Simple Building Blocks

Imagine our agent system like a kitchen:

- Nodes are like different cooking stations (chopping station, cooking station, plating station)

- Flow is like the recipe that tells you which station to go to next

- Shared store is like the big countertop where everyone can see and use the ingredients

In our kitchen (agent system):

- Each station (Node) has three simple jobs:

  - Prep: Grab what you need from the countertop (like getting ingredients)

  - Exec: Do your special job (like cooking the ingredients)

  - Post: Put your results back on the countertop and tell everyone where to go next (like serving the dish and deciding what to make next)

- The recipe (Flow) just tells you which station to visit based on decisions:

  - "If the vegetables are chopped, go to the cooking station"

  - "If the meal is cooked, go to the plating station"

Let's see how this works with our research helper!
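Before we get to the research helper, here is the kitchen idea as a tiny Python sketch. It is a toy stand-in written for this explanation, not PocketFlow's actual source: the `Node` and `Flow` classes, the `connect` helper, and the `Chop`, `Cook`, and `Plate` stations are all made-up names. The point is only to show the three jobs (prep, exec, post) and how the value returned by post picks the next station.

```python
# A toy stand-in for the Node / Flow / shared-store idea.
# Illustrative only: these classes are NOT PocketFlow's real source.

class Node:
    def __init__(self):
        self.next_nodes = {}  # action name -> next station

    def connect(self, action, node):
        """Draw an arrow: when post() returns `action`, go to `node`."""
        self.next_nodes[action] = node
        return node

    def prep(self, shared):  # grab what you need from the countertop
        return None

    def exec(self, prep_result):  # do this station's special job
        return None

    def post(self, shared, prep_result, exec_result):  # save results, pick next
        return "default"

    def run(self, shared):
        prep_result = self.prep(shared)
        exec_result = self.exec(prep_result)
        return self.post(shared, prep_result, exec_result)


class Flow:
    """The recipe: keep moving to the station named by the last action."""

    def __init__(self, start):
        self.start = start

    def run(self, shared):
        node = self.start
        while node is not None:
            action = node.run(shared)
            node = node.next_nodes.get(action)  # None means no arrow, so stop


class Chop(Node):
    def prep(self, shared):
        return shared["vegetables"]

    def exec(self, vegetables):
        return [f"chopped {v}" for v in vegetables]

    def post(self, shared, prep_result, exec_result):
        shared["chopped"] = exec_result
        return "chopped"


class Cook(Node):
    def prep(self, shared):
        return shared["chopped"]

    def exec(self, chopped):
        return "stir-fry of " + ", ".join(chopped)

    def post(self, shared, prep_result, exec_result):
        shared["meal"] = exec_result
        return "cooked"


class Plate(Node):
    def prep(self, shared):
        return shared["meal"]

    def exec(self, meal):
        return f"plated {meal}"

    def post(self, shared, prep_result, exec_result):
        shared["dish"] = exec_result
        return "done"  # no station is wired to "done", so the flow ends


chop, cook, plate = Chop(), Cook(), Plate()
chop.connect("chopped", cook)   # "If the vegetables are chopped, go to the cooking station"
cook.connect("cooked", plate)   # "If the meal is cooked, go to the plating station"

shared = {"vegetables": ["carrot", "onion"]}  # the countertop
Flow(start=chop).run(shared)
print(shared["dish"])  # plated stir-fry of chopped carrot, chopped onion
```

Notice that the stations never call each other directly. They only read from and write to the shared countertop, and the Flow does all the walking.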

What's an LLM Agent (In Human Terms)?

An LLM (Large Language Model) agent is basically a smart assistant (like ChatGPT but with the ability to take actions) that can:

- Think about what to do next

- Choose from a menu of actions

- Actually do something in the real world

- See what happened

- Think again...

Think of it like having a personal assistant managing your tasks:

- They review your inbox and calendar to understand the situation

- They decide what needs attention first (reply to urgent email? schedule a meeting?)

- They take action (draft a response, book a conference room)

- They observe the result (did someone reply? was the room available?)

- They plan the next task based on what happened
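The assistant routine above is really just a loop. Here is a minimal sketch of it, with big assumptions: `think()` is a hand-written rule standing in for an LLM call, and the two entries in `ACTIONS` are pretend tools that return canned strings. None of these names come from a real library.

```python
# Illustrative only: think() stands in for an LLM call, and the
# actions are plain Python functions rather than real tools.

def think(task, observations):
    """Pick the next action from the menu based on what we've seen so far."""
    if not observations:
        return "check_calendar"
    if observations[-1] == "room A is free":
        return "book_room"
    return "finish"


ACTIONS = {
    "check_calendar": lambda: "room A is free",
    "book_room": lambda: "room A booked",
}


def run_agent(task, max_steps=10):
    observations = []
    for _ in range(max_steps):               # safety cap so the loop always ends
        action = think(task, observations)   # think and choose from the menu
        if action == "finish":
            break
        result = ACTIONS[action]()           # take the action
        observations.append(result)          # see what happened
    return observations                      # each pass of the loop is "think again"


print(run_agent("schedule a meeting"))  # ['room A is free', 'room A booked']
```

The `max_steps` cap is a design choice for the sketch: a real agent that keeps deciding to act could otherwise loop forever.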

The Big Secret: Agents Are Just Simple Graphs!

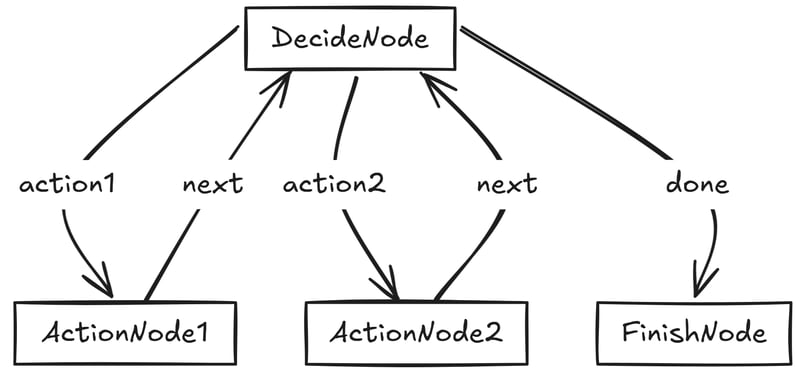

Here's the mind-blowing truth about agents that frameworks overcomplicate:

That's it! Every agent is just a graph with:

- A decision node that branches to different actions

- Action nodes that do specific tasks

- A finish node that ends the process

- Edges that connect everything together

- Loops that bring execution back to the decision node

No complex math, no mysterious algorithms - just nodes and arrows! Everything else is just details. Let's see how this works with a real example.
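To make "just nodes and arrows" concrete, here is one possible way to write such a graph down as plain data. The node names (`decide`, `tool`, `finish`) and action labels are hypothetical, picked only for this sketch. Each arrow is a `(current node, action)` pair that points at the next node.

```python
# Hypothetical node and action names, chosen for illustration.
EDGES = {
    ("decide", "use_tool"): "tool",   # decision node branches to an action node
    ("decide", "finish"): "finish",   # ... or to the finish node
    ("tool", "decide"): "decide",     # loop: the action node returns to the decision node
}


def next_node(current, action):
    """Follow one arrow; None means there is nowhere left to go."""
    return EDGES.get((current, action))


def walk(start, actions):
    """Replay a list of chosen actions and return the nodes visited."""
    path, node = [start], start
    for action in actions:
        node = next_node(node, action)
        if node is None:
            break
        path.append(node)
    return path


print(walk("decide", ["use_tool", "decide", "finish"]))
# ['decide', 'tool', 'decide', 'finish']
```

The finish node has no outgoing arrows, which is all it takes to end the process.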

Let's Build a Super Simple Research Agent

Imagine we want to build an AI assistant that can search the web and answer questions - similar to tools like Perplexity AI, but much simpler. We want our agent to be able to:

- Read a question from a user

- Decide if it needs to search for information

- Look things up on the web if needed

- Provide an answer once it has enough information

Let's break down our agent into individual "stations" that each handle one specific job. Think of these stations like workers on an assembly line - each with their own specific task.

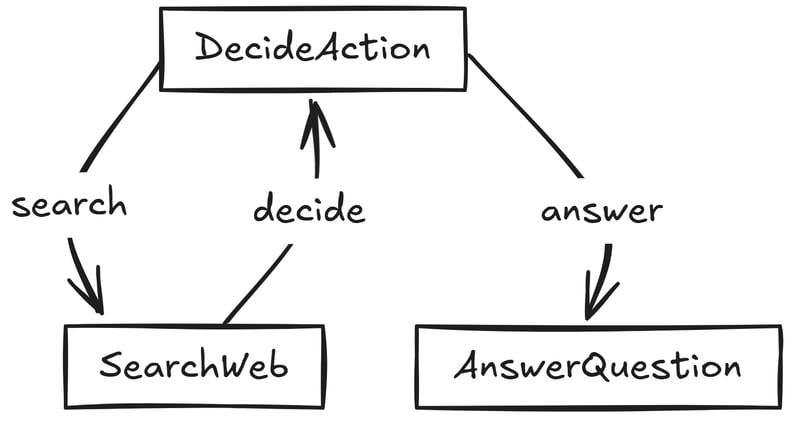

Here's a simple diagram of our research agent:

In this diagram:

- DecideAction is our "thinking station" where the agent decides what to do next

- SearchWeb is our "research station" where the agent looks up information

- AnswerQuestion is our "response station" where the agent creates the final answer

Before We Code: Let's Walk Through an Example

Imagine you asked our agent: "Who won the 2023 Super Bowl?"

Here's what would happen step-by-step:

- DecideAction station:

  - LOOKS AT: Your question and what we know so far (nothing yet)

  - THINKS: "I don't know who won the 2023 Super Bowl, I need to search"

  - DECIDES: Search for "2023 Super Bowl winner"

  - PASSES TO: SearchWeb station

- SearchWeb station:

  - LOOKS AT: The search query "2023 Super Bowl winner"

  - DOES: Searches the internet (imagine it finds "The Kansas City Chiefs won")

  - SAVES: The search results to our shared countertop

  - PASSES TO: Back to DecideAction station

- DecideAction station (second time):

  - LOOKS AT: Your question and what we know now (search results)

  - THINKS: "Great, now I know the Chiefs won the 2023 Super Bowl"

  - DECIDES: We have enough info to answer

  - PASSES TO: AnswerQuestion station

- AnswerQuestion station:

  - LOOKS AT: Your question and all our research

  - DOES: Creates a friendly answer using all the information

  - SAVES: The final answer

  - FINISHES: The task is complete!

This is exactly what our code will do - just expressed in programming language.
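As a rough preview, the walkthrough can be replayed with plain functions and a dictionary. Everything here is a stub written for illustration: `fake_search()` returns a canned string instead of calling a search API, the rule inside `decide_action()` replaces a real LLM call, and the action names (`search`, `answer`, `decide`) and shared-store keys are assumptions rather than anything PocketFlow prescribes.

```python
# Stubs only: fake_search() and the rule inside decide_action() stand in
# for a real search API and a real LLM call. Action names are assumptions.

def fake_search(query):
    return "The Kansas City Chiefs won"


def decide_action(shared):
    if not shared["context"]:                  # nothing known yet, so search
        shared["search_query"] = "2023 Super Bowl winner"
        return "search"
    return "answer"                            # enough info, so answer


def search_web(shared):
    shared["context"].append(fake_search(shared["search_query"]))
    return "decide"                            # always go back to DecideAction


def answer_question(shared):
    shared["answer"] = f"{shared['context'][0]} the 2023 Super Bowl."
    return "done"                              # no route for "done", so we stop


STATIONS = {
    "DecideAction": decide_action,
    "SearchWeb": search_web,
    "AnswerQuestion": answer_question,
}

ROUTES = {
    ("DecideAction", "search"): "SearchWeb",
    ("DecideAction", "answer"): "AnswerQuestion",
    ("SearchWeb", "decide"): "DecideAction",
}


def run(question):
    shared = {"question": question, "context": [], "trace": []}
    station = "DecideAction"
    while station is not None:
        shared["trace"].append(station)            # remember where we have been
        action = STATIONS[station](shared)         # the station does its job
        station = ROUTES.get((station, action))    # follow the arrow, if any
    return shared


result = run("Who won the 2023 Super Bowl?")
print(" -> ".join(result["trace"]))
# DecideAction -> SearchWeb -> DecideAction -> AnswerQuestion
print(result["answer"])
# The Kansas City Chiefs won the 2023 Super Bowl.
```

The printed trace matches the four steps listed above, including the second visit to DecideAction.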

What Our Agent Actually "Sees" and "Thinks"

When our agent runs, here's exactly what it processes:
