Unlocking Efficiency: The Power of Kong AI Gateway


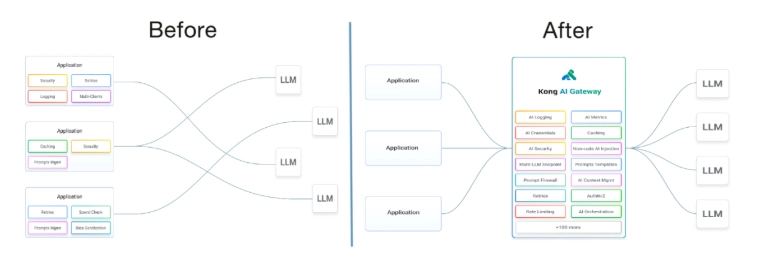

In today’s rapidly evolving digital landscape, organizations increasingly rely on APIs to drive innovation, enhance connectivity, and streamline operations. However, as the complexity of managing these APIs grows, the need for advanced solutions becomes paramount.

Enter Kong’s AI Gateway—a revolutionary product designed to transform the way we think about API management by leveraging artificial intelligence to provide intelligent insights, enhanced security, and optimized performance.

What is the AI Gateway?

The rapid emergence of various AI LLM providers, including open-source and self-hosted models, has led to a fragmented technology landscape with inconsistent standards and controls.

This fragmentation complicates how developers and organizations use and govern AI services. Kong Gateway’s extensive API management capabilities and plugin extensibility make it an ideal solution for providing AI-specific management and governance services.

Since AI providers often do not adhere to a standardized API specification, the AI Gateway offers a normalized API layer that enables clients to access multiple AI services from a single codebase. It adds capabilities such as credential management, AI usage observability, governance, and prompt engineering to tune model behavior. Developers can use no-code AI plugins to enrich their existing API traffic, extending application functionality with little effort.

You can activate the AI Gateway features through a range of modern, specialized plugins, following the same approach as with other Kong Gateway plugins. When integrated with existing Kong Gateway plugins, users can quickly build a robust AI management platform without the need for custom coding or introducing new, unfamiliar tools.

Key Features and Benefits

1. Intelligent Traffic Management: The AI Gateway’s intelligent traffic management capabilities analyze real-time data to optimize request routing. By employing machine learning algorithms, the gateway adapts to changing conditions, ensuring that requests are processed through the most efficient paths. This results in lower latency, improved performance, and a seamless user experience. Additionally, the gateway can automatically balance loads across services, preventing any single service from becoming a bottleneck.

2. Enhanced Security: Security is a top concern for any organization operating in the digital realm. The AI Gateway takes a proactive stance by using AI to identify and mitigate threats before they impact your systems. It can detect anomalies in traffic patterns, flag suspicious activities, and automatically apply security protocols to safeguard APIs. This real-time threat detection helps keep your APIs resilient against attacks, providing peace of mind to organizations and their users.

3. Seamless Integration: The AI Gateway is designed to fit seamlessly within existing infrastructures. It supports a wide array of integrations, allowing organizations to connect with their current tools and platforms without any disruption. This flexibility means teams can leverage the AI Gateway's capabilities without overhauling their entire tech stack, making it easier to adopt and implement.

4. Automated Insights and Reporting: With the AI Gateway, organizations benefit from automated insights that simplify the understanding of API usage and performance metrics. These insights are generated through AI analysis, allowing teams to access critical information at a glance. This capability enables informed decision-making, optimizes resource allocation, and drives continuous improvement, ultimately enhancing overall performance.

5. Collaborative Features: Modern workplaces thrive on collaboration, and the AI Gateway facilitates teamwork across various departments. It provides features that enhance communication between developers, security teams, and operations staff, fostering a unified approach to API management. By breaking down silos, the AI Gateway promotes efficiency and accelerates the development lifecycle.

6. Plugin Ecosystem: One of the standout aspects of the AI Gateway is its robust plugin ecosystem. Kong offers a variety of plugins that extend the functionality of the gateway, allowing organizations to customize their API management experience.
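The load balancing mentioned under item 1 (Intelligent Traffic Management) can be illustrated with one common strategy: send each request to the target with the lowest recent latency. This is a minimal, non-ML sketch under stated assumptions, not Kong's balancer code: it assumes each response time is recorded per target and smoothed with an exponentially weighted moving average (EWMA). The target names and smoothing factor are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LatencyBalancer:
    """Picks the upstream target with the lowest smoothed latency (EWMA).

    Hypothetical illustration; not Kong's implementation.
    """
    targets: list[str]
    alpha: float = 0.3  # weight given to the newest latency sample
    ewma_ms: dict[str, float] = field(default_factory=dict)

    def pick(self) -> str:
        # Send traffic to unmeasured targets first so every target gets sampled.
        for target in self.targets:
            if target not in self.ewma_ms:
                return target
        return min(self.targets, key=lambda t: self.ewma_ms[t])

    def record(self, target: str, latency_ms: float) -> None:
        # Blend the new sample into the running average for this target.
        previous = self.ewma_ms.get(target)
        if previous is None:
            self.ewma_ms[target] = latency_ms
        else:
            self.ewma_ms[target] = self.alpha * latency_ms + (1 - self.alpha) * previous


lb = LatencyBalancer(targets=["llm-a", "llm-b"])
lb.record("llm-a", 120.0)
lb.record("llm-b", 80.0)
print(lb.pick())  # llm-b
```

Smoothing keeps a single slow response from immediately diverting all traffic, while a sustained slowdown still shifts requests to the faster target.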
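The usage reporting described under item 4 usually starts by aggregating per-request log records. The sketch below sums token counts per model. The record shape (`model`, `prompt_tokens`, `completion_tokens`) and the model names are assumptions for illustration; check the log format your Kong version and logging plugin actually emit.

```python
from collections import defaultdict


def tokens_by_model(records: list[dict]) -> dict[str, int]:
    """Sum prompt and completion tokens per model (hypothetical record shape)."""
    totals: dict[str, int] = defaultdict(int)
    for rec in records:
        # Missing counters are treated as zero rather than failing the report.
        totals[rec["model"]] += rec.get("prompt_tokens", 0) + rec.get("completion_tokens", 0)
    return dict(totals)


records = [
    {"model": "model-a", "prompt_tokens": 120, "completion_tokens": 30},
    {"model": "model-b", "prompt_tokens": 50, "completion_tokens": 10},
    {"model": "model-a", "prompt_tokens": 80, "completion_tokens": 20},
]
print(tokens_by_model(records))  # {'model-a': 250, 'model-b': 60}
```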

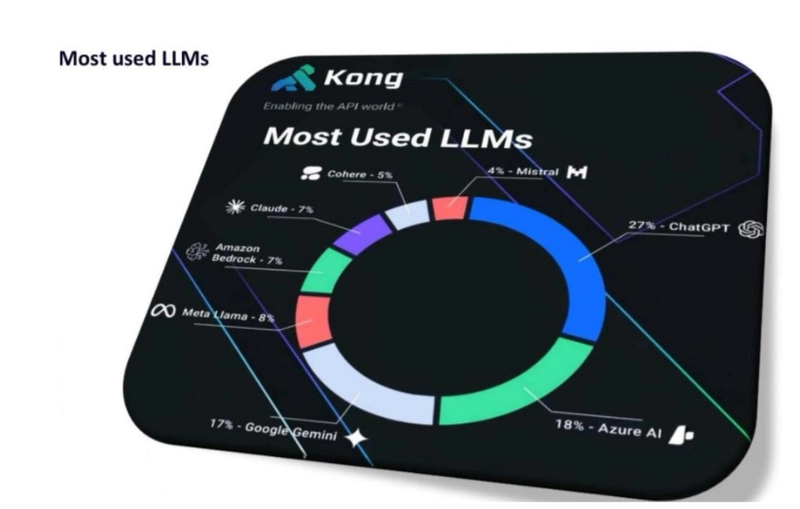

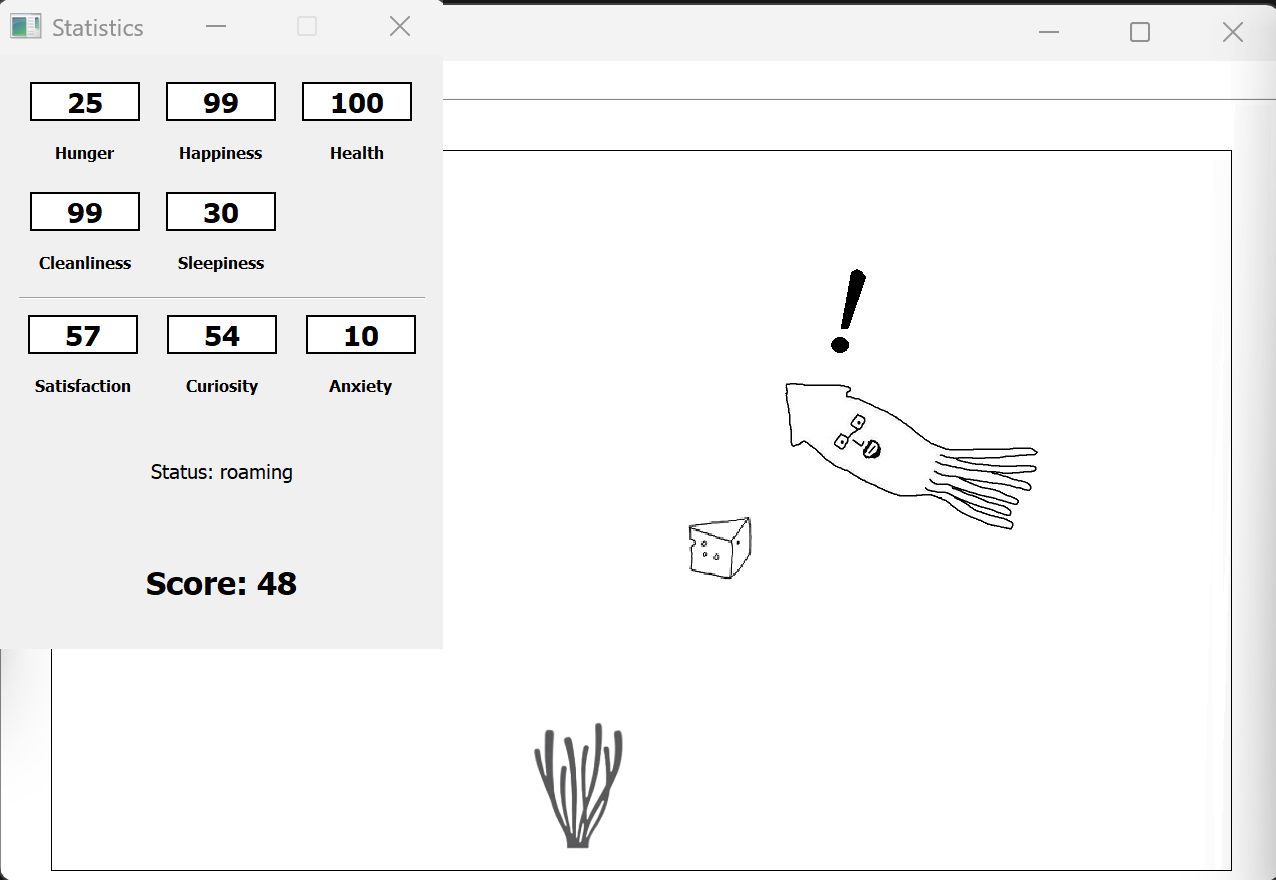

Most used LLMs:

The infographic presents a breakdown of the most popular Large Language Models (LLMs) currently in use. Here's a summary of the key takeaways:

Dominant Players:

1. ChatGPT: With a significant share of 27%, ChatGPT remains the most widely used LLM. Its user-friendly interface and impressive language capabilities have contributed to its popularity.

2. Azure AI: Closely following ChatGPT, Azure AI holds an 18% share. It's backed by Microsoft's extensive resources and offers a range of AI services, including LLM capabilities.

3. Google Gemini: Google's latest LLM, Gemini, has quickly gained traction with a 17% share. It's known for its advanced capabilities in various language tasks.

Other Notable LLMs:

1. Meta Llama: With an 8% share, Meta Llama is a powerful LLM developed by Meta (formerly Facebook).

2. Amazon Bedrock: Amazon's Bedrock offers access to a variety of foundation models, including LLMs. It holds a 7% share.

3. Claude: Anthropic's Claude is a versatile LLM with a 7% share, known for its ability to generate creative and informative text.

4. Cohere: Cohere provides a suite of AI tools, including LLMs, with a 5% share.

5. Mistral: Mistral is a relatively new LLM with a 4% share, gaining attention for its promising capabilities.

Key Insights:

1. ChatGPT's Dominance: ChatGPT's strong market position highlights its ability to meet a wide range of user needs.

2. Cloud Providers' Influence: Major cloud providers like Microsoft and Google are making significant strides in the LLM space, providing powerful AI capabilities to their users.

3. Emerging Players: LLMs like Meta Llama and Mistral demonstrate the growing diversity and innovation in the LLM landscape.

This infographic offers a snapshot of the current LLM landscape, highlighting the leading models and their relative popularity. As the field of AI continues to evolve, we can expect further developments and shifts in the usage of LLMs.

Kong AI Plugins

1. AI Proxy:

Purpose: This plugin simplifies making your first AI request.

How it works: It accepts requests in a single, OpenAI-compatible format and translates them for the configured AI provider and model, so you can experiment with and build AI-powered applications without writing provider-specific integration code.
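With AI Proxy in place, a client sends an OpenAI-style chat request to a Kong route and the plugin forwards it to the configured provider. The sketch below only builds such a request with the standard library. It assumes Kong's proxy listens on its default `localhost:8000` and that a route at the hypothetical path `/chat` has AI Proxy enabled with `route_type` set to `llm/v1/chat`.

```python
import json
import urllib.request


def build_chat_request(prompt: str, base_url: str = "http://localhost:8000",
                       path: str = "/chat") -> urllib.request.Request:
    """Build an OpenAI-style chat request aimed at a Kong route.

    `path` is a hypothetical route. No provider API key is included because
    AI Proxy can attach credentials from its own plugin configuration.
    """
    body = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Summarize our API error rate in one sentence.")
# To send it against a running gateway (response assumed to be OpenAI-shaped):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["choices"][0]["message"]["content"])
```

Because the request shape stays the same regardless of provider, switching models becomes a gateway configuration change rather than a client code change.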

2. AI Gateway:

Purpose: This is the core plugin that enables you to build new AI applications faster and power existing API traffic with AI capabilities.

How it works: It integrates multiple LLMs (Large Language Models) and provides security, metrics, and other essential functionalities for AI-powered applications. It also supports declarative configuration, making it easier to manage AI integrations.
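Declarative configuration means the service, route, and AI plugin are described in one file that Kong loads. The sketch below generates such a file from Python. The `ai-proxy` fields used (`route_type`, `auth.header_name`, `auth.header_value`, `model.provider`, `model.name`) follow Kong's documented schema as we understand it; the service name, route name and path, upstream URL, model name, and `OPENAI_API_KEY` variable are placeholders, so validate the output against the documentation for your Kong version.

```python
import json
import os


def build_declarative_config(model_name: str = "gpt-4o") -> dict:
    """Return a Kong declarative config with one AI Proxy route (placeholder values)."""
    api_key = os.environ.get("OPENAI_API_KEY", "<your-api-key>")
    return {
        "_format_version": "3.0",
        "services": [{
            "name": "llm-service",            # hypothetical name
            "url": "http://localhost:32000",  # placeholder; AI Proxy targets the provider
            "routes": [{
                "name": "openai-chat",        # hypothetical name
                "paths": ["/chat"],
                "plugins": [{
                    "name": "ai-proxy",
                    "config": {
                        "route_type": "llm/v1/chat",
                        "auth": {
                            "header_name": "Authorization",
                            "header_value": f"Bearer {api_key}",
                        },
                        "model": {"provider": "openai", "name": model_name},
                    },
                }],
            }],
        }],
    }


if __name__ == "__main__":
    # JSON is valid YAML, so this output can be saved as kong.yaml.
    print(json.dumps(build_declarative_config(), indent=2))
```

In practice you would keep the key out of the file (for example via Kong's secret management) rather than rendering it in plain text as this sketch does.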

3. AI Request Transformer:

Purpose: This plugin allows you to transform API requests with no-code AI integrations.

How it works: It uses AI models to modify, enhance, or augment incoming requests, making them more suitable for processing by the backend services.
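Conceptually, the AI Request Transformer sends the incoming request body to an LLM together with an operator-defined prompt, then forwards the LLM's answer upstream in place of the original body. The sketch below emulates that flow locally. `call_llm` is a hypothetical stand-in for the configured model, and the JSON check assumes the upstream service expects JSON.

```python
import json
from typing import Callable


def transform_request_body(body: str, prompt: str,
                           call_llm: Callable[[str, str], str]) -> str:
    """Ask an LLM to rewrite `body` according to `prompt`; return the new body.

    Raises ValueError (json.JSONDecodeError) if the model output is not valid JSON.
    """
    new_body = call_llm(prompt, body)
    json.loads(new_body)  # fail fast instead of forwarding malformed JSON upstream
    return new_body


def fake_llm(prompt: str, body: str) -> str:
    # Hypothetical stub: pretend the model added a normalized country code.
    data = json.loads(body)
    data["country_code"] = "DE"
    return json.dumps(data)


print(transform_request_body('{"country": "Germany"}', "Add an ISO country code.", fake_llm))
# {"country": "Germany", "country_code": "DE"}
```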

4. AI Response Transformer:

Purpose: This plugin transforms, enriches, and augments API responses using no-code AI integrations.

How it works: It uses AI models to process API responses and generate more informative or personalized outputs.
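Enabling the AI Response Transformer follows the same pattern as other Kong plugins: post a plugin object to the Admin API. The sketch below builds that request but does not send it. It assumes the Admin API on its default `localhost:8001`, a hypothetical service name, and only the `prompt` and `llm` configuration fields; the prompt text and model are placeholders.

```python
import json
import urllib.request


def build_response_transformer_request(service: str, prompt: str,
                                       api_key: str) -> urllib.request.Request:
    """Build (but do not send) an Admin API call that enables ai-response-transformer."""
    plugin = {
        "name": "ai-response-transformer",
        "config": {
            "prompt": prompt,
            "llm": {  # assumed to mirror the ai-proxy model settings
                "route_type": "llm/v1/chat",
                "auth": {"header_name": "Authorization", "header_value": f"Bearer {api_key}"},
                "model": {"provider": "openai", "name": "gpt-4o"},
            },
        },
    }
    return urllib.request.Request(
        url=f"http://localhost:8001/services/{service}/plugins",
        data=json.dumps(plugin).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_response_transformer_request(
    "orders-service",  # hypothetical service name
    "Translate every string value in this JSON response to French.",
    "<your-api-key>",
)
# urllib.request.urlopen(req) would apply it against a running Admin API.
```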

5. AI Prompt Guard:

Purpose: This plugin secures your AI prompts by implementing advanced prompt security.

How it works: It checks prompts against configured allow and deny rules (regular expressions) and rejects requests containing malicious or inappropriate content, helping ensure that AI models are used responsibly.
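AI Prompt Guard is configured with regular-expression `allow_patterns` and `deny_patterns`. The sketch below emulates that check so rules can be tried before deployment: any deny match rejects the prompt, and when allow patterns are set, the prompt must match at least one. Python's `re` is only an approximation of the regex engine Kong uses, so edge cases may differ; the example patterns are hypothetical.

```python
import re


def prompt_allowed(prompt: str, allow_patterns: list[str], deny_patterns: list[str]) -> bool:
    """Emulate allow/deny prompt filtering (approximation of ai-prompt-guard)."""
    if any(re.search(p, prompt) for p in deny_patterns):
        return False  # deny rules win
    if allow_patterns:
        return any(re.search(p, prompt) for p in allow_patterns)
    return True  # no allow list means everything not denied passes


ALLOW = [r"(?i)\binvoice\b", r"(?i)\border\b"]        # hypothetical: billing topics only
DENY = [r"\b\d{4}[- ]?\d{4}[- ]?\d{4}[- ]?\d{4}\b"]  # hypothetical: card-number-like strings

print(prompt_allowed("Where is my order?", ALLOW, DENY))                            # True
print(prompt_allowed("Charge card 4111 1111 1111 1111 for my order", ALLOW, DENY))  # False
print(prompt_allowed("Write me a poem", ALLOW, DENY))                               # False
```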

6. AI Prompt Template:

Purpose: This plugin helps you create better prompts by building AI templates that are compatible with the OpenAI interface.

How it works: It provides a structured way to create effective prompts for AI models, improving the quality of AI-generated outputs.
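AI Prompt Template keeps named templates containing `{{placeholders}}` at the gateway; clients reference a template by name and supply values, so the gateway controls the final prompt wording. The sketch below emulates a simplified version of the substitution step on a plain prompt string. The template name and text, and the choice to fail on a missing value, are illustrative assumptions rather than the plugin's exact behavior.

```python
import re

TEMPLATES = {  # hypothetical template registry
    "summarize": "You are a {{tone}} assistant. Summarize the following text: {{text}}",
}

_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template_name: str, values: dict[str, str]) -> str:
    """Fill {{name}} placeholders; raise KeyError if a value is missing."""
    template = TEMPLATES[template_name]
    # re.sub does not rescan substituted text, so a value containing "{{x}}"
    # is inserted literally instead of being expanded again.
    return _PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


print(render("summarize", {"tone": "concise", "text": "Kong added AI plugins."}))
# You are a concise assistant. Summarize the following text: Kong added AI plugins.
```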

7. AI Prompt Decorator:

Purpose: This plugin helps you build better AI contexts by centrally managing the contexts and behaviors of every AI prompt.

How it works: It allows you to define and manage the context of an AI prompt, ensuring that the AI model understands the desired outcome and generates more relevant responses.
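AI Prompt Decorator works by adding messages before and after the client's own chat messages, configured as `prompts.prepend` and `prompts.append` lists of `role`/`content` objects. The sketch below reproduces that merge on an OpenAI-style `messages` array; the message texts are placeholders.

```python
def decorate(messages: list[dict], prepend: list[dict], append: list[dict]) -> list[dict]:
    """Return a new list: gateway-prepended + client messages + gateway-appended.

    The client's list is not modified.
    """
    return [*prepend, *messages, *append]


PREPEND = [{"role": "system", "content": "Answer only questions about our public API."}]
APPEND = [{"role": "user", "content": "Keep the answer under 100 words."}]

client_messages = [{"role": "user", "content": "How do I paginate /orders?"}]
for msg in decorate(client_messages, PREPEND, APPEND):
    print(msg["role"], "->", msg["content"])
```

Because the decoration happens at the gateway, every client gets the same system context without having to include it in its own requests.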

In essence, the Kong AI Gateway plugins provide a comprehensive suite of tools to build and manage AI-powered applications. They simplify the integration of AI models, enhance security, and improve the overall performance of AI-driven systems.

Use cases of Kong AI Gateway

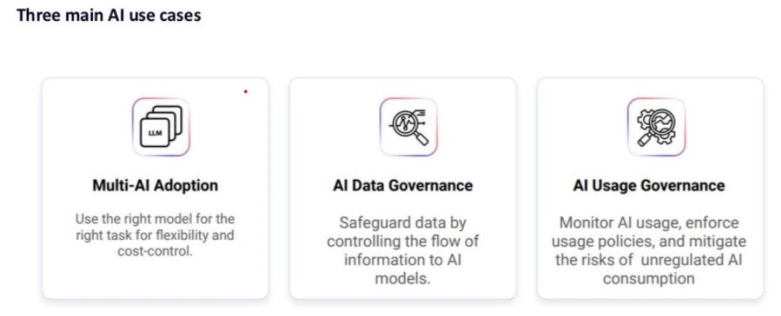

1. Multi-AI Adoption

- Core Concept: This approach emphasizes utilizing the right AI model for the right task. It's about tailoring AI solutions to specific needs, rather than relying on a one-size-fits-all model.

Benefits:

Flexibility: Different AI models excel at different tasks (e.g., image recognition or natural language processing). Choosing the best-suited model allows for optimal results.

Cost-Control: Not every task requires the most advanced AI model. By selecting the right model, you can optimize costs without compromising performance.
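The right-model-for-the-task idea can be expressed as a small selection rule: among the models that support a task, pick the cheapest. The model names, capabilities, and prices below are hypothetical placeholders, not real pricing; in a Kong deployment, one option is to expose each model through its own AI Proxy route and let this rule choose the route.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    tasks: frozenset[str]
    usd_per_million_tokens: float  # hypothetical price


CATALOG = [
    ModelOption("large-model", frozenset({"chat", "code", "reasoning"}), 10.0),
    ModelOption("small-model", frozenset({"chat", "classification"}), 0.5),
    ModelOption("code-model", frozenset({"code"}), 2.0),
]


def cheapest_for(task: str, catalog: list[ModelOption] = CATALOG) -> ModelOption:
    """Return the lowest-cost model that supports `task`; raise ValueError if none does."""
    candidates = [m for m in catalog if task in m.tasks]
    if not candidates:
        raise ValueError(f"no model supports task {task!r}")
    return min(candidates, key=lambda m: m.usd_per_million_tokens)


print(cheapest_for("code").name)       # code-model
print(cheapest_for("reasoning").name)  # large-model
```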

2. AI Data Governance

- Core Concept: This focuses on ensuring responsible and ethical use of AI by controlling the flow of information to AI models.

Importance:

Data Privacy: Protecting sensitive data from unauthorized access or misuse is paramount.

Model Bias: Preventing AI models from learning and perpetuating biases in the data they are trained on.
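One common data-governance control is to redact sensitive values before a prompt leaves your network. The sketch below masks e-mail addresses and US-style Social Security numbers with regular expressions. It is a generic illustration under those assumptions, not a Kong plugin, and real PII detection needs far broader coverage than two patterns.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs more.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com, SSN 123-45-6789."))
# Contact [EMAIL], SSN [SSN].
```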

3. AI Usage Governance

- Core Concept: This involves monitoring and regulating the use of AI to mitigate risks and ensure compliance with policies.

Why it Matters:

Unregulated AI: Uncontrolled AI usage can lead to unintended consequences, such as job displacement or algorithmic bias.

Policy Enforcement: AI Usage Governance ensures that AI is used in accordance with ethical guidelines and legal regulations.
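Policy enforcement often takes the form of a per-consumer token budget. The sketch below keeps a fixed-window counter per consumer in memory and rejects requests that would exceed the limit. The limit, window, and consumer names are hypothetical, and this is not Kong's AI rate-limiting implementation, which has its own configuration and storage options.

```python
import time
from typing import Callable


class TokenBudget:
    """Fixed-window token quota per consumer (illustrative, in-memory only)."""

    def __init__(self, limit: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self._usage: dict[str, tuple[float, int]] = {}  # consumer -> (window start, tokens used)

    def try_consume(self, consumer: str, tokens: int) -> bool:
        now = self.clock()
        start, used = self._usage.get(consumer, (now, 0))
        if now - start >= self.window_s:
            start, used = now, 0  # window expired: reset the counter
        if used + tokens > self.limit:
            self._usage[consumer] = (start, used)
            return False  # over budget: reject without charging tokens
        self._usage[consumer] = (start, used + tokens)
        return True


budget = TokenBudget(limit=1000, window_s=60)
print(budget.try_consume("team-a", 800))  # True
print(budget.try_consume("team-a", 300))  # False: would exceed 1000 in this window
print(budget.try_consume("team-b", 300))  # True: separate budget
```

Injecting the clock keeps the class testable; a production version would also need shared storage so that every gateway node sees the same counters.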

Conclusion

Kong’s AI Gateway represents a significant advancement in API management, merging cutting-edge AI technology with robust functionality. As businesses navigate the complexities of the digital landscape, solutions like the AI Gateway offer the tools needed to thrive.

Whether you aim to improve traffic management, enhance security, or gain deeper insights into your API performance, the AI Gateway is designed to meet these challenges head-on. With its intelligent features, seamless integration, and customizable plugins, it’s time to explore how Kong’s AI Gateway can transform your API strategy and drive your organization forward.

For more information about the AI Gateway and to explore the available plugins, visit the official Kong documentation and the Kong Plugin Hub. Embrace the future of API management today!
