The Truth About Preloading on the Modern Web

One of the most common ways to optimize the size of an application's bundle is code splitting. With code splitting, we divide the application into smaller chunks that can be loaded on demand. This allows us to reduce the amount of JavaScript that needs to be downloaded and parsed when the user first opens the app.

A simple and widely used approach to code splitting is splitting by page. The logic is straightforward: when a user opens Page A, there’s no need to load the code for Page B at that moment. This approach provides a significant improvement in initial load time, which is especially important for large applications.
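As a rough illustration of page-level splitting, the sketch below maps routes to loader functions. The names `pageLoaders` and `openPage` are hypothetical, and the inline loaders stand in for what would normally be dynamic `import()` calls emitted as separate chunks by the bundler.

```typescript
// Hypothetical shape of a page chunk; real pages would export components.
type PageModule = { render: () => string };
type PageLoader = () => Promise<PageModule>;

// In a bundled app each loader would be a dynamic import, for example
// `() => import("./pages/PageA")`. Inline stand-ins keep the sketch runnable.
const pageLoaders: Record<string, PageLoader> = {
  "/a": async () => ({ render: () => "Page A" }),
  "/b": async () => ({ render: () => "Page B" }),
};

// Loads only the chunk for the requested page, then renders it.
async function openPage(path: string): Promise<string> {
  const load = pageLoaders[path];
  if (!load) throw new Error(`Unknown route: ${path}`);
  const page = await load();
  return page.render();
}
```

Opening `/a` never touches the loader for `/b`, which is where the initial-load saving comes from.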

However, like any solution, this method comes with trade-offs. While first-time loading becomes faster, navigating between pages can feel slower. When the user switches to a new page, there may be a noticeable delay between clicking a link or button and actually seeing the new content. This delay can negatively impact user experience, especially if the transition feels unresponsive.

Preloading to Improve Page Transitions

To mitigate this issue, we can introduce preloading strategies. The idea is to load certain chunks of code before the user actually requests them, reducing or even eliminating the perceived delay during navigation.

However, implementing an effective preloading strategy is not trivial. The main challenges are choosing what to preload and when to preload it.
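Whatever trigger is chosen, the underlying mechanism can be quite small. The following is a minimal sketch, assuming chunks are exposed as promise-returning loader functions; `createPreloader` and its method names are illustrative and not taken from any framework.

```typescript
type Chunk = unknown;
type ChunkLoader = () => Promise<Chunk>;

function createPreloader(loaders: Record<string, ChunkLoader>) {
  // One promise per path, so repeated hints never start a second download.
  const inFlight = new Map<string, Promise<Chunk>>();

  function preload(path: string): Promise<Chunk> {
    const cached = inFlight.get(path);
    if (cached) return cached;

    const load = loaders[path];
    if (!load) return Promise.reject(new Error(`No chunk registered for ${path}`));

    const promise = load().catch((error) => {
      inFlight.delete(path); // forget failed attempts so a later call can retry
      throw error;
    });
    inFlight.set(path, promise);
    return promise;
  }

  return { preload, isRequested: (path: string) => inFlight.has(path) };
}
```

Navigation code can call the same `preload(path)` and await the result: if the chunk was requested earlier, it reuses that promise instead of starting from scratch. Callers that fire a preload without awaiting it should attach their own `.catch` to avoid unhandled rejections.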

Timing Challenges

One option is to preload chunks after a fixed timeout once the page is idle. But this approach is unreliable, as every user has a different internet connection, device performance, and interaction pattern. In some cases, preloading at the wrong moment can harm user experience — for example, if preloading starts while the user is actively interacting with the page, it may slow down ongoing interactions.
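One way to soften the fixed-timeout approach is to prefer `requestIdleCallback` where it exists and use the timeout only as a fallback. The sketch below is an assumption-laden example: `scheduleWhenIdle`, `IdleEnv`, and the 2000 ms default are made up for illustration, and the environment is passed in explicitly so the logic can be exercised outside a browser.

```typescript
// Minimal slice of the global scope that the sketch relies on.
interface IdleEnv {
  requestIdleCallback?: (cb: () => void, opts?: { timeout: number }) => unknown;
  setTimeout: (cb: () => void, ms: number) => unknown;
}

// Runs `task` when the browser reports idle time, or after `maxWaitMs`
// at the latest. Returns which mechanism was used.
function scheduleWhenIdle(
  task: () => void,
  env: IdleEnv,
  maxWaitMs = 2000, // arbitrary default, tune per application
): "idle" | "timeout" {
  if (typeof env.requestIdleCallback === "function") {
    env.requestIdleCallback(task, { timeout: maxWaitMs });
    return "idle";
  }
  env.setTimeout(task, maxWaitMs);
  return "timeout";
}
```

In a browser, `window` would typically be passed as `env`. This still does not know anything about the user's network or about what they are about to do, so it only reduces the risk described above rather than removing it.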

Mouseover and Touchstart Preloading

Some frameworks apply mouseover-based preloading: preloading starts when the user hovers over a link. This approach helps preload content shortly before it's needed and works reasonably well on desktop.

However, it has limitations on mobile devices where no hover event exists. Some libraries therefore listen to touchstart on mobile as a fallback. But even touchstart happens very close to the actual navigation event — often too late to fully hide the loading delay, resulting in suboptimal UX.
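A hand-rolled version of this intent-based trigger might look like the sketch below. `preloadOnIntent` and `LinkLike` are hypothetical names; the interface is a minimal structural type intended to be satisfied by a regular anchor element, so the example does not depend on DOM typings.

```typescript
// Minimal structural type for a link element.
interface LinkLike {
  href: string;
  addEventListener(type: string, listener: () => void, options?: { passive?: boolean }): void;
  removeEventListener(type: string, listener: () => void): void;
}

// Starts preloading on the first hover (desktop) or touch (mobile).
// Returns a cleanup function that detaches both listeners.
function preloadOnIntent(link: LinkLike, preload: (href: string) => void): () => void {
  let triggered = false;
  const onIntent = () => {
    if (triggered) return; // hover followed by touch should not preload twice
    triggered = true;
    preload(link.href);
  };

  link.addEventListener("mouseover", onIntent, { passive: true });
  link.addEventListener("touchstart", onIntent, { passive: true });

  return () => {
    link.removeEventListener("mouseover", onIntent);
    link.removeEventListener("touchstart", onIntent);
  };
}
```

The head start this buys is only the time between the hover or touch and the click, which is why the touch case in particular often cannot hide the whole loading delay.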

Viewport-Based Strategies

Another group of strategies is based on Intersection Observer or simple viewport scanning. The idea is to preload links that are currently visible on screen or that are likely to be seen soon. Alternatively, one might preload all links on the page shortly after the initial load.
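The viewport variant can be sketched with the IntersectionObserver API. In the example below the observer constructor is passed in as a parameter (in a browser this would be the global `IntersectionObserver`), and the `200px` root margin is an arbitrary assumption meant to approximate "likely to be seen soon". `preloadVisibleLinks` and the `*Like` types are illustrative names.

```typescript
// Minimal structural types mirroring the parts of IntersectionObserver used here.
interface EntryLike<T> { isIntersecting: boolean; target: T }
interface ObserverLike<T> {
  observe(target: T): void;
  unobserve(target: T): void;
  disconnect(): void;
}
type ObserverCtor<T> = new (
  callback: (entries: EntryLike<T>[]) => void,
  options?: { rootMargin?: string },
) => ObserverLike<T>;

// Preloads each link once, the first time it enters (or nears) the viewport.
function preloadVisibleLinks<T extends { href: string }>(
  links: T[],
  preload: (href: string) => void,
  Observer: ObserverCtor<T>,
): () => void {
  const observer = new Observer(
    (entries) => {
      for (const entry of entries) {
        if (!entry.isIntersecting) continue;
        observer.unobserve(entry.target); // one preload per link is enough
        preload(entry.target.href);
      }
    },
    { rootMargin: "200px" }, // assumption: also count links just outside the viewport
  );

  links.forEach((link) => observer.observe(link));
  return () => observer.disconnect();
}
```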

While these strategies help prioritize relevant links, they introduce new risks:

- If preloading starts too early, it may compete for resources with critical page content and degrade UX.

- If delayed too much, the preload may not complete before navigation.

- On pages with many links, preloading too aggressively may result in unnecessary bandwidth usage and increased memory consumption.

Finding the right moment to start preloading remains difficult: we want to wait "a little bit" after the page has settled, but defining this "little bit" precisely is complicated.
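The bandwidth and memory risk on link-heavy pages can at least be bounded. The sketch below wraps any preload function with a simple per-page budget; `createBudgetedPreloader` is a hypothetical helper and the right value for `maxPreloads` is an application-specific guess.

```typescript
// Wraps `preload` so that each target is requested at most once and the
// total number of preloads on the page never exceeds `maxPreloads`.
function createBudgetedPreloader(
  preload: (href: string) => void,
  maxPreloads: number,
): (href: string) => boolean {
  const requested = new Set<string>();

  return function request(href: string): boolean {
    if (requested.has(href)) return false; // already requested
    if (requested.size >= maxPreloads) return false; // budget exhausted
    requested.add(href);
    preload(href);
    return true;
  };
}
```

A cap like this does nothing for the timing question, but it keeps an aggressive viewport strategy from fetching dozens of chunks the user will never open.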

Browser Limitations

One of the core challenges is that browsers give us very limited APIs to design truly smart preloading. There is no reliable, cross-browser way to query network conditions or system load in advance: the Network Information API (navigator.connection) exposes only coarse hints and is not available in every browser. APIs like requestIdleCallback help defer work until the main thread is idle, but they are not designed specifically for preloading, and they don't account for network bandwidth or for what the user is about to do.
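Where the Network Information API is available, its coarse hints can still feed a heuristic. The sketch below is one possible policy, not a recommendation: `shouldPreload` and `ConnectionLike` are illustrative names, and treating an unknown connection as "go ahead" is an assumption that may not suit every application.

```typescript
// Subset of the Network Information API (navigator.connection). It is not
// available in every browser, so the whole object may be undefined.
interface ConnectionLike {
  saveData?: boolean;
  effectiveType?: string; // "slow-2g" | "2g" | "3g" | "4g"
}

// Decides whether speculative preloading is acceptable right now.
function shouldPreload(connection: ConnectionLike | undefined): boolean {
  if (!connection) return true; // assumption: no information means default behavior
  if (connection.saveData) return false; // the user asked for reduced data usage
  return connection.effectiveType !== "slow-2g" && connection.effectiveType !== "2g";
}
```

In a browser the argument would come from `navigator.connection`, read defensively because the property may be missing.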

In practice, most preloading strategies rely on heuristics, which may or may not work well across different user scenarios.

Declarative vs. Imperative Navigation

A key principle for enabling robust preloading is declarative navigation. When navigation is declared via `<a>` tags or dedicated Link components, the system has full knowledge of possible navigation targets ahead of time. This allows frameworks to implement automatic preloading strategies behind the scenes.

In contrast, when navigation is implemented imperatively (e.g. via custom JavaScript function calls like navigateTo(url)), it becomes much harder — or even impossible — to build generalized preloading logic without manually instrumenting every possible navigation trigger.

Declarative navigation should always be preferred not only for maintainability, but also because it opens the door for better UX optimizations like preloading.

If your framework supports declarative routing via Link components (or even plain `<a>` tags) and you stick to this declarative model, it's usually possible to implement your own preloading layer with reasonable effort, even if the framework doesn't provide built-in preloading strategies.
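As a possible first step for such a custom layer, the sketch below turns the `href` values found on a page into a deduplicated list of internal route paths. `collectPreloadTargets` is a hypothetical helper; it assumes routes are identified by pathname and that links to other origins should never be preloaded.

```typescript
// Resolves raw href values against the current page URL and keeps only
// same-origin paths that differ from the current page.
function collectPreloadTargets(hrefs: string[], pageUrl: string): string[] {
  const page = new URL(pageUrl);
  const targets = new Set<string>();

  for (const href of hrefs) {
    let url: URL;
    try {
      url = new URL(href, page);
    } catch {
      continue; // malformed href, nothing to preload
    }
    if (url.origin !== page.origin) continue; // external link
    if (url.pathname === page.pathname) continue; // same page, e.g. "#section"
    targets.add(url.pathname);
  }

  return Array.from(targets);
}
```

In a browser the `hrefs` array could be gathered from the anchors in the document and `pageUrl` taken from `location.href`; the resulting paths can then be handed to whichever trigger strategy is in use. This only works because the targets are declared in the markup, which is exactly what imperative navigation lacks.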

Legacy Codebases & Imperative Navigation

However, if you already have a large codebase heavily relying on imperative navigation, adding preloading becomes much harder. In such cases, you typically have to:

- Apply heuristics (e.g. manually preload certain key pages after a delay);

- Manually instrument navigation calls to inject preloading;

- Accept that fully automated preloading may not be feasible without architectural refactoring.
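Manual instrumentation can be made less painful by routing every call through one wrapper. The sketch below assumes the codebase has a single imperative `navigateTo(url)` function and some way to preload a target by URL; `instrumentNavigation`, `hint`, and `navigate` are hypothetical names.

```typescript
type Navigate = (url: string) => void;
type Preload = (url: string) => Promise<unknown>;

function instrumentNavigation(navigateTo: Navigate, preload: Preload) {
  const requested = new Map<string, Promise<unknown>>();

  // Starts the preload for a target, or reuses one that is already running.
  // Intended to be called from hover handlers, timers, and similar triggers.
  function hint(url: string): Promise<unknown> {
    let pending = requested.get(url);
    if (!pending) {
      // A failed preload is ignored here; the real navigation loads the chunk again.
      pending = preload(url).catch(() => undefined);
      requested.set(url, pending);
    }
    return pending;
  }

  // Drop-in replacement for direct navigateTo(url) calls.
  function navigate(url: string): void {
    void hint(url);
    navigateTo(url);
  }

  return { hint, navigate };
}
```

Replacing direct `navigateTo` calls with `navigate` is still a manual migration, but afterwards there is one place where heuristics such as "preload key pages after a delay" can call `hint`.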

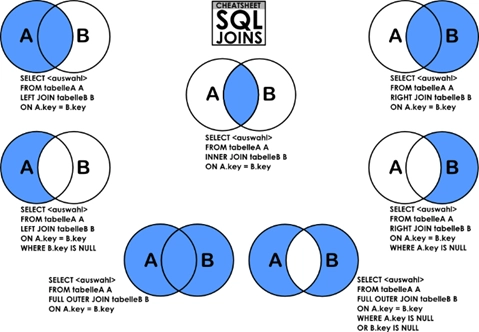

Preloading Support Across Frameworks

| Framework | Preloading Triggers | Global Configuration | Notes |
|---|---|---|---|
| SvelteKit | mouseover, touchstart, viewport (IntersectionObserver) | ✅ Yes | Multiple built-in strategies; globally configurable. |
| TanStack Router | mouseover, touchstart, viewport (IntersectionObserver) | ✅ Yes | Rich set of built-in strategies; global configuration available. |
| Angular | After initial load (PreloadAllModules) | ⚠️ Yes (only global strategy) | Has one built-in strategy that preloads all modules after initial load. |
| Vue Router | – | ❌ No | No built-in preloading; requires custom solutions. |
| Nuxt.js | viewport, interaction-based (hover, touchstart) | ✅ Yes | Built-in preloading based on links and user interaction; globally configurable. |
| Next.js | viewport (prefetch in `<Link>`) | ⚠️ Limited | No way to fully configure strategy globally; per-link prefetch attribute controls behavior. |
| React Router | viewport, interaction-based (hover, touchstart) | ❌ No | Supports preloading per `<Link>`. |

Summary

- Code splitting helps optimize bundle size but introduces navigation delays.

- Preloading helps hide navigation delays but is tricky to implement well.

- Timing and link selection are the two biggest challenges in preloading design.

- Declarative navigation significantly simplifies adding preloading strategies.

- Framework support varies widely — some offer global preloading, some offer none.
