"Unlocking Multimodal Mastery: The Power of TULIP and NORA in Imaging"

In medical and scientific imaging, practitioners increasingly need to combine different kinds of data, such as images, text, and time series, to build a fuller picture of what they are studying. This post looks at two recent tools that approach that problem from different directions: TULIP, a language-image pretraining model, and NORA, a fast acquisition and reconstruction scheme for two-photon microscopy. We will introduce multimodal imaging, walk through what each tool does, consider how the two might complement each other, and close with possible healthcare applications and future trends.

Introduction to Multimodal Imaging

Multimodal imaging integrates various data types, such as visual and textual information, enhancing our understanding of complex phenomena. The TULIP model exemplifies this integration by excelling in tasks like zero-shot classification and text-to-image retrieval through innovative techniques such as generative data augmentation and enhanced contrastive learning. By leveraging multimodal datasets, TULIP demonstrates improved performance across benchmarks like ImageNet and ObjectNet, showcasing its ability to understand fine-grained details and visual similarities effectively.
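To make zero-shot classification concrete, here is a minimal sketch of how a contrastive image-text model is typically used at inference time: the image and each candidate label prompt are embedded, and the label whose text embedding is most similar to the image embedding wins. The embeddings below are hand-written toy vectors, and the function names and the temperature value are assumptions for illustration; this is not TULIP's actual API.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale vectors along the last axis to unit length."""
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norm, eps)

def zero_shot_classify(image_emb, text_embs, labels, temperature=0.07):
    """Pick the label whose text embedding has the highest cosine
    similarity with the image embedding (hypothetical helper)."""
    img = l2_normalize(np.asarray(image_emb, dtype=float))
    txt = l2_normalize(np.asarray(text_embs, dtype=float))
    logits = txt @ img / temperature          # one score per label
    logits = logits - logits.max()            # numerical stability
    probs = np.exp(logits) / np.exp(logits).sum()
    return labels[int(np.argmax(probs))], probs

# Toy embeddings standing in for the outputs of real encoders.
labels = ["a photo of a cat", "a photo of a dog", "a photo of a car"]
text_embs = np.array([[1.0, 0.0, 0.0],
                      [0.0, 1.0, 0.0],
                      [0.0, 0.0, 1.0]])
image_emb = np.array([0.2, 0.9, 0.1])

prediction, probs = zero_shot_classify(image_emb, text_embs, labels)
print(prediction)  # prints "a photo of a dog"
```

Text-to-image retrieval uses the same similarity scores in the other direction: a single text embedding is compared against many image embeddings, and the images are ranked by score.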

Key Features of Multimodal Imaging Techniques

The advancements in multimodal imaging are not limited to models like TULIP; they also encompass novel approaches such as NORA for neural imaging. This technique enhances speed and resolution while maintaining high-quality reconstructions from sparse samples using low-rank matrix completion methods. Both models highlight the importance of combining different modalities—textual context with images or temporal correlations in video sequences—to achieve superior results in their respective fields.
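Low-rank matrix completion is commonly posed as a nuclear-norm regularized least-squares problem. The formulation below is a generic textbook version, not necessarily the exact objective used by NORA. The symbols are assumptions introduced here: X is the video arranged as a matrix with n pixels per frame and T frames, A is the sampling operator that keeps only the measured values, y holds those measurements, and λ balances data fidelity against low rank.

```latex
\hat{X} \;=\; \arg\min_{X \in \mathbb{R}^{n \times T}}
\; \frac{1}{2}\,\bigl\| \mathcal{A}(X) - y \bigr\|_2^2 \;+\; \lambda \, \|X\|_* ,
\qquad
\|X\|_* \;=\; \sum_{i} \sigma_i(X)
```

Here the nuclear norm is the sum of the singular values of X. Penalizing it favors matrices with few significant singular values, which is how the recovery exploits the strong spatial and temporal correlations in the video.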

By fostering a deeper comprehension of these technologies, researchers can explore further applications within healthcare diagnostics, neuroscience research, and beyond. The synergy between diverse methodologies paves the way for more robust systems capable of tackling intricate challenges across multiple domains.

What is TULIP?

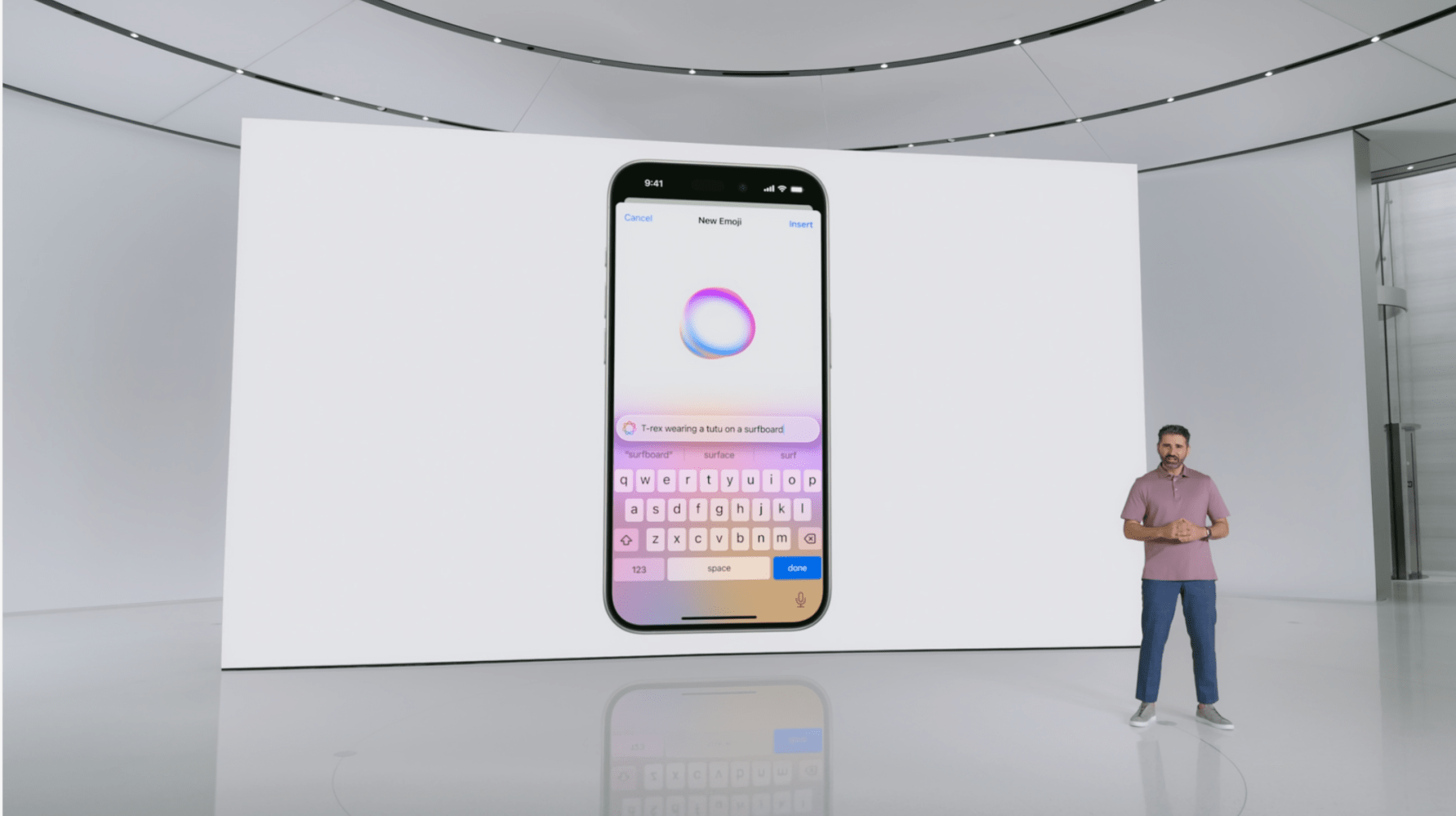

TULIP (Towards Unified Language-Image Pretraining) is a language-image pretraining model designed to improve on existing contrastive image-text models. It targets zero-shot classification, text-to-image retrieval, and linear probing by combining generative data augmentation with improved contrastive learning. Its training also includes image/text reconstruction regularization, which helps the model capture fine-grained details and visual similarities and supports tasks such as depth estimation. TULIP is evaluated on prominent datasets such as ImageNet, ObjectNet, and Winoground. Generative augmentation diversifies the training data, and the Winoground evaluation in particular showcases the model's compositional reasoning capabilities.
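The contrastive learning mentioned above can be illustrated with the symmetric image-text loss popularized by CLIP-style models. This is a minimal NumPy sketch under stated assumptions: it covers only the basic image-text term, not TULIP's additional contrastive terms, generative augmentation, or reconstruction regularization, and the function names and temperature value are illustrative.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss: row i of each input is a matching pair."""
    img = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    logits = img @ txt.T / temperature        # (N, N) similarity matrix
    idx = np.arange(logits.shape[0])
    # Image-to-text: each row should put its mass on the diagonal entry.
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    # Text-to-image: same idea along columns.
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_i2t + loss_t2i)

rng = np.random.default_rng(0)
text_embs = rng.normal(size=(4, 8))
# "Aligned" image embeddings sit close to their paired text embeddings.
aligned = text_embs + 0.01 * rng.normal(size=(4, 8))
# Reversing the order mismatches every pair.
mismatched = aligned[::-1]

loss_aligned = contrastive_loss(aligned, text_embs)
loss_mismatched = contrastive_loss(mismatched, text_embs)
print(loss_aligned, loss_mismatched)
```

Training pushes the loss down by pulling matching image-text pairs together and pushing mismatched pairs apart, which is why the aligned batch scores lower than the mismatched one.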

Key Features of TULIP

According to its evaluations, TULIP improves on state-of-the-art models across several benchmarks. By combining data augmentation strategies with its training methodology, it addresses limitations of earlier frameworks. This makes it a useful tool for researchers exploring further multimodal applications, and it may lead to new results in AI-driven visual comprehension tasks.

Exploring NORA's Capabilities

NORA, or Neuroimaging with Oblong Random Acquisition, is an approach to fast two-photon microscopy that aims to increase imaging speed while preserving resolution. It subsamples scan lines and uses an elongated point-spread function (PSF), then reconstructs the video through nuclear-norm minimization. Because the information content is diversified across frames, NORA can recover full video sequences from sparse samples, significantly reducing data acquisition time while maintaining high-quality images. The recovery algorithm is a low-rank matrix completion technique that exploits spatial and temporal correlations in the data. As a result, NORA is reported to be robust to extreme subsampling and motion artifacts.
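The recovery idea can be sketched with a generic nuclear-norm solver. The example below uses the Soft-Impute iteration (repeated singular-value soft-thresholding with a decreasing threshold) on a synthetic low-rank "video" matrix. It is a toy illustration under several assumptions: entries are dropped at random rather than by scan line, the elongated PSF is not modeled, and the function names, matrix sizes, and parameters are all hypothetical. It is not NORA's actual reconstruction code.

```python
import numpy as np

def svd_soft_threshold(M, tau):
    """Proximal step for the nuclear norm: shrink singular values by tau."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt

def complete_low_rank(Y, mask, tau_final=0.1, decay=0.9, n_iters=200):
    """Soft-Impute with a geometrically decreasing threshold.

    Y    : matrix holding the observed values (anything where mask is False)
    mask : boolean matrix, True where an entry was measured
    """
    X = np.zeros_like(Y)
    tau = np.linalg.norm(np.where(mask, Y, 0.0), 2)  # largest singular value
    for _ in range(n_iters):
        # Keep measured entries, fill the rest with the current estimate.
        filled = np.where(mask, Y, X)
        X = svd_soft_threshold(filled, tau)
        tau = max(tau * decay, tau_final)
    return X

# Synthetic rank-2 "video": rows are pixels, columns are frames.
rng = np.random.default_rng(0)
n_pixels, n_frames, rank = 100, 80, 2
video = rng.normal(size=(n_pixels, rank)) @ rng.normal(size=(rank, n_frames))

# Measure roughly half of the entries.
mask = rng.random(video.shape) < 0.5
observed = np.where(mask, video, 0.0)

recovered = complete_low_rank(observed, mask)

# Relative error on the entries that were never measured.
missing = ~mask
rel_err = (np.linalg.norm((recovered - video)[missing])
           / np.linalg.norm(video[missing]))
print(f"relative error on unmeasured entries: {rel_err:.4f}")
```

The recovery works here because the toy video is exactly low rank, so the unmeasured entries are strongly constrained by the measured ones. Real recordings are only approximately low rank, so results will depend on the data and on the sampling pattern.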

Key Features of NORA

NORA’s capabilities extend beyond mere speed; it simplifies complex imaging pathways essential for neuroscience research. Its advanced techniques allow researchers to capture neural activity at unprecedented frame rates without compromising detail integrity. Furthermore, the integration of penalized matrix decomposition enhances noise resilience during functional optical microscopy analysis. With applications ranging from basic neuroscience studies to clinical settings requiring rapid imaging solutions, NORA stands as a transformative tool that promises significant advancements in our understanding of neural dynamics and related phenomena within living tissues.

The Synergy of TULIP and NORA

Pairing TULIP with NORA suggests a possible approach to multimodal imaging, particularly in neural research. TULIP's strengths in zero-shot classification and text-to-image retrieval could complement NORA's rapid imaging capabilities: NORA would supply high-resolution video reconstructed through low-rank matrix completion, and a model trained with TULIP's generative data augmentation and enhanced contrastive learning could help interpret and label those images. Such a pipeline could shorten data acquisition and move toward near-real-time analysis of neural activity.

Enhanced Data Processing Techniques

Combining the two could support data processing methods that make the most of both spatial and temporal resolution in microscopy. NORA's reconstruction algorithms provide the images, and TULIP's compositional reasoning abilities could help produce more accurate descriptions of complex neural structures. This combination may also open avenues for applications such as improved diagnostics in clinical settings or deeper insights into neurological disorders through visual analytics. Together, they illustrate how AI-driven methods can advance our understanding of intricate biological systems.

Real-World Applications in Healthcare

The integration of advanced imaging techniques like TULIP and NORA is revolutionizing healthcare, particularly in diagnostics and treatment planning. TULIP's ability to perform zero-shot classification and text-to-image retrieval enhances the accuracy of medical image analysis, allowing for more precise identification of conditions from multimodal data sources. For instance, radiologists can leverage TULIP to analyze X-rays or MRIs alongside textual patient histories, improving diagnostic confidence.

NORA complements this by enabling ultra-fast neural imaging with high-resolution outputs crucial for observing dynamic biological processes. Its capability to recover full video sequences from sparse samples allows researchers to monitor neuronal activity in real-time during various physiological states. This synergy not only streamlines workflows but also enhances our understanding of complex diseases such as Alzheimer's or Parkinson's through detailed visualization.

Enhanced Diagnostic Accuracy

Combining these technologies facilitates a comprehensive approach to patient care. By integrating visual data with contextual information, healthcare professionals can make informed decisions swiftly, ultimately leading to better outcomes and personalized treatment plans tailored specifically for individual patients' needs.

Future Trends in Multimodal Imaging

The future of multimodal imaging is poised for transformative advancements, driven by innovative models like TULIP and NORA. These technologies not only enhance the integration of diverse data types but also improve accuracy and efficiency in various applications. The synergy between generative data augmentation techniques and advanced reconstruction algorithms will lead to more robust systems capable of handling complex tasks such as zero-shot classification and real-time neural imaging. As these models evolve, we can expect significant improvements in areas like telemedicine, where rapid image processing combined with detailed analysis will facilitate better diagnostic capabilities.

Advancements on the Horizon

Emerging trends indicate a shift towards greater automation within multimodal frameworks, leveraging artificial intelligence to streamline workflows across healthcare settings. Additionally, developments in low-rank matrix completion methods promise enhanced performance in sparse sampling scenarios typical of high-speed imaging environments. Furthermore, interdisciplinary collaborations are likely to yield novel applications that blend neuroscience with machine learning techniques, resulting in groundbreaking insights into brain function and disorders. Overall, the trajectory suggests an exciting era for multimodal imaging characterized by increased accessibility and improved patient outcomes through cutting-edge technology integration.

In conclusion, TULIP and NORA illustrate the potential of multimodal imaging in healthcare and research. TULIP links images with text for tasks such as zero-shot classification and retrieval, while NORA speeds up two-photon imaging through sparse sampling and low-rank reconstruction; together they could offer a useful combination for clinicians and researchers. The applications discussed above point to neurology in particular, where detailed visualization may support the study of diseases such as Alzheimer's and Parkinson's and more personalized treatment plans. Looking ahead, advances in multimodal imaging promise improved methods and broader access to diagnostics across medical fields. Healthcare professionals who want to use technology for better patient care will benefit from following this evolution.

FAQs on "Unlocking Multimodal Mastery: The Power of TULIP and NORA in Imaging"

1. What is multimodal imaging, and why is it important?

Multimodal imaging refers to the integration of different imaging techniques to provide a comprehensive view of biological processes or structures. It combines modalities like MRI, CT, PET, and ultrasound to enhance diagnostic accuracy and treatment planning in healthcare.

2. What does TULIP stand for, and what are its main features?

TULIP stands for "Towards Unified Language-Image Pretraining." It is a language-image pretraining model that improves on existing contrastive image-text models. Its main features include generative data augmentation, enhanced contrastive learning, and image/text reconstruction regularization, which support zero-shot classification, text-to-image retrieval, and linear probing.

3. How does NORA differ from traditional imaging methods?

NORA (Neuroimaging with Oblong Random Acquisition) is an approach to fast two-photon microscopy. Instead of scanning every line of every frame, it subsamples scan lines using an elongated point-spread function and then reconstructs the full video through low-rank matrix completion based on nuclear-norm minimization, exploiting spatial and temporal correlations in the data.

4. In what ways can TULIP and NORA work together effectively?

TULIP's image-text understanding could be combined with NORA's fast acquisition and reconstruction. NORA supplies high-quality video from sparse samples, and TULIP could help classify or retrieve those images using text, allowing more accurate interpretation of complex datasets.

5. What are some real-world applications of TULIP and NORA in healthcare?

In healthcare settings, TULIP and NORA can be applied in areas such as cancer detection through enhanced tumor visualization across different scans or monitoring chronic diseases by integrating functional information from various imaging types into a cohesive analysis framework for better patient outcomes.
