YouTube hates AI movie trailers as much as I do

Bullshit trailers are a blight on YouTube. And they have been for a long time. Just throw together clips of older movies and actors, title it “The Dark Knight Rises 2: Robin’s First Flight,” and sit back and watch the clicks (and the ad revenue) roll in. But with generative “AI” videos now just a few clicks away, they’ve become an infestation, clogging up every relevant search. YouTube has finally had enough.

AI-generated trailers have created a huge crop of videos that do nothing but lie to viewers by stealing a movie studio’s IP and then regurgitating it back at you, all delivered with a tiny “concept” disclaimer somewhere in the description text (and often not even that). It is genuinely horrible stuff, all the more detestable because it takes about three minutes of human work and hours upon hours of datacenter computation, boiling the planet and benefiting no one and nothing in the process. I have half a mind to

[Editor’s note: At this point Michael ranted for approximately 1500 words on the evils of the AI industry and those who use its products. Terms like “perfidious” and “blatherskite” were used, along with some shorter ones that we won’t repeat. Suffice it to say, he is rather upset.]

After letting them run rampant for a couple of years, it looks like YouTube is finally cracking down on these slop factories. Deadline reports that YouTube has suspended a total of four separate channels dedicated to AI-generated trailers for fake movies (or real, upcoming movies that don’t have trailers yet). Two were suspended back in March, and their alternate channels have now been smacked with the same banhammer.

The channels, allegedly created by just two individual users, are not actually removed from the platform. But they cannot monetize their videos, and are presumably suffering some pretty big losses in search visibility as well. Combined, the initial two channels had more than two million subscribers.

Deadline doesn’t have specific statements from YouTube on what policies the channels violated, but speculates that YouTube finally decided to enforce its misinformation policy, basic original material policies that mirror US copyright and fair use rules, and guidelines that deter uploaders from creating videos with the “sole purpose of getting views.”

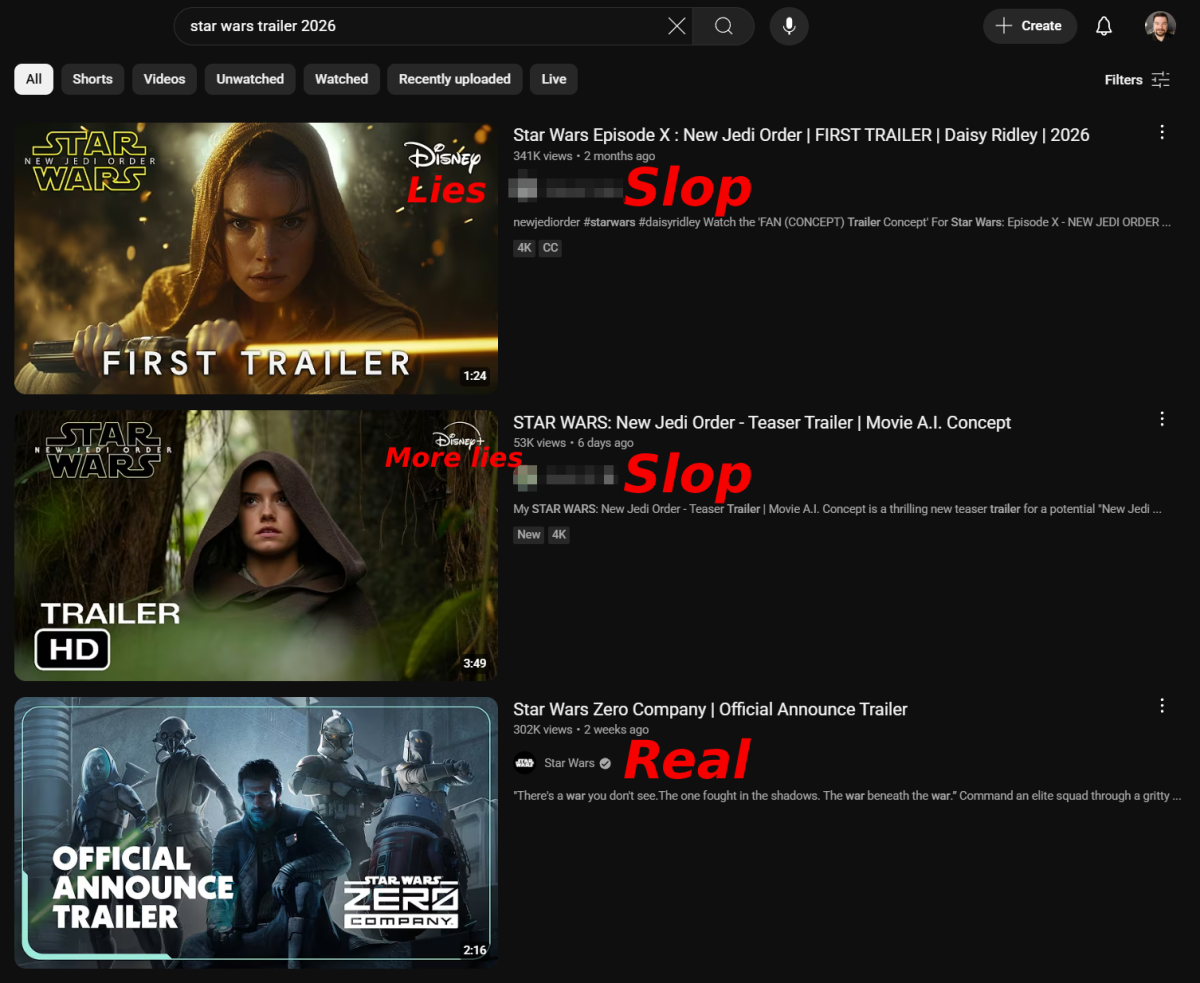

It didn’t take long to find an example of this slop. A search for “star wars trailer 2026” shows two blatantly fake AI trailers, complete with Disney logos on the thumbnails, popping up in search ahead of the first results from the official Star Wars channel itself. The latest one was generated less than a week ago, splicing clips from the real movies together with AI-generated video and narration trained on the actors’ voices. Awkward and unconvincing shifts between short splices of video show the current limitations of the technology, even after its rapid progress.

A Deadline report in March brought broader attention to the AI trailer problem, highlighting that some Hollywood studios have chosen to use YouTube’s content flagging system to simply claim the ad revenue from the fake trailers rather than getting them removed. After all, if someone else is doing all the “work” and getting paid, why try to protect your intellectual property and artistic integrity when you can just grab the money instead? Turning off the monetization faucet for these channels might get the studios to finally enforce their own copyright, now that the money is gone.

YouTube continues to suffer from an absolute flood of AI slop from every direction. AI-generated video, narration, and even scripts are becoming a larger presence on the platform as a whole, particularly in YouTube Shorts, mirroring pretty much every social network on the web. This extremely basic enforcement of YouTube’s policies aside, the platform doesn’t seem all that interested in stemming the tide…and perhaps that has something to do with parent company Google selling its own generative AI products, and integrating them into YouTube itself.
