Adapting Technical Interviews to Counter AI-Assisted Cheating

I've been hiring developers for over 10 years, first as a senior engineer and, for the last two years, as a dedicated technical recruiter. During that time, I’ve reviewed dozens of take-home projects, sat in on numerous whiteboard sessions, and watched interview trends evolve.

But lately, something’s changed.

Candidates are using AI tools like ChatGPT and GitHub Copilot to pass technical interviews, often in ways that bypass actual engineering judgment. I’m not against AI—it's a powerful tool when used responsibly. But when it's used to mask a candidate’s true skill level, it undermines the purpose of technical hiring.

This article outlines some practical strategies and observations I’ve developed to help design interviews that are fairer, more insightful, and harder to game with AI.

Personalize the Problem

Generic problems are the easiest to solve with AI. So I’ve started giving contextual, personalized exercises that reflect challenges from our actual codebase or systems.

For example:

“Here’s a simplified version of our logging system. We recently had issues with performance under load. What would you change, and why?”

This forces the candidate to think beyond “just code” and engage with the problem like an engineer, not a copy-paste operator.
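As an illustration, here is a minimal sketch of what such an exercise could look like. Everything in it is hypothetical: the `NaiveLogger` and `BufferedLogger` classes, the `batch_size` parameter, and the `flush_count` counter (a stand-in for expensive disk I/O) are invented for this example and do not come from any real codebase. The first class is the kind of "before" code I might hand over; the second is one possible direction a candidate could take, not the expected answer.

```python
import io


class NaiveLogger:
    """Hypothetical 'before' version: flushes the sink on every message."""

    def __init__(self, sink: io.StringIO) -> None:
        self.sink = sink
        self.flush_count = 0  # stand-in for the number of expensive I/O calls

    def log(self, message: str) -> None:
        self.sink.write(message + "\n")
        self.sink.flush()
        self.flush_count += 1


class BufferedLogger:
    """One possible answer: batch messages and flush every `batch_size` entries."""

    def __init__(self, sink: io.StringIO, batch_size: int = 100) -> None:
        self.sink = sink
        self.batch_size = batch_size
        self.buffer = []  # messages waiting to be written
        self.flush_count = 0

    def log(self, message: str) -> None:
        self.buffer.append(message)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Nothing to write, so skip the I/O call entirely.
        if not self.buffer:
            return
        self.sink.write("\n".join(self.buffer) + "\n")
        self.sink.flush()
        self.flush_count += 1
        self.buffer.clear()
```

The code itself matters less than the conversation it opens. Batching cuts the number of flushes, but buffered messages can be lost if the process crashes before the next flush, and a candidate who raises that trade-off unprompted is telling me a lot.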

Use Live, Interactive Interviews

Cheating is harder when someone’s watching. I run most coding interviews live, using platforms like CoderPad or VS Code Live Share. Cameras are on, code is shared, and I ask candidates to think out loud as they work.

This reveals so much more than a finished solution. I’m not just hiring for correct syntax—I’m looking for how they reason, debug, and adjust when they hit a wall.

Focus on Thought Process Over Output

I’ve learned to push beyond the “Did it run?” mindset. Instead, I ask:

- Why did you choose that approach?

- What’s the trade-off of doing it this way?

- What would break if the data doubled?

AI can give you an answer. It can’t (yet) explain why that answer matters in context.

Break Challenges into Timed Parts

Rather than one long take-home, I now use multi-part, timed challenges during live sessions. For example:

- Part 1: Quick algorithm

- Part 2: Real-world refactor

- Part 3: Code review or optimization

I tell them upfront: “You probably won’t finish it all.” That’s the point. I’m looking at how they prioritize, communicate, and think under fair pressure, not just what they ship.

Add a Bit of Ambiguity

Real-world specs are rarely perfect, so I sometimes leave edge cases open-ended. I want to see:

- Do they ask clarifying questions?

- Do they make smart assumptions?

- Do they catch corner cases without prompting?

This is where junior devs and senior engineers start to show their differences.
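To make this concrete, here is a small sketch of a deliberately underspecified prompt, "return the average latency," together with one way a candidate might resolve the gaps. The function name `average_latency_ms`, the use of `None` for timed-out requests, and the three assumptions in the comments are all hypothetical choices made for this example. Other resolutions are just as defensible, as long as the candidate states them.

```python
from typing import Iterable, Optional


def average_latency_ms(samples: Iterable[Optional[float]]) -> Optional[float]:
    """Return the mean latency in milliseconds, or None if there is no data."""
    # Assumption 1 (hypothetical): None marks a request that timed out,
    # so it is skipped rather than counted as zero.
    valid = [s for s in samples if s is not None]

    # Assumption 2 (hypothetical): a negative latency is a data error,
    # so fail loudly instead of averaging it in.
    if any(s < 0 for s in valid):
        raise ValueError("latency samples must be non-negative")

    # Assumption 3 (hypothetical): with no valid samples, return None
    # rather than raising ZeroDivisionError.
    if not valid:
        return None

    return sum(valid) / len(valid)
```

The prompt never says what to do with an empty list, a missing value, or a bad reading. Whether the candidate asks about those cases, or at least writes the assumptions down, is the signal I’m after.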

Ask Open-Ended, Non-Code Questions

I’ve also added open-ended discussions into my process. Things like:

- “How would you design a feature to reduce backend load?”

- “How would you onboard a junior into a legacy system?”

- “How do you approach technical debt?”

These are the kinds of questions you can’t just Google or AI your way through—they demand real experience and opinions.

Set Boundaries on AI Use

For take-home assignments, I now include a clear statement:

“This test must be completed without the assistance of AI tools such as ChatGPT, Copilot, or similar.”

Is it foolproof? No. But it sets the tone—and the ethical candidates take it seriously.

I’m not trying to “catch” candidates using AI. I’m trying to design a process where genuine skill and thought shine through, regardless of whether a candidate has ChatGPT open in another tab. AI is changing the game, and as interviewers, we need to adapt—not by being punitive, but by being thoughtful.

AI is here to stay. I believe it is meant to extend what humans can do (think Iron Man), not to replace developers (think androids). Still, you need to be the best you can be with the tool, and it is individuals whom people trust to handle ethics.

If you're also hiring for technical roles and seeing similar patterns, I hope these ideas help you refine your process.

Let’s keep interviews human—and fair.

AI usage:

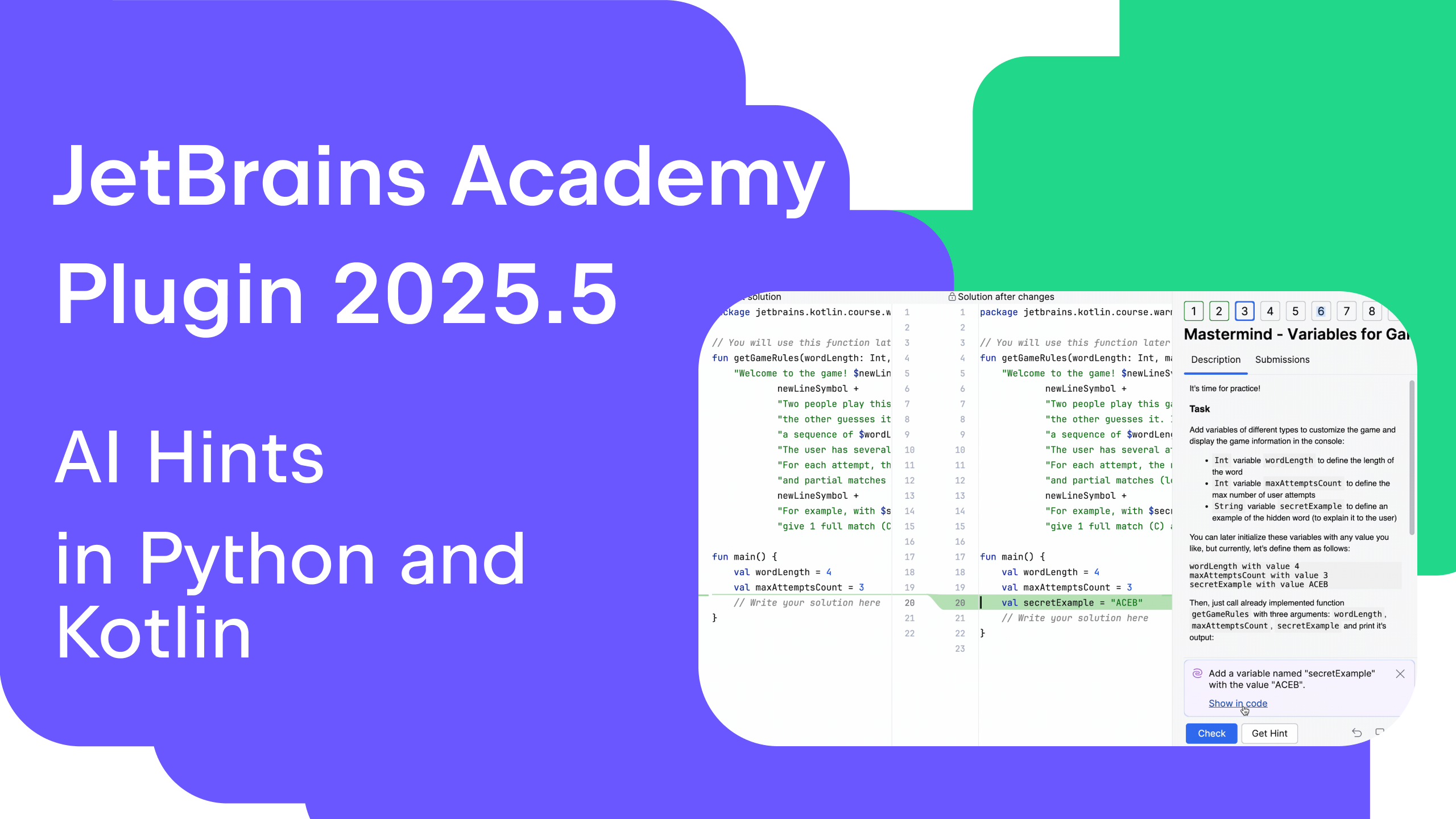

- ChatGPT for the cover image

- Grammarly for final editing
