AI Fact-Checks Itself: Detects Hallucinated Concepts in Chatbots

This is a Plain English Papers summary of a research paper called AI Fact-Checks Itself: Detects Hallucinated Concepts in Chatbots. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

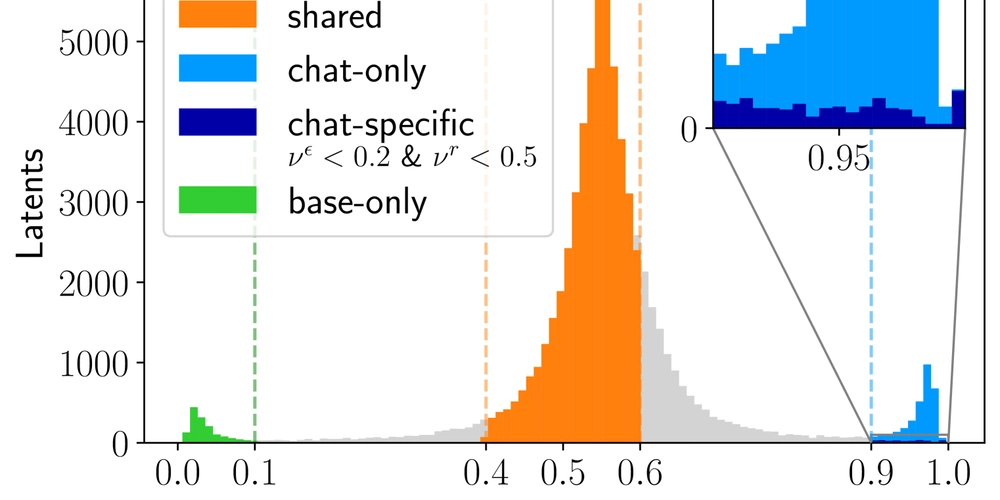

- Researchers developed a robust method to identify hallucinated concepts in language models

- Used a crosscoder architecture to detect concepts introduced during chat fine-tuning

- The method detected problematic concepts such as "REaLM" in Claude and "System 1/2" in GPT-4

- Outperformed traditional embedding-similarity approaches

- The technique could improve AI safety by identifying concepts that weren't in the pre-training data
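The summary does not show how a crosscoder flags concepts introduced by fine-tuning. As a hedged illustration, assume the common crosscoder setup in which every dictionary latent has one decoder vector for the base model and another for the chat model. A latent can then be scored by the share of its decoder norm that sits on the chat side: a share near 1 suggests a chat-only concept, near 0 a base-only one, and around 0.5 a shared one. The helper names (`relative_decoder_norm`, `flag_chat_only`), the 0.9 threshold, and the toy weights are hypothetical and not taken from the paper; training the crosscoder itself is out of scope here.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l2_norm(v: Vector) -> float:
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


def relative_decoder_norm(d_base: Vector, d_chat: Vector) -> float:
    """Fraction of a latent's total decoder norm that belongs to the chat model.

    Assumed scoring rule (illustrative): ||d_chat|| / (||d_base|| + ||d_chat||).
    Near 0 -> mostly reconstructs the base model; near 1 -> mostly the chat model.
    """
    nb, nc = l2_norm(d_base), l2_norm(d_chat)
    total = nb + nc
    if total == 0.0:
        return 0.5  # dead latent: treat as uninformative rather than chat-specific
    return nc / total


def flag_chat_only(
    dec_base: List[Vector], dec_chat: List[Vector], threshold: float = 0.9
) -> List[int]:
    """Return indices of latents whose chat-side share exceeds `threshold`."""
    if len(dec_base) != len(dec_chat):
        raise ValueError("both decoders must have the same number of latents")
    return [
        i
        for i, (db, dc) in enumerate(zip(dec_base, dec_chat))
        if relative_decoder_norm(db, dc) > threshold
    ]


# Hypothetical toy decoders: one row per latent, one column per activation dim.
dec_base = [[1.0, 0.0], [0.6, 0.8], [0.0, 0.05]]
dec_chat = [[0.9, 0.1], [0.0, 0.0], [3.0, 4.0]]

print(flag_chat_only(dec_base, dec_chat))  # → [2]
```

In this toy case latent 0 is roughly shared, latent 1 exists only in the base model, and latent 2 is almost entirely chat-side, so only latent 2 is flagged. A norm-based score like this is cheap because it needs only the trained decoder weights, not any extra forward passes.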

Plain English Explanation

When companies take large language models and fine-tune them to be helpful assistants, new concepts that weren't in the original training data sometimes get introduced. This becomes a problem when the model insists these concepts are real even though they aren't.

Think of i...
