backup and restore etcd


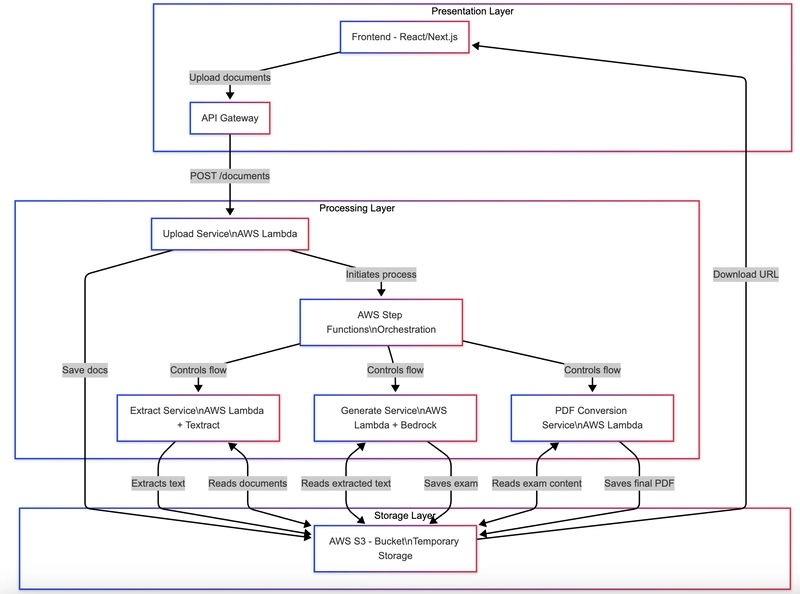

Set up a self-managed Kubernetes cluster using kubeadm. The infrastructure steps are:

- Create a VPC and subnets. In this lab the VPC uses the CIDR block 77.132.0.0/16, with 3 public subnets of size /24.

- Deploy 3 EC2 instances, one per node. One becomes the control plane node and the others become worker nodes.

- Create 2 security groups, one for the control plane node and one for the worker nodes.

- Set up the network access control list (NACL).

- Associate an Elastic IP with each instance.

- Attach an internet gateway to the VPC.

- Create a route table and associate it with the subnets.

Once the infrastructure, for example the EC2 instances, is up and running, continue with the node setup.
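The subnet sizing in the first step can be checked with a little CIDR arithmetic. The following is a minimal sketch using Python's standard `ipaddress` module; the choice of the first three /24 blocks is an assumption for illustration, since the lab's real subnet values come from the Pulumi stack configuration and are not shown here.

```python
import ipaddress

# VPC CIDR block taken from the lab description.
VPC_CIDR = ipaddress.ip_network("77.132.0.0/16")


def carve_subnets(vpc, prefix, count):
    """Return the first `count` subnets of size /prefix inside `vpc`."""
    subnets = []
    for subnet in vpc.subnets(new_prefix=prefix):
        if len(subnets) == count:
            break
        subnets.append(subnet)
    return subnets


# Hypothetical choice: the first three /24 blocks. The lab's real values
# are read from the stack configuration and may differ.
public_subnets = carve_subnets(VPC_CIDR, 24, 3)

for subnet in public_subnets:
    # A /24 holds 256 addresses; AWS reserves 5 of them in every subnet.
    print(subnet, "usable hosts on AWS:", subnet.num_addresses - 5)
```

Any three non-overlapping /24 blocks inside the /16 work equally well; `subnet_of` can confirm that a candidate block really sits inside the VPC range.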

Note:

The control plane node needs these ports open for its components:

- kube-apiserver: port 6443

- etcd: ports 2379-2380

- kube-scheduler: port 10259

- kube-controller-manager: port 10257

- SSH: port 22, only from my IP address (/32)

The worker nodes need these ports:

- kubelet: port 10250

- kube-proxy: port 10256

- NodePort services: ports 30000-32767

- SSH: port 22, only from my IP address (/32)

We add these ports to the control plane security group and the worker security group.
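To verify the security groups after deployment, the port list can be turned into a small reachability check. This is a sketch under assumptions: the port numbers are the Kubernetes defaults, `check_node` and `port_is_open` are hypothetical helper names, and the check has to run from a machine that the security group rules actually admit (for example another instance inside the VPC).

```python
import socket

# Default ports per node role (Kubernetes defaults).
REQUIRED_PORTS = {
    "control-plane": {
        "kube-apiserver": [6443],
        "etcd": [2379, 2380],
        "kube-scheduler": [10259],
        "kube-controller-manager": [10257],
    },
    "worker": {
        "kubelet": [10250],
        "kube-proxy": [10256],
    },
}


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_node(host, role, probe=port_is_open):
    """Map 'component:port' to reachability for every port of a role."""
    results = {}
    for component, ports in REQUIRED_PORTS[role].items():
        for port in ports:
            results[f"{component}:{port}"] = probe(host, port)
    return results
```

A call such as `check_node("77.132.100.111", "control-plane")` returns one boolean per port. A `False` does not necessarily point at the security group: some components may listen only on the loopback interface, in which case the port is closed from the outside regardless of the rules.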

Before starting the setup, update and upgrade the packages with sudo apt update -y && sudo apt upgrade -y

Steps to set up the control plane node as the master node:

- Disable swap.

- Update the kernel parameters.

- Install the container runtime.

- Install runc.

- Install the CNI plugins.

- Install kubeadm, kubelet and kubectl, and check their versions.

- Initialize the control plane node using kubeadm and print the output token, which lets the worker nodes join.

- Install the Calico CNI plugin.

- Use kubeadm join to join the workers to the master.

Optional: you can change the hostname on the nodes, for example to worker1 and worker2 for the worker nodes.

Steps to set up the worker nodes:

- Disable swap.

- Update the kernel parameters.

- Install the container runtime.

- Install runc.

- Install the CNI plugins.

- Install kubeadm, kubelet and kubectl, and check their versions.

- Use kubeadm join to join the master.
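Both step lists start with disabling swap and updating the kernel parameters, and kubeadm complains when either is missed. A minimal preflight sketch, assuming a Linux node where `/proc/swaps` and `/proc/sys/net/ipv4/ip_forward` are readable; the function names are hypothetical and the check covers only these two settings:

```python
from pathlib import Path


def swap_is_enabled(proc_swaps_text):
    """/proc/swaps has one header line; any further line is an active swap device."""
    lines = [line for line in proc_swaps_text.splitlines() if line.strip()]
    return len(lines) > 1


def ip_forward_is_enabled(value_text):
    """/proc/sys/net/ipv4/ip_forward contains '1' when forwarding is on."""
    return value_text.strip() == "1"


def preflight(swaps_path="/proc/swaps",
              forward_path="/proc/sys/net/ipv4/ip_forward"):
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    if swap_is_enabled(Path(swaps_path).read_text()):
        problems.append("swap is still enabled (run: sudo swapoff -a)")
    if not ip_forward_is_enabled(Path(forward_path).read_text()):
        problems.append("net.ipv4.ip_forward is not 1")
    return problems
```

Running `preflight()` on a node before `kubeadm init` or `kubeadm join` gives a quick list of what still needs fixing.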

Note: copy the kubeconfig from the control plane node to .kube/config on the worker nodes.

On the control plane node, run cd .kube/ and cat config, then copy the output to each worker node.

Note: you can deploy the infrastructure from the console or as infrastructure as code. In this lab, I used Pulumi with Python to provision the resources.

"""An AWS Python Pulumi program"""

import pulumi , pulumi_aws as aws , json

cfg1=pulumi.Config()

vpc1=aws.ec2.Vpc(

"vpc1",

aws.ec2.VpcArgs(

cidr_block=cfg1.require(key='block1'),

tags={

"Name": "vpc1"

}

)

)

intgw1=aws.ec2.InternetGateway(

"intgw1",

aws.ec2.InternetGatewayArgs(

vpc_id=vpc1.id,

tags={

"Name": "intgw1"

}

)

)

zones="us-east-1a"

publicsubnets=["subnet1","subnet2","subnet3"]

cidr1=cfg1.require(key="cidr1")

cidr2=cfg1.require(key="cidr2")

cidr3=cfg1.require(key="cidr3")

cidrs=[ cidr1 , cidr2 , cidr3 ]

for allsubnets in range(len(publicsubnets)):
    # replace each subnet name in the list with the created Subnet resource
    publicsubnets[allsubnets]=aws.ec2.Subnet(
        publicsubnets[allsubnets],
        aws.ec2.SubnetArgs(
            vpc_id=vpc1.id,
            cidr_block=cidrs[allsubnets],
            map_public_ip_on_launch=False,
            availability_zone=zones,
            tags={
                "Name" : publicsubnets[allsubnets]
            }
        )
    )

table1=aws.ec2.RouteTable(

"table1",

aws.ec2.RouteTableArgs(

vpc_id=vpc1.id,

routes=[

aws.ec2.RouteTableRouteArgs(

cidr_block=cfg1.require(key="any_ipv4_traffic"),

gateway_id=intgw1.id

)

],

tags={

"Name" : "table1"

}

)

)

associate1=aws.ec2.RouteTableAssociation(

"associate1",

aws.ec2.RouteTableAssociationArgs(

subnet_id=publicsubnets[0].id,

route_table_id=table1.id

)

)

associate2=aws.ec2.RouteTableAssociation(

"associate2",

aws.ec2.RouteTableAssociationArgs(

subnet_id=publicsubnets[1].id,

route_table_id=table1.id

)

)

associate3=aws.ec2.RouteTableAssociation(

"associate3",

aws.ec2.RouteTableAssociationArgs(

subnet_id=publicsubnets[2].id,

route_table_id=table1.id

)

)

ingress_traffic=[

aws.ec2.NetworkAclIngressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="myips"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=100

),

aws.ec2.NetworkAclIngressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="deny",

rule_no=101

),

aws.ec2.NetworkAclIngressArgs(

from_port=80,

to_port=80,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=200

),

aws.ec2.NetworkAclIngressArgs(

from_port=443,

to_port=443,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=300

),

aws.ec2.NetworkAclIngressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=400

)

]

egress_traffic=[

aws.ec2.NetworkAclEgressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="myips"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=100

),

aws.ec2.NetworkAclEgressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="deny",

rule_no=101

),

aws.ec2.NetworkAclEgressArgs(

from_port=80,

to_port=80,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=200

),

aws.ec2.NetworkAclEgressArgs(

from_port=443,

to_port=443,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=300

),

aws.ec2.NetworkAclEgressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=400

)

]

nacls1=aws.ec2.NetworkAcl(

"nacls1",

aws.ec2.NetworkAclArgs(

vpc_id=vpc1.id,

ingress=ingress_traffic,

egress=egress_traffic,

tags={

"Name" : "nacls1"

},

)

)

nacllink1=aws.ec2.NetworkAclAssociation(

"nacllink1",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=nacls1.id,

subnet_id=publicsubnets[0].id

)

)

nacllink2=aws.ec2.NetworkAclAssociation(

"nacllink2",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=nacls1.id,

subnet_id=publicsubnets[1].id

)

)

nacllink3=aws.ec2.NetworkAclAssociation(

"nacllink3",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=nacls1.id,

subnet_id=publicsubnets[2].id

)

)

masteringress=[

aws.ec2.SecurityGroupIngressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_blocks=[cfg1.require(key="myips")],

),

aws.ec2.SecurityGroupIngressArgs(

from_port=6443,

to_port=6443,

protocol="tcp",

cidr_blocks=[vpc1.cidr_block]

),

aws.ec2.SecurityGroupIngressArgs(

from_port=2379,

to_port=2380,

protocol="tcp",

cidr_blocks=[vpc1.cidr_block]

),

aws.ec2.SecurityGroupIngressArgs(

from_port=10249,

to_port=10260,

protocol="tcp",

cidr_blocks=[vpc1.cidr_block]

)

]

masteregress=[

aws.ec2.SecurityGroupEgressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_blocks=[cfg1.require(key="any_ipv4_traffic")]

),

]

mastersecurity=aws.ec2.SecurityGroup(

"mastersecurity",

aws.ec2.SecurityGroupArgs(

vpc_id=vpc1.id,

name="mastersecurity",

ingress=masteringress,

egress=masteregress,

tags={

"Name" : "mastersecurity"

}

)

)

workeringress=[

aws.ec2.SecurityGroupIngressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_blocks=[cfg1.require(key="myips")]

),

aws.ec2.SecurityGroupIngressArgs(

from_port=10250,

to_port=10250,

protocol="tcp",

cidr_blocks=[vpc1.cidr_block]

),

aws.ec2.SecurityGroupIngressArgs(

from_port=10256,

to_port=10256,

protocol="tcp",

cidr_blocks=[vpc1.cidr_block]

),

aws.ec2.SecurityGroupIngressArgs(

from_port=30000,

to_port=32767,

protocol="tcp",

cidr_blocks=[vpc1.cidr_block]

),

aws.ec2.SecurityGroupIngressArgs(

from_port=443,

to_port=443,

protocol="tcp",

cidr_blocks=[cfg1.require(key="any_ipv4_traffic")]

),

aws.ec2.SecurityGroupIngressArgs(

from_port=80,

to_port=80,

protocol="tcp",

cidr_blocks=[cfg1.require(key="any_ipv4_traffic")]

)

]

workeregress=[

aws.ec2.SecurityGroupEgressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_blocks=[cfg1.require(key="any_ipv4_traffic")]

),

]

workersecurity=aws.ec2.SecurityGroup(

"workersecurity",

aws.ec2.SecurityGroupArgs(

vpc_id=vpc1.id,

name="workersecurity",

ingress=workeringress,

egress=workeregress,

tags={

"Name" : "workersecurity"

}

)

)

master=aws.ec2.Instance(

"master",

aws.ec2.InstanceArgs(

ami=cfg1.require(key='ami'),

instance_type=cfg1.require(key='instance-type'),

vpc_security_group_ids=[mastersecurity.id],

subnet_id=publicsubnets[0].id,

availability_zone=zones,

key_name="mykey1",

tags={

"Name" : "master"

},

ebs_block_devices=[

aws.ec2.InstanceEbsBlockDeviceArgs(

device_name="/dev/sdm",

volume_size=8,

volume_type="gp3"

)

]

)

)

worker1=aws.ec2.Instance(

"worker1",

aws.ec2.InstanceArgs(

ami=cfg1.require(key='ami'),

instance_type=cfg1.require(key='instance-type'),

vpc_security_group_ids=[workersecurity.id],

subnet_id=publicsubnets[1].id,

availability_zone=zones,

key_name="mykey2",

tags={

"Name" : "worker1"

},

ebs_block_devices=[

aws.ec2.InstanceEbsBlockDeviceArgs(

device_name="/dev/sdb",

volume_size=8,

volume_type="gp3"

)

],

)

)

worker2=aws.ec2.Instance(

"worker2",

aws.ec2.InstanceArgs(

ami=cfg1.require(key='ami'),

instance_type=cfg1.require(key='instance-type'),

vpc_security_group_ids=[workersecurity.id],

subnet_id=publicsubnets[2].id,

key_name="mykey2",

tags={

"Name" : "worker2"

},

ebs_block_devices=[

aws.ec2.InstanceEbsBlockDeviceArgs(

device_name="/dev/sdc",

volume_size=8,

volume_type="gp3"

)

],

)

)

eips=[ "eip1" , "eip2" , "eip3" ]

for alleips in range(len(eips)):
    # replace each name in the list with the allocated Elastic IP resource
    eips[alleips]=aws.ec2.Eip(
        eips[alleips],
        aws.ec2.EipArgs(
            domain="vpc",
            tags={
                "Name" : eips[alleips]
            }
        )
    )

eiplink1=aws.ec2.EipAssociation(

"eiplink1",

aws.ec2.EipAssociationArgs(

allocation_id=eips[0].id,

instance_id=master.id

)

)

eiplink2=aws.ec2.EipAssociation(

"eiplink2",

aws.ec2.EipAssociationArgs(

allocation_id=eips[1].id,

instance_id=worker1.id

)

)

eiplink3=aws.ec2.EipAssociation(

"eiplink3",

aws.ec2.EipAssociationArgs(

allocation_id=eips[2].id,

instance_id=worker2.id

)

)

pulumi.export("master_eip" , value=eips[0].public_ip )

pulumi.export("worker1_eip", value=eips[1].public_ip )

pulumi.export("worker2_eip", value=eips[2].public_ip )

pulumi.export("master_private_ip", value=master.private_ip)

pulumi.export("worker1_private_ip" , value=worker1.private_ip)

pulumi.export( "worker2_private_ip" , value=worker2.private_ip )
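The NACL rules in the program above rely on rule ordering: AWS evaluates NACL entries from the lowest rule number upward and applies the first match. The sketch below simulates that behaviour in plain Python for the ingress list. The `Rule` class and `evaluate` function are hypothetical helpers, and the two CIDR values are assumptions standing in for the `myips` and `any_ipv4_traffic` configuration keys, whose real values are not shown.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_no: int
    protocol: str   # "tcp" or "-1" (all protocols)
    from_port: int
    to_port: int
    cidr: str
    action: str     # "allow" or "deny"


def evaluate(rules, source_ip, port, protocol="tcp"):
    """Return the action of the lowest-numbered rule that matches."""
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.rule_no):
        in_cidr = src in ipaddress.ip_network(rule.cidr)
        proto_ok = rule.protocol in ("-1", protocol)
        port_ok = rule.protocol == "-1" or rule.from_port <= port <= rule.to_port
        if in_cidr and proto_ok and port_ok:
            return rule.action
    return "deny"  # every NACL ends with an implicit deny


MY_IP = "203.0.113.10/32"  # assumption: stand-in for the "myips" value
ANY = "0.0.0.0/0"          # assumption: value of "any_ipv4_traffic"

INGRESS = [
    Rule(100, "tcp", 22, 22, MY_IP, "allow"),
    Rule(101, "tcp", 22, 22, ANY, "deny"),
    Rule(200, "tcp", 80, 80, ANY, "allow"),
    Rule(300, "tcp", 443, 443, ANY, "allow"),
    Rule(400, "-1", 0, 0, ANY, "allow"),
]

print(evaluate(INGRESS, "203.0.113.10", 22))   # SSH from my IP
print(evaluate(INGRESS, "198.51.100.7", 22))   # SSH from elsewhere
print(evaluate(INGRESS, "198.51.100.7", 6443)) # API server port from elsewhere
```

Under these assumptions, SSH from any other address is stopped by rule 101, while every other port falls through to rule 400 and is allowed at the NACL level. The security groups are therefore what restricts the Kubernetes ports to the VPC range.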

master.sh script for the master node:

    #!/bin/bash

    # disable swap
    sudo swapoff -a
    sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

    # update kernel params
    cat <<EOF | sudo tee …
    overlay
    br_netfilter
    EOF
    sudo modprobe overlay
    sudo modprobe br_netfilter
    cat <<EOF | sudo tee …
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF
    sudo sysctl --system

    # install container runtime
    # install containerd 1.7.27
    curl -LO https://github.com/containerd/containerd/releases/download/v1.7.27/containerd-1.7.27-linux-amd64.tar.gz
    sudo tar Cxzvf /usr/local containerd-1.7.27-linux-amd64.tar.gz
    curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service
    sudo mkdir -p /usr/local/lib/systemd/system/
    sudo mv containerd.service /usr/local/lib/systemd/system/
    sudo mkdir -p /etc/containerd
    sudo containerd config default | sudo tee /etc/containerd/config.toml
    sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
    sudo systemctl daemon-reload
    sudo systemctl enable containerd --now

    # install runc
    curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64
    sudo install -m 755 runc.amd64 /usr/local/sbin/runc

    # install cni plugins
    curl -LO https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz
    sudo mkdir -p /opt/cni/bin
    sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

    # install kubeadm, kubelet, kubectl
    sudo apt-get update -y
    sudo apt-get install -y apt-transport-https ca-certificates curl gpg
    curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
    echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
    sudo apt-get update -y
    sudo apt-get install -y kubelet kubeadm kubectl
    sudo apt-mark hold kubelet kubeadm kubectl
    kubeadm version
    kubelet --version
    kubectl version --client

    # configure crictl to work with containerd
    sudo crictl config runtime-endpoint unix:///run/containerd/containerd.sock
    sudo chmod 777 -R /var/run/containerd/

    # initialize the control plane node using kubeadm
    sudo kubeadm init --pod-network-cidr=192.168.0.0/16 --apiserver-advertise-address=77.132.100.111 --node-name master
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    sudo chmod 777 .kube/
    sudo chmod 777 -R /etc/kubernetes/
    export KUBECONFIG=/etc/kubernetes/admin.conf

    # install cni calico
    kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.29.2/manifests/tigera-operator.yaml
    curl -o custom-resources.yaml https://raw.githubusercontent.com/projectcalico/calico/v3.29.2/manifests/custom-resources.yaml
    kubectl apply -f custom-resources.yaml

Notice: kubeadm prints the following join command after the installation finishes. Use it on the worker nodes to join the cluster.

    sudo kubeadm join 77.132.100.111:6443 --token uwic8i.4btsato9aj46v7pz \
        --discovery-token-ca-cert-hash sha256:084b7c1384239e35a6d18d5b6034cfc621193a2d4dd206fa3dfc405dd6976335

If you lose the token, you can create a new one that the worker nodes can use:

    sudo kubeadm token create --print-join-command >> join-master.sh
    sudo chmod +x join-master.sh

worker1.sh script for worker node 1:

    #!/bin/bash

    # disable swap
    sudo swapoff -a
    sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

    # update kernel params
    cat <<EOF | sudo tee …
    overlay
    br_netfilter
    EOF
    sudo modprobe overlay
    sudo modprobe br_netfilter
    cat <<EOF | sudo tee …
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF
    sudo sysctl --system

    # install container runtime
    # install containerd 1.7.27
    curl -LO https://github.com/containerd/containerd/releases/download/v1.7.27/containerd-1.7.27-linux-amd64.tar.gz
    sudo tar Cxzvf /usr/local containerd-1.7.27-linux-amd64.tar.gz
    curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service
    sudo mkdir -p /usr/local/lib/systemd/system/
    sudo mv containerd.service /usr/local/lib/systemd/system/
    sudo mkdir -p /etc/containerd
    sudo containerd config default | sudo tee /etc/containerd/config.toml
    sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
    sudo systemctl daemon-reload
    sudo systemctl enable containerd --now

    # install runc
    curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64
    sudo install -m 755 runc.amd64 /usr/local/sbin/runc

    # install cni plugins
    curl -LO https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz
    sudo mkdir -p /opt/cni/bin
    sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

    # install kubeadm, kubelet, kubectl
    sudo apt-get update -y
    sudo apt-get install -y apt-transport-https ca-certificates curl gpg
    curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
    echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
    sudo apt-get update -y
    sudo apt-get install -y kubelet kubeadm kubectl
    sudo apt-mark hold kubelet kubeadm kubectl
    kubeadm version
    kubelet --version
    kubectl version --client

    # configure crictl to work with containerd
    sudo crictl config runtime-endpoint unix:///run/containerd/containerd.sock
    sudo chmod 777 -R /var/run/containerd/

    sudo hostname worker1
    mkdir -p $HOME/.kube
    # admin.conf must first be copied over from the control plane node (see the kubeconfig note)
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    sudo chmod 777 .kube/
    sudo chmod 777 -R /etc/kubernetes/

    sudo kubeadm join 77.132.100.111:6443 --token uwic8i.4btsato9aj46v7pz \
        --discovery-token-ca-cert-hash sha256:084b7c1384239e35a6d18d5b6034cfc621193a2d4dd206fa3dfc405dd6976335

worker2.sh script for worker node 2:

    #!/bin/bash

    # disable swap
    sudo swapoff -a
    sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

    # update kernel params
    cat <<EOF | sudo tee …
    overlay
    br_netfilter
    EOF
    sudo modprobe overlay
    sudo modprobe br_netfilter
    cat <<EOF | sudo tee …
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF
    sudo sysctl --system

    # install container runtime
    # install containerd 1.7.27
    curl -LO https://github.com/containerd/containerd/releases/download/v1.7.27/containerd-1.7.27-linux-amd64.tar.gz
    sudo tar Cxzvf /usr/local containerd-1.7.27-linux-amd64.tar.gz
    curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service
    sudo mkdir -p /usr/local/lib/systemd/system/
    sudo mv containerd.service /usr/local/lib/systemd/system/
    sudo mkdir -p /etc/containerd
    sudo containerd config default | sudo tee /etc/containerd/config.toml
    sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
    sudo systemctl daemon-reload
    sudo systemctl enable containerd --now

    # install runc
    curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64
    sudo install -m 755 runc.amd64 /usr/local/sbin/runc

    # install cni plugins
    curl -LO https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz
    sudo mkdir -p /opt/cni/bin
    sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

    # install kubeadm, kubelet, kubectl
    sudo apt-get update -y
    sudo apt-get install -y apt-transport-https ca-certificates curl gpg
    curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
    echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
    sudo apt-get update -y
    sudo apt-get install -y kubelet kubeadm kubectl
    sudo apt-mark hold kubelet kubeadm kubectl
    kubeadm version
    kubelet --version
    kubectl version --client

    # configure crictl to work with containerd
    sudo crictl config runtime-endpoint unix:///run/containerd/containerd.sock
    sudo chmod 777 -R /var/run/containerd/

    sudo hostname worker2
    mkdir -p $HOME/.kube
    # admin.conf must first be copied over from the control plane node (see the kubeconfig note)
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    sudo chmod 777 .kube/
    sudo chmod 777 -R /etc/kubernetes/

    sudo kubeadm join 77.132.100.111:6443 --token uwic8i.4btsato9aj46v7pz \
        --discovery-token-ca-cert-hash sha256:084b7c1384239e35a6d18d5b6034cfc621193a2d4dd206fa3dfc405dd6976335

Create an alias for the kubectl command on all nodes. Example:

    echo "alias k=kubectl" >> ~/.bashrc

After finishing the setup of the Kubernetes cluster and the worker nodes:

Create deployment nginx1 with image nginx:1.27.3, replicas=4 and port=8000:

    k create deployment nginx1 --image=nginx:1.27.3 --replicas=4 --port=8000

Expose deployment nginx1 with type=NodePort, port=80, target-port=8000 and name=nginx-svc:

    k expose deployment/nginx1 --type=NodePort --port=80 --target-port=8000 --name=nginx-svc
    k get pods
    k get svc
    k get deploy
    k describe pod pod-name
    k describe deploy deployment-name
    k describe svc service-name

Install etcdctl on the master node:

    sudo apt install -y etcd-client

Set ETCDCTL_API=3 so you can use the etcdctl command:

    export ETCDCTL_API=3
    type etcdctl
    cd /etc/kubernetes/manifests
    cat etcd.yaml

In etcd.yaml, notice --data-dir, --trusted-ca-file, --cert-file and --key-file. They supply the values for the etcdctl options --cacert, --cert and --key.

The default data directory for etcd is /var/lib/etcd.

Back up all data into /opt/etcd-backup.db:

    sudo etcdctl --endpoints= \
        --cacert= \
        --cert= \
        --key= \
        snapshot save /opt/etcd-backup.db

Use nano or vim to change --data-dir to the new path /var/lib/etcd-backup-and-restore in etcd.yaml, along with the volume mountPath.

Restart all components by moving the static pod manifests (kube-apiserver.yaml, kube-scheduler.yaml, kube-controller-manager.yaml, etcd.yaml) out of /etc/kubernetes/manifests and back:

    mv *.yaml /tmp
    sudo mv /tmp/*.yaml .

It is also recommended to reload the daemons and restart the kubelet:

    sudo systemctl daemon-reload && sudo systemctl restart kubelet

Check the etcd-master pod:

    k describe pod etcd-master -n kube-system

Example for etcdctl:

    sudo etcdctl --endpoints=https://127.0.0.1:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/server.crt \
        --key=/etc/kubernetes/pki/etcd/server.key \
        snapshot save /opt/etcd-backup.db

etcdctl snapshot restore:

    sudo etcdctl --endpoints=https://127.0.0.1:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/server.crt \
        --key=/etc/kubernetes/pki/etcd/server.key \
        snapshot restore /opt/etcd-backup.db \
        --data-dir /var/lib/etcd-backup-and-restore

Output table of etcd-backup.db from etcdctl:

    sudo etcdctl --write-out=table --endpoints=https://127.0.0.1:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/server.crt \
        --key=/etc/kubernetes/pki/etcd/server.key \
        snapshot status /opt/etcd-backup.db
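The save and status commands share the same endpoint and TLS flags, so they are easy to wrap for a scheduled backup. Below is a minimal sketch, assuming the kubeadm default certificate paths used in the commands above and an `etcdctl` binary on the PATH; `etcdctl_cmd` and `backup_and_verify` are hypothetical helper names, not part of any etcd tooling.

```python
import shlex
import subprocess

# Endpoint and certificate paths as used in the etcdctl commands above.
ETCD_FLAGS = [
    "--endpoints=https://127.0.0.1:2379",
    "--cacert=/etc/kubernetes/pki/etcd/ca.crt",
    "--cert=/etc/kubernetes/pki/etcd/server.crt",
    "--key=/etc/kubernetes/pki/etcd/server.key",
]


def etcdctl_cmd(*args):
    """Build the argument list for an etcdctl call with the TLS flags."""
    return ["sudo", "etcdctl", *ETCD_FLAGS, *args]


def backup_and_verify(snapshot_path, run=subprocess.run):
    """Save a snapshot, then print its status table; raises if a step fails."""
    for cmd in (
        etcdctl_cmd("snapshot", "save", snapshot_path),
        etcdctl_cmd("--write-out=table", "snapshot", "status", snapshot_path),
    ):
        print("+", shlex.join(cmd))  # echo the command before running it
        run(cmd, check=True)
```

Calling `backup_and_verify("/opt/etcd-backup.db")` on the master node runs both steps in order. The `run` parameter exists only so the function can be exercised without a live cluster.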
