This AI app claims it can see what I'm looking at – which it mostly can

Hugging Face debuts AI vision app.

- Hugging Face has launched HuggingSnap, an iOS app that can analyze and describe whatever your iPhone's camera sees.

- The app works offline, never sending data to the cloud.

- HuggingSnap is imperfect but demonstrates what can be done entirely on-device.

Giving eyesight to AI is becoming increasingly common as tools like ChatGPT, Microsoft Copilot, and Google Gemini roll out camera-based vision features. Hugging Face has just dropped its own spin on the idea with a new iOS app called HuggingSnap that looks at the world through your iPhone’s camera and describes what it sees without ever connecting to the cloud.

Think of it like having a personal tour guide who knows how to keep their mouth shut. HuggingSnap runs entirely offline using Hugging Face’s in-house vision model, SmolVLM2, to provide instant object recognition, scene descriptions, text reading, and general observations about your surroundings without sending any of your data off into the internet void.

That offline capability makes HuggingSnap particularly useful where connectivity is spotty. If you’re hiking in the wilderness, traveling abroad without reliable internet, or simply standing in one of those grocery store aisles where cell service mysteriously disappears, having that ability on your phone is a real boon. Plus, the app claims to be highly efficient, meaning it won’t drain your battery the way cloud-based AI models do.

HuggingSnap looks at my world

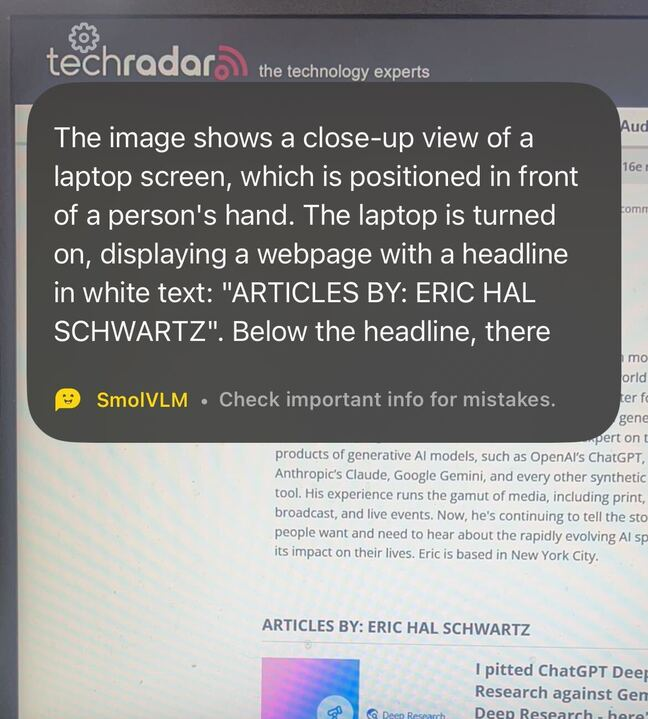

I decided to give the app a whirl. First, I pointed it at my laptop screen while my browser was open to my TechRadar biography. The app initially did a solid job of transcribing the text and explaining what it saw, but it drifted from reality when it reached the headlines and other details around my bio. HuggingSnap thought the references to new computer chips in a headline indicated what's powering my laptop, and seemed to think some of the names in headlines belonged to other people who use my laptop.

I then pointed my camera at my son's playpen full of toys I hadn't cleaned up yet. Again, the AI did a great job with the broad strokes, describing the play area and the toys inside. It got the colors and even the textures right, distinguishing stuffed toys from blocks. It stumbled on some of the details, though. For instance, it called a bear a dog and seemed to think a stacking ring was a ball. Overall, I'd call HuggingSnap's AI great for describing a scene to a friend but not quite good enough for a police report.

See the future

HuggingSnap’s on-device approach sets it apart from your iPhone's built-in abilities. While your iPhone can identify plants, copy text from images, and tell you whether that spider on your wall is the kind that should make you relocate, it almost always has to send some information to the cloud.

HuggingSnap is notable in a world where most apps want to track everything short of your blood type. That said, Apple is heavily investing in on-device AI for its future iPhones. But for now, if you want privacy with your AI vision, HuggingSnap might be perfect for you.