Why Anthropic’s Claude still hasn’t beaten Pokémon

Weeks later, Sonnet's "reasoning" model is struggling with a game designed for children.

In recent months, the AI industry's biggest boosters have started converging on a public expectation that we're on the verge of “artificial general intelligence” (AGI)—virtual agents that can match or surpass "human-level" understanding and performance on most cognitive tasks.

OpenAI is quietly seeding expectations for a "PhD-level" AI agent that could operate autonomously at the level of a "high-income knowledge worker" in the near future. Elon Musk says that "we'll have AI smarter than any one human probably" by the end of 2025. Anthropic CEO Dario Amodei thinks it might take a bit longer but similarly says it's plausible that AI will be "better than humans at almost everything" by the end of 2027.

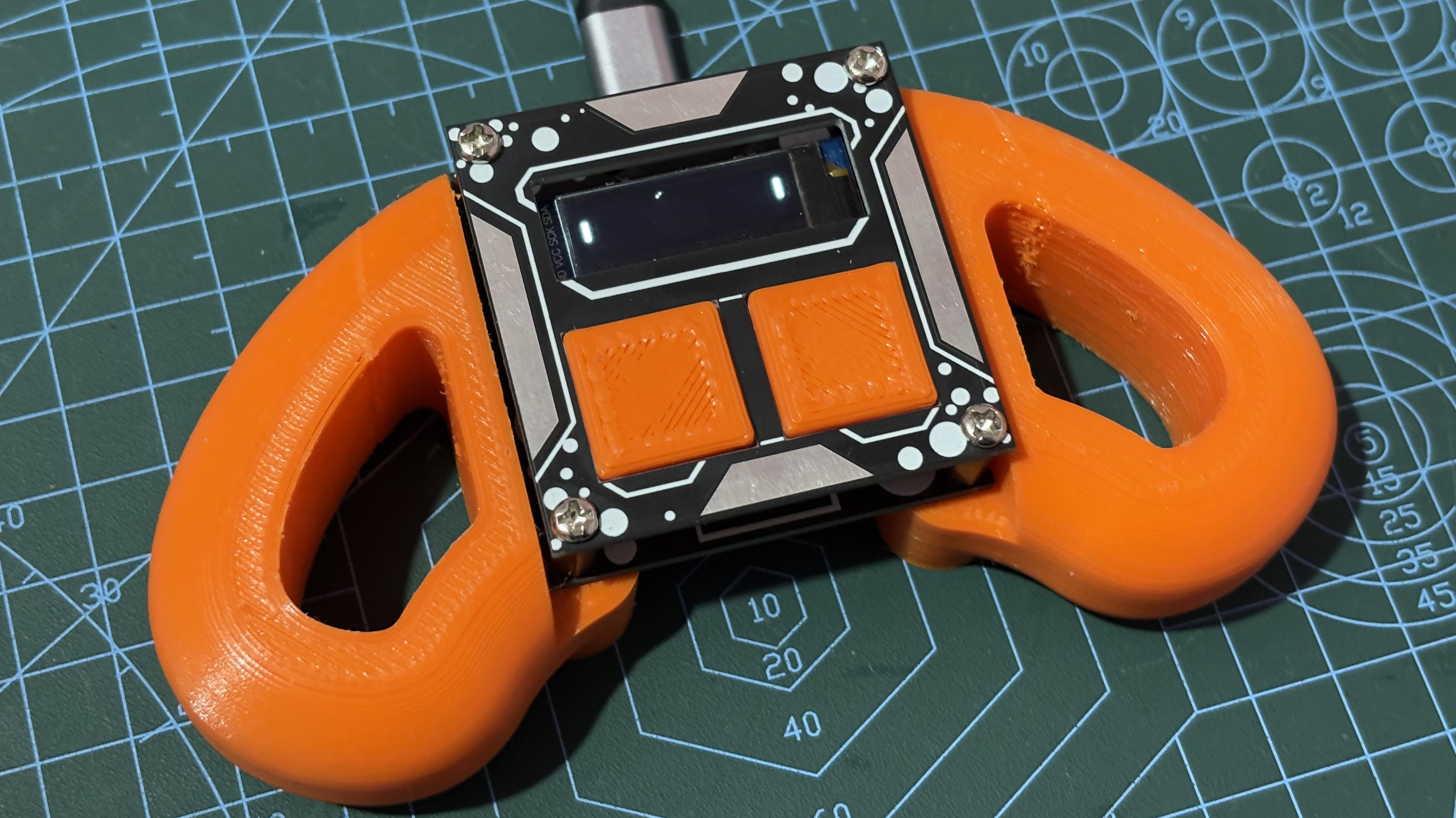

> A few researchers at Anthropic have, over the past year, had a part-time obsession with a peculiar problem.
>
> Can Claude play Pokémon?
>
> A thread: pic.twitter.com/K8SkNXCxYJ
>
> Anthropic (@AnthropicAI), February 25, 2025

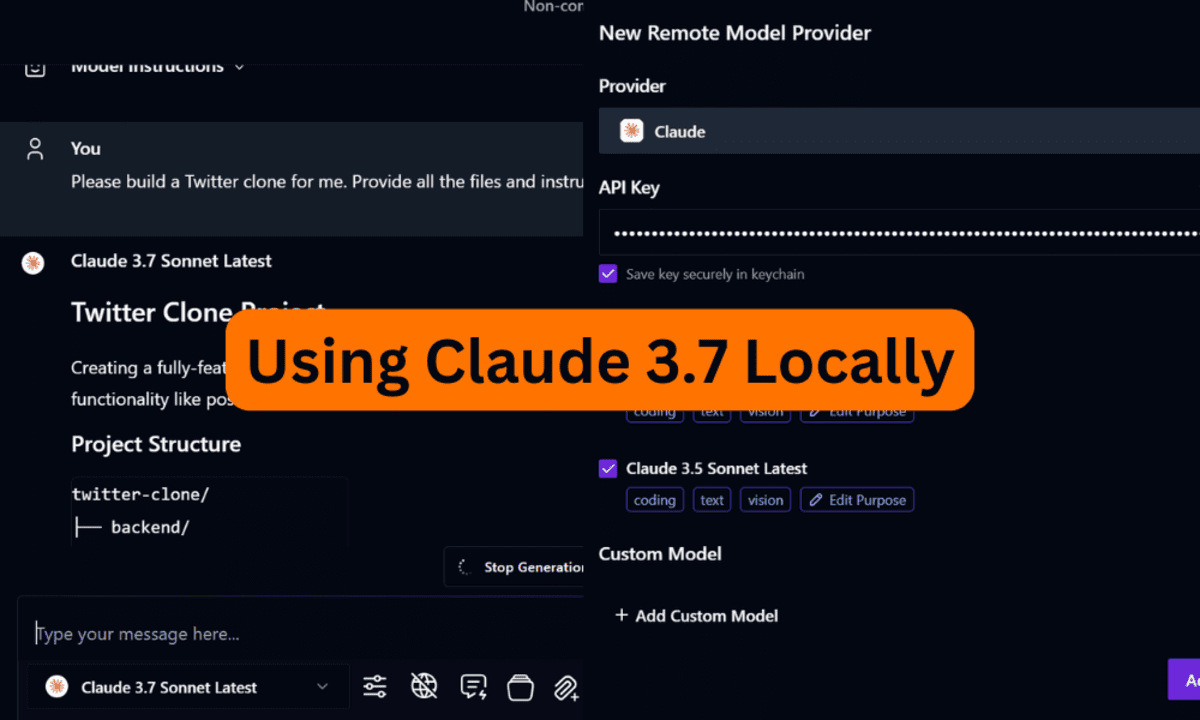

Last month, Anthropic presented its “Claude Plays Pokémon” experiment as a waypoint on the road to that predicted AGI future. It's a project the company said shows "glimmers of AI systems that tackle challenges with increasing competence, not just through training but with generalized reasoning." Anthropic made headlines by trumpeting how Claude 3.7 Sonnet’s "improved reasoning capabilities" let the company's latest model make progress in the popular old-school Game Boy RPG in ways "that older models had little hope of achieving."
