XYZ% of Code is Now Written by AI... Who Cares?

- Microsoft CEO Satya Nadella said that "as much as 30% of the company’s code is now written by artificial intelligence" (Apr 2025).

- Anthropic's CEO made a forecast that "in 12 months, we may be in a world where AI is writing essentially all of the code," (Mar 2025).

- Google's CEO stated that "more than a quarter of code they've been adding was AI-generated" (Oct 2024).

When I see this sort of headline, I often sense that the XYZ figure is meant to read as a software engineer replacement rate: code written by AI is code not written by humans, so we don't need those 30% of humans typing on their keyboards. With the media's focus on sensationalism and competition for the reader's attention, I don't see why they wouldn't optimize for more drama...

While this sort of speculation is curious (how can those CEOs practically measure this sort of metric beyond making guesstimates based on some clues/heuristics?), I don't see much meaning beyond merely evaluating the rates of adoption of AI coding tools...

100% of Code is Generated, 70% of Code is Deleted After Review

Let me give you a recent example. I worked on a small project: a local Python interpreter wrapped as an MCP tool (think Code Interpreter for ChatGPT).

Why even bother, aren't there Python tools already? There are, yet they either execute Python in the local environment, which is dangerous, or rely on Docker or remote environments that take some effort to set up.

The idea was to wrap into an MCP Server the custom-made, sandboxed, local Python interpreter provided with HuggingFace's smolagents library.

After cloning smolagents' repo, investigating the codebase, and creating a small example of isolated use of the interpreter, I instructed Cursor's Agent to create a new MCP Server project. I showed it the example and the interpreter code, and gave it a link to the MCP Server docs by Anthropic. The agent created a complete code base free of linter warnings.

Yet over the next couple of hours, I iterated on the produced code base. I removed most of the files and lines. I used AI actively, both autocompletion and chat, i.e. I typed very little Python myself.

Can I state that 100% of the project was AI-generated? I probably can. Does this imply that:

- I was not needed in the process of building the software and was 100% replaced?

- Or that I got a 300x productivity boost, since as an average human I can type 30 words per minute while SOTA models generate them at ~3000 WPM (~150-200 tokens per second)?

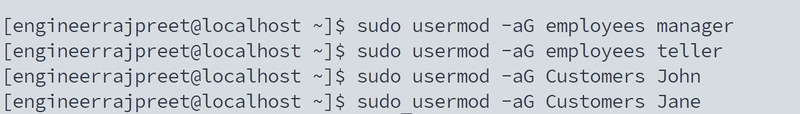

Here are the stats:

- 1st version by Claude 3.7/Cursor Agent: 9 files, 1062 lines, 45 comments, 158 blanks

- Final modified and published version: 4 files, 318 lines, 9 comments, 79 blanks

While iterating on the code base, I spent my brain cycles making sense of what the AI had produced, while also gaining a better understanding of what actually needed to be built - and that takes effort and time. Sometimes writing code is easier than reading it. Besides, writing code (or, better said, making low-level modifications) serves the very important function of helping you learn the code base and giving the task time to sink in and make sense.

In the end, I dropped ~70% of the AI-generated code. Does that tell us much? Does it mean AI code is junk if it had to be thrown away? Generated in minutes, reworked and debugged for hours? I don't think so. Yet the rework percentage isn't that telling a metric on its own, just like the percent-generated metric.
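For the curious, the ~70% figure can be reproduced from the stats listed earlier. The snippet below is just a sketch of that arithmetic; the function and variable names are mine, and it measures net shrinkage only. Since I also changed lines that stayed, the real share of discarded AI output is at least this large.

```python
# Counts taken from the stats above: first AI-generated version vs. published version.
FIRST_VERSION = {"files": 9, "lines": 1062, "comments": 45, "blanks": 158}
FINAL_VERSION = {"files": 4, "lines": 318, "comments": 9, "blanks": 79}


def reduction_percent(before: int, after: int) -> float:
    """Net share of the original count that is gone, in percent."""
    return 100.0 * (before - after) / before


def summarize(first: dict, final: dict) -> dict:
    """Net reduction per metric, rounded to one decimal place."""
    return {key: round(reduction_percent(first[key], final[key]), 1) for key in first}


if __name__ == "__main__":
    for metric, dropped in summarize(FIRST_VERSION, FINAL_VERSION).items():
        print(f"{metric}: {dropped}% removed")
```

Note how the answer depends on what you count: files, lines, comments, and blanks each give a different percentage, which is one more reason not to read too much into any single number.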

One might say that the example is isolated. Creating a small project from scratch is a corner case not met that often in real life. That's true. Yet I think it makes a relevant point and puts some numbers on it. There's the same tendency to remove/rework a lot of generated code when maintaining a large code base. The larger the scope of the task, the more agentic the workflow, and the more lines/files are touched - the more you have to fix. For some reason, even the best AI tools still have a hard time getting the "vibes" of a project and making consistent changes that fit the "spirit" of the code base.

Building Software is not About Writing Code

It's about integrating and shipping code. Did you know that at some point Microsoft had a 3-year release cycle for Windows, where "on average, a release took about three years from inception to completion but only about six to nine months of that time was spent developing “new” code. The rest of the time was spent in integration, testing, alpha and beta periods" 1, 2?

Writing code is one very important part, yet it is not the only one. Did you know that, according to a recent Microsoft study, developers spend just 20% of their time coding/refactoring (that's where the XYZ% AI-generated metric lands)?
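One way to read the 20% figure is through Amdahl's law: if only the coding part of the job gets faster, the overall gain is capped by everything else. The sketch below is my own illustration, not something taken from the study. It assumes the remaining 80% of the work is untouched by AI and that the parts don't overlap; the speedup factors are hypothetical.

```python
def overall_speedup(coding_share: float, coding_speedup: float) -> float:
    """Amdahl's law: speedup of the whole job when only the coding part gets faster."""
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    return 1.0 / ((1.0 - coding_share) + coding_share / coding_speedup)


if __name__ == "__main__":
    CODING_SHARE = 0.20  # share of developer time spent coding, as cited in the text

    # Hypothetical speedups of the coding part alone (illustrative values only).
    for factor in (2, 10, 300):
        gain = overall_speedup(CODING_SHARE, factor)
        print(f"coding {factor}x faster -> overall {gain:.2f}x")

    # Ceiling: even if coding took no time at all, the other 80% remains.
    print(f"ceiling: {1.0 / (1.0 - CODING_SHARE):.2f}x")
```

Under these assumptions the ceiling is 1.25x no matter how fast the code gets generated, which is a long way from the multipliers the headlines suggest.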

Working with teams and customers to build software, I see many areas where AI can barely help.

What if your stakeholders become unresponsive, play internal politics, and can't make up their minds about the requirements? Will ChatGPT (or some fancy "agent") chase the client, flesh out all the contradictions in the requirements (7 green lines, 1 must be transparent), communicate with the whole team, and mitigate any of the core risks?

Even if you have what seem to be refined requirements... How much time will it take for every individual team member to grasp the "thing" he or she is trying to achieve? How much time will it take for the team to reach internal consensus on how to organize around the goal, break down the scope, and bridge business requirements to implementation details? Will Gen-AI tools accelerate team dynamics, leapfrogging from forming and storming to norming and performing in days, not weeks?

I see it all the time: people are slow thinkers, there are natural constraints on how much info our brain can process, how many social connections we can build and maintain, etc.

Given the current state and trajectory of AI tools in software development, I see them as isolated productivity tools where the human is the bottleneck. There's little progress toward AI agents filling all the gaps that human work covers. Even at a higher level of AI autonomy, people would still need time to make up their minds, evolve their perspectives, talk, and agree.

Productivity

Ultimately, businesses want more work done with less effort/money. Adopt AI in dev teams and cut costs/headcount by some magic number (for some reason it's always 20-30%) - it doesn't seem to work that way. There's no definitive demonstration of step changes in developer productivity across the industry. I like these 2 examples, studies into developer productivity with AI from last autumn:
